TI’s Wireless Business Unit (WBU) was created in the mid-’90s to structure TI’s wireless handset chip business with customers like Nokia, Ericsson or Alcatel. I took a close look at the WBU results: revenue grew quickly from $1B in 2000 to about $5B in 2005… before finally falling by about 70%, down to $1.3B in 2012.

Let me make it clear: TI is a great company, one that enabled the modern semiconductor (SC) industry as we know it today, built on the integrated circuit (invented by Jack Kilby in 1958). TI is also a company I had the great opportunity to work for. It turned a pure ASIC designer like me into a business-oriented engineer, giving me MBA-level training, an exciting work environment and great colleagues. Today, I will simply use this market-oriented education to try to understand what the mistake was. Mistakes were undoubtedly made, and precisely understanding their nature could help avoid repeating them.

In the early ’90s, TI’s top customer shifted from IBM (DRAM and commodity parts for PCs) to Ericsson, Cisco or Alcatel. Put another way, the shift was from the PC to the communication segment, or from a commodity business (DRAM, TTL…) to the so-called Application Specific Product business (ASIC, DSP…). TI was the DSP leader, with families like the TMS320C50, which were essential to support the new digital signal processing techniques used in modern communication. TI was lucky enough to have customers like Alcatel, able to precisely specify the ideal DSP core for baseband processing of the emerging digital wireless standard, GSM. TI management was clever enough to agree to develop LEAD, a DSP core designed to be integrated using ASIC technology, along with a CPU core from a young UK-based company, ARM Ltd., into a single chip: what we know today as a System-on-Chip (SoC). This SoC supported the complex GSM algorithms (baseband processing) and the related wireless handset applications, for example in this Ericsson handset model from 1998:

In 1995, all the pieces were already in place: technology (ASIC), IP cores (LEAD DSP and ARM7 CPU) and customers (Ericsson, Nokia, Alcatel and more). By the way, at that time, TI’s Dallas-based upper management was not aware that the European DSP marketing team based in Nice (south of France), headed by Gilles Delfassy and including Edgar Auslander and Christian Dupont, had started deploying this wireless strategy! This team was clever enough to wait until the market had clearly emerged and the business figures had become significant before asking for massive funding. They literally behaved as if they were running a start-up, except that this start-up was nested inside a $6B company!

Gilles Delfassy: President at Delfassy Consulting, Founder and GM (retired 2007) of Texas Instruments Wireless Business

Edgar Auslander: VP, Qualcomm, responsible for QMC (Qualcomm Mobile and Computing) product roadmap

Louis Tannyeres: CTO (TI Senior Fellow) at Texas Instruments

Note: the titles above are their current LinkedIn titles. A couple of years ago, Gilles Delfassy was CEO, Edgar Auslander VP Planning Strategy and Louis Tannyeres SoC Chief Architect, all of them at ST-Ericsson.

The WBU was officially created in 1998, with revenue of a couple of hundred million dollars. The strategy was good, the market was exploding, and the design team was growing quickly, with people like Louis Tannyeres joining as technical guru (Louis is a TI Senior Fellow). This allowed TI to support as many customers as it could handle, including Motorola (a historical TI competitor) in 1999, so it was no surprise that the WBU reached the $1B mark in 2000. At that time, TI was offering a very complete solution: digital baseband modem and application processing, plus several companion chips (RF, power management, audio…), but let’s concentrate on the first two. TI was the first company to introduce a wireless-dedicated SoC platform, OMAP, in 2002. Note that this was possible because TI could offer both the digital baseband modem and the application processor, integrated into a single chip…

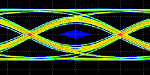

Again, this was a winning strategy, which explains why the WBU grew from $1B in 2000 to $4.4B in 2005. In the meantime, CDMA had emerged and was competing with GSM, as precisely described in this brilliant post from Paul McLellan. Every company developing a CDMA-compliant modem chip had to pay license fees to Qualcomm, which was also, necessarily, a competitor of that chip maker. Not a very comfortable position, but CDMA was opening up most of the US wireless market, so the choice was between getting almost no revenue in the US (and a few other countries)… or negotiating with Qualcomm. The picture below, showing the CDMA royalties Samsung had to pay to Qualcomm during 1995-2005, clearly indicates that the CDMA royalty level is far from negligible!

The 2005-2006 period was the apogee of TI’s WBU. In 2006, Qualcomm’s QCT revenue (the equivalent of the WBU: chip business only, excluding licensing) was $4.33B, while TI’s WBU was above $5B. TI was already selling its OMAP platform into the wireless market, while Gobi and Snapdragon were still two years away from launch. TI was still the leader, even if it was clear that Qualcomm was getting stronger year after year. The only smartphone available on the market was from Nokia, and the iPhone had not yet been launched. Then, in 2007, two consecutive events happened: TI decided to stop developing baseband modems, and Gilles Delfassy retired…

If I remember correctly, the “official” reason for stopping new modem development was that “the digital baseband modem was becoming a commodity product”. A commodity is a product where the only possible differentiation is price, on high-volume sales. Maybe Samsung, still struggling to launch an efficient 3G modem, would like to comment on the commodity nature of the digital baseband modem? In fact, the real reason was that TI was unable to release a working 3G modem that fully met the specification.

This sounds like La Fontaine’s fable “Le Renard et les Raisins” (The Fox and the Grapes). In the fable, a fox tries to catch some grapes. Unfortunately, the grapes hang too high for him, so he fails to reach (and eat) them. Very disappointed, but proud, he says: “Ils sont trop verts, et bons pour des goujats”, which means “these grapes are not ripe, and only good for boors”. Just like TI upper management saying that “the 3G modem IC is a commodity market that we prefer not to attack”…

Moreover, even such a customer may hesitate before choosing TI when the competition (Qualcomm, Broadcom, STM) can propose a roadmap where the next generation is based on a cheaper solution: a single chip integrating the AP and the modem. Even more dramatic, TI took this decision in 2007, which, if you remember, was precisely the year Apple launched the first iPhone, creating the smartphone market segment. Smartphone shipments reached a record in 2012, with more than 700 million units shipped. But TI had decided to concentrate on the analog business rather than on the wireless handset segment. Who said that analog was a commodity business? I remember now: it was during one of the marketing trainings I took during my TI days…

By the way, any idea what TI’s WBU revenue was in 2012? It was $1.36B, or less than 30% of its 2006 revenue. But this trend could already have been projected back in 2007, when TI decided to stop developing digital modems for the wireless market, wasting more than 10 years of R&D and business development effort.

By Eric Esteve from IPNEST