As seems to be traditional, Wally Rhines gave a keynote at the GlobalPress Electronics Summit here in sunny Santa Cruz. It was entitled “Embedded Software, the Next Revolution in EDA.” Unlike Cadence and Synopsys, Mentor has a strong position in embedded software. It has been built up over a long time through a series of acquisitions (plus lots of internal development, of course).

First, they acquired Microtec in 1998 and got the XRAY debugger and the VRTX RTOS. Next, in 2002, they acquired Accelerated Technology (ATI) along with the Nucleus operating system (the volume leader, if not the revenue leader, at the time, since it was royalty free). A couple of years later they acquired Embedded Alley, with its expertise in Android and Linux. In 2010 they acquired CodeSourcery, a major player in open source tools and services. And just recently they acquired MontaVista automotive from Cavium (who acquired all of MontaVista back in 2010).

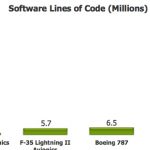

The growth in software, both in the number of lines of code in a typical SoC or embedded system (think of a car or a smartphone) and in the number of engineers involved, is incredible. And it is only going to get worse, so if the problem can be cracked it is a huge opportunity.

Of course, the holy grail in EDA is to get the hardware people and the software people onto some sort of common infrastructure, since the chip and the software and the IP are now so intertwined. Mentor tried this early on with their Seamless product. But despite having designed the product for the software developer, only hardware developers bought it. So instead of being used for software development, designers were using it to stimulate their design using software. Software people didn’t use it for a couple of reasons. Firstly, it cost $50,000. To a design group, that is a reasonable cost for an EDA tool. For a software group, even $1,000 is too much. Secondly, it didn’t give the software people what they needed, namely to live in the environment that they were used to and do things the way they were used to.

So gradually it dawned on everyone that the grand unified field theory of EDA wasn’t going to work. What was actually required was to let software developers live in their favorite environment and deliver them what limited information they needed in terms they could understand (which means not talking about SystemC TLMs or Verilog), and correspondingly give the hardware designers whatever software information they needed in terms they could understand.

CodeSourcery is widely used. It is the preferred toolchain for leading-edge semiconductor companies. It is downloaded 15,000 times…every month. Last year it was downloaded 150,000 times and there were 300 releases. The scale of a large open source project is incredible when you are used to EDA volumes (but correspondingly the price point, namely free, is disappointing when you are used to EDA prices).

I asked Wally if they actually made money on the software part of the business. He emphasized that the embedded division is profitable and that it is the fastest growing part of Mentor. Their CodeSourcery service business, which tailors the open source code for specific companies, is the most profitable service business in the company, with margins above emulators and nearly as high as pure software. Wow, that’s pretty impressive for software that anyone can “just” download and alter themselves.

I believe that this is a rough preview of Wally’s keynote at U2U next week. The detailed agenda for U2U is here. Details about attending, including a link to register, are here. The conference is free and includes a free lunch (so there is such a thing).