That’s too bad, as you have missed the latest news about the Hybrid Memory Cube (presentation by Micron), the Wide I/O 2 standard, and other standards such as LPDDR4, eMMC 5.0, and LRDIMM. The good news is that you can find all these presentations on the MemCon proceedings web site.

I first had a look at Richard Goering’s excellent blog on Wide I/O and the Memory Cube, and then at the HMC presentation given by Mike Black from Micron. HMC is an amazing technology, and the comparison table shown by Micron helps explain why:

- Channel complexity: HMC is 90% simpler than DDR3, using 70 pins instead of… 715 pins.

- Board Footprint: HMC board space occupies 378 mm² instead of 8,250 mm² for DDR3!

- Energy efficiency is 75% better

- Bandwidth: HMC delivers 857 MB/pin compared with 18 MB/pin for DDR3 and 29 MB/pin for DDR4

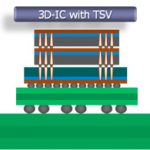
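As a quick sanity check on the figures quoted above, the ratios can be recomputed directly. This is only a sketch using the numbers as given in the article; the variable and function names are my own, not Micron's.

```python
# Figures as quoted in the article from Micron's comparison table.
DDR3_PINS, HMC_PINS = 715, 70
DDR3_AREA_MM2, HMC_AREA_MM2 = 8250, 378
BW_PER_PIN_MB = {"DDR3": 18, "DDR4": 29, "HMC": 857}


def reduction_pct(old, new):
    """Percentage by which `new` is smaller than `old`."""
    return 100.0 * (old - new) / old


pin_reduction = reduction_pct(DDR3_PINS, HMC_PINS)
area_reduction = reduction_pct(DDR3_AREA_MM2, HMC_AREA_MM2)
hmc_vs_ddr3 = BW_PER_PIN_MB["HMC"] / BW_PER_PIN_MB["DDR3"]
hmc_vs_ddr4 = BW_PER_PIN_MB["HMC"] / BW_PER_PIN_MB["DDR4"]

print(f"Pins:      {pin_reduction:.1f}% fewer than DDR3")       # 90.2%
print(f"Footprint: {area_reduction:.1f}% smaller than DDR3")    # 95.4%
print(f"Bandwidth per pin: {hmc_vs_ddr3:.1f}x DDR3, {hmc_vs_ddr4:.1f}x DDR4")  # 47.6x, 29.6x
```

The pin count works out to roughly 90% fewer, which matches the "90% simpler" claim, and the board footprint shrinks by about 95%.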

What is the secret sauce behind such amazing performance? Once again, it’s because the protocol uses a very high speed, SerDes-based serial link instead of parallel data transfer, like PCI Express instead of PCI, SATA instead of PATA, and so on. Except that the link is defined at lane rates between 15 Gbps and 28 Gbps! At the top rate, that is more than 3X the bandwidth per lane of PCIe gen-3 (8 Gbps) and more than 4X that of SATA III (6 Gbps). To be honest, I am not completely surprised to see the emergence of such a high speed serial link protocol for DRAM. Rather, I think that the memory semiconductor industry has been late compared with the rest of the industry: PCI Express and SATA were defined in the early 2000s, and Ethernet protocols are even older. Nevertheless, I completely applaud, as HMC is expected to be a real revolution in many segments of the electronics industry, like computing, networking or servers. By the way, don’t expect your smartphone or tablet to be HMC equipped… the 3D-IC form factor will prevent these devices from using HMC, see picture below:

The HMC typically includes a high-speed control logic layer below a vertical stack of four or eight TSV-bonded DRAM dies. The DRAM handles data only, while the logic layer handles all control within the HMC. In the example configuration shown at right, each DRAM die is divided into 16 cores and then stacked. The logic die is on the bottom and has 16 different logic segments, with each segment controlling the DRAMs that sit on top. The architecture uses “vaults” instead of memory arrays (you could think of these as channels).
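To make the vault idea concrete, here is a minimal sketch of the example configuration described above. It assumes that each of the 16 logic segments controls the same-numbered core on every DRAM die directly above it. The names `Vault`, `build_cube` and `dram_cores` are hypothetical and do not come from the HMC specification.

```python
from dataclasses import dataclass
from typing import List, Tuple

VAULTS_PER_CUBE = 16  # the article's example: 16 cores per die, 16 logic segments


@dataclass(frozen=True)
class Vault:
    """One logic segment plus the DRAM cores stacked directly above it."""
    index: int
    dram_cores: Tuple[Tuple[int, int], ...]  # (die, core) pairs, bottom die first


def build_cube(num_dram_dies: int) -> List[Vault]:
    """Group the cores of a 4- or 8-die stack into 16 vertical vaults."""
    if num_dram_dies not in (4, 8):
        raise ValueError("the article describes stacks of four or eight DRAM dies")
    # Assumption: core v on every die belongs to vault v (a simple vertical slice).
    return [
        Vault(index=v, dram_cores=tuple((die, v) for die in range(num_dram_dies)))
        for v in range(VAULTS_PER_CUBE)
    ]


cube = build_cube(4)
print(len(cube))           # number of vaults in the cube
print(cube[5].dram_cores)  # the cores controlled by logic segment 5
```

In this model a vault is a vertical slice through the stack, which is why it behaves more like an independent channel than like a conventional memory array.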

The HMC was originally designed by Micron and is now under development by the Hybrid Memory Cube Consortium (HMCC), which is currently offering its 1.0 specification for public download and review. The HMCC includes eight “developer members” – Altera, ARM, IBM, Micron, Open-Silicon, Samsung, SK-Hynix, and Xilinx – and many “adopter members” including Cadence. I will not reproduce the adopter list, as it is too long to fit here: more than 110 companies are part of the consortium so far!

In addition to Wide I/O 2 and HMC, Cadence is announcing memory model support for these emerging standards:

LPDDR4 – Promises 2X the bandwidth of LPDDR3 at similar power and cost points. Lower page size and multiple channels reduce power. This JEDEC standard is in balloting, and mass production is expected in 2014.

eMMC 5.0 – Embedded storage solution with an MMC (MultiMedia Card) interface. eMMC 5.0 offers more performance at the same cost as eMMC 4.5. Samsung announced the industry’s first eMMC 5.0 chips on July 27, 2013.

LRDIMM – Supports DDR4 LRDIMMs (load reduced DIMMs) and RDIMMs. This standard is mostly used in computers, especially servers.

Cadence memory models support all leading simulators, verification languages, and methodologies. “We’re involved early on in the standards development,” Jacobson noted. “We are out there developing third-party models early. We work closely with vendors to get the models certified. If you’re looking for a third-party solution for memory models, that’s what we do.”

I have extracted a very interesting picture from Martin Lund’s introduction, as it can help analysts understand the adoption behavior of new memory standards, and it also shows that the “old” standards (DDR1 or DDR2) are not vanishing so fast. Be careful, this is a log scale!

One last point: IPNEST is taking a close look at the Verification IP market these days, and I had a look at the various memory standards supported by Cadence, or the associated memory models that the company provides… that’s also a pretty long list, as you can see:

Eric Esteve from IPNEST
