At the ITC test conference in early September, Mentor made three announcements. ITC is a big event for Mentor's test group and is where they usually roll out their new tools and capabilities. The indefatigable Steve Pateras was captured on film describing them.

I've summarized Mentor's three announcements below and added links to resources.

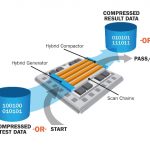

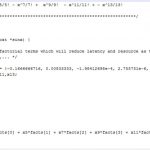

Hybrid compression ATPG/LBIST is useful for testing high-reliability and safety-critical ICs, for instance in automotive and medical applications. This newer technology re-uses the same scan, clocking, and other test logic to apply both deterministic (ATPG) and random (LBIST) test patterns. See the new whitepaper "Improve Logic Test with a Hybrid ATPG/BIST Solution" (registration required). Mentor announced that Renesas adopted its hybrid TK/LBIST solution to satisfy the in-system test requirements mandated by ISO 26262, the automotive functional safety standard.

An IJTAG (P1687) ecosystem. IJTAG is the spiffy new standard for controlling and testing IP, and it is billed as a true plug-and-play IP integration standard. Last year, Mentor announced its Tessent IJTAG tool, which automates support for the standard. See the Tessent IJTAG datasheet and the whitepaper "Automated Test Creation for Mixed Signal IP using IJTAG." The announcement was that Mentor worked with Asset Intertech to ensure interoperability between Tessent IJTAG and Asset's ScanWorks IJTAG solution, allowing engineers to access the operational and diagnostic features of all IP blocks in the design from a top-level interface. This greatly simplifies the job of integrating the hundreds of IP blocks in a typical system, and it represents a large step toward an IJTAG ecosystem spanning EDA vendors, IP providers, and hardware/software debug tools.

Mentor's transistor-level ATPG, which they call Cell-Aware test, has a lot of published industry data showing improved test quality for very-low-DPM applications, including automotive, medical, and aerospace; really, anyone who wants fewer failing devices making it to the field. They have a great web seminar on Cell-Aware test (registration required) and a Cell-Aware Test datasheet. The announcement is that Open-Silicon is using Cell-Aware test to meet demand for very low DPM by detecting defects within cells.

More articles by Beth Martin…