I was the VP marketing at VaST Systems Technology and then at Virtutech. Both companies sold virtual platform technology which consisted of two parts:

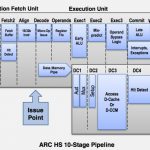

- an extremely fast processor emulation technology that worked by binary translation of the target code (e.g. ARM) into the native instruction set of the host on which the emulation was running (usually an x86 workstation of some sort). This was done dynamically as the code executed, in the same way that JIT compilers for Java (and other bytecodes) work.

- a modeling technology for other devices in the system (or on the chip if it was an SoC design) along with libraries of pre-existing models
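To make the binary translation idea concrete, here is a minimal sketch in Python. It is not how VaST or Virtutech implemented anything: the three-field toy instruction set, the `translate_block` and `run` functions, and the use of generated Python source as a stand-in for native x86 code are all assumptions made for illustration. What it does show is the essential trick: translate a block of target code once, cache the result, and reuse it every time that block runs again.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy "target" ISA: (opcode, operand_a, operand_b).
Instr = Tuple[str, int, int]

PROGRAM: List[Instr] = [
    ("movi", 0, 0),  # 0: r0 = 0 (accumulator)
    ("movi", 1, 5),  # 1: r1 = 5 (loop counter)
    ("add", 0, 1),   # 2: r0 += r1
    ("subi", 1, 1),  # 3: r1 -= 1
    ("bnez", 1, 2),  # 4: if r1 != 0, jump to instruction 2
    ("halt", 0, 0),  # 5: stop
]


def translate_block(program: List[Instr], pc: int) -> Callable[[List[int]], int]:
    """Translate target code starting at pc, up to the next branch or halt,
    into a host function. The function mutates regs and returns the next pc
    (-1 means halt). Python source stands in for native host code here."""
    lines = ["def block(regs):"]
    while True:
        op, a, b = program[pc]
        if op == "movi":
            lines.append(f"    regs[{a}] = {b}")
        elif op == "add":
            lines.append(f"    regs[{a}] += regs[{b}]")
        elif op == "subi":
            lines.append(f"    regs[{a}] -= {b}")
        elif op == "bnez":
            lines.append(f"    return {b} if regs[{a}] != 0 else {pc + 1}")
            break
        elif op == "halt":
            lines.append("    return -1")
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
        pc += 1
    namespace: Dict[str, object] = {}
    exec("\n".join(lines), namespace)  # "compile" the translated block
    return namespace["block"]  # type: ignore[return-value]


def run(program: List[Instr], num_regs: int = 4) -> Tuple[List[int], int]:
    """Execute the program via cached translated blocks.
    Returns the final registers and the number of translations performed."""
    regs = [0] * num_regs
    cache: Dict[int, Callable[[List[int]], int]] = {}
    translations = 0
    pc = 0
    while pc != -1:
        block = cache.get(pc)
        if block is None:  # first visit: translate and remember
            block = translate_block(program, pc)
            cache[pc] = block
            translations += 1
        pc = block(regs)  # later visits reuse the cached translation
    return regs, translations


if __name__ == "__main__":
    final_regs, count = run(PROGRAM)
    print(final_regs, count)
```

The loop body executes five times but is only translated once after the initial pass, which is where the speed comes from in a real translator: hot code runs as host instructions rather than being decoded again on every iteration.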

Both companies were moderately successful at selling their technology, VaST especially in automotive and Virtutech especially in networking and base-stations. VaST ended up being acquired by Synopsys and Virtutech by the Wind River division of Intel.

But the big hurdle to using the technology was the need to create models for all the “other” devices. Everyone loved the processor technology and found its performance unbelievable. But if it took 3 months of the 6 months you were going to save to develop those models, the ROI was a lot less compelling. Plus blocks were always changing, so there was always the problem of ensuring that the models matched the RTL (or whatever representation was being used).

One answer would have been to use emulation and just run the RTL fast. But in the mid-2000s emulators were million-dollar boxes used at only a handful of the biggest semiconductor companies, and certainly not available to embedded software developers. The economics have since changed. By some estimates, emulation is now the cheapest way to do simulation per cycle, beating even Verilog simulators on big server farms, which have been the simulation infrastructure of choice for a long time.

At ARM TechCon last week nVidia were reporting on how they had used Cadence’s hybrid virtual platform and emulation system to bring up their latest Tegra chips.

You might think that, with a big emulator lying around, you would just load the RTL for the ARM processor into the box too. The problem is that real software development requires booting an operating system before you get to run the code you are actually working on. Linux takes 1B instructions to boot, Android takes 20B and Windows RT 50B. Booting Windows would take days on the emulator. Instead, the fast processor models are used, with the rest of the chip loaded in the emulator. Obviously there is some clever glue making all this work cleanly together as the Palladium/VSP Hybrid.

Results? Linux boots in 2 minutes versus 45 minutes using just the emulator. Android in 45 minutes versus hours. Windows RT in 90 minutes versus days. Nobody knows how many days since nobody bothered to try.
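For a rough sense of what those numbers imply, here is a back-of-the-envelope calculation. The instruction counts and boot times are the ones quoted above; the assumption that the emulator alone runs every OS at the same instructions-per-second rate it achieved on Linux is mine, and it is a simplification, so treat the extrapolated figures as estimates rather than measurements. The names `emulator_only_hours` and `hybrid_rate` are just for this sketch.

```python
# Figures quoted in the article.
BOOT_INSTRUCTIONS = {
    "Linux": 1_000_000_000,
    "Android": 20_000_000_000,
    "Windows RT": 50_000_000_000,
}
HYBRID_BOOT_MINUTES = {"Linux": 2, "Android": 45, "Windows RT": 90}
LINUX_EMULATOR_ONLY_MINUTES = 45

# Implied emulator-only throughput, derived from the Linux boot.
EMULATOR_RATE = BOOT_INSTRUCTIONS["Linux"] / (LINUX_EMULATOR_ONLY_MINUTES * 60)


def hybrid_rate(os_name: str) -> float:
    """Implied instructions per second when booting on the hybrid."""
    return BOOT_INSTRUCTIONS[os_name] / (HYBRID_BOOT_MINUTES[os_name] * 60)


def emulator_only_hours(os_name: str) -> float:
    """Estimated emulator-only boot time in hours.
    ASSUMPTION: the emulator sustains the Linux-derived rate for every OS."""
    return BOOT_INSTRUCTIONS[os_name] / EMULATOR_RATE / 3600


if __name__ == "__main__":
    print(f"emulator only: about {EMULATOR_RATE:,.0f} instructions/s")
    for name in BOOT_INSTRUCTIONS:
        print(
            f"{name}: hybrid about {hybrid_rate(name):,.0f} instructions/s, "
            f"emulator-only estimate {emulator_only_hours(name):.1f} hours"
        )
```

Under that assumption the emulator alone manages a few hundred thousand instructions per second, the hybrid several million, and a Windows RT boot on the emulator alone comes out at about a day and a half, which squares with "days".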

Ultimately, though, what is important is whether all this made any difference to the design. Here is nVidia’s experience:

- eliminated reliance on other pre-silicon platforms

- found some software race conditions

- found some memory management bugs

- found some code completeness issues

Then silicon came back from the fab. Of course system bringup requires running the software on the actual silicon:

- this was the smoothest bringup they had done

- software was ready to demo product at Speed-Of-Light (SOL)

- fewer bugs meant they could put more effort into optimizing for power and performance.

The nVidia presentation is on the Cadence website here.