The Internet of Things is on every technology mind these days, but what does it mean for the EDA community? Dennis Brophy of Mentor Graphics says the billions of things we are hearing about will not happen unless we find a way to build a lot more things, efficient things, and connected things. He has more thoughts in our recent interview.


Interface Protocols, USB3, PCI Express, MIPI, DDRn… the winners and losers in 2013

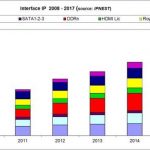

How can you best forecast the adoption of a specific protocol? One option is to look at IP sales. They give a good idea of the number of SoCs or ICs that will offer the feature on the market within the next 12 months. Once again, if you wait for IP sales to peak, it will be too late. You have to monitor the dynamics of IP sales while volumes are still low to make an effective analysis. That analysis can help you take the right decision a little ahead of your competitors and gain a time-to-market advantage. That’s why we will mention the clear winners, which show high market penetration (becoming “de facto” standards in certain market segments). We will also focus on the emerging protocols that show fast-growing penetration.

The above table is extracted from the “Interface IP Survey” version 5, just completed. In short, in this survey you will find:

- IP vendor ranking, protocol by protocol, by IP license revenue, for USB, PCI Express, HDMI, SATA, MIPI, DisplayPort, Ethernet and DDRn memory controller

- Competitive analysis by protocol

- Controller and PHY IP license price (by technology node for PHY)

- Adoption rate and market trends, by protocol

In fact, IPnest is the only analyst offering such granularity. This approach has helped build a large customer base, including IP vendors, ASIC design houses, foundries, fabless companies and IDMs. Ranking the numerous IP vendors by protocol is very useful, but not enough: IPnest adds market intelligence, not only raw data!

The winners in 2013

HDMI is again this year a very successful protocol. It succeeds both in terms of market penetration in the Consumer/HDTV segment and in terms of pervasion into various other segments. These include PCs, wireless handsets (smartphones), set-top boxes, DVD players and recorders, digital camcorders, digital still cameras and even automotive. I guess automotive comes thanks to platforms like TI OMAP, as the chip maker had to enter new segments after giving up on wireless. Analyst consensus is that almost 3 billion HDMI ports have shipped since the protocol’s inception. DisplayPort has become complementary to HDMI: its adoption was confirmed in 2012, after strong growth in 2011. The protocol is well tailored for interfacing a PC with a screen, so that is naturally where adoption is high. We clearly rank DisplayPort in the winners list.

According to Silicon Image, adoption of “Mobile High-Definition Link” (MHL) is growing very fast. MHL provides the same bandwidth capability (and compatibility) as HDMI 1.4, but with a micro-USB (5-pin) connector instead of the traditional HDMI connector. Keep in mind that MHL will be used primarily in mobile electronic systems: smartphones, media tablets and probably notebook and ultrabook PCs. That makes over one BILLION potential devices integrating MHL in 2013…

In the past, the semiconductor and electronics industry had some concerns with the HDMI protocol: companies had to pay royalties to HDMI LLC, but they could not influence the specifications. Until late 2011, HDMI LLC was a closed standards body consisting of seven founders and over 1,000 adopters. The seven founders architected the HDMI specification in a closed-door environment. In October 2011, the HDMI founders established a nonprofit corporation called HDMI Forum. Its purpose is to foster broad industry participation in the development of future versions of the HDMI specification.

Consider three factors together. HDMI is an efficient base protocol. It has two new developments: MHL, which addresses the connector form factor, and HDMI 2.0, which extends HDMI to higher definition. And it is sold to customers who are happier today than in the past. Together, these are good reasons for HDMI IP sales to have jumped in 2012 and to grow again in 2013.

MIPI is a set of interface specifications initially tailored specifically for wireless phone systems. It defines almost every kind of chip-to-chip interface:

- Camera to Application Processor (AP), with CSI
- Display to AP (DSI)
- Baseband to AP (Low Latency Interface, allowing an external DRAM to be shared)
- Main SoC to RF chipset (DigRF)
- Another dozen specifications

I will come back to MIPI in an upcoming blog. It will explain why MIPI IP sales were multiplied by four from 2010 to 2012, with a 60% increase in 2012.
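The two MIPI growth figures quoted above also imply what happened in 2011. Here is a back-of-the-envelope check. Write $S_y$ for MIPI IP sales in year $y$; this notation is introduced here and is not IPNEST's. Read “multiplied by four” as $S_{2012} = 4\,S_{2010}$:

```latex
\begin{aligned}
S_{2012} &= 4\,S_{2010}, \qquad S_{2012} = 1.6\,S_{2011} \\
\Rightarrow\; S_{2011} &= \frac{4}{1.6}\,S_{2010} = 2.5\,S_{2010}
\end{aligned}
```

On these figures, the largest jump came in 2011 (+150%), with 2012 adding a further 60%.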

To give a complete picture of MIPI, it’s important to note that the MIPI Alliance has consolidated MIPI’s position within the interface ecosystem. It has done so by concluding very promising agreements with three standards organizations:

- JEDEC: definition of Universal Flash Storage (UFS), to be used in conjunction with MIPI M-PHY within a mobile system

- USB-IF: specification of SuperSpeed USB Inter Chip (SSIC), where the USB 3.0 PHY can be replaced by MIPI M-PHY, offering high bandwidth and low power capabilities for chip to chip communication in a mobile system

- PCI-SIG: definition of “Mobile Express”, delivering an adaptation of the PCI Express® (PCIe®) architecture to operate over the MIPI M-PHY® physical layer technology

We will use these agreements to introduce the next two Interface protocol winners: USB 3.0 and PCI Express.

SuperSpeed USB IP started selling well in 2012: last year’s design-start count equaled the sum of the design starts in 2009, 2010 and 2011. In the meantime, many IP vendors have given up (PLDA and Snowbush, to name a few). The result is a clear, undisputed winner in the USB 3.0 market: Synopsys! But Cadence made two acquisitions in 2013, Cosmic Circuits and Evatronix. The first brings USB 3.0 PHY IP, and the second a USB 2.0 integrated solution plus USB 3.0 controller IP. This indicates that the EDA and IP vendor has not given up on USB 3.0. Fair competition is always good for the market! Moreover, USB-IF is launching USB 3.1, offering a (doubled) 10 Gbps data rate. A solid roadmap is always a good indication that a protocol will live for a long time. It could also be a good way to reshuffle the deck and change the IP vendor landscape.

PCI Express penetration started in 2005 and has never stopped since. The technology has been adopted in many, many market segments, with the notable exceptions of consumer electronics and mobile wireless. In fact, PCIe’s success will go even further:

- At the end of 2011, the SATA-IO organization decided to offer “SATA Express”.
- The Non Volatile Memory storage application interface will be supported by NVM Express.
- In 2012, the MIPI Alliance defined “Mobile Express”.

If we look at cumulative PCI Express IP revenues since its inception, we realize that the technology has generated more than $300M of IP license business. We can cite four reasons why PCIe IP sales should continue to grow:

- PCIe gen-3 (8 Gbps) is selling well, at a higher price than gen-2

- PCIe gen-4 (16 Gbps) is probably in the pipeline, to be finalized in early 2015; expect fewer IP sales than gen-3, BUT at much higher pricing (PHY IP over $1M)

- Mobile Express IP sales represent net new growth, as the standard is new

- The same goes for SATA Express; NVMe is more questionable
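The gen-3 and gen-4 figures above are raw per-lane transfer rates (8 and 16 GT/s). Line coding takes part of that raw rate. The sketch below shows how much usable bandwidth remains. It uses the standard PCIe line codes: 8b/10b for gen-2 and 128b/130b for gen-3 and gen-4. It ignores packet and protocol overhead, so real throughput is lower still.

```python
from fractions import Fraction

# Raw rate (GT/s per lane) and line-code efficiency for each PCIe generation.
PCIE_GENS = {
    "gen-2": (5, Fraction(8, 10)),     # 8b/10b encoding
    "gen-3": (8, Fraction(128, 130)),  # 128b/130b encoding
    "gen-4": (16, Fraction(128, 130)), # 128b/130b encoding
}

def effective_gbps(gen: str, lanes: int = 1) -> float:
    """Payload bandwidth in Gbit/s after line-code overhead only."""
    rate, efficiency = PCIE_GENS[gen]
    return float(rate * efficiency * lanes)

for gen in PCIE_GENS:
    print(f"{gen}: {effective_gbps(gen):.2f} Gbit/s per lane, "
          f"{effective_gbps(gen, lanes=16) / 8:.1f} GB/s for an x16 link")
```

The move from 8b/10b to 128b/130b is why gen-3 nearly doubles gen-2’s usable bandwidth despite only a 1.6x higher raw rate.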

This last protocol is seeing wide adoption, or more precisely a growing outsourcing rate, and simply the fastest-growing and largest IP sales: DDRn. A DDRn controller is a means to interconnect an SoC with memory. It uses a digital part (the controller) and a physical media access part (the PHY), so it is built like every other modern high-speed protocol. We have shown in the “Interface IP Survey” that, even as ASIC design starts decline year after year, the proportion of SoCs among these design starts keeps growing. An SoC is defined as a chip integrating one or more processors (CPU, GPU, DSP or microcontroller), and such chips typically need external DRAM. So as SoC design starts increase, the number of DDRn controllers grows at the same rate. Designing a DDRn controller from scratch is becoming harder to manage as DRAM frequencies increase; for example, it forces a move from a “soft PHY” to a hardened PHY. For that reason, external sourcing of DDRn controller IP is growing faster than for any other interface IP. This may sound like theory, but actual sales of DDRn controller IP are growing in line with the theory! Just look at these results from IPNEST for 2008-2012. The DDRn IP segment is growing strongly. Leadership is split between Synopsys (again), Cadence (thanks to the Denali acquisition) and ARM.

Misc… but not least: Network-on-Chip

Network on Chip (NoC) is neither a protocol nor an interface. It is an interconnect function, buried inside an SoC, that connects, manages and monitors the multiple IP blocks. As such, a NoC is by definition connected to all the interface functions, from the DDRn memory controller to USB, PCIe, UFS and so on. What we have seen in 2011-2013 is the strong penetration of NoC IP into various market segments (wireless, consumer electronics, automotive and more). Yet the NoC was still at the concept stage in the mid-2000s. This trend has been so strong that a NoC IP vendor like Arteris increased its revenue from upfront licenses dramatically between 2010 and 2012. But trees don’t grow to the sky, everybody knows this… unless Qualcomm buys them first, as it did with Arteris for a reported quarter of a billion dollars. SemiWiki told you last year how good Arteris was; thanks to Qualcomm for confirming our views.

The losers in 2013

Just take a look at the same article written last year, as there are no newcomers to this list!

Eric Esteve from IPNEST

Table of Contents for “Interface IP Survey 2008-2012 – Forecast 2013-2017” available here.



IPNEST customers find the following types of answers in the “Interface IP Survey”:

- 2013-2017 Forecast, by protocol, for USB, PCIe, SATA, HDMI, DDRn, MIPI, Ethernet, DisplayPort, based on a bottom-up approach, by design start by application

- License price by type for the Controller (Host, Device or Dual Mode)

- License price by technology node for the PHY

- License price evolution: technology node shift for the PHY, Controller pricing by protocol generation

- By protocol, competitive analysis of the various IP vendors: when you buy an expensive and complex IP, the price is important, but other issues count as well, such as:

  - Will the IP vendor stay in the market and keep developing the new protocol generations?

  - Is the PHY IP vendor tied to only one ASIC technology provider, or does it support various foundries?

  - Is one IP vendor “ultra-dominant” in this segment, so that my chance of success is low if I plan to enter this protocol market?

Meeting the Challenges of Designing Internet of Things SoCs with the Right Design Flow and IP

Connecting “things” to the Internet and enabling sensing and remote control, data gathering, transmission, and analysis improves many areas: safety and quality of life, healthcare, manufacturing and service delivery, energy efficiency, and the environment. The concept of the Internet of Things (IoT) is quickly becoming a reality. At this year’s IDC Smart TECHnology Conference, attendees learned that IoT connected devices could number 50 billion by 2020 and the data generated by these devices could reach 50 trillion gigabytes. Clearly, there is significant opportunity for system and semiconductor companies developing the connected technologies that are fueling this space.

A typical IoT node integrates one or multiple sensors, analog front-end (AFE) modules, micro-electro-mechanical systems (MEMS), analog-to-digital converters (ADC), communication interfaces, wireless receivers/transmitters, a processor, and memory. Therefore, the system on chip (SoC) embodying the IoT node function is a microcontroller integrated with analog peripherals, creating an inherently mixed-signal design. To design SoCs for IoT applications in a competitive landscape where differentiation in features and price is critical, designers must address some key challenges, including:

- Integration of analog and digital functions

- Software-hardware verification

- Power consumption

Low Power: How Low Can You Go?

Power consumption is one of the most critical considerations for IoT applications because the devices typically operate on batteries for many years. Ideally, they recharge by harvesting energy from the environment. To minimize power consumption, designers choose power-efficient processors, memory, and analog peripherals. They also optimize the system so that only the necessary parts operate at a given time, while the rest of the system remains shut down. For example, consider a device that senses pressure. If there are no changes, only the peripheral monitoring the sensor is powered on. When a pressure change is detected, it wakes the rest of the system to process the information and send it to the host. Another example is a smart meter. Most of the time, this type of device is in standby mode. It wakes up every so often to collect power usage data and sends this data perhaps once a day to the power company. Some parts of the design are off, others are on. There might be about a dozen different modes of operation within the system, and all of them need to be verified.
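A quick calculation shows why duty cycling matters for battery life. The sketch below computes time-weighted average power for a device that wakes briefly and then sleeps. All numbers are hypothetical assumptions, not figures from the article: 15 mW while active, 5 µW asleep, 2 s of activity every 15 minutes, and a 2,000 mWh battery.

```python
def average_power_uw(active_uw: float, sleep_uw: float,
                     active_s: float, period_s: float) -> float:
    """Time-weighted average power of a device awake for active_s out of every period_s."""
    duty = active_s / period_s
    return duty * active_uw + (1.0 - duty) * sleep_uw

def battery_life_years(capacity_mwh: float, avg_uw: float) -> float:
    """Ideal battery life, ignoring self-discharge and regulator losses."""
    hours = capacity_mwh * 1000.0 / avg_uw   # mWh -> uWh, divided by uW gives hours
    return hours / (24 * 365)

# Hypothetical smart-meter profile (illustrative numbers only).
duty_cycled = average_power_uw(active_uw=15_000, sleep_uw=5, active_s=2, period_s=900)
always_on = average_power_uw(active_uw=15_000, sleep_uw=5, active_s=900, period_s=900)

print(f"duty-cycled: {duty_cycled:.1f} uW -> {battery_life_years(2_000, duty_cycled):.1f} years")
print(f"always on:   {always_on:.1f} uW -> {battery_life_years(2_000, always_on) * 365:.1f} days")
```

Under these assumed numbers, the same battery lasts years instead of days. That is why each sleep, standby and active mode has to be designed, and verified, carefully.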

To optimize power consumption, designers use many different techniques, including multiple supply voltages, power shutoff with or without state retention, adaptive and dynamic frequency scaling, and body biasing. In a pure digital design, implementation and verification of these low-power techniques are highly automated in a top-down methodology following common power specifications.
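Voltage and frequency scaling pays off because of the standard CMOS switching-power relation $P = \alpha C V^2 f$. The sketch evaluates it at two operating points. The operating points and capacitance are assumptions for illustration, not values from any particular process. The model covers dynamic power only. Leakage is what power shutoff and body biasing address.

```python
def dynamic_power_w(activity: float, cap_f: float, vdd_v: float, freq_hz: float) -> float:
    """CMOS switching power: P = alpha * C * V^2 * f."""
    return activity * cap_f * vdd_v ** 2 * freq_hz

def energy_per_cycle_j(activity: float, cap_f: float, vdd_v: float) -> float:
    """Energy per clock cycle, P / f = alpha * C * V^2 (independent of frequency)."""
    return activity * cap_f * vdd_v ** 2

# Illustrative operating points: nominal vs. half frequency at a lower supply.
nominal = dynamic_power_w(0.1, 1e-9, 1.2, 100e6)
scaled = dynamic_power_w(0.1, 1e-9, 0.9, 50e6)

print(f"power ratio:        {scaled / nominal:.3f}")
print(f"energy/cycle ratio: "
      f"{energy_per_cycle_j(0.1, 1e-9, 0.9) / energy_per_cycle_j(0.1, 1e-9, 1.2):.3f}")
```

Lowering frequency alone reduces power but not the energy spent per operation. Lowering the supply voltage reduces both, quadratically, which is why the two are usually scaled together.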

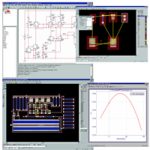

Analog content in IoT devices presents more challenges. It is usually implemented bottom-up without explicit low-power specifications, leaving transistor-level simulation as the only verification option. Cadence has automated mixed-signal simulation using the Common Power Format (CPF). CPF specifies behavior at crossings between analog and digital domains when power domains change state or shut off. Furthermore, Cadence® Virtuoso® Schematic Editor is able to capture power intent for a custom circuit and export it in CPF format for static low-power verification. The static method is much faster than simulation for discovering common low-power errors, like missing level shifters or isolation cells.
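Static low-power checking needs no stimulus: the tool inspects each domain crossing against the declared power intent. The minimal sketch below shows the two checks named above. The data model and domain names are hypothetical, and this is neither CPF syntax nor how any Cadence tool implements the check. The isolation rule is simplified: it flags every output of a switchable domain, even where a real tool might prove isolation unnecessary.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PowerDomain:
    name: str
    voltage: float       # nominal supply in volts
    switchable: bool     # True if the domain can be shut off

@dataclass(frozen=True)
class Crossing:
    net: str
    src: PowerDomain     # driving domain
    dst: PowerDomain     # receiving domain
    level_shifter: bool = False
    isolation: bool = False

def check_crossings(crossings):
    """Return rule violations found by inspecting domain crossings (no simulation)."""
    errors = []
    for c in crossings:
        if c.src.voltage != c.dst.voltage and not c.level_shifter:
            errors.append(f"{c.net}: missing level shifter "
                          f"({c.src.name} {c.src.voltage} V -> {c.dst.name} {c.dst.voltage} V)")
        # Simplified rule: a powered-off driver can float its outputs, so any
        # signal leaving a switchable domain is flagged unless it is isolated.
        if c.src.switchable and not c.isolation:
            errors.append(f"{c.net}: missing isolation cell ({c.src.name} can power off)")
    return errors

# Hypothetical domains: always-on digital at 1.0 V, switchable analog front end at 1.8 V.
aon = PowerDomain("AON", 1.0, switchable=False)
afe = PowerDomain("AFE", 1.8, switchable=True)

for msg in check_crossings([
    Crossing("adc_data", afe, aon),                        # violates both rules
    Crossing("afe_enable", aon, afe, level_shifter=True),  # clean
]):
    print(msg)
```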

Hardware-Software Verification

Software plays a crucial role in IoT devices as sensor controls, data processing, and communication protocols are functions often implemented in software. Therefore, system verification must include both software and hardware. To reduce verification time, it is important to start software and hardware development and verification in parallel. For example, instead of waiting for silicon, software development and debugging should start earlier using a virtual prototyping methodology. Cadence Virtual System Platform provides the capability to create virtual models and integrate them into a virtual system prototype for early system verification, software development, and debugging.
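The core idea of virtual prototyping is that driver code talks to a register-level model instead of to silicon. The sketch below is a toy illustration in plain Python. The peripheral, its register offsets and its bit meanings are all hypothetical. It is not SystemC/TLM, and it is not the Cadence Virtual System Platform API.

```python
# Register offsets and bits of a hypothetical pressure-sensor peripheral.
CTRL, STATUS, DATA = 0x00, 0x04, 0x08
START, DATA_READY = 0x1, 0x1

class VirtualPressureSensor:
    """Register-level model standing in for silicon that does not exist yet."""

    def __init__(self, samples):
        self._samples = list(samples)              # canned sensor readings
        self._regs = {CTRL: 0, STATUS: 0, DATA: 0}

    def write(self, addr: int, value: int) -> None:
        self._regs[addr] = value
        if addr == CTRL and value & START and self._samples:
            self._regs[DATA] = self._samples.pop(0)
            self._regs[STATUS] |= DATA_READY

    def read(self, addr: int) -> int:
        value = self._regs[addr]
        if addr == DATA:
            self._regs[STATUS] &= ~DATA_READY      # reading DATA clears the ready flag
        return value

def read_pressure(bus, max_polls: int = 100) -> int:
    """Driver code under development: start a conversion, then poll for the result."""
    bus.write(CTRL, START)
    for _ in range(max_polls):
        if bus.read(STATUS) & DATA_READY:
            return bus.read(DATA)
    raise TimeoutError("sensor did not report DATA_READY")

sensor = VirtualPressureSensor([1013, 1015])
print(read_pressure(sensor), read_pressure(sensor))
```

Once real hardware arrives, the same `read_pressure` routine can be pointed at the actual bus, and the bugs found against the model have already been fixed.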

When it comes to systems that include analog, Cadence offers some unique capabilities. Incisive® Enterprise Simulator is capable of simulating an entire system: a processor at register-transfer level (RTL) running compiled software, digital blocks in RTL, and analog blocks modeled using real number models. This enables hardware and software engineers to start collaborating sooner, developing software and hardware concurrently instead of sequentially.

High Level of Integration

To ease the development process and shorten the design cycle for IoT devices, designers re-use intellectual property (IP) blocks for a variety of functions. They either design these IP blocks in house, or acquire them from outside vendors, so they can focus on a few differentiating blocks and on integration. Getting the SoC integrated quickly and cost-effectively is the key to success.

Integrating analog IP requires special care. To verify that the system functions properly in all possible scenarios, designers use simulation. Simulating analog parts at the transistor level is necessary for some aspects of performance verification. However, it is not the most efficient way to incorporate analog into SoC functional verification. Cadence has developed a methodology based on very efficient real number models (RNM). It abstracts analog at a higher level, enabling SoC verification without a major performance penalty. Automated model generation and validation capabilities in the Virtuoso platform help designers overcome traditional modeling challenges. They also help designers take advantage of simulation using RNM, supported in Verilog-AMS or in the recently standardized SystemVerilog (IEEE 1800) extensions.

Using RNM, designers can validate functionality of the design in many different scenarios more thoroughly and much faster, and leave only specific performance verification to transistor-level simulation.
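A real number model replaces a transistor netlist with a few lines that map a real-valued input to the block's functional output. The sketch below models an ideal 10-bit ADC this way. In practice, RNMs are written in Verilog-AMS or SystemVerilog real-valued constructs. Python is used here only to show the level of abstraction, and the reference voltage and resolution are assumed values.

```python
def adc_rnm(v_in: float, v_ref: float = 1.8, bits: int = 10) -> int:
    """Ideal N-bit ADC as a real-valued behavioral model: clamp, then quantize."""
    full_scale = 1 << bits
    v = min(max(v_in, 0.0), v_ref)        # clamp to the input range
    code = int(v / v_ref * full_scale)    # truncating quantizer
    return min(code, full_scale - 1)      # v == v_ref maps to the top code

# Sweep a ramp, as a functional testbench might, in microseconds rather than SPICE hours.
ramp = [i * 0.2 for i in range(-1, 11)]   # -0.2 V .. 2.0 V
print([adc_rnm(v) for v in ramp])
```

Offset, gain error or noise terms can be added to the same function when a scenario needs them. Detailed performance questions, such as linearity across corners, still go to transistor-level simulation.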

Once an IoT design is verified, it is important to realize it in silicon, productively. To ensure design convergence throughout the physical implementation process, analog and digital designers must closely collaborate on deriving an optimal floorplan, full-chip integration, and post-layout performance and physical signoff. Cadence integrated its leading Virtuoso analog and Encounter® digital platforms on the industry-standard OpenAccess database to provide a unified flow for mixed-signal designs. The flow operates on the common database for analog and digital that requires no data translation and enables easier iteration between analog and digital designers in optimizing the floorplan, implementing engineering change orders (ECOs), and performing full-chip integration and signoff.

Summary

The modern world will continue to get more connected, and the electronic products that make this possible, smarter. This creates not only more challenges but more opportunities for design engineers creating the complex SoCs that power these smart, connected products. Processors, analog components, IP blocks, tools, and methodologies all play important roles in addressing power, integration, and price challenges. With the right design solutions, engineers can deliver differentiated products that support what some experts say is a key enabler of the fourth industrial revolution: the Internet of Things.

By Mladen Nizic, Engineering Director, Mixed-Signal Solutions, Cadence


5 Rules of Power Management Using NoCs

If it has escaped your notice that power management on SoCs is important, then you need to get out more. The interconnect between the various processors, memories, offload processors, devices, interfaces and other blocks is increasingly complex. As a result, the best way to implement it is to use a network on chip (NoC). But if the NoC is not used optimally, the power dissipated will be much higher than it needs to be. The NoC has a level of intelligence within itself, and this can be put to work. For example, blocks can be powered down aggressively, safe in the knowledge that the NoC will never attempt to deliver data to a sleeping block without waking it.

So here are the five rules of power management using NoCs:

Rule 1: The NoC must be fast.

- A well-designed network that is also very fast allows the synthesis tool to use high-Vt transistors. Designs with a high percentage of high-Vt transistors can reduce leakage power by as much as 75% compared to designs using the faster but leakier low-Vt transistors.

- A fast NoC means that the synthesis constraints are less tight and so fewer and smaller buffers are required inside blocks resulting in further power saving
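The leakage benefit scales with how much of the design can use high-Vt cells, and a fast NoC raises that share. A minimal sketch follows. It assumes every cell has the same leakage weight, and that a high-Vt cell leaks 25% of its low-Vt equivalent, which is simply what the quoted "as much as 75%" implies for an all-high-Vt design. Real ratios depend on the process.

```python
def leakage_reduction(high_vt_fraction: float, high_vt_rel_leakage: float = 0.25) -> float:
    """Fractional leakage saved vs. an all-low-Vt design.

    Assumes equal leakage weight per cell and that a high-Vt cell leaks
    high_vt_rel_leakage times as much as the equivalent low-Vt cell.
    """
    if not 0.0 <= high_vt_fraction <= 1.0:
        raise ValueError("high_vt_fraction must be in [0, 1]")
    mixed = high_vt_fraction * high_vt_rel_leakage + (1.0 - high_vt_fraction)
    return 1.0 - mixed

for frac in (0.5, 0.8, 0.95, 1.0):
    print(f"{frac:.0%} high-Vt cells -> {leakage_reduction(frac):.1%} less leakage")
```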

Rule 2: The NoC must support both coarse-grain and fine-grain clock gating

- Careful clock gating that is architected into the design will produce a much better result than simply relying on the synthesis tools. Results that clock gate more than 99.9% of the design can be achieved.

- Coarse-grained clock gating gates entire branches of the clock tree. It saves not just the energy the unclocked flops would otherwise consume but also the power needed to drive the clock network itself. Since the clock network can consume up to 30% of the power on a chip, this can lead to big savings.
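The difference between the two gating styles can be put in numbers. Only the 30% clock-network share below comes from the text. The flops' own clock power share (20%) and the idle fraction are assumptions. The sketch also simplifies fine-grain gating: it treats the tree branch as still fully toggling, ignoring the leaf nets a local gate does stop.

```python
# Fractions of total chip power. CLOCK_TREE is the figure quoted above;
# FLOP_CLOCK is an illustrative assumption.
CLOCK_TREE = 0.30   # clock buffers and wires
FLOP_CLOCK = 0.20   # clock-pin/internal power of the flops themselves

def gating_savings(idle_fraction: float, coarse: bool) -> float:
    """Fraction of total power saved when idle_fraction of the design is gated.

    Assumes the idle logic owns the same fraction of the clock tree.
    Fine-grain (simplified): idle flops stop, but their tree branch keeps toggling.
    Coarse-grain: the whole branch, tree included, stops.
    """
    saved = FLOP_CLOCK + (CLOCK_TREE if coarse else 0.0)
    return idle_fraction * saved

print(f"fine-grain:   {gating_savings(0.5, coarse=False):.0%} of total power saved")
print(f"coarse-grain: {gating_savings(0.5, coarse=True):.0%} of total power saved")
```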

Rule 3: The NoC must allow clock and power “domain boundaries” within the NoC

- Different clocks for different parts of the network.

- Different voltage supplies for different parts of the network.

- Portions of the network that can be idled or even powered off.

- Group blocks that share the same clock and power domains sensibly, to minimize the components required at each domain crossing (and the CDC checks required)
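Every link that crosses a clock or power boundary needs synchronizers, isolation or level shifting, plus CDC checks. So grouping blocks by domain pays off directly. The small sketch below counts crossings for two ways of attaching the same blocks to two NoC switches. The block names, switch names and domains are all hypothetical.

```python
def count_crossings(links, domain_of):
    """Number of links whose endpoints sit in different (clock, power) domains."""
    return sum(1 for a, b in links if domain_of[a] != domain_of[b])

# Hypothetical blocks and NoC switches; a domain is a (clock, power) pair.
MAIN, IO = ("clk_main", "pd_main"), ("clk_io", "pd_io")
domain_of = {"cpu": MAIN, "gpu": MAIN, "usb": IO, "uart": IO, "sw0": MAIN, "sw1": IO}

# Same blocks, same two switches; only the attachment differs.
mixed = [("cpu", "sw0"), ("usb", "sw0"), ("gpu", "sw1"), ("uart", "sw1"), ("sw0", "sw1")]
grouped = [("cpu", "sw0"), ("gpu", "sw0"), ("usb", "sw1"), ("uart", "sw1"), ("sw0", "sw1")]

print("mixed:", count_crossings(mixed, domain_of), "crossings")
print("grouped:", count_crossings(grouped, domain_of), "crossing")
```

Grouping leaves a single crossing, the switch-to-switch link, where the domain-crossing hardware can be concentrated.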

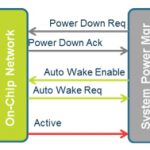

Rule 4: The NoC must be aware of the power state of network components.

- Catch traffic early that accesses powered down parts of the SoC.

- Allow network interfaces to tell the power manager when it is safe to remove power from a block.

- Two alternatives are available when a transaction is received for a component in the system that is powered off: a) reject the traffic (preferably at the source) or b) signal the system power manager that a power domain must be powered up.
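The Rule 4 behaviors can be sketched as a network interface that knows each target's power state. It does three things. It rejects or escalates traffic to a powered-off target at the source. It counts outstanding transactions. And it reports when a target is idle enough to power off. The class, method names and return values are hypothetical, chosen only to illustrate the two options a) and b).

```python
from enum import Enum

class Power(Enum):
    ON = "on"
    OFF = "off"

class NetworkInterface:
    """Hypothetical initiator-side NoC interface that tracks target power states."""

    def __init__(self, target_power: dict, wake_on_access: bool = False):
        self.target_power = target_power          # target name -> Power
        self.wake_on_access = wake_on_access      # choose option b) instead of a)
        self.outstanding = {t: 0 for t in target_power}
        self.wake_requests = []                   # requests raised to the power manager

    def send(self, target: str) -> str:
        if self.target_power[target] is Power.OFF:
            if self.wake_on_access:
                self.wake_requests.append(target)  # b) ask for the domain to power up
                return "wake_requested"
            return "rejected"                      # a) error response at the source
        self.outstanding[target] += 1
        return "sent"

    def complete(self, target: str) -> None:
        self.outstanding[target] -= 1

    def safe_to_power_off(self, target: str) -> bool:
        """True when no transactions to the target are in flight."""
        return self.outstanding[target] == 0

ni = NetworkInterface({"gpu": Power.ON, "dsp": Power.OFF})
print(ni.send("dsp"), ni.send("gpu"), ni.safe_to_power_off("gpu"))
```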

Rule 5: Integrate auto-wake-up features into NoC.

- Network requests wake-up of necessary power domains, without software intervention.

- The alternative to rejecting the traffic is to use a high performance Wake-on-Demand system that can operate without SW intervention. Many SoCs have SW controlled system power management schemes that suffer from high latency. A HW based Wake-on-Demand mechanism operates in a few clock cycles and allows the initiator agent to signal for a wake-up and hold the transaction until it can be completed.
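The Wake-on-Demand sequence can be modeled cycle by cycle. The initiator holds the transaction, raises a wake-up request in hardware, and issues the transaction once the target domain reports it is on. In this toy model, the power-up latency `wake_cycles` is an assumed parameter, not a Sonics figure.

```python
class PowerDomain:
    """Hypothetical power domain needing wake_cycles clock cycles to power up."""

    def __init__(self, wake_cycles: int, on: bool = False):
        if wake_cycles < 1:
            raise ValueError("wake_cycles must be >= 1")
        self.on = on
        self.wake_cycles = wake_cycles
        self._countdown = None           # cycles left in an ongoing power-up

    def request_wake(self) -> None:
        if not self.on and self._countdown is None:
            self._countdown = self.wake_cycles

    def tick(self) -> None:
        """Advance one clock cycle."""
        if self._countdown is not None:
            self._countdown -= 1
            if self._countdown == 0:
                self.on = True
                self._countdown = None

def issue_with_wake_on_demand(domain: PowerDomain, max_cycles: int = 1000) -> int:
    """Hold a transaction, request power-up without software, and return
    the number of cycles the transaction was held before it could be issued."""
    held = 0
    while not domain.on:
        domain.request_wake()            # raised by the NoC interface, not by software
        domain.tick()
        held += 1
        if held > max_cycles:
            raise TimeoutError("target domain never powered up")
    return held

print(issue_with_wake_on_demand(PowerDomain(wake_cycles=4)))
print(issue_with_wake_on_demand(PowerDomain(wake_cycles=4, on=True)))
```

In a software-managed scheme, the same wait would also include interrupt handling and driver code on the processor. That extra software latency is what the hardware path avoids.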

Following these rules means that the power can be significantly reduced compared to using alternative interconnect technologies. In particular, a sophisticated approach to powering down blocks can be undertaken without requiring the embedded software engineers to explicitly handle it. The NoC can take care of it, ensuring that no errors occur due to trying to communicate with a powered down block, something very difficult to guarantee with approaches where power down and power up is completely under software control. And another major power saving versus software control is that the (fairly power-hungry) processor does not need to be powered up all the time.

In April, ARM licensed 138 Sonics patents, some of them in these power areas. ARM has its own proprietary NoC technology, AMBA 4 AXI and ACE, but it came relatively late to the NoC game. By then, Sonics had already developed many of the fundamental technologies required for an effective NoC. ARM is also working with Sonics on next-generation NoC technology.

More information on Sonics’s 4th generation GHz NoC technology is here.

Design Methodology and its Impact on the Future of Electronics

Today at the Semisrael Expo 2013 (in Israel, of course) Ajoy Bose gave a keynote on how design methodology will impact electronics. The big picture is that major disruptive forces are driving microelectronics. As a result, technology and industry are evolving dramatically, which creates a need for research and innovation and also new business opportunities.

What are these disruptive forces?

- Business: industry is now being driven by consumer and mobile

- Design: designing from scratch is being replaced by assembling blocks

- Technology: smaller technology nodes are creating new challenges for power, timing, test, routing congestion and more

- The conventional methods are being fractured

Of course, the cost and complexity of designs have been rising fast in recent years. At 45nm, an SoC design cost about $68M and the SoC contained about 81 blocks. By 22/20nm, the cost had risen to $164M and the block count to 190. The probability of a re-spin is about 40%, and a re-spin is, of course, a disaster in terms of time-to-market.
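Dividing the quoted costs by the quoted block counts is revealing. The arithmetic below uses only the figures in the paragraph above:

```latex
\begin{aligned}
\text{45 nm:} \quad & \frac{\$68\,\text{M}}{81\ \text{blocks}} \approx \$0.84\,\text{M per block} \\
\text{22/20 nm:} \quad & \frac{\$164\,\text{M}}{190\ \text{blocks}} \approx \$0.86\,\text{M per block} \\
\text{ratio:} \quad & \frac{164}{68} \approx 2.4\times \text{ the cost}, \qquad \frac{190}{81} \approx 2.3\times \text{ the blocks}
\end{aligned}
```

On these numbers, cost per block is roughly flat, and total cost grows mostly with the number of blocks. That fits the shift from designing from scratch to assembling blocks.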

And most of these are consumer markets: if your phone is not available in time for Christmas, you don’t get to sell it next March for less money since everyone will have bought a different phone. The speed of adoption of smartphones compared to older technologies such as television is astounding.

The methodologies to support block-assembly designs are not fully in place. One key is to move as much of the design methodology as possible up to RTL. A lot can be done with signoff confidence at this level: test, power, congestion, clocks. The advantage of working at this level is that issues are discovered and fixed close to the source. Complexity is much lower (a little RTL can generate a lot of gates and layout), and design exploration is easier at this level. Of course, signoff still needs to be done after layout, when all the details are in place. The purpose of the RTL-based approach is to ensure that very few problems remain to be dealt with at that point.

High-quality IPs are the starting foundation for an SoC. Tools such as Atrenta’s IP Kit, which is based on SpyGlass, detect and fix problems in the IP early, resulting in SpyGlass Clean IP. The big players such as TSMC are using this approach. They make most of their money when chips go into volume production. So they have a strong incentive to shorten the delay between the availability of IP and processes and volume production.

The volume of data is overwhelming. An approach based on hierarchical abstraction models is needed, retaining just enough of the internals to be able to assemble models and verify them against each other. This requires two sets of tools: at the IP level to create the models and at the SoC level to put them together and debug and fix any issues. With this approach run times and memory requirements can be reduced by 10x to 100x.
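One common way to build such an abstraction keeps only the logic that touches the block boundary. That means the paths from input ports to the first register and from the last register to output ports. Everything purely internal is dropped. The sketch below applies that idea to a toy netlist graph. It is similar in spirit to the “interface logic models” used in hierarchical timing, but it is an illustrative assumption, not Atrenta’s method, and the cell names are hypothetical.

```python
from collections import deque

def interface_logic(fanout, inputs, outputs, registers):
    """Cells on input->first-register and last-register->output paths."""
    fanin = {}
    for src, dsts in fanout.items():
        for dst in dsts:
            fanin.setdefault(dst, []).append(src)

    def walk(starts, edges):
        seen, queue = set(starts), deque(starts)
        while queue:
            node = queue.popleft()
            if node in registers:          # stop at the register boundary
                continue
            for nxt in edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    return walk(inputs, fanout) | walk(outputs, fanin)

# Toy netlist: r1 -> g5 -> g6 -> r3 is purely internal logic.
fanout = {"in": ["g1"], "g1": ["r1"], "r1": ["g2", "g5"], "g2": ["g3"], "g3": ["r2"],
          "r2": ["g4"], "g4": ["out"], "g5": ["g6"], "g6": ["r3"]}
registers = {"r1", "r2", "r3"}

kept = interface_logic(fanout, ["in"], ["out"], registers)
all_cells = set(fanout) | {d for dsts in fanout.values() for d in dsts}
print(f"kept {len(kept)} of {len(all_cells)} cells: {sorted(kept)}")
```

On real blocks the internal register-to-register logic usually dwarfs the interface logic. The savings then compound across every block the SoC-level tools no longer need to load.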

In the new mobile driven world, fast accurate SoC design is the key factor for success.

Raptor Image Signal Processor

This fall there seems to be a bumper harvest of cores. Today Imagination Technologies announced their latest core for image signal processing. Like all of their cores, it is designed to be part of an SoC and is designed to work with other Imagination cores to build a complete image processing system. In particular, it is designed to work directly with the CMOS sensor(s) in cameras instead of having a separate external ISP (often stacked on the sensor itself), with the obvious cost and power reduction. Bringing the ISP onto the main application processor has many advantages such as higher performance due to the advanced process node typically used, access to main system memory, reduced cost and increased flexibility in sensor choice and, as just stated, the ability to leverage other Imagination IP to create optimized subsystems.

In 2012 Imagination acquired Nethra, which built standalone image processors, and Raptor is the next generation of that technology, delivered as a core. Imagination combined Nethra’s high-performance camera SoC with Nethra’s expertise in lens and sensor tuning. That SoC offers 5MP, 1080p, 12-bit and full functionality, and is already proven in the market by volume shipments. Add Imagination’s expertise in IP creation and delivery, plus its existing image quality and imaging technology, and the PowerVR Raptor ISP was born.

The core is a low-power imaging pipeline developed specifically to enable:

- ultra HD video

- high pixel count photography

- high performance vision systems

- low power wearables and mobile

- augmented reality

- computer vision

This ties into the trend for everything to go mobile. Standalone HD video and still cameras are converging onto smartphones and tablets and, in the future, wearables (think Google Glass). This is a challenge, since the functionality and quality of the specialist solutions now need to be achieved within the size, cost and power envelope of a smartphone.

But consumer is not the only market for vision. Raptor supports up to 16-bit pixel depth, which gives access to demanding markets requiring high dynamic range, like automotive (self-driving cars etc.) and industrial. With support for 10-bit imaging, it has what is needed for 4K Ultra-HD TV. It also supports multiple sensors, so it can handle front and back cameras with a single core, as well as stereo and multi-camera arrays.
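Bit depth sets the ceiling on the dynamic range a pipeline can carry: each extra bit adds about 6 dB. The sketch below computes the ideal, quantization-limited range for the bit depths mentioned in this article. A real camera’s dynamic range is also limited by sensor noise and full-well capacity, so these are upper bounds, not Raptor specifications.

```python
import math

def ideal_dynamic_range_db(bits: int) -> float:
    """Ratio of full scale to one LSB, in dB: 20 * log10(2 ** bits)."""
    return 20.0 * math.log10(2 ** bits)

for bits in (10, 12, 16):
    print(f"{bits}-bit: {2 ** bits} levels, {ideal_dynamic_range_db(bits):.1f} dB")
```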

As I said earlier, the core is not designed to be an entire camera system on its own, but is intended to work with a CPU (I’m sure Imagination would love you to pick MIPS but you don’t have to) and a GPU. This allows for optimization between blocks to keep the power down. Raptor even has a re-entrant streaming port to allow image data to be streamed to custom processors and then reinserted back into the ISP pipe.
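A re-entrant streaming port means the pipeline can hand a partially processed frame to an external processor after a given stage and take the result back before the next stage. The toy sketch below models that control flow. The stage names, their arithmetic and the `custom_filter` stand-in are all hypothetical; they are not Raptor’s actual pipeline stages.

```python
from typing import Callable, List, Optional, Tuple

Frame = List[int]
Stage = Tuple[str, Callable[[Frame], Frame]]

def run_isp(frame: Frame, stages: List[Stage], tap_after: Optional[str] = None,
            external: Optional[Callable[[Frame], Frame]] = None) -> Frame:
    """Run stages in order; optionally stream the frame out after one stage
    to an external processor and re-insert the result into the pipeline."""
    for name, stage in stages:
        frame = stage(frame)
        if name == tap_after and external is not None:
            frame = external(frame)
    return frame

# Hypothetical 10-bit stages operating on a flat list of pixel values.
STAGES: List[Stage] = [
    ("black_level", lambda px: [max(p - 64, 0) for p in px]),
    ("gain",        lambda px: [p * 2 for p in px]),
    ("clip",        lambda px: [min(p, 1023) for p in px]),
]

def custom_filter(px: Frame) -> Frame:
    """Stand-in for a custom processor (here it just adds a fixed offset)."""
    return [p + 1 for p in px]

print(run_isp([64, 100, 600], STAGES))
print(run_isp([64, 100, 600], STAGES, tap_after="black_level", external=custom_filter))
```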

The core is available for licensing to lead partners today with delivery starting in Q1 2014.

There is an Imagination blog with greater detail about Raptor here.

Cadence Design Systems’ Shares Are Surprisingly Cheap

In the third and final (for now) part of this series on the EDA design tool vendors, I’d like to take a closer look at Cadence Design Systems. This is probably the most interesting of the three from both an industry perspective as well as an investment perspective for a variety of reasons. With that said I’d like to first provide some background on the company and its management and then take a closer look at the company’s financials and stock.

Some Rough Times

Rewinding back a bit to around the time of the financial crisis, it’s interesting to see the kind of mess that Cadence (and its shareholders) got into at the time. As it turns out, Cadence – not unlike other software companies – had faced some trouble with respect to the timing of revenue recognition. This wasn’t anything too egregious, particularly as the restatement was only for about $24 million worth of revenues against a revenue base that at the time was nearly $1 billion, but it definitely served to put off some investors. Couple that with the global economy melting down (which took its toll on the financial results of just about every tech company on the planet) and you have a recipe for disaster.

That wasn’t the extent of it, however. On Oct. 15, the company’s president and CEO, Michael Fister, left the company along with four other top-level executives. At the time, growth in the EDA space was sputtering, and Cadence’s customers (in particular NXP and Freescale) were seeing significant business pressures. Cadence itself was facing rather fierce competition in analog and mixed-signal design from both Magma Design Automation and Synopsys. Times were truly tough, and it seemed that Fister decided it would be best to hand the company off to somebody else.

A new era of prosperity

Cadence landed itself a new president and CEO, Lip-Bu Tan, in January 2009. Prior to taking the helm in 2009, he had been on the board of Cadence since 2004. Additionally, he is the chairman of Walden International (a venture capital firm he founded in 1987) and sits on the boards of Ambarella, SINA, and Semiconductor Manufacturing International. Under his leadership, Cadence entered a new era of prosperity.

A quick look at Cadence’s stock price today suggests that the company is much healthier than it was in the 2008/2009 period with shares having roughly tripled from their lows. However, while Synopsys trades at all-time highs, and while Mentor Graphics is quickly approaching its all-time highs, Cadence trades at just under half of its pre-crash 2007 highs and about a third of its 1999/2000 Nasdaq bubble highs (it would be unreasonable to expect many tech companies to reach their 1999/2000 valuations, though, as this was a period of near insanity).

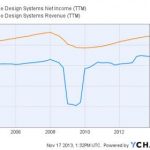

What’s more important to look at, though, is the underlying business performance. Here is a plot of Cadence’s revenue base and its net income over the last ten years:

Notice something interesting? The company is earning more money today than at any point in the last ten years, having fully recovered from its 2008/2009 slump. Its revenue base is still a bit below its 2007 highs, but overall, the company is in good shape. That being said, when you look at how the market actually values the company (that is, what multiple of the company’s earnings investors are willing to pay for the shares), it’s, frankly, not much. While investors are willing to pay 23.5 times earnings for Mentor Graphics and 25.9 times earnings for Synopsys, they’re only willing to cough up 8.30 times earnings for Cadence. Hmm!

Typically speaking, Wall Street (that is, the investment community as a whole) is more willing to pay a premium for today’s earnings if it expects tomorrow’s to be significantly more robust. This would suggest that Mentor and Synopsys are likely to grow at a faster pace and/or have more robust businesses than Cadence. However, what do the professionals think?

What gives?

The analysts covering Cadence believe that it will grow its net income per share by about 14% next year while growing its top line by about 7%. What’s really intriguing is that Wall Street expects Mentor Graphics to grow its bottom line by 10% and its top line by 6%, and Synopsys to grow its bottom line by just 4.5% and its top line by 6.6%. In other words, all three EDA players are expected to grow revenue at just about the same rate, and Cadence is expected to grow earnings the fastest, yet Cadence is by far the cheapest. Why could this be?
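The article doesn’t compute it, but one common shorthand for weighing a multiple against expected growth is the PEG ratio: the P/E multiple divided by expected EPS growth in percent. The sketch below is only an illustration, assuming the trailing P/E multiples and next-year EPS growth estimates quoted above are reasonable inputs; PEG is a rough heuristic, not a valuation model, and the names `valuations` and `peg_ratio` are hypothetical.

```python
# Trailing P/E multiples and consensus EPS growth estimates (percent) as
# quoted in the article; the PEG framing itself is an added assumption.
valuations = {
    "Cadence": {"pe": 8.30, "eps_growth_pct": 14.0},
    "Mentor Graphics": {"pe": 23.5, "eps_growth_pct": 10.0},
    "Synopsys": {"pe": 25.9, "eps_growth_pct": 4.5},
}


def peg_ratio(pe: float, eps_growth_pct: float) -> float:
    """Price/earnings-to-growth: P/E divided by expected EPS growth in percent."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is undefined for non-positive growth")
    return pe / eps_growth_pct


# Round to two decimals for display and comparison.
pegs = {name: round(peg_ratio(**v), 2) for name, v in valuations.items()}

# Print from cheapest (lowest PEG) to most expensive.
for name, peg in sorted(pegs.items(), key=lambda item: item[1]):
    print(f"{name:16s} PEG = {peg:.2f}")
```

With these inputs Cadence comes out at roughly 0.59, against about 2.35 for Mentor Graphics and 5.76 for Synopsys, which restates the puzzle as a single number per company.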

Well, while the financials are an important part of valuing any stock, tech or not, tech is unique in that investors care a lot about perceived barriers to entry. Synopsys, for instance, is not only the leading EDA tool vendor but also the second-largest provider of semiconductor IP, an enviable position to be in. What’s peculiar, however, is that Cadence is probably in a better position for, say, FinFET designs than Mentor Graphics is, if TSMC’s design enablement readiness charts for the various vendors’ tools still hold true.

Indeed, while it’s always important to do your own due diligence before following any analyst’s recommendations, it’s interesting to note that the vast majority of sell-side analysts rate Cadence either a “buy” or an “outperform”. My impression thus far is that Cadence is probably the best deal in the EDA space today for investors, although I plan to do much more work on the stock and report back.

More articles by Ashraf Eassa…



A Brief History of eSilicon

eSilicon Corporation was founded in 2000 with Jack Harding as the founding CEO and Seth Neiman of Crosspoint Venture Partners as the first venture investor and outside Board member. They both remain involved in the company today, with Jack continuing as CEO and Seth now serving as Chairman of the Board.

Both Harding and Neiman brought important and complementary skills to eSilicon that helped the company maneuver through some very challenging times. Prior to eSilicon, Jack was President and CEO of Cadence Design Systems, at the time the largest EDA supplier in the industry. He assumed the leadership role at Cadence after its acquisition of Cooper and Chyan Technology (CCT), where Jack was CEO. Prior to CCT, Jack served as Executive Vice President of Zycad Corporation, a specialty EDA hardware supplier. He began his career at IBM.

Seth Neiman is Co-Managing Partner at Crosspoint Venture Partners, where he has been an active investor since 1994. Seth’s investments include Brocade, Foundry, Juniper and Avanex among many others. Prior to joining Crosspoint, Seth was an engineering and strategic product executive at a number of successful startups including Dahlgren Control Systems, Coactive Computing, and the TOPS division of Sun Microsystems. Seth was the lead investor in eSilicon, and incubated the company with Jack at the dawn of the Pleistocene epoch.

THE EARLY YEARS

eSilicon’s original vision was to develop an online environment where members of the globally disaggregated fabless semiconductor supply chain could collaborate with end customers looking to re-aggregate their services. The idea was straightforward: bring semiconductor suppliers and consumers together and use the global reach of the Internet to facilitate a marketplace where consumers could configure a supply chain online. The resulting offering would simplify access to complex technology and reduce the risk associated with complex design decisions.

Many fabless enterprises had struggled with these issues, taking weeks to months to develop a complete plan for the implementation of a new custom chip. Chip die size and cost estimates were difficult to develop, technology choices were varied and somewhat confusing, and contractual commitments from supply chain members took many iterations and often required a team of lawyers to complete.

The original vision was simple, elegant and sorely needed. However, it proved to be anything but simple to implement. In the very early days of the company’s existence, two things happened that caused a shift in strategy. First, a close look at the technical solutions required to create a truly automated marketplace revealed significant challenges. Soon after its formation, eSilicon hired a group of very talented individuals who had done their original research and development work at Bell Labs. This team had broad knowledge of all aspects of semiconductor design, and it was their detailed analysis that led to a better understanding of the challenges ahead.

Second, a worldwide collapse of the Internet economy occurred soon after the company was founded. The “bursting” of the Internet bubble created substantial chaos for many companies. For eSilicon, it meant that finding a reliable way to monetize its vision would be difficult, even if the company could solve the substantial technical issues it faced. As a result, most of the original vision was put on the shelf. The complete realization of the “e” in eSilicon would have to wait for another day. All was not lost in the transition, however. Business process automation and worldwide supply chain relationships did foster the development of a unique information backbone that the company leverages even today. More on that later.

THE FABLESS ASIC MODEL

Mounting technical challenges and an economic collapse of the target market have killed many companies. Things didn’t turn out that way at eSilicon. Thanks to a very strong early team, visionary leadership and a little luck, the company was able to redirect its efforts into a new, mainstream business model. It was clear from the beginning that re-aggregating the worldwide semiconductor supply chain was going to require a broad range of skills. Certainly design skills would be needed. But back-end manufacturing knowledge was also going to be critical. Everything from package design, test program development, early prototype validation, volume manufacturing ramp, yield optimization, life testing and failure analysis would be needed to deliver a complete solution. Relationships with all the supply chain members would be required and that took a special kind of person with a special kind of network.

eSilicon assembled all these skill sets. That deep domain expertise and broad supply chain network allowed the company to pioneer the fabless ASIC model. The concept was simple: offer the complete design-to-manufacturing services of conventional ASIC suppliers such as LSI Logic, but do it by leveraging a global, outsourced supply chain. Customers would no longer be limited to the fab their ASIC supplier owned, or to that supplier’s cell libraries and design methodology.

Instead, a supply chain could be configured that optimally served the customer’s needs. And eSilicon’s design and manufacturing skills and supply chain network would deliver the final chip. The volume purchasing leverage that eSilicon would build, coupled with the significant learning eSilicon would achieve by addressing advanced design and manufacturing problems on a daily basis would create a best-in-class experience for eSilicon’s customers.

As the company launched in the fall of 2000, the fabless ASIC segment of the semiconductor market was born. Gartner/Dataquest began coverage of this new and growing business segment. Many new fabless ASIC companies followed. Antara.net was eSilicon’s first customer. The company produced a custom chip that would generate real-world network traffic to allow stress-testing of ebusiness sites before they went live. Technology nodes were in the 180nm to 130nm range and between eSilicon’s launch in 2000 and 2004, 37 designs were taped out and over 14 million chips were shipped.

Fabless ASIC was an adequate description for the business model as everyone knew what an ASIC was, but the description fell short. A managed outsourced model could be applied to many chip projects, both standard and custom. As a result, eSilicon coined the term Vertical Service Provider (VSP), and that term was used during the company’s initial public exposure at the Design Automation Conference (DAC) in 2000.

The model worked. eSilicon achieved a fair amount of notoriety in the early days as the supplier of the system chip that powered the original iPod for Apple Computer. The company also provided silicon for 2Wire, a company that delivered residential Internet gateways and associated services for providers such as AT&T. But it wasn’t only the delivery of “rock star” silicon that set the company apart. Some of the original ebusiness vision of eSilicon did survive.

The company launched a work-in-process (WIP) management and logistics tracking system dubbed eSilicon Access® during its first few years. The company received a total of four patents for this technology between 2004 and 2010. eSilicon Access, for the first time, put the worldwide supply chain on the desktop of all eSilicon’s customers. Using this system, any customer could determine the status of its orders in the manufacturing process and receive alerts when the status changed. eSilicon uses this same technology to automate its internal business operations today.

GROWING THE BUSINESS

During the next phase of growth for the company, from 2005 to 2009, an additional 135 designs were taped out and an additional 30 million chips were shipped. Technology nodes now ranged mainly from 90nm down to 40nm. It was during this time that the company began expanding beyond US operations. Through the acquisition of Sycon Design, Inc., the company established a design center in Bucharest, Romania. A production operations center was also opened shortly thereafter in Shanghai, China.

Recognizing the growing popularity of outsourcing, eSilicon expanded the VSP model to include semiconductor manufacturing services (SMS). SMS allowed fabless chip and OEM companies to transition the management of existing chip production or the ramp-up and management of new chip production to eSilicon. The traditional design handoff of the ASIC model was now expanded to support manufacturing handoff. The benefits of SMS included a reduction in overhead for the customer as well as the ability to focus more resources on advanced product development.

Extensions such as SMS caused the Vertical Service Provider model to expand, creating the Value Chain Producer (VCP) model. The Global Semiconductor Alliance (GSA) recognized the significance of this new model and elected Jack Harding to its Board to represent the VCP segment of the fabless industry.

In the years that followed, up to the present day, eSilicon has grown substantially. The number of tape-outs the company has achieved is now approaching 300 and the number of chips shipped is on its way to 200 million. The company has also expanded into the semiconductor IP space. While its worldwide relationships for third-party semiconductor IP are critical to eSilicon’s success, the company recognized that the ability to deliver specific, targeted forms of differentiating IP could significantly improve the customer experience.

Since so many of today’s advanced chip designs contain substantial amounts of on-board memory, this is the area that was chosen for eSilicon’s initial IP focus. The company acquired Silicon Design Solutions, a custom memory IP provider with operations in Ho Chi Minh City and Da Nang, Vietnam. This acquisition added 150 engineers to focus on custom memory solutions for eSilicon’s customers.

As of June 30, 2013, eSilicon employs over 420 full-time people worldwide, of whom over 350 are dedicated to engineering. Headquartered in San Jose, California, the company maintains operations in New Providence, New Jersey; Allentown, Pennsylvania; Shanghai, China; Seoul, South Korea; Bucharest, Romania; Singapore; and Ho Chi Minh City and Da Nang, Vietnam. The company’s diverse global customer base consists of fabless semiconductor companies, integrated device manufacturers, original equipment manufacturers and wafer foundries. eSilicon sells through both an internal sales force and a network of representatives.

THE EVOLVING MODEL

The eSilicon business model has evolved further. VSP and VCP are now SDMS (semiconductor design and manufacturing services), arguably the longest name but perhaps the most intuitive. Through the years, eSilicon has allowed a broad range of companies to reap the benefits of the fabless semiconductor model, many of which couldn’t have done it on their own.

This ability to bring a worldwide supply chain within reach to smaller companies gave eSilicon its start, but the model has worked well for eSilicon beyond these boundaries. Today, eSilicon serves customers that are much larger than eSilicon itself; customers that could “do what eSilicon does.” In the early days, the company discounted its chances of winning business at an enterprise big enough to maintain an “eSilicon inside.”

Time has proven this early thinking to be too limiting. Many of eSilicon’s customers today can clearly maintain an “eSilicon inside,” but they still rely on eSilicon to deliver their chips. Why? In two words, opportunity cost. It has been proven over time that for any enterprise the winning strategy is to focus on the organization’s core competence and invest in that. All other functions should be outsourced in the most reliable and cost-effective manner possible. Simply put, eSilicon’s core competency fits in the outsourcing sweet spot for many, many organizations. This trend has created new value in the fabless semiconductor sector and facilitated many new design starts.

WHAT’S NEXT

As the fabless model grows, there are new horizons emerging. During its early days, the vision of using the Internet to facilitate fabless technology access and reduce risk was largely put on the shelf. The reasons included the challenges of solving complex design and manufacturing problems and the lack of a clear delivery mechanism over the Web.

Today, these parameters are changing. The Internet is now an accepted delivery vehicle for a wide array of complex business-to-business solutions. eSilicon’s talented engineering team has also developed a substantial cloud-enabled environment that is used to automate its internal design and manufacturing operations every day. This team consists of many of the same people who highlighted the challenges of addressing these issues in the company’s early years. What a difference a decade can make.

What if that automated environment could be made available to end users in a simple, intuitive way? New work at eSilicon is taking the company in this direction. The recent announcement of an easy-to-use multi-project wafer quote system is an example. What once could take two weeks or more, consisting of many inquiries and legal agreement reviews, is now done in as little as five minutes with an extension to eSilicon Access. With availability on both the customer’s desktop and smartphone, this is clearly the beginning of a new path. eSilicon changed the landscape of fabless semiconductor in 2000 with the introduction of the fabless ASIC model. It’s time to do it again and bring back the “e” in eSilicon.

Social Media at Synopsys

When I talk about social media and mention Synopsys you may quickly think of Karen Bartleson, the Senior Director of Community Marketing, because she:

- Blogs and podcasts

- Created the best interactive DAC game ever in 2013 using barcodes and points

- Tweets @karenbartleson

- Is president of the IEEE Standards Association

- Has over 500 connections on LinkedIn

Karen Bartleson


TowerJazz and Silvaco BFF

Last week was the TowerJazz Technology Fair 2013. TowerJazz is the fourth-biggest foundry in the world after TSMC, GF and UMC. They have fabs in Newport Beach (the old Jazz, itself with roots in Rockwell), two in Israel (the old Tower, with roots in National Semiconductor) and one in Japan (acquired from Micron). The technology fair ran over two days, the first focused on aerospace and defense and the second on consumer. Silvaco seemed to be a theme running through the day, with so many customers using Silvaco tools.

Aerospace and defense applications often require specialized processes or, at least, specialized analysis of effects such as single-event upsets. This plays to Silvaco’s strengths: they can use their TCAD tools to analyze and develop the process and tie that back seamlessly into their circuit simulation technology to analyze actual designs.

This, in turn, is driven by the availability of a wide range of PDKs for TowerJazz’s processes:

- TS18SL – 0.18um CMOS 5V

- TS18IS – 0.18um CMOS 5V + CIS

- BCD25MB – 0.25um CMOS

- CA13HC – 0.13um CMOS

- CA18HA – 0.18um CMOS

- CA18HD – 0.18um CMOS

- CA18HR – 0.18um CMOS

- CA18HG – 0.18um CMOS

- CA25QFS – 0.25um CMOS

- SBC18H3 – 0.18um BiCMOS SiGe

- SBC18H2 – 0.18um BiCMOS SiGe

- SBC18HX – 0.18um BiCMOS SiGe

- SBC18HA – 0.18um BiCMOS SiGe

- SBC18HK – 0.18um BiCMOS SiGe

- SBC35QTA – 0.35um BiCMOS SiGe

- SBC35QTS – 0.35um BiCMOS SiGe

- plus some legacy PDKs

- and if your PDK is not here they can build it

What counts as a state-of-the-art process depends on the application. For RF and analog, the leading edge is 0.18um and 0.13um; nobody is trying to do those sorts of designs in 16nm FinFET.

As an example, Ultra Comm has developed advanced fiber optic transceiver chips that operate from 10Mbps to 12.5Gbps per channel in a 4Tx/4Rx format, incorporated in Ultra Comm’s X80-QFN transceivers. They are built in Jazz 0.18um SiGe and designed with Silvaco tools.

Ultra Comm also developed the world’s first integrated Fiber Fault Detection circuit, which performs on-demand Optical Time Domain Reflectometry measurements to 1cm resolution and is embedded in Ultra Comm X80-QFN transceivers. Again, it is built in 0.18um SiGe and designed with Silvaco tools. And there are more designs in the funnel.
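For readers unfamiliar with OTDR: the instrument times the echo of a light pulse reflected or backscattered along the fiber, and since light travels out and back, the distance to an event is z = c·t / (2n), where n is the fiber’s group index. The sketch below uses only that textbook relation to show what 1cm resolution implies for timing. It assumes a group index of about 1.47, a typical value for silica fiber and not a figure from Ultra Comm, and it says nothing about how Ultra Comm’s circuit actually works; the function names are hypothetical.

```python
# Speed of light in vacuum, m/s.
C_VACUUM = 299_792_458.0
# Assumed group index of silica fiber (~1.47); an illustrative value,
# not a number from Ultra Comm.
GROUP_INDEX = 1.47


def distance_from_round_trip(t_seconds: float, n: float = GROUP_INDEX) -> float:
    """Distance to a reflection given the round-trip time of its echo."""
    return C_VACUUM * t_seconds / (2.0 * n)


def timing_for_resolution(dz_meters: float, n: float = GROUP_INDEX) -> float:
    """Round-trip timing resolution needed to separate two events dz apart."""
    return 2.0 * n * dz_meters / C_VACUUM


dt = timing_for_resolution(0.01)  # 1 cm target resolution
print(f"Required timing resolution: {dt * 1e12:.1f} ps")
```

Under those assumptions, 1cm corresponds to roughly 98 ps of round-trip timing resolution, which gives a feel for the precision the measurement implies.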

When I say they use Silvaco tools, I mean they use Silvaco CAD tools exclusively for all integration functions, due to ease of use, accuracy of the models and the cost structure. Not to mention that they have had 100% first-pass success on all chips designed using the Silvaco toolsuite on Jazz process technologies.

Another, even sexier project is Chronicle Technology, which is designing the sensor for the Naval Research Laboratory’s Solar Orbiter Heliospheric Imager (SoloHI) program using Silvaco EDA tools and TowerJazz manufacturing. They are building a 4Kx4K advanced pixel sensor array using CMOS technology. It will be used to image the sun from a spacecraft that flies between the orbits of Mercury and Venus. The spacecraft is covered in heat shields to keep everything cool enough to function, and its antenna has to be hidden behind the spacecraft too, to keep it from becoming too hot.

A full list of all Silvaco PDKs (not just for TowerJazz) is here.