Traditionally, logic built from thin-film transistors (TFTs) has used a single device type, either an NMOS a-Si:H (hydrogenated amorphous silicon) TFT or a PMOS organic device. Recently, a-Si:H NMOS TFTs and pentacene PMOS TFTs have been integrated into complementary logic structures similar to CMOS. This, in turn, raises the problem of how to model and simulate these structures.

This is a special case of something that Silvaco does all the time, since it has a full line of TCAD products along with modeling and circuit simulation tools. The basic idea is to use Silvaco's Athena (process) and Atlas (device) TCAD tools to simulate the process used to build the TFT devices and the electrical behavior of the resulting devices. The simulation output is then converted into the Utmost IV data format. From there, models can be extracted and used in circuit simulation to predict performance. The TCAD tools close the gap between the technology development (TD) process engineers and the circuit designers, two worlds with very different knowledge bases.

TFT circuits built in an all-NMOS (or all-PMOS) topology have a large static power dissipation because there is a direct path from supply to ground, just as in the days of NMOS and HMOS process technologies before the world went completely CMOS for logic. This power dissipation means that such circuits cannot be used in battery-operated portable systems. So, just as with CMOS, we can integrate an a-Si:H NMOS TFT with a pentacene PMOS TFT in a complementary structure to form a hybrid inverter circuit. The TCAD data is converted to Utmost IV format, and model extraction is then done in Utmost IV. For the pentacene-based PMOS TFT, a UOTFT model was used; for the NMOS a-Si:H TFT, an RPI a-Si TFT model was used. The extracted SPICE models are then used to simulate the hybrid inverter circuit and a ring oscillator containing five of these inverters.
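To make the static power argument concrete, here is a rough back-of-the-envelope sketch in Python. It is not taken from the white paper: the supply voltage, resistances, and leakage current are hypothetical placeholders, and both inverters are reduced to simple resistive models. The single-type inverter is treated as a load resistance in series with the driver's on-resistance while the output is low, and the complementary inverter is assumed to draw only a small leakage current in either logic state.

```python
# Rough comparison of static power in a single-type (ratioed) inverter
# versus a complementary inverter. All numbers are hypothetical
# placeholders, not values from the white paper.

def ratioed_static_power(vdd, r_load, r_on):
    """Static power (W) while the output is low: the load and the
    conducting driver form a direct resistive path from supply to ground."""
    return vdd ** 2 / (r_load + r_on)

def complementary_static_power(vdd, i_leak):
    """Static power (W) when one device is always off, so only
    leakage current flows from supply to ground."""
    return vdd * i_leak

VDD = 20.0       # supply voltage in volts (assumed)
R_LOAD = 50e6    # load resistance in ohms (assumed)
R_ON = 5e6       # driver on-resistance in ohms (assumed)
I_LEAK = 1e-12   # off-state leakage in amps (assumed)

p_ratioed = ratioed_static_power(VDD, R_LOAD, R_ON)
p_comp = complementary_static_power(VDD, I_LEAK)

print(f"ratioed inverter:       {p_ratioed:.3e} W")
print(f"complementary inverter: {p_comp:.3e} W")
```

With these assumed numbers the ratioed inverter burns several orders of magnitude more static power than the complementary one, which is the qualitative point of the paragraph above. Real TFTs are far from ideal resistors, so the actual ratio has to come from the extracted SPICE models.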

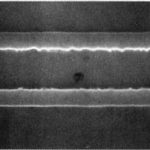

And yes, the ring oscillator oscillates:

So that is a lot of buzzwords and initials, but the important ideas are fairly simple. TCAD simulation of these novel devices was done and the output data was converted to Utmost IV format. Using this data, SPICE models for the a-Si TFT (level=35) and the organic TFT (UOTFT model, level=37) were extracted and used to successfully simulate a five-stage ring oscillator built from the hybrid inverter. Basically, starting from details of the process, SPICE models are automatically generated and then used for circuit simulation and analysis.
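As a sanity check on a ring oscillator simulation, the oscillation frequency can be estimated from the average stage delay with the standard first-order relation f = 1 / (2 N t_pd), where N is the (odd) number of inverter stages. The sketch below applies it to a five-stage ring. The stage delay is a hypothetical placeholder, not a number from the white paper, and the function name is only illustrative.

```python
# First-order ring oscillator frequency estimate: f = 1 / (2 * N * t_pd).
# The stage delay below is a hypothetical placeholder; in practice it
# would come from simulating the inverter with the extracted models.

def ring_oscillator_frequency(n_stages, t_pd):
    """Return the estimated oscillation frequency in Hz.

    n_stages: number of inverters in the ring (must be odd and >= 3)
    t_pd:     average propagation delay per stage, in seconds
    """
    if n_stages < 3 or n_stages % 2 == 0:
        raise ValueError("a ring oscillator needs an odd number of stages >= 3")
    if t_pd <= 0:
        raise ValueError("propagation delay must be positive")
    # One full period is two trips around the ring (rising and falling edges).
    return 1.0 / (2 * n_stages * t_pd)

N_STAGES = 5     # five-stage ring, as in the article
T_PD = 10e-6     # assumed average stage delay in seconds (hypothetical)

freq = ring_oscillator_frequency(N_STAGES, T_PD)
print(f"estimated frequency: {freq:.1f} Hz")
```

The relation assumes all stages have the same delay and ignores loading differences, so it is best used as a rough cross-check against the period measured from the simulated waveform.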

The full white paper is available on the Silvaco website here.