iDRM (integrated design rule manager) from Sage-DA is the world’s first and only design rule compiler. It is used to develop and capture design rules graphically, so non-programmers can quickly capture very complex, shape-dependent design rules and immediately generate a check for them. The tool can also be used for layout profiling: it detects every instance of a design rule or pattern, measures all of its relevant distances, and reports complete information on every such instance in the design.

Here we describe a slightly different use case for iDRM: searching for specific layout configurations. Using the iDRM GUI, users can quickly draw and capture the layout structures or configurations they are interested in. These can be quite complex, involve many layers and polygons, and can also include connectivity information.

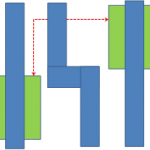

Let’s take a look at a simple example. Say you want to look for the following layout configuration:

A Z-shaped polygon on the blue layer that has, on each side, two parallel blue lines crossing diagonally situated green polygons, where the two inner parts of the green layer are electrically connected.

Fig 1: drawing a specific layout configuration to search for

There are no specific dimensions here, so pattern matching tools cannot be used in this case, but for iDRM this is a quick and easy task. You simply draw the above configuration in the iDRM GUI, exactly as it is drawn here, and click the FIND button.
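To make the distinction concrete, here is a minimal sketch of why a relation-based search can match where a dimension-based comparison cannot. It is not iDRM's algorithm or API. The `Rect` class, the `crosses` predicate, and all coordinates are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle on a named layer (hypothetical model)."""
    layer: str
    x1: float
    y1: float
    x2: float
    y2: float

def size(r: Rect) -> tuple:
    """Width and height, which is what a dimension-exact comparison looks at."""
    return (r.x2 - r.x1, r.y2 - r.y1)

def crosses(line: Rect, poly: Rect) -> bool:
    """True if a vertical line overlaps poly in x and extends past it
    both below and above. No absolute dimension is involved."""
    overlaps_in_x = line.x1 < poly.x2 and line.x2 > poly.x1
    sticks_out = line.y1 < poly.y1 and line.y2 > poly.y2
    return overlaps_in_x and sticks_out

# Two made-up instances of the same configuration at different sizes.
small_line = Rect("blue", 2, 0, 3, 10)
small_poly = Rect("green", 0, 4, 5, 6)
big_line = Rect("blue", 20, 0, 24, 100)
big_poly = Rect("green", 10, 30, 60, 50)

# The relation holds for both instances...
relational = [crosses(small_line, small_poly), crosses(big_line, big_poly)]
# ...while comparing exact dimensions treats them as different patterns.
same_dims = (size(small_line) == size(big_line)
             and size(small_poly) == size(big_poly))
```

Both instances satisfy the "line crosses polygon" relation even though none of their widths or heights agree, which is the situation the drawn configuration above describes.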

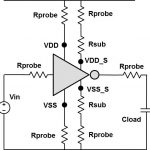

iDRM will search your layout database and find every layout instance that meets these criteria. Furthermore, if you also add measurement variables to your drawing, e.g. the spacings and widths defined by A, B, C, D, E (see figure below), iDRM will measure them for you and display the results for every instance it finds. iDRM can then create tables or histograms that count these results, and you can view each instance in the iDRM layout viewer.

Once the dimensions are found, the user can choose to limit the search by adding specific measurement values or ranges of values as qualifiers to the search.

Fig 2: adding measurements to the layout configuration
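The measure, tabulate, and qualify flow can be pictured with a short sketch. This is not iDRM output or an iDRM API. The record layout, the function names `histogram` and `qualify`, and every number below are assumptions made up for illustration, with values in arbitrary units.

```python
from collections import Counter

# Hypothetical results: one record per found instance, keyed by the
# measurement variables from the drawing (A..E). Values are invented.
instances = [
    {"A": 40, "B": 60, "C": 40, "D": 80, "E": 50},
    {"A": 45, "B": 60, "C": 42, "D": 80, "E": 50},
    {"A": 40, "B": 70, "C": 40, "D": 90, "E": 55},
    {"A": 50, "B": 60, "C": 44, "D": 80, "E": 50},
]

def histogram(records, variable):
    """Count how many instances share each measured value of `variable`."""
    return Counter(rec[variable] for rec in records)

def qualify(records, ranges):
    """Keep only records whose values fall inside every inclusive
    (low, high) range given in `ranges`."""
    return [
        rec for rec in records
        if all(low <= rec[var] <= high for var, (low, high) in ranges.items())
    ]

# Distribution of A across all found instances.
hist_a = histogram(instances, "A")

# Narrow the search: A between 40 and 45, and B exactly 60.
narrowed = qualify(instances, {"A": (40, 45), "B": (60, 60)})
```

Looking at the histogram first and then choosing the ranges mirrors the order described above: the dimensions are found first, and the qualifiers are added afterwards to limit the search.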

Use cases: Circuit, layout, yield, reliability and … IP protection

This functionality is useful for circuit and layout designers who are looking for circuits laid out in a specific way, and for yield engineers who suspect that certain configurations are sensitive to yield or reliability issues. Another, slightly different application is checking for the use of protected IP: where specific layout or circuit techniques are protected by patent, the user can find out whether such configurations appear in a design database.

