At the SPIE Advanced Lithography conference I sat down with Mike Lercel, Director of Strategic Marketing at ASML, for an update. ASML also presented several papers at the conference and I attended many of these. In this article, I will discuss my interview with Mike and summarize the ASML presentations.


Making Cars Smarter And Safer

The news media has naturally focused on the handful of deaths that have occurred while auto-pilot features have been enabled. In reality, automobile deaths are occurring at a lower rate now than ever. In 2014 the rate was 1.08 deaths per 100 million miles driven. Compare that to 5.06 per 100M miles in 1960, or a whopping 24.09 in 1921 – the first year there were reliable data on miles driven. Still, around 32,000 deaths per year in the US is a horrific number.

Autonomous driving promises to do a lot to further reduce these numbers. Automated systems containing electronics have contributed to reducing deaths. The airbag system contains accelerometers and controller circuitry to trigger deployment. Anti-lock brakes and anti-skid controls have certainly been effective in reducing accident rates or the severity of injuries. However, semi and fully autonomous driving could help eliminate the most frequent cause of injury accidents – human error. Per the NHTSA, 94% of auto accidents can be tied back to human choice or error.

The one big assumption made for autopilot systems is that they themselves do not suffer from ‘driver error’ – or in other words any kind of failure. Of course, no system can be made error proof. However, much can be done to design them to drastically reduce the likelihood of an error occurring. Going back to the 1970’s, the space shuttle relied on redundant systems and a voting mechanism to ensure no failures affected the mission. They had five computers to enable this. Four of them performed identical calculations and they voted to ensure the correct result. The fifth was a no-frills backup in case the fully configured systems failed.
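To make the voting idea concrete, here is a minimal sketch of redundant channels with a majority voter and a simple backup. It is only an illustration of the general technique, not the shuttle's actual software; the names `majority_vote` and `redundant_compute` are made up for this example.

```python
from collections import Counter


def majority_vote(results):
    """Return the value a strict majority of channels agree on, else None."""
    if not results:
        return None
    value, count = Counter(results).most_common(1)[0]
    # A strict majority is required, so a 2-2 split among four channels fails.
    return value if count > len(results) / 2 else None


def redundant_compute(primaries, backup, x):
    """Run every primary channel on x, vote, and use the backup if no majority."""
    outputs = [channel(x) for channel in primaries]
    agreed = majority_vote(outputs)
    return agreed if agreed is not None else backup(x)


def good(x):
    """A healthy channel (hypothetical computation)."""
    return 2 * x


def faulty(x):
    """A channel with an injected fault."""
    return 2 * x + 1


if __name__ == "__main__":
    # One faulty channel out of four is simply outvoted.
    print(redundant_compute([good, good, good, faulty], good, 21))
```

With four primaries, one bad channel is outvoted; if the primaries split evenly, the sketch hands the decision to the backup channel.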

Today, for commercial and consumer products, having a four or five-fold redundancy would be prohibitive. Even two-fold redundancy would present a competitive disadvantage. Let’s probe deeper into the control systems for autonomous driving. Neural networks are the core of autopilot systems. They rely heavily on hardware accelerators to perform compute intensive operations. In these systems, there is a combined need for functional safety, super-computer complexity and near real-time latency. See below for block diagrams of several leading auto-pilot compute systems.

The relevant standard for automotive functional safety is ISO 26262. It deals with every level of the supply chain. Functional safety risks include both random and systematic faults, and the systems it covers deal with accident prevention (Active) and accident mitigation (Passive). For a higher-level unit or system to function properly, all its components, mechanical, hardware and software, must also adhere to the same safety process. Each identified potential failure has an Automotive Safety Integrity Level (ASIL) assigned to it based on the severity of the expected loss, the probability of the failure and the degree to which it may be controllable if it occurs. ASIL is quite different from the more common Safety Integrity Level (SIL) used for other applications. ASIL relies on more subjective and comprehensive metrics.
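As a rough illustration of how the three factors combine, the sketch below uses the widely quoted "sum of classes" shortcut for the ASIL determination table, with severity classes S1 to S3, exposure (probability) classes E1 to E4 and controllability classes C1 to C3. The function name `asil` is my own, and the shortcut is an assumption to be checked against the standard itself before relying on it.

```python
def asil(severity, exposure, controllability):
    """Map S1-S3, E1-E4, C1-C3 class numbers to an ASIL letter or 'QM'.

    Uses the common shortcut: add the three class numbers, then
    7 -> A, 8 -> B, 9 -> C, 10 -> D, anything lower -> QM
    (quality management only, no ASIL requirement).
    """
    if not (1 <= severity <= 3):
        raise ValueError("severity class must be 1..3")
    if not (1 <= exposure <= 4):
        raise ValueError("exposure class must be 1..4")
    if not (1 <= controllability <= 3):
        raise ValueError("controllability class must be 1..3")
    total = severity + exposure + controllability
    return {7: "A", 8: "B", 9: "C", 10: "D"}.get(total, "QM")


if __name__ == "__main__":
    # Worst case on all three axes versus a mild, rare, controllable fault.
    print(asil(3, 4, 3), asil(1, 1, 1))
```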

Avoiding ASIL B faults, as a rule of thumb, can be accomplished with fault detection such as ECC/parity and software measures. Again, this is for faults that are associated with low levels of loss, or that can be easily recovered from. ASIL D, on the other end of the spectrum, calls for space shuttle levels of protection. Common techniques for these faults involve duplication of key logic – albeit a costly undertaking.
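For readers who have not met parity before, here is a minimal sketch of the detection idea: store one extra bit per word and recompute it on read. This shows plain even parity only, which detects a single flipped bit but cannot correct it (ECC adds more check bits to do that). The helper names are hypothetical.

```python
def parity_bit(word):
    """Even-parity bit: 1 if the word has an odd number of 1 bits, else 0."""
    return bin(word).count("1") % 2


def check(word, stored_parity):
    """Return True if the word still matches the parity stored with it."""
    return parity_bit(word) == stored_parity


if __name__ == "__main__":
    data = 0b10110010
    stored = parity_bit(data)          # computed when the word is written
    corrupted = data ^ (1 << 3)        # a single bit flips in storage
    print(check(data, stored), check(corrupted, stored))
```

Note that two flipped bits cancel out and go unnoticed, which is one reason parity alone is reserved for the less severe fault classes.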

Recently, at the Linley Autonomous Hardware Conference in Santa Clara, Arteris announced an innovative solution for improving the reliability of ISO 26262 systems at the hardware level. As an alternative to duplicating all the elements of critical hardware to ensure reliable operation, with their Ncore 2.0 and Ncore Resilience Package it is possible to improve the reliability of the existing memories and data links. This means that only hardware that affects the contents of data packets needs to be duplicated. The Ncore Resilience Package internally uses integrated checkers, ECC/parity and buffers to provide reliable and resilient data transport in SoCs. This goes a long way toward helping system designers and architects meet the requirements of ISO 26262.

One of the advantages of this approach is that system software is simplified. Ncore is scalable so there is more flexibility, including the ability to add non-coherent elements and make them present a coherent interface to the SoC by using Ncore Proxy Caches. Building functional safety into on-chip networking makes complete sense in the context of automotive safety and reliability. More information about Arteris Ncore and Ncore Resilience Package is available on their website.

An Ultra-Low Voltage CPU

A continuing challenge for large scale deployment of IoT devices is the need to minimize service/cost by extending battery life to decades. At these lifetimes, devices become effectively disposable (OK – a new recycling challenge) and maintenance may amount to no more than replacing a dead unit with a new unit. Getting to these levels requires effort to manage dynamic, leakage and sensor power consumption. Managing leakage has been covered in many articles on FinFET and FDSOI technologies and discussions on aggressive power switching, though this challenge is not as acute in legacy processes. I wrote recently about advances in managing sensor power where at least for some types of sensing, standby power can be reduced to zero.

That leaves dynamic power (the power the system burns when it is doing something other than sleeping) as the primary contributor to battery drain. Dynamic power varies with the square of the operating voltage, so reducing voltage has a major impact. We’re used to seeing modern devices running at 1 volt, in some cases at 0.8 volts and even at 0.6 volts. It’s easy to see why; at 0.6 volts dynamic power should be only 36% of the power consumed at 1 volt. So why not reduce the voltage to 0.1 volts or even lower? That isn’t so easy; digital circuits depend on transistors switching between a ‘0’ state and a ‘1’ state. The normal way they do this is to switch between a definitely-off (grounded) state and a definitely-on (saturated) state. But at very low voltages there isn’t enough voltage swing to get up to the saturated state; you can only get part way up the curve.
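The square-law arithmetic is easy to check for yourself. The sketch below assumes everything else (switched capacitance, activity and clock frequency) is held constant, which is a simplification since frequency usually has to drop as voltage drops. The function name is just for illustration.

```python
def relative_dynamic_power(voltage, reference_voltage=1.0):
    """Dynamic power relative to the reference, assuming power scales as V squared."""
    return (voltage / reference_voltage) ** 2


if __name__ == "__main__":
    # Supply voltages mentioned in the text, compared against 1 volt.
    for volts in (1.0, 0.8, 0.6, 0.1):
        ratio = relative_dynamic_power(volts)
        print(f"{volts:.1f} V -> {ratio:.0%} of the 1 V dynamic power")
```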

Of course getting part way up can still be enough to effectively switch, as long as the level you get to is sufficient to switch the next gate in the chain. But there’s a problem. Variabilities in process, temperature and other environmental factors make it difficult to exactly control the voltage swing for each transistor or how quickly the next transistor will respond. As these variations accumulate it can be very challenging to have a circuit operate reliably at very low voltages.

This is where EtaCompute comes in. I think they can safely claim without contradiction that they have developed the world’s lowest power microcontroller IP, since they can operate these as low as 0.25 volts. This might be mildly interesting (do we really need more processors?) were it not for the fact that they have built their IP based on ARM M0+ and M3 cores, in partnership with ARM. They are very cagey about how they make this work, mentioning only that they use self-timed technology and dynamic voltage scaling (DVS) and they say that this is insensitive to process variations, inaccurate device models and path delay variations. 0.25-volt operation has evidently been demonstrated in a 90LP process. They also offer supporting low voltage IP including a real-time clock, AES encryption, an ADC, a DSP and a PMIC to control DVS.

At these low levels of dynamic power consumption and operating in legacy low-power processes where leakage is presumably not a concern, they assert devices built around this logic can comfortably operate in always-on/always-aware mode (which should be easier to manage and lower cost), driven by small coin-cell batteries and energy harvested through e.g. solar power.

The company is quite new. They were founded in 2015 by co-founders and early execs at Inphi, are based in LA and have raised ~$4.5M so far. They have very credible backing through their partnership with ARM, and some of the investment comes from Walden International (Lip-Bu Tan). Good idea, good backing, should be an interesting company to watch.

You can visit the website HERE.

The Importance of EM, IR and Thermal Analysis for IC Design – Webinar

Designing an IC has both a logical and physical aspect to it, so while the logic in your next chip may be bug-free and meet the spec, how do you know if the physical layout will be reliable in terms of EM (electro-migration), IR (voltage drops) and thermal issues? EDA software once again comes to our rescue to perform the specific type of reliability analysis required to ensure that these physical issues are well understood, and to be gate-keepers before silicon tape-out occurs. So when should you start running this type of analysis, and how often do you need to run it? Great questions, and fortunately for us there’s a webinar on this specific topic from Silvaco.

Webinar Overview

This webinar introduces the best practices for ensuring robustness and ease-of-use in performing power, EM and IR drop analysis on various types of IC designs early in the design cycle using simple and minimalistic input data. Using industry-standard input and output file formats, power integrity analysis will be demonstrated early in the design cycle as well as at the sign-off, tape-out stage. We will show how to find and fix issues that are not detectable with regular DRC/LVS checks like missing vias, isolated metal shapes, inconsistent labeling, and detour routing. InVar Prime has been used to verify a broad range of designs including processors, wired and wireless network ICs, power ICs, sensors and displays.

Presenter

Kim Nguyen is a Senior Applications Engineer for Silvaco specializing in physical design and physical verification. Prior to joining Silvaco, Kim led physical design and tape-out teams at Intel Corporation.

He has also held key back-end applications engineering roles for various EDA companies.

Who Should Attend

Physical design and physical verification engineers who work on reliability verification, EM/IR, thermal and power analysis.

When: April 20, 2017

Time: 10AM – 11AM, PDT

Language: English

I will be attending this webinar and blogging about it in more detail, so look forward to another post next week with the details.

About Silvaco, Inc.

Silvaco, Inc. is a leading EDA provider of software tools used for process and device development and for analog/mixed-signal, power IC and memory design. Silvaco delivers a full TCAD-to-signoff flow for vertical markets including: displays, power electronics, optical devices, radiation and soft error reliability and advanced CMOS process and IP development. For over 30 years, Silvaco has enabled its customers to bring superior products to market at reduced cost and in the shortest time. The company is headquartered in Santa Clara, California and has a global presence with offices located in North America, Europe, Japan and Asia.

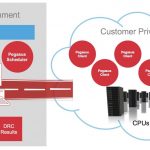

A New Product for DRC and LVS that Lives in the Cloud

Back in the day the Dracula tool from Cadence was king of the DRC and LVS world for physical IC verification; more recently, however, we’ve seen Calibre from Mentor Graphics as the leader in this realm. Cadence wanted to reclaim their earlier prominence in physical verification, so they had to come out with something different to meet the ever-increasing challenges:

- 10/7nm – 7,000 DRC rules, 40,000 operations

- 16nm DRC signoff – more than 4 days to run

- Poor scalability with physical verification tools

Instead of acquiring a start-up, management at Cadence decided to have engineering develop a new DRC/LVS tool from scratch to meet these challenges, and they named the new product Pegasus. If you’ve been keeping track of recent Cadence products there’s a common naming theme: Voltus, Innovus, Genus, Modus, Stratus, Tempus. Some non-conforming new product names: Xcelium, Joules, Indago.

Other DRC/LVS tools use multi-threading, but they really aren’t scaling well beyond a few hundred CPUs. Pegasus differs in three big ways, described below.

Cadence’s 2nd generation DRC/LVS tools were Assura and PVS, which handled hierarchy and used multi-threading, and that worked OK in terms of turnaround time for many process nodes. With Pegasus you can expect to get runs back about 10X faster than with PVS, and it accomplishes this using three technologies:

- Stream processing – don’t wait to read in entire GDSII before starting to run DRC/LVS

- Data flow architecture – scales well up to 960 cores

- Massively Parallel Pipelined infrastructure – customer private cloud

What this means to the end user is that DRC runs that used to take days are now completed in hours. Companies like Google made stream processing famous in their search engine approach, Facebook is the most well-known company to use a data flow architecture, and finally Amazon has mastered cloud computing. So Cadence took their new product development ideas for Pegasus from outside of traditional EDA thinking.

Related blog – Simulation Done Faster

With the old approach of multi-threading you needed to have a huge master machine, while with Pegasus you don’t need that any more and the Pegasus scheduler can set up 1,000,000 separate threads for use by all nodes in your cloud.

So just how fast can you expect to get results from Pegasus compared to PVS? The following chart shows three customer designs run on 360 cores:

How about scalability? This next chart shows a couple of designs run through Pegasus using 160, 320 and 640 CPUs:

Cadence customers using Virtuoso or Innovus will be pleased to learn that Pegasus works natively with each tool, and benefits to Virtuoso users of Pegasus include:

- In memory integration, no stream out and stream in

- Dynamically detect the creation, editing and deletion of objects

- Instantaneous DRC checks

- Uses the standard foundry-certified PVS deck

Related blog – Making Functional Simulation Faster with a Parallel Approach

I asked Christen Decoin at Cadence about repeatability and rotated designs, and he assured me that results are consistent between runs and it doesn’t matter if you rotate the layout.

Summary

The EDA world never remains constant; there are always new challengers for each tool category, and the team at Cadence has achieved something quite noteworthy with the introduction of Pegasus for SoC designers who cannot afford to wait 4 days or more for their DRC runs to get through sign-off. Even if you only need to get blocks pushed through DRC faster, any new tool that promises a 10X improvement is certainly worth looking into. Texas Instruments and Microsemi have talked publicly about using Pegasus for their DRC/LVS tool.

The marketing folks at Cadence even got a bit artistic with their graphics for Pegasus and the tagline: Let Your DRC Fly

Live from the TSMC Earnings Call!

Last week I was invited to attend the TSMC earnings call at the Shangri-la Hotel in Taipei which was QUITE the experience. I generally listen in on the calls and/or read the transcripts but this was the first one I attended live. I didn’t really know what to expect but I certainly did NOT expect something out of Hollywood. Seriously, there were photographers everywhere taking hundreds of pictures. I was sitting front row center and as soon as the TSMC executives sat down there was a rush of paparazzi and the clicking sounds were deafening. It was a clear reminder of how important TSMC is in Taiwan, and the rest of the world for that matter.

The most interesting news for the day was that 10nm is progressing as planned with HVM in the second half of this year. In fact, 10nm should account for 10% of TSMC wafer revenue this year (Apple). There had been rumors that foundry 10nm was in trouble (fake news) but clearly that is not the case for TSMC. In fact, according to C. C. Wei:

Although N10 technology is very challenging, the yield learning progression has been the fastest as compared to the previous node such as the 20- and 16-nanometer. Our current N10 yield progress is slightly ahead of schedule. The ramp of N10 will be very fast in the second half of this year.

C.C. also gave an encouraging 7nm update:

TSMC N7 will enter risk production in second quarter this year. So far, we have more than 30 customers actively engaged in N7. And we expect about 15 tape-outs in this year with volume production in 2018. In just 1 year after our launch of N7, we plan to introduce N7+ in 2018. N7+ will leverage EUV technology for a few critical layers to save more immersion layers. In addition to process simplification, our N7+ provides better transistor performance by about 10% and reduces the chip size by up to 10% when compared with the N7. High volume production of N7+ is expected in second half 2018 — I’m sorry, in second half of 2019. Right now, our focus on EUV include power source stability, pellicle for EUV mask and stability of the photoresist. We continue to work with ASML to improve the tool productivity so that it can be ready for mass production on schedule.

And last but not least 5nm:

We have been working with major customers to define 5-nanometer specs and to develop technology to support customers’ risk production schedule in second quarter 2019, with volume ramp in 2020. Functional SRAM in our test vehicle has already been established. We plan to use more layers of EUV in N5 as compared to N7+.

The other interesting technology update was InFO:

First, we expect InFO revenue in 2017 will be about USD 500 million. Now we are engaging with multiple customers to develop next-generation InFO technology for smartphone application for their 2018, 2019 models. We are also developing various InFO technologies to extend the application into high-performance computing area, such as InFO on substrate, and we call it InFOoS; and InFO with memory on substrate, InFO-MS. These technologies will be ready by third quarter this year or first quarter next year.

If I remember correctly, InFO contributed $100M last year (Apple) so this is great progress. By the way, now that I have seen the facial expressions that go with the voices during the Q&A I can tell you that C.C. has a very quick wit. I had pity for the analysts who tried to trip up C.C. and get inappropriate responses from him.

Mark Liu talked about ubiquitous computing and AI, which reminded me why TSMC is in the dominant position they are today. As a pure-play foundry TSMC makes chips for all applications and devices. Ubiquitous means that computing can appear anytime and anywhere, so all of those mobile devices TSMC has enabled over the past 30 years will continue to evolve, making the TSMC ecosystem worth its weight in silicon.

I also have a new perspective on the analysts that participate in the Q&A after sitting amongst them. I have no idea how much they get paid for what they do but I’m pretty sure it is too much.

Here is my favorite answer for Q1 2017:

Michael Chou (Deutsche Bank AG, Research Division – Semiconductor Analyst): Okay, the next question, sir, management mentioned the log scale comparison versus Intel, I think, the 2014, right? So since Intel came out to say that their technology seems to be 3 year ahead of the other competitor, including your company, so do you have any comment on your minimum metal pitch and the gate pitch comparison versus Intel? Or do you have any comment for your 5-nanometer versus Intel 10-nanometer, potential 7-nanometer?

C. C. Wei (Taiwan Semiconductor Manufacturing Company Limited – Co-CEO and President): Well, that’s a tough question. I think every company, right now, they have their own philosophy developing the next generations of technology. As I reported in the foundry, we work with our customer to define the specs that can fit their product well. So the minimum pitch to define the technology node, we are compatible to the market. But the most important is that we are offering the best solution to our customers’ product roadmap. And that’s what we care for. So I don’t compare that really what is the minimum pitch to define the technology node.

Absolutely!

A PDF of the meeting is HERE. The presentation materials are HERE. I have pages of notes from the event and the trip in general, so let’s talk more in the comments section and make these analysts green with envy!

How to Implement a Secure IoT system on ARMv8-M

This weekend my old garage door opener started to fail, so it was time to shop for a new one at The Home Depot, and much to my surprise I found that Chamberlain offered a Smartphone-controlled WiFi system. Just think of that, controlling my garage door with a Smartphone, but then again the question arose, “What happens when a hacker tries to break into my home via the connected garage door opener?” I opted for a Genie system without the WiFi connection, just to feel a bit safer. Connected devices are only growing more prominent in our daily life, so I wanted to hear more about this topic from ARM and attended their recent webinar, “How to Implement a Secure IoT system on ARMv8-M.”

ARM has been designing processors for decades now, and has come to realize that security is best approached from a systems perspective that involves the SoC hardware and software together. The result is ARM TrustZone: hardware-based security designed into the SoC that provides secure end points and a device root of trust. This webinar focused on the Cortex-M33 and Cortex-M23 embedded cores shown below in Purple:

Most IoT systems could use a single M33 core, although you can easily use two of these cores for greater flexibility and even power savings. Cores and peripheral IP communicate using the AHB5 interconnect. Security is controlled by mapping addresses with something called the Implementation Defined Attribution Unit (IDAU). This system also filters incoming memory accesses at a slave level as shown in the system diagram:

That diagram may look a bit complex, however ARM has bundled most of this IP together along with the mbed OS with pre-built libraries and called it the CoreLink SSE-200 subsystem which is pre-verified, saving you loads of engineering development time.

With TrustZone you have a system that contains both trusted and un-trusted domains, then memory addresses are sorted to verify that they are in a trusted range or not.

Let’s say that you like the security approach from ARM and want to get started on your next IoT project. What options are available? ARM has built up a prototyping system using FPGA technology called the MPS2+ along with IoT kits that include the Cortex-M33 and Cortex-M23, plus there’s debugging support through the Keil MDK tools. You can also use a Fixed Virtual Platform (FVP) which uses software models for simulation.

One decision that you make for your IoT device is the memory map, splitting it into secure and non-secure addresses using a Security Attribution Unit (SAU) together with the IDAU. There are even configuration wizards available to let you quickly define the start and end address regions.
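To show what "splitting the memory map" means in practice, here is a small software model of the idea: a table of address regions, each marked secure or non-secure, and a check on every access. This is a sketch only. The addresses are hypothetical, the model collapses the SAU and IDAU into a single table, and it ignores details of the real hardware such as non-secure callable regions.

```python
from typing import NamedTuple


class Region(NamedTuple):
    start: int      # first address in the region
    end: int        # last address in the region (inclusive)
    secure: bool    # True if only secure code may access it


# Hypothetical memory map, for illustration only.
MEMORY_MAP = [
    Region(0x0000_0000, 0x0FFF_FFFF, False),  # non-secure flash
    Region(0x1000_0000, 0x1FFF_FFFF, True),   # secure flash
    Region(0x2000_0000, 0x2FFF_FFFF, False),  # non-secure SRAM
    Region(0x3000_0000, 0x3FFF_FFFF, True),   # secure SRAM
]


def is_secure(address, memory_map=MEMORY_MAP):
    """Look up the attribute for an address; unmapped addresses default to secure."""
    for region in memory_map:
        if region.start <= address <= region.end:
            return region.secure
    return True


def access_allowed(address, caller_is_secure, memory_map=MEMORY_MAP):
    """Secure code may touch anything; non-secure code only non-secure addresses."""
    return caller_is_secure or not is_secure(address, memory_map)


if __name__ == "__main__":
    # Non-secure code reading secure SRAM is rejected in this model.
    print(access_allowed(0x3000_0100, caller_is_secure=False))
```

Defaulting unmapped addresses to secure is a deliberately conservative choice in this model: anything nobody has explicitly opened up stays closed to non-secure code.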

ARM has even created an open-source platform OS called mbed OS, just for the IoT market, already with some 200,000 developers. With the addition of this OS we now have three levels of security:

- Lifecycle security

- Communication security

- Device security

Related blog – IoT devices Designers Get Help from ARMv8-M Cores

Summary

It’s pretty evident that ARM has put a lot of effort into creating a family of processors, and when it comes to security they have assembled an impressive collection of cores, semiconductor IP, SDK, compiler, platform and debugger. What this means is that now I can more quickly create my secure IoT system with ARM technology, using fewer engineers, all at an affordable price.

There are even detailed virtual training courses coming up in April and May for just $99 each, providing more depth than the webinar alone.

IP Traffic Control

From an engineering point of view, IP is all about functionality, PPA, fitness for use and track record. From a business/management point of view there are other factors, just as critical, that relate less to what the IP is and more to its correct management and business obligations. The problems have different flavors depending on whether you are primarily a supplier of IP, primarily a consumer or if you are working in a collaborative development. To avoid turning this into a thesis, I’ll talk here just about issues relating to the design and royalty impact of the consumption of soft and hard IP, particularly when externally sourced.

A large modern design can contain hundreds of such IPs – CPUs, memories of various types, standard interfaces, bus fabrics, accelerators, security blocks, the list is endless. Each comes with one or more license agreements restricting allowed access and usage. These can become quite elaborate as supplier and consumer arm-wrestle to optimize their individual interests. Licenses can be time-bounded, geography-bounded, design and access bounded and there may be additional restrictions around ITAR. Moreover new agreements are struck all the time with new bounds which overlap or partially overlap with previous agreements. The result for any one IP can be a patchwork of permissions and restrictions on who can access, when they can access, what they can access and for what purpose.

Multiply this by potentially hundreds of sourced IP on a design, across multiple designs active in a company, across multiple locations and you have a management nightmare. This is not stuff a design team wants to worry about but you can see how easy it would be, despite best intentions, to violate agreements.

There’s another problem – IP is not static. At any given time, there can be multiple versions of an IP in play, addressing fixes or perhaps offering special-purpose variants. New versions of IP appear as a design is evolving which leads to questions of which versions you should be using when you’re signing off the design. These questions don’t always have easy answers. A latest fix may be “more ideal” by some measures but may create other problems for system or cross-family compatibility. There may be a sanctioned “best” version at any given time, but how do you check if your design meets that expectation prior to signoff?

What about all the internally-sourced IP? For most companies these provide a lot of their product differentiation. Thanks to years of M&A/consolidation they have rich sources of diverse IP, but built to equally diverse standards and expectations which may be significant when used in different parts of the company. Version management and suitability for use in your application are as much a concern as for externally sourced IP. And you shouldn’t think that internal IP is necessarily free of rights restrictions because it is internal; there may still be restrictions inherited through assignments from prior inventors.

Finally, once you are shipping, do you have accurate information to track royalty obligations? Again this is not always easy to figure out across complex and differing license agreements unless you have a reliable system in place to support assessing payments and, if necessary, to support litigation.

Navigating these thickets of restrictions and requirements is an IS problem requiring a professional solution. Getting this stuff wrong can end in lawsuits, possibly torpedoing a design or a business so this is no place for amateur efforts. Deployed on the collaborative 3DEXPERIENCE Platform, the Silicon Thinking Semiconductor IP Management solution delivers an enterprise level capability companies need to manage and effectively use their IP portfolios. The solution provides easy and secure global cataloging, vetting, and search capabilities across business units and partner and supplier networks. It also delivers essential IP governance tools, and enables full synchronization of IP management with issue, defect and change processes. It also provides royalty tracking and management capabilities to maximize profits while minimizing litigation risks.

You can get another perspective on these management aspects of IP traffic control HERE.

Also Read

IP Vendors: Call for Contribution to the Design IP Report!

The EDA & IP industry enjoys high growth for the Design IP segment, but a detailed analysis tool is missing. IPnest will address this need in 2017, and is counting on the IP vendors’ contribution! If we consider the results posted last March by the ESD Alliance, the EDA (and IP) industry is doing extremely well, as global revenue grew in Q4 2016 by 22% compared with Q4 2015! If we zoom in on the “Design IP” category, the 22% growth rate is in line with the industry, and the most significant information is the confirmation that “Design IP” is now the largest category. The “CAE” category was by far #1 for years, until 2015, as you can see on the graphic below.

When you see the slope of the Design IP curve in light blue during the last 3 to 4 years, you realize that the Design IP category will stay #1 in the future. As we all need facts, I have calculated the CAGR for 2010 to 2016: the Design IP category has grown with a 16% CAGR, while the next category (CAE) has grown with a 7% CAGR. All is good for Design IP, except that the industry lacks a detailed analysis tool, like the former “Design IP Report” released by Gartner up to 2015. IPnest will launch this type of report in April 2017, covering the Design IP market by category for 2015 and 2016.
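For anyone who wants to redo the CAGR arithmetic, here is a short sketch of the standard formula and its inverse. The revenue figures are not given here, so the example only shows what the two quoted growth rates imply over the six years from 2010 to 2016; the function names are my own.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values separated by `years`."""
    return (end_value / start_value) ** (1 / years) - 1


def growth_multiple(rate, years):
    """Total growth factor after compounding `rate` for `years`."""
    return (1 + rate) ** years


if __name__ == "__main__":
    years = 2016 - 2010
    # What the quoted 16% and 7% CAGRs imply as total growth over the period.
    for name, rate in (("Design IP", 0.16), ("CAE", 0.07)):
        print(f"{name}: x{growth_multiple(rate, years):.2f} over {years} years")
```

In other words, a 16% CAGR over six years means a category grew to a bit more than 2.4 times its starting size, while 7% means about 1.5 times.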

The obvious difference between the Design IP segment and any other EDA category is the business model, based on up-front licenses and royalties (even if only certain vendors ask for royalties). The royalty part of the revenues should be clearly identified, and two main categories defined: IP License and IP Royalty.

To better understand the Design IP market dynamic, we need to segment this market into categories:

- Microprocessor (CPU)

- Digital Signal Processing (DSP core)

- Graphic Processing (GPU)

- Wired Interface IP

- Wireless Communication IP

- SRAM Memory Compilers (Cells/Blocks)

- Others (OTP, MTP, Flash, XRAM) Memory Compilers

- Physical Library (Standard cell & I/O)

- General Purpose Analog & Mixed Signal

- Infrastructure IP (NoC, AMBA)

- Miscellaneous Digital IP

- Others…

You can download the pdf version of the Excel spreadsheet at the bottom of this article

… or contact me: eric.esteve@ip-nest.com

If we only consider the large, well-known IP vendors like ARM, Imagination, CEVA, Synopsys, Cadence or Rambus to measure the Design IP market, we will probably reach 80% of the effective market size. But the remaining 20% are made of a multitude of companies and some of them are very innovative, maybe designing the next big function that all the chip makers will integrate tomorrow. We need IP vendors to contribute by sharing their revenues, and we need all of them to participate!

Like the EDA market, the IP market’s history has been marked by acquisitions. Back in 2004, the acquisition of Artisan by ARM for almost $1 billion was like a thunderclap (Artisan revenue was about $100 million). Synopsys built its IP portfolio through the successive acquisitions of InSilicon, Cascade (PCI Express controller), Mosaid (DDRn memory controller), the Analog Business Group of MIPS Technologies and, the largest, Virage Logic in 2009 for $315 million (logic libraries and memory compilers). The “Design IP Report” can also be an efficient tool for the small IP vendors to get visibility and for the large ones to complement their portfolio through an acquisition…

Like EDA, the IP market is not monolithic, but made of various IP categories. Each category follows its own market dynamic and if you want to build an accurate IP market forecast, you will have to consider each category individually, project the specific evolution and finally consolidate and calculate the global IP market forecast. This approach works reasonably well. For example, for the Interface IP segment, made of various protocols (PCIe, USB, MIPI, and many more), IPnest started to build a 5-year forecast in 2009, and has done so every year since. We could measure the difference between the forecast and the actual results for the first time in 2014, and I am proud to say that the forecast was accurate within +/- 5%, and this error margin has stayed the same every year since.

If you, as an IP vendor, think that you need to benefit from an accurate report (the “Design IP Report”) and if you expect to see some accurate forecast of the IP market, you need to contribute and share your IP revenues!

Eric Esteve from IPnest

Don’t miss the “IP Paradox” panel during the DAC 2017, organized by Eric Esteve and moderated by Dan Nenni:

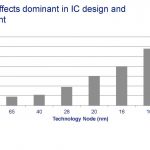

SPIE 2017 ASML and Cadence EUV impact on place and route

As feature sizes have shrunk, the semiconductor industry has moved from simple, single-exposure lithography solutions to increasingly complex resolution-enhancement techniques and multi-patterning. Where the design on a mask once matched the image that would be produced on the wafer, today the mask and resulting image often look completely different. In addition, the advent of multi-patterning has led to patterns being broken up into multiple colors, with each “color” being produced by a different mask and a single layer on a wafer requiring two to five masks to produce. Designs must be carefully optimized to ensure that the resulting wafer images are free of “hotspots” that can lead to low yields.
