At this year’s SNUG (Synopsys Users Group) conference, Richard Ho, Head of Hardware, OpenAI, delivered the second keynote, titled “Scaling Compute for the Age of Intelligence.” In his presentation, Richard guided the audience through the transformative trends and implications of the intelligence era now unfolding before our eyes.

Recapping the current state of AI models, including the emergence of advanced reasoning models in fall 2024, Richard highlighted the profound societal impact of AI technology, extending well beyond technical progress alone. He followed with a discussion on the necessary scaling of compute and its implications for silicon technology. The talk emphasized the distinction between traditional data centers and AI-optimized data centers, concluding with an analysis of how these shifts are reshaping silicon design processes and the role of EDA engineers.

Reasoning Models: The Spark of General Intelligence

AI entered mainstream consciousness in November 2022 with the launch of ChatGPT. Its rapid adoption surprised everyone, including OpenAI. Today, ChatGPT, which added GPT-4o in 2024 and advanced reasoning models later that year, has surpassed 400 million monthly users, solidifying its role as an essential tool for coding, education, writing, and translation. Additionally, generative AI tools like Sora are revolutionizing video creation, enabling users to express their ideas more effectively.

Richard believes that the emergence of reasoning models heralds the arrival of artificial general intelligence (AGI). To illustrate the concept, he used a popular mathematical riddle known as the locker problem. Imagine a corridor with 1,000 lockers, all initially closed. A thousand people walk down the corridor in sequence, toggling the lockers. The first person toggles every locker, the second every other locker, the third every third locker, and so on. The 1,000th person toggles only the 1,000th locker. How many lockers remain open?

Richard presented this problem to a reasoning model in ChatGPT, requesting its chain of thought. Within seconds, ChatGPT concluded that 31 lockers remain open.

More important than the result was the process. In summary, the model performed:

- Problem Extraction: The model identified the core problem: locker n is toggled once for each divisor of n, so the task reduces to counting divisors.

- Pattern Recognition: It recognized that only perfect squares have an odd number of divisors.

- Logical Deduction: Since 31² = 961 ≤ 1,000 < 32² = 1,024, it concluded that exactly 31 lockers remain open.
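The model's chain of reasoning can be checked mechanically. The sketch below is our own illustration, not the model's output: it brute-forces the toggling for every person and compares the result with the closed-form answer, the count of perfect squares up to n. The function names are hypothetical.

```python
import math

def open_lockers_bruteforce(n: int) -> list[int]:
    """Simulate n people toggling n lockers; return the numbers of lockers left open."""
    is_open = [False] * (n + 1)  # index 0 unused; lockers are numbered 1..n
    for person in range(1, n + 1):
        # Person k toggles every k-th locker: k, 2k, 3k, ...
        for locker in range(person, n + 1, person):
            is_open[locker] = not is_open[locker]
    return [i for i in range(1, n + 1) if is_open[i]]

def open_lockers_closed_form(n: int) -> int:
    """Count perfect squares <= n, the only numbers with an odd number of divisors."""
    return math.isqrt(n)

opened = open_lockers_bruteforce(1000)
print(len(opened))                     # 31
print(opened[:5], opened[-1])          # [1, 4, 9, 16, 25] 961
print(open_lockers_closed_form(1000))  # 31
```

The brute force costs roughly n·ln(n) toggles, while the insight about perfect squares collapses the problem to a single integer square root.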

This example, while relatively simple, encapsulated the promise of reasoning models: to generalize, abstract, and solve non-trivial problems across domains.

The Economic Value of Intelligence

The keynote then pivoted from theory to practice, discussing AI’s effect on global productivity. Richard traced the path from the Industrial Revolution to the Information Age, showing how each major technological shift triggered steep GDP growth. With the advent of AI, particularly language models and autonomous agents, another leap is expected, not because of chatbots alone, but because AI enables new services and new levels of accessibility.

Richard emphasized the potential of AI in a variety of fields, such as:

- Medical expertise: available anywhere, anytime,

- Personalized education: tuned to individual learners’ needs,

- Elder care and assistance: affordable, consistent, and scalable,

- Scientific collaboration: AI as an assistant for tackling climate change, medicine, and energy.

To illustrate the transformative potential of AI agents, Richard recalled his work on the protein folding challenge at D.E. Shaw Research. Fifteen years ago, D.E. Shaw Research designed a line of purpose-built supercomputers, Anton 1 and Anton 2, to accelerate the physics calculations required to model the forces (electrostatics, van der Waals interactions, covalent bonds) acting on every atom in a protein, across femtosecond time steps. It was like brute-forcing nature in silicon, and it worked.
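The brute-force approach Richard described, computing the force on every atom and then advancing the system by a tiny time step, is classic molecular dynamics. The sketch below shows only that core loop, assuming two force terms (Lennard-Jones standing in for van der Waals, plus Coulomb electrostatics) in hypothetical reduced units, integrated with the standard velocity Verlet scheme. Bonded terms are omitted, and Anton's actual algorithms and hardware pipelines are far more elaborate.

```python
import math

# Hypothetical parameters in reduced (dimensionless) units, not a real force field.
EPSILON, SIGMA = 1.0, 1.0  # Lennard-Jones well depth and particle size
K_COULOMB = 1.0            # Coulomb constant in reduced units
DT = 0.001                 # time step (in real MD, on the order of a femtosecond)

def pair_force(ri, rj, qi, qj):
    """Force on atom i from atom j: Lennard-Jones (van der Waals) plus Coulomb."""
    d = [a - b for a, b in zip(ri, rj)]
    r2 = sum(c * c for c in d)
    r = math.sqrt(r2)
    sr6 = (SIGMA * SIGMA / r2) ** 3
    # (-dU/dr) / r for U = 4*eps*((s/r)**12 - (s/r)**6); multiplying by d gives the vector
    f_lj = 24.0 * EPSILON * (2.0 * sr6 * sr6 - sr6) / r2
    f_coul = K_COULOMB * qi * qj / (r2 * r)  # k*qi*qj/r**2 along the unit vector d/r
    return [(f_lj + f_coul) * c for c in d]

def total_forces(pos, charges):
    """Sum all pairwise forces (O(N^2); production codes use cutoffs and Ewald/PME)."""
    n = len(pos)
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            f = pair_force(pos[i], pos[j], charges[i], charges[j])
            for k in range(3):
                forces[i][k] += f[k]
                forces[j][k] -= f[k]  # Newton's third law
    return forces

def velocity_verlet_step(pos, vel, masses, charges, forces):
    """Advance one time step with velocity Verlet; returns (pos, vel, forces)."""
    n = len(pos)
    half_v = [[vel[i][k] + 0.5 * DT * forces[i][k] / masses[i] for k in range(3)] for i in range(n)]
    new_pos = [[pos[i][k] + DT * half_v[i][k] for k in range(3)] for i in range(n)]
    new_forces = total_forces(new_pos, charges)
    new_vel = [[half_v[i][k] + 0.5 * DT * new_forces[i][k] / masses[i] for k in range(3)] for i in range(n)]
    return new_pos, new_vel, new_forces

# Three hypothetical charged atoms starting at rest.
pos = [[0.0, 0.0, 0.0], [1.2, 0.0, 0.0], [0.0, 1.3, 0.0]]
vel = [[0.0, 0.0, 0.0] for _ in range(3)]
masses = [1.0, 1.0, 2.0]
charges = [0.5, -0.5, 0.2]
forces = total_forces(pos, charges)
for _ in range(1000):
    pos, vel, forces = velocity_verlet_step(pos, vel, masses, charges, forces)
```

Even this toy evaluates every pair at every step. Scaling it to a solvated protein over microseconds of simulated time, at femtosecond steps, is what motivated purpose-built silicon.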

In late 2020, DeepMind tackled the same problem with AI. Instead of simulating molecular physics in fine-grained time steps, they trained a model to predict a protein’s structure directly from its amino acid sequence, and it succeeded. Their AlphaFold system predicted protein structures with astonishing accuracy, bypassing a decade of painstaking simulation with a powerful new approach, and the work rightly earned one of the highest recognitions in science: a share of the 2024 Nobel Prize in Chemistry.

AI is no longer just mimicking human language or playing games. It’s becoming a tool for accelerated discovery, capable of transformative contributions to science.

Scaling Laws and Predictable Progress

The talk then shifted gears to a foundational law: increasing compute power leads to improved model capabilities. In fact, the scaling of compute has been central to AI’s progress.

In 2020, OpenAI observed that increasing compute consistently improves model quality, following a power law that appears as a straight line on a log-log plot. These gains carry over to practical tasks, such as solving coding and math problems. The evolution of GPT is living proof of this dependency:

- GPT-1 enabled state-of-the-art word prediction.

- GPT-2 generated coherent paragraphs.

- GPT-3 handled few-shot learning.

- GPT-4 delivered real-world utility across domains.
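The "straight line on a log-log plot" is the signature of a power law. If loss follows L = a·C^(-b) in compute C, then log L = log a - b·log C, so the exponent is just the slope of a linear fit in log space. The sketch below fits that line by ordinary least squares to synthetic data generated from made-up constants (a = 3.0, b = 0.05). These are not OpenAI's measured values, and the function name is hypothetical.

```python
import math

def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) - b*log(compute); returns (a, b)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -slope

# Synthetic data from a hypothetical power law L(C) = 3.0 * C**-0.05 (illustrative only).
compute = [10.0 ** k for k in range(18, 25)]
loss = [3.0 * c ** -0.05 for c in compute]
a, b = fit_power_law(compute, loss)
print(round(a, 3), round(b, 3))  # 3.0 0.05
```

With real training runs the points scatter around the line, but the same fit is what lets teams extrapolate the payoff of a larger compute budget before spending it.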

To exemplify this, Richard pointed to the MMLU benchmark, a rigorous multiple-choice suite of high school and university-level questions spanning 57 subjects. Within just two years, GPT-4 was scoring near 90%, showing that exponential improvements were real and happening fast.

The Infrastructure Demands of AI

The compute required to train these large models has been increasing at eye-popping rates — 6.7X annually pre-2018, and still 4X per year since then, far exceeding Moore’s Law.
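To see how quickly these rates diverge from Moore's Law, it helps to compound them. The sketch below assumes, for comparison only, that Moore's Law means a doubling every two years (about 1.41X per year). The 4X figure comes from the talk; the helper name is hypothetical.

```python
def growth_factor(per_year: float, years: float) -> float:
    """Total growth after `years` of compounding at `per_year` X per year."""
    return per_year ** years

MOORE_PER_YEAR = 2 ** 0.5  # assumption: doubling roughly every two years
for years in (1, 3, 5):
    ai = growth_factor(4.0, years)
    moore = growth_factor(MOORE_PER_YEAR, years)
    print(f"{years} yr: AI training compute x{ai:,.0f} vs Moore's Law x{moore:.1f}")
```

Over five years, 4X per year compounds to 1,024X, while a two-year doubling yields under 6X. The difference has to come from more chips, larger clusters, and more power rather than from transistor scaling alone.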

Despite industry buzz about smaller, distilled models, Richard argued that scaling laws are far from dead. Reasoning models benefit from compute not only at training time, but also at inference time — especially when using techniques like chain-of-thought prompting. In short, better thinking still requires more compute.
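One simple, well-known way to convert extra inference compute into better answers is self-consistency: sample several independent reasoning chains and take a majority vote. This is a swapped-in illustration, not necessarily how OpenAI's reasoning models spend test-time compute. The sketch assumes a simplified binary model in which each sampled chain is independently correct with probability p.

```python
from math import comb

def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct,
    assuming each sample is correct with probability p (binary right/wrong model)."""
    assert n % 2 == 1, "use an odd n to avoid ties"
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

for n in (1, 5, 25, 101):
    # More samples means more inference compute, and higher accuracy when p > 0.5.
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

With p = 0.6, five samples already lift accuracy from 0.6 to about 0.68, and accuracy keeps rising with n. When p < 0.5, voting makes things worse, which is one reason the quality of each individual reasoning chain still matters.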

This growth in demand is reflected in investment. OpenAI alone is committing hundreds of billions of dollars to infrastructure, and the industry as a whole is approaching $1 trillion in total AI infrastructure commitments.

The result of all this investment is a new class of infrastructure that goes beyond what we used to call “warehouse-scale computing” for serving web services. Now we must build planet-spanning AI systems to meet the needs of training and deploying large models. These training centers span not just racks or rooms but continents: multiple clusters, interconnected across diverse geographies, collaborating in real time to train a single model. It’s a planetary-scale effort to build intelligence.

Designing AI Systems

Today, we must focus on the full stack of AI compute: the model, the compiler, the chip, the system, the kernels. Every layer matters. Richard invoked Amdahl’s Law: optimizing a single component in the chain doesn’t guarantee a proportional improvement in overall performance, because the gain is bounded by the share of time that component accounts for. To make real gains, we have to improve the stack holistically, with software, hardware, and systems working in concert.
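Amdahl's Law makes the point quantitative. If a fraction p of runtime is accelerated by a factor s, the overall speedup is S = 1 / ((1 - p) + p / s). The sketch below evaluates that formula for a hypothetical case in which half of a training step is sped up 10X.

```python
def amdahl_speedup(fraction: float, component_speedup: float) -> float:
    """Overall speedup when `fraction` of runtime is accelerated by `component_speedup`."""
    return 1.0 / ((1.0 - fraction) + fraction / component_speedup)

print(amdahl_speedup(0.5, 10.0))          # ~1.82
print(amdahl_speedup(0.5, float("inf")))  # 2.0, the ceiling no matter how fast that part gets
```

A 10X faster component buys less than 2X overall when it covers only half the time. The untouched half (data movement, communication, host overhead) becomes the bottleneck, which is why the whole stack has to improve together.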

Within this vertically integrated stack, one of the most difficult components to design is the chip accelerator. Designing the right hardware means striking a careful balance across multiple factors. It’s not just about raw compute power; it’s also about throughput and, in some cases, latency. Sometimes batching is acceptable; other times, real-time response matters. Memory bandwidth and capacity remain absolutely critical, but die-to-die and chip-to-chip bandwidth increasingly matter as well. And perhaps most importantly, network bandwidth and latency are becoming integral to system-level performance.
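A common way to reason about the balance between compute and memory bandwidth is the roofline model (not mentioned in the talk, and used here only as an illustration): attainable throughput is the lesser of peak compute and memory bandwidth times arithmetic intensity (FLOPs per byte moved). The accelerator figures and kernel intensities below are hypothetical.

```python
def roofline_tflops(peak_tflops: float, mem_bw_tb_s: float, flops_per_byte: float) -> float:
    """Attainable throughput = min(peak compute, memory bandwidth x arithmetic intensity).
    TB/s multiplied by FLOP/byte gives TFLOP/s."""
    return min(peak_tflops, mem_bw_tb_s * flops_per_byte)

# Hypothetical accelerator: 1,000 TFLOP/s peak, 4 TB/s of memory bandwidth.
PEAK, BW = 1000.0, 4.0
for name, intensity in [("low-intensity kernel", 2.0), ("large matmul", 500.0)]:
    print(name, roofline_tflops(PEAK, BW, intensity))
```

On this hypothetical chip, any kernel below PEAK / BW = 250 FLOPs per byte is memory-bound. Small-batch inference tends to land there, which is why batching trade-offs and memory bandwidth weigh as heavily as raw compute.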

Advertised peak performance numbers are rarely achieved in practice. The real value comes from the performance actually delivered on AI software workloads, and measuring it requires full-stack visibility: compiler efficiency, how instructions are executed, where bottlenecks form, and where idle cycles (the “white spaces”) appear. Only then can a system’s realistic throughput and latency be assessed. Co-design across model, software, and hardware is absolutely critical.
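Finding the "white spaces" amounts to measuring how much of a time window an execution unit is actually busy. The sketch below assumes a hypothetical trace format, a list of (start, end) busy intervals for one accelerator. It merges overlapping intervals and reports utilization. Real profilers expose much richer data, and the function name is our own.

```python
def busy_fraction(intervals, window_start, window_end):
    """Fraction of [window_start, window_end) covered by busy intervals (overlaps merged)."""
    clipped = sorted(
        (max(s, window_start), min(e, window_end))
        for s, e in intervals
        if e > window_start and s < window_end
    )
    busy, cur_s, cur_e = 0.0, None, None
    for s, e in clipped:
        if cur_e is None or s > cur_e:
            # Gap found: close the current merged interval and start a new one.
            if cur_e is not None:
                busy += cur_e - cur_s
            cur_s, cur_e = s, e
        else:
            cur_e = max(cur_e, e)  # overlapping or touching: extend the interval
    if cur_e is not None:
        busy += cur_e - cur_s
    return busy / (window_end - window_start)

# Hypothetical trace in microseconds: two overlapping kernels, a stall, one more kernel.
trace = [(0, 40), (35, 70), (90, 100)]
u = busy_fraction(trace, 0, 100)
print(f"utilization {u:.0%}, white space {1 - u:.0%}")  # utilization 80%, white space 20%
```

The 20-microsecond gap in this toy trace is exactly the kind of idle time (waiting on a collective, a host-side launch, or a data stall) that peak FLOP numbers never show.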

Richard emphasized a critical yet often overlooked aspect of scaling large infrastructure: reliability. Beyond raw performance, he stressed the importance of keeping massive hardware clusters consistently operational. Threats range from hardware faults and rare software bugs triggered by edge-case conditions, to network instability such as intermittent port flaps, to physical data center issues like grid power fluctuations, cooling failures, or even human error. Every layer of the stack, he argued, must be designed with resilience in mind, not just speed or scale.

Resiliency is perhaps the most underappreciated challenge. For AI systems to succeed, they must be reliable across thousands of components. Unlike web services, where asynchronous progress is common, AI training jobs are synchronous: every node must make progress together, so one failure can stall the entire job.

Thus, engineering resilient AI infrastructure is not just important; it’s existential.
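Simple reliability arithmetic shows why synchronous training is so unforgiving. Assuming independent, exponentially distributed node failures, a job that halts on any single failure sees a mean time between failures (MTBF) equal to the node MTBF divided by the node count. A standard mitigation, not discussed in the talk, is periodic checkpointing, and Young's classic first-order approximation gives the checkpoint interval T ≈ sqrt(2 · δ · M) for checkpoint cost δ and MTBF M. All numbers below are hypothetical.

```python
import math

def cluster_mtbf_hours(node_mtbf_hours: float, nodes: int) -> float:
    """MTBF of a synchronous job that stops on any node failure,
    assuming independent, exponentially distributed node failures."""
    return node_mtbf_hours / nodes

def p_no_failure(node_mtbf_hours: float, nodes: int, run_hours: float) -> float:
    """Probability that the whole job runs for `run_hours` without a single node failure."""
    return math.exp(-run_hours / cluster_mtbf_hours(node_mtbf_hours, nodes))

def young_checkpoint_interval(checkpoint_cost_hours: float, mtbf_hours: float) -> float:
    """Young's first-order approximation of the optimal checkpoint interval."""
    return math.sqrt(2.0 * checkpoint_cost_hours * mtbf_hours)

# Hypothetical cluster: 10,000 nodes, each failing once per 50,000 hours on average.
m = cluster_mtbf_hours(50_000, 10_000)
print(m)                                          # 5.0 hours between job-stopping failures
print(round(p_no_failure(50_000, 10_000, 24), 4))
print(young_checkpoint_interval(0.1, m))          # ~1.0 hour, given 6-minute checkpoints
```

Even with very reliable individual nodes, a day-long run on this hypothetical cluster almost never finishes without interruption. Fast failure detection, quick restarts, and cheap checkpoints therefore matter as much as raw speed.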

From Chip Design to Agentic Collaboration

The keynote closed with a return to the world of chip design. Richard offered a candid view of hardware timelines, often 12 to 24 months, and contrasted them with the rapid cadence of ML research, which can shift in weeks.

His dream? Compress the design cycle dramatically.

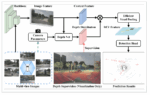

Through examples from his own research, including reinforcement learning for macro placement and graph neural networks for test coverage prediction, he illustrated how AI could help shrink the chip design timeline. And now, with large language models in the mix, the promise grows further.

He recounted asking ChatGPT to design an asynchronous FIFO for clock-domain crossing (CDC), a common interview question from his early career. The model produced functional SystemVerilog code and even generated a UVM testbench. While such tools are not yet ready for full-chip design, the result marks a meaningful step toward co-designing hardware with AI.
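The crux of the classic async-FIFO answer is Gray-coded pointers. Each pointer carries one extra wrap bit and changes only one bit per increment, so when it is sampled by the other clock domain it reads as either the old or the new value, never a corrupted mix. The sketch below is a Python behavioral model of the widely taught Gray-pointer full/empty scheme, not the SystemVerilog ChatGPT produced. Names such as `AsyncFifoPointers` and `ADDR_BITS` are hypothetical, and it omits clocks and synchronizer latency.

```python
ADDR_BITS = 3                          # hypothetical depth: 2**3 = 8 entries
DEPTH = 1 << ADDR_BITS
PTR_MASK = (1 << (ADDR_BITS + 1)) - 1  # pointers carry one extra "wrap" bit

def bin2gray(b: int) -> int:
    """Binary-reflected Gray code: consecutive values differ in exactly one bit."""
    return b ^ (b >> 1)

class AsyncFifoPointers:
    """Behavioral model of async-FIFO full/empty logic.

    Simplification: both Gray pointers are compared instantly. In RTL each one
    reaches the other clock domain through a two-flop synchronizer, which can only
    delay deassertion of full/empty, never cause overflow or underflow.
    """

    def __init__(self) -> None:
        self.mem = [None] * DEPTH
        self.wbin = 0  # binary write pointer (write clock domain)
        self.rbin = 0  # binary read pointer (read clock domain)

    def empty(self) -> bool:
        # Empty: the Gray pointers are identical.
        return bin2gray(self.wbin) == bin2gray(self.rbin)

    def full(self) -> bool:
        # Full: the Gray pointers match except that their two MSBs are inverted.
        return bin2gray(self.wbin) == bin2gray(self.rbin) ^ (0b11 << (ADDR_BITS - 1))

    def write(self, value) -> None:
        if self.full():
            raise OverflowError("FIFO full")
        self.mem[self.wbin & (DEPTH - 1)] = value
        self.wbin = (self.wbin + 1) & PTR_MASK

    def read(self):
        if self.empty():
            raise IndexError("FIFO empty")
        value = self.mem[self.rbin & (DEPTH - 1)]
        self.rbin = (self.rbin + 1) & PTR_MASK
        return value

fifo = AsyncFifoPointers()
for v in range(DEPTH):
    fifo.write(v)
print(fifo.full())                          # True
print([fifo.read() for _ in range(DEPTH)])  # [0, 1, 2, 3, 4, 5, 6, 7]
print(fifo.empty())                         # True
```

The extra wrap bit is what distinguishes "full" from "empty" when the address bits of the two pointers coincide; in Gray code, that wrap shows up as the two most significant bits being inverted.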

This led to his final reflection: In the age of intelligence, engineers must think beyond lines of code. With tools so powerful, small teams can accomplish what once took hundreds of people.

Richard concluded by stressing that the next era of AI will be shaped by engineers like those attending SNUG: visionaries whose work in compute, architecture, and silicon will define the future of intelligence. He closed with a call to action: “Let’s keep pushing the boundaries together in this incredibly exciting time.”

Key Takeaways

- AI capabilities are accelerating — not just in chatbot quality, but in math, reasoning, science, and infrastructure design.

- Scaling laws remain reliable — compute continues to drive capability in predictable and exponential ways.

- The infrastructure must evolve — to handle synchronous workloads, planetary-scale compute, and the resiliency demands of large-scale training.

- Hardware co-design is essential — model, software, and system improvements must be approached holistically.

- AI for chip design is here — not yet complete, but showing promise in coding, verification, and architecture exploration.

Also Read:

SNUG 2025: A Watershed Moment for EDA – Part 1

DVCon 2025: AI and the Future of Verification Take Center Stage

The Double-Edged Sword of AI Processors: Batch Sizes, Token Rates, and the Hardware Hurdles in Large Language Model Processing