The TSMC 30th Anniversary Forum just ended, so I will share a few notes before the rest of the media chimes in. The forum was live streamed on tsmc.com and hopefully will be available for replay. The ballroom at the Grand Hyatt in Taipei was filled with cameras, semiconductor executives, and security personnel.

The event started with a video about TSMC over the last 30 years, followed by comments from Chairman Morris Chang. The keynotes were given by Nvidia CEO Jensen Huang, Qualcomm CEO Steve Mollenkopf, ADI CEO Vincent Roche, ARM CEO Simon Segars, Broadcom CEO Hock Tan, ASML CEO Peter Wennink, and Apple COO Jeff Williams. Next was a panel discussion led by Morris.

Let’s start with the jokes. Jensen Huang was supposed to go first but his presentation was not ready, and Morris roasted him a bit over it. Jensen replied that it took him longer because he actually prepared for the event. It was funny because there was a bit of truth to it: the other presentations were standard stock. Jensen gave the best presentation, which was all about AI, and AI is in fact the future of semiconductors for the next ten years.

The best joke, however, came in response to a question about legal matters: if AI goes wrong, who is held accountable? Morris pointed out that Steve Mollenkopf probably has the most legal experience of the group, referring to Qualcomm’s massive legal challenges of late. Steve recused himself from the question, of course. Even at 86 years old Morris still has a quick wit, and he provided most of the humor for the evening.

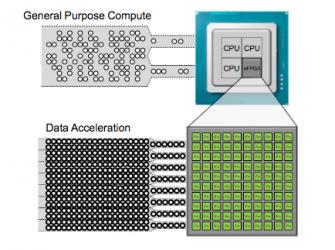

As I have mentioned before, AI will touch almost every chip we make in the coming years, which will bring an insatiable compute demand that general-purpose CPUs will never satisfy. This year Apple put a neural engine on the A11 SoC that is capable of up to 600 billion operations per second. Nvidia GPUs do trillions of operations per second, so we still have a way to go for edge devices.

A couple more interesting notes: the Apple-TSMC relationship started in 2010 but didn’t produce silicon until the iPhone 6 in 2014. Morris described the Apple-TSMC relationship as intense, but Jeff Williams (Apple) said that you cannot double plan for the volumes of technology that Apple requires, so partnerships are key. My take is that the TSMC-Apple relationship is very strong and will continue for the foreseeable future. Who else is going to be able to do business the Apple (non-competing) way and still make big margins?

Jeff also predicted that medical will be the most disruptive AI application. Morris agreed, suggesting that mediocre doctors will be replaced by technology. This is something I feel VERY strongly about. Medical care is barbaric by technology standards and we as a population are suffering as a result. Apple is focused on proactive medical care rather than the reactive care you see in most hospitals. Predicting strokes or heart events, for example, is possible today. AI-enabled medical imaging systems are another example for tomorrow.

Security and privacy were discussed, with Apple insisting that your data is more secure on your device than it is in the cloud. Maybe that’s why the new phones have a huge amount of storage (64-256 GB) while free iCloud storage is still only 5 GB. We use a private 1 TB cloud for just that reason, by the way, so our data stays in our possession. I certainly agree about security, but privacy seems to be lost on millennials, and they are the target market for most devices.

Bottom line: Congratulations to the TSMC support staff for a well-run event, and congratulations to TSMC on an amazing 30 years. The room was filled with C-level executives and a smattering of media folks like myself. It really was an honor to be there and to be part of semiconductor history.