Cadence has recently announced two key design-ins for its Vision DSP IP family: MediaTek’s Helio P30 integrates the Tensilica Vision P5 DSP, and HiSilicon has selected the Cadence® Tensilica® Vision P6 DSP for its 10nm Kirin 970 mobile application processor. Since the Kirin 970 is integrated into Huawei’s new Mate 10 Series mobile phones (HiSilicon is a subsidiary of Huawei), we can expect huge IC production volumes. In fact, Huawei ranks #3 in worldwide smartphone shipments in 2017, with a market share approaching that of #2 Apple. Let’s take a look at the various Tensilica DSP IP cores and figure out their positioning with respect to imaging, vision processing and emerging applications such as 3D sensing, human/machine interface, AR/VR and biometric identification on the mobile platform.

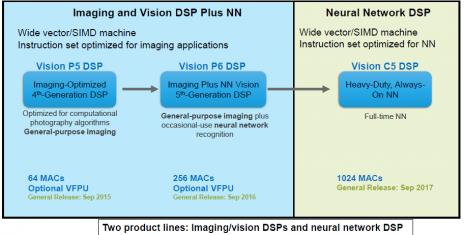

The picture above helps distinguish between the Vision P5, Vision P6 and Vision C5 DSPs. First, the architecture (wide vector SIMD, Single Instruction Multiple Data) is the same for all three; what differs is the instruction set, optimized for imaging applications in the P5 and P6, and for neural networks (NN) in the Vision C5 DSP.

The Vision P5 DSP was released in September 2015. It’s a general-purpose imaging DSP with 64 MACs, optimized for computational photography algorithms. The Vision P6 (released in September 2016) shares that focus, but adds occasional-use neural network recognition and offers 4X the MAC count, with 256 MACs. Designers can also select an optional 32-way SIMD vector FPU supporting 16-bit floating point (FP16).

The Vision C5 DSP, released in September 2017, again quadruples the MAC count, with 1024 8-bit MACs or 512 16-bit MACs. The main difference from the P family, however, is that the entire DSP is optimized to run all NN layers, enabling full-time (always-on) NN processing for face, people, object and gesture detection, as well as video analysis and AI. The Vision C5 is optimized for vision, radar/lidar and fused-sensor applications, and targets the surveillance, drone and mobile/wearable markets.
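
The generation-over-generation MAC scaling described above can be checked with a few lines of Python. This is only a sketch built on the MAC counts quoted in this article; the article does not state the precision of the P5 and P6 MACs, so the comparison below assumes the counts are directly comparable, and the C5 entry uses its 8-bit figure.

```python
# MAC counts as quoted in this article. The P5/P6 MAC precision is not
# stated, so treating the counts as directly comparable is an assumption.
VISION_DSP_MACS = [
    ("Vision P5", 2015, 64),
    ("Vision P6", 2016, 256),
    ("Vision C5 (8-bit)", 2017, 1024),  # 512 MACs when running 16-bit
]


def generational_gains(cores):
    """Return (newer, older, ratio) for each consecutive pair of cores."""
    return [
        (curr[0], prev[0], curr[2] / prev[2])
        for prev, curr in zip(cores, cores[1:])
    ]


if __name__ == "__main__":
    for newer, older, gain in generational_gains(VISION_DSP_MACS):
        print(f"{newer} vs {older}: {gain:.0f}x MACs")
```

Both steps come out at 4x, matching the "4X MACs" claims for the P6 and the C5.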

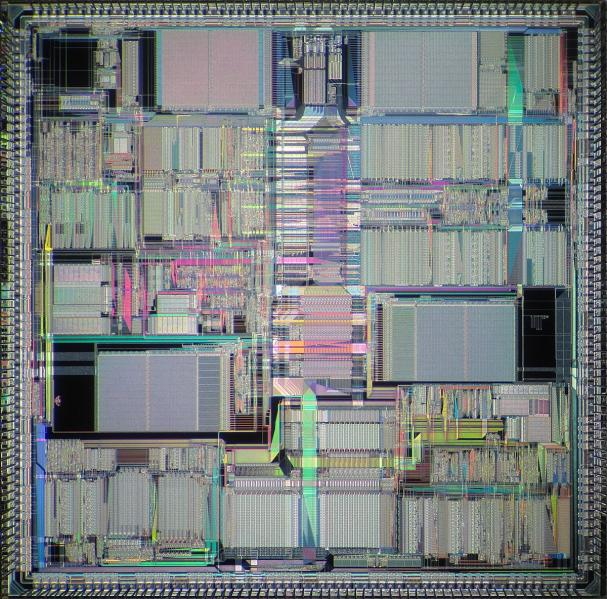

I remember the time when the only DSP in a mobile phone was the one supporting the modem… Looking at the Kirin 970 block diagram above, you realize that digital signal processing is now used intensively throughout a mobile phone.

The global-mode modem is a DSP-based LTE Category 18 modem with download speeds of up to 1.2 Gbps. Dual back sensors are now integrated into mobile phones, and a dual camera increases the computational requirements of the imaging functionality, another DSP-like function, handled by a dual-camera ISP in the Kirin 970. Huawei’s i7 sensor processor is also a DSP-based function, as are, obviously, the Cadence/Tensilica Vision P6 image DSP handling pre- and post-image processing and the HiFi audio processing.

Let’s address the dual back sensor capability of the Kirin 970. According to an IBS report, “Image Sensor and Image Signal Processing” (Sep 2016), dual back image sensors will replace the single back sensor, with shipments reaching a 50%/50% split in 2020. The CMOS Image Sensor (CIS) market is not as well known as the apps processor or modem market, but it was worth $12 billion in 2016 and is expected to grow at a 10% CAGR through 2025 (according to IBS, as well as Yole, the French analyst firm specializing in the sensor industry).

Personally, I knew little about this market a year ago; I discovered it in 2017 while working for an IP start-up targeting CIS. But I can now say that it will be one of the fastest-growing semiconductor segments, thanks to the adoption of dual back sensors in mobile and to CIS penetration in automotive, with new cars integrating from 3 up to 10 cameras! Stay tuned, as the CIS IP segment could be the subject of one of the IP sessions at DAC 2018…
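
To put the 10% CAGR in perspective, here is a back-of-envelope sketch that compounds the $12 billion 2016 figure for nine years. It assumes a constant growth rate every year, so the 2025 value it prints is only what the two quoted numbers imply, not a forecast published by IBS or Yole.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` at a constant annual growth rate `cagr` for `years` years."""
    return value * (1 + cagr) ** years


CIS_2016_BUSD = 12.0  # $12B in 2016, as quoted above
CAGR = 0.10           # 10% CAGR through 2025, as quoted above

if __name__ == "__main__":
    cis_2025 = project(CIS_2016_BUSD, CAGR, 2025 - 2016)
    print(f"Implied 2025 CIS market: ~${cis_2025:.1f}B")
```

Under that constant-growth assumption, the market would more than double, to roughly $28 billion.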

If the phone integrates two back sensors, it makes sense to use a dual ISP to extract the best picture quality (in fact, during a presentation in Berlin in September, Huawei showed a comparison of the same image taken with a Samsung Galaxy S8 and a Huawei Mate 10 Pro, and the Samsung picture came out blurry). But two ISPs also mean that the vision processor (here the Tensilica Vision P6 DSP) has to be much more powerful than before. Cadence claims the highest per-cycle processing, with 4 vector operations per cycle (each 64-way SIMD), and the widest memory interface, at 1024 bits. Since the Vision P6 runs at 1.1 GHz in 16nm FinFET, we may expect it to run even faster in the 10nm Kirin 970.
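
The per-cycle figures above translate into theoretical peak numbers with simple arithmetic. The sketch below uses the quoted 4 vector operations per cycle, 64-way SIMD, 1024-bit memory interface and 1.1 GHz clock. Two inputs are assumptions, not Cadence data: a 16-bit lane width (chosen so that one 64-way vector spans 1024 bits) and one full-width memory transfer per cycle. Real sustained throughput will be lower.

```python
VECTOR_OPS_PER_CYCLE = 4    # quoted Cadence claim
SIMD_LANES = 64             # 64-way SIMD per vector operation
MEM_INTERFACE_BITS = 1024   # quoted memory interface width
CLOCK_HZ = 1_100_000_000    # 1.1 GHz, quoted for 16nm FinFET

LANE_BITS = 16  # ASSUMPTION: 16-bit lanes, so 64 lanes x 16 bits = 1024 bits


def lane_ops_per_cycle() -> int:
    """Lane-level operations issued per cycle across all vector slots."""
    return VECTOR_OPS_PER_CYCLE * SIMD_LANES


def peak_lane_ops_per_second(clock_hz: int = CLOCK_HZ) -> int:
    """Theoretical peak: every lane of every vector op busy on every cycle."""
    return lane_ops_per_cycle() * clock_hz


def mem_bytes_per_second(clock_hz: int = CLOCK_HZ) -> int:
    """ASSUMPTION: one full-width memory transfer per cycle."""
    return MEM_INTERFACE_BITS // 8 * clock_hz


if __name__ == "__main__":
    print(f"Lane ops per cycle: {lane_ops_per_cycle()}")
    print(f"Peak lane ops/s: {peak_lane_ops_per_second() / 1e9:.1f} G")
    print(f"Memory bandwidth: {mem_bytes_per_second() / 1e9:.1f} GB/s")
    print(f"Vector width (bits): {SIMD_LANES * LANE_BITS}")
```

The 4 x 64 = 256 lane operations per cycle happen to line up with the 256 MACs quoted for the Vision P6. Any higher clock reached at 10nm would scale both peak figures linearly.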

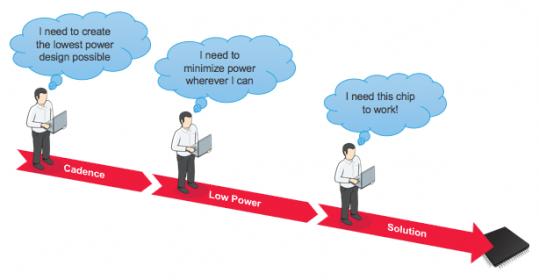

I’ll end with this last picture, showing the energy efficiency of the Tensilica Vision DSPs: up to 25X better than a CPU. Beyond just low power, energy efficiency will become the key concern for the semiconductor devices of the future… and yes, we will most probably also address this topic during an IP session at DAC 2018!

For details on how MediaTek uses the Vision P5 DSP in its Helio P30 SoC, you can find some info on AnandTech here:

http://www.anandtech.com/show/11770/mediatek-helio-p23-helio-p30-midrange-socs

By Eric Esteve from IPnest