One might be tempted to think that technology-driven gains in computer performance would be enough to keep up with the needs of design and verification tools. We know that design complexity is increasing at the rate predicted by Moore’s Law. We also know that the computers used during IC development benefit from this same rate of improvement. When I first started in EDA, I made the analogy of a dragon chasing its tail: always close, but never quite able to catch up. However, the dragon always seemed close enough to make developing the next generation of hardware possible.

Stepping back and examining the issue more closely reveals well-known problems, such as exploding design rule complexity. I have written before about the rapid increase in the thickness of design rule manuals (DRMs). The need for more compute power to complete a design hits across the board. We see it in simulation, synthesis, place and route, and elsewhere. However, perhaps the most heavily impacted area is physical design verification, aka DRC.

Many of us can remember the tectonic shift in DRC when flat checking became impractical. This was when, after decades, the industry moved from Cadence’s Dracula to Mentor’s Calibre. Calibre effectively and reliably solved the issues surrounding hierarchical DRC and paved a new path forward that was absolutely necessary for design productivity. The holy grail of most verification tools is the overnight run. If a tool can finish in 8 to 14 hours, users can start a run, go home, and come back the next day to analyze and correct issues.
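To make the flat versus hierarchical distinction concrete, here is a toy Python sketch. It is not how Calibre or Dracula work internally; the `Rect` type, the `MIN_WIDTH` value, and both function names are assumptions made up for illustration. It shows only the core idea: a check that depends on a cell's own geometry can be run once per cell rather than once per placement.

```python
from dataclasses import dataclass

MIN_WIDTH = 3  # hypothetical rule value, not taken from any real rule deck


@dataclass(frozen=True)
class Rect:
    x0: int
    y0: int
    x1: int
    y1: int


def translate(r, dx, dy):
    """Return a copy of r shifted by (dx, dy)."""
    return Rect(r.x0 + dx, r.y0 + dy, r.x1 + dx, r.y1 + dy)


def width_violations(rects):
    """Return the rectangles whose narrower dimension is below MIN_WIDTH."""
    return [r for r in rects if min(r.x1 - r.x0, r.y1 - r.y0) < MIN_WIDTH]


def flat_check(cell_rects, placements):
    """Flatten every placement of the cell, then check every copied shape."""
    violations, shapes_checked = [], 0
    for dx, dy in placements:
        flat = [translate(r, dx, dy) for r in cell_rects]
        shapes_checked += len(flat)
        violations.extend(width_violations(flat))
    return violations, shapes_checked


def hierarchical_check(cell_rects, placements):
    """Check the cell once, then replay its violations at each placement."""
    cell_violations = width_violations(cell_rects)
    shapes_checked = len(cell_rects)
    violations = [
        translate(v, dx, dy) for dx, dy in placements for v in cell_violations
    ]
    return violations, shapes_checked
```

With a two-shape cell placed 1,000 times, the flat version examines 2,000 shapes and the hierarchical version examines 2, yet both report the same violations. The sketch deliberately ignores the hard part of real hierarchical DRC, which is handling interactions between a cell's geometry and whatever surrounds each placement.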

As process nodes advanced, the requirements for physical verification exploded. The new requirements came from several distinct categories. Mentor recently published a white paper that discusses each of these areas and describes the work and development effort necessary to deliver a total solution that makes physical optimization practical.

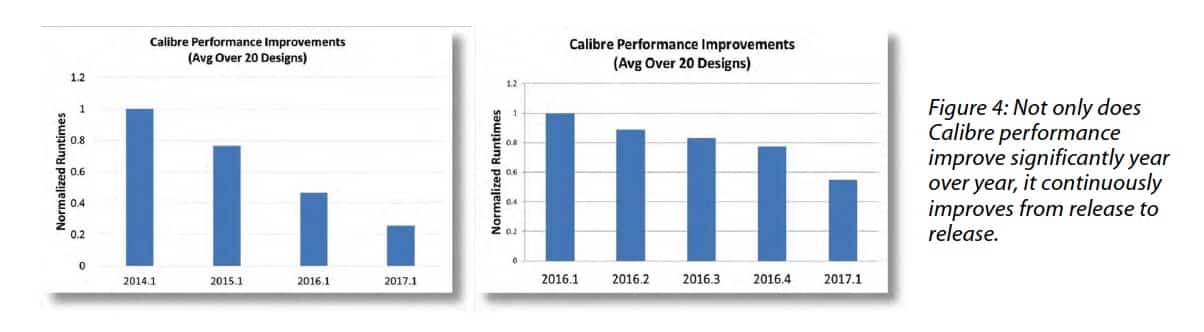

Some of the solutions are good old-fashioned software development work. These include the addition of parallel processing and support for larger designs. Mentor also does a lot of work on the algorithmic side to support faster operations. Some of this work focuses on handling hierarchy better, especially as it relates to metal fill. Anyone involved with nodes below 28nm knows how complex metal fill requirements have become. Calibre is now used to generate metal fill, and Mentor has pushed out significant enhancements in fill generation. The white paper discusses how these enhancements and methodology changes enable much higher design throughput.

Other significant runtime improvements can be achieved through less obvious methods, such as carefully writing rule decks. Two seemingly similar methods of performing a check can have vastly different runtimes. If specific knowledge of the tool is applied when writing rule decks, overall runtimes can be lowered. We are long past the days when users wrote their own rule files. Today all the major foundries provide Calibre rule decks. Mentor has developed deep relationships with the major foundries and has, in conjunction with them, devised a process to ensure that all the supported rule decks use the most efficient methods. This requires early access and intimate cooperation between the Calibre team and the foundries.
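As a rough illustration of how two equivalent formulations of a check can differ in cost, the Python sketch below runs the same spacing check two ways. It is not Calibre rule deck syntax, and the function names, the `MIN_SPACE` value, and the tuple layout `(x0, y0, x1, y1)` are assumptions chosen for the example. The first version compares every pair of shapes; the second sorts shapes by left edge and stops scanning as soon as the remaining shapes are too far away in x to violate the rule.

```python
from math import hypot

MIN_SPACE = 2.0  # hypothetical rule value


def separation(a, b):
    """Edge-to-edge distance between rectangles given as (x0, y0, x1, y1)."""
    dx = max(b[0] - a[2], a[0] - b[2], 0)
    dy = max(b[1] - a[3], a[1] - b[3], 0)
    return hypot(dx, dy)


def spacing_all_pairs(rects):
    """Compare every pair of shapes: always n*(n-1)/2 distance computations."""
    found = set()
    for i in range(len(rects)):
        for j in range(i + 1, len(rects)):
            if separation(rects[i], rects[j]) < MIN_SPACE:
                found.add((i, j))
    return found


def spacing_sweep(rects):
    """Sort by left edge and stop scanning once shapes are too far right."""
    order = sorted(range(len(rects)), key=lambda k: rects[k][0])
    found = set()
    for pos in range(len(order)):
        i = order[pos]
        for nxt in range(pos + 1, len(order)):
            j = order[nxt]
            # Every later shape starts at least this far right, so none
            # of them can be within MIN_SPACE of shape i.
            if rects[j][0] - rects[i][2] >= MIN_SPACE:
                break
            if separation(rects[i], rects[j]) < MIN_SPACE:
                found.add((min(i, j), max(i, j)))
    return found
```

Both functions return the same set of violating pairs, but on a layout where shapes are spread out in x the sweep version does far fewer distance computations. Production tools use much more sophisticated techniques; the point is only that the way a check is expressed can determine how much work is done.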

More advanced nodes also come with completely new verification requirements. One such example is multi-patterning. Early on, the foundries took care of this for their customers. However, as processes advanced from double to multiple patterning, the responsibility migrated to the physical layout teams. This is another area where Calibre has added support to ensure that customer designs are completed quickly and correctly.
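For readers unfamiliar with why multi-patterning lands on the layout team, double patterning is commonly modeled as a graph two-coloring problem: shapes that sit too close to share a mask are joined by a conflict edge, and each shape must get a different mask from its neighbors. The Python sketch below is a minimal version of that idea and makes no claim about how Calibre implements it. The function name `assign_masks` is hypothetical, and the conflict pairs are assumed to have been computed already by a spacing check.

```python
from collections import deque


def assign_masks(num_shapes, conflicts):
    """Two-color a conflict graph with breadth-first search.

    conflicts is a list of (a, b) index pairs for shapes too close to share
    a mask. Returns a list of mask ids (0 or 1), or None when an odd cycle
    makes a legal two-mask assignment impossible.
    """
    adj = [[] for _ in range(num_shapes)]
    for a, b in conflicts:
        adj[a].append(b)
        adj[b].append(a)

    mask = [None] * num_shapes
    for start in range(num_shapes):
        if mask[start] is not None:
            continue  # already colored as part of an earlier component
        mask[start] = 0
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if mask[v] is None:
                    mask[v] = 1 - mask[u]
                    queue.append(v)
                elif mask[v] == mask[u]:
                    return None  # odd cycle: layout must be changed
    return mask
```

A chain of four mutually adjacent shapes, `assign_masks(4, [(0, 1), (1, 2), (2, 3)])`, alternates between the two masks, while three shapes that all conflict with each other return `None`, which is the kind of error a designer has to fix in the layout. With more than two masks the general coloring problem becomes much harder, which is part of why tool support matters here.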

The Mentor white paper discusses the effort that Mentor expends adapting Calibre at each new process node while maintaining backwards compatibility, so that designs on nodes like 130nm or 90nm still obtain the same verification results. My observation is that Mentor is highly aware that the base tool needs to be reinvigorated at the same time as new performance features are added. Furthermore, none of it is useful without deep foundry relationships to anticipate the requirements for nodes that may be years away. Mentor is in an enviable position, as it has the ability to work with foundries and their most advanced customers to ensure that support is ready prior to production release for each new node.

The Mentor paper entitled “Achieving Optimal Performance During Physical Verification” offers a fascinating view into the work required to meet and exceed next-generation needs for physical verification. The results that Calibre attains are a clear indication of the level of effort that Mentor dedicates to enhancing and supporting its physical verification solution. I highly recommend reading the paper to get more detailed insights and to better understand how the whole solution works together.