Six years ago I first interviewed Stephen Crosher, CEO and co-founder of Moortec, when the company was in startup mode with some new semiconductor IP for temperature sensing. Earlier this month I attended their webinar on embedded in-chip monitoring to catch up on their technology and growing success. Ramsay Allen, their VP of Marketing, explained that the business started in 2005 in the UK as an IP supplier focused on Process, Voltage and Temperature (PVT) sensing.

Stephen Crosher, Ramsay Allen – Moortec

Stephen presented the bulk of the webinar and introduced the need for embedded in-chip monitoring with three questions:

- Can I meet my power consumption requirements?

- Is my chip operating in a reliable fashion?

- How are the transient thermal levels within my SoC operating?

FinFET transistors became widespread starting at 22nm and continue into smaller nodes because, compared to planar CMOS, they offer lower leakage, lower operating voltages, higher silicon density, faster speeds and improved channel control. With the increase in density come new challenges: thermal hot spots, electromigration that causes reliability issues, and leakage concerns. Even packaging costs become an issue, as you can spend between $1 and $3 per watt consumed in the SoC.

As supply voltages scale ever downward, chip engineers need to design for worst-case IR drops and account for increased interconnect resistance. There is even a high-end industry segment mining for Bitcoin, where chip performance is bound by power delivery and air conditioning costs, so being able to run chips cooler is a big financial benefit.

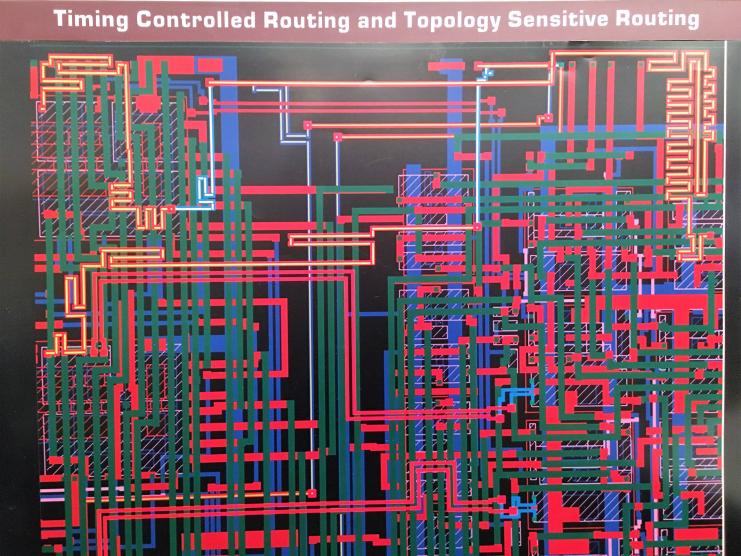

Smaller process nodes, 28nm and below, have reliability issues to contend with, such as NBTI (Negative Bias Temperature Instability), where the Vt value shifts over time, so IC designers need to know how far Vt values have shifted during aging. Timing closure is now complicated by process variation within a single die: one chip region can sit at one PVT corner while another region operates at a different PVT corner.

Mr. Crosher shared a use case from AMD on their Athlon II quad-core CPU, designed at 45nm, where they placed thermal sensors in each core and distributed the workload across the cores based on each core's thermal reading. This ensured that no single core became too hot and balanced reliability across the cores.
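The thermal load-balancing idea in this use case can be sketched in a few lines. This is only an illustration, not AMD's or Moortec's actual scheduler: the function name `pick_coolest_core` and the 85 °C limit are assumptions made up for the example.

```python
from typing import Optional, Sequence


def pick_coolest_core(core_temps_c: Sequence[float],
                      limit_c: float = 85.0) -> Optional[int]:
    """Return the index of the coolest core below limit_c.

    core_temps_c holds one temperature reading (in Celsius) per core.
    The 85.0 default limit is a hypothetical value for illustration.
    Returns None when every core is at or above the limit.
    """
    # Keep only cores that still have thermal headroom.
    candidates = [(temp, idx) for idx, temp in enumerate(core_temps_c)
                  if temp < limit_c]
    if not candidates:
        return None
    # min() on (temp, idx) tuples picks the lowest temperature;
    # ties go to the lowest core index.
    return min(candidates)[1]


# Hypothetical readings from four per-core thermal sensors.
next_core = pick_coolest_core([71.5, 64.0, 88.2, 69.3])
```

With the sample readings above, the next task would go to core 1, the coolest core; core 2 is skipped entirely because it is already over the assumed limit.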

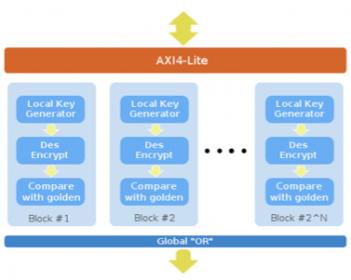

In the second use case the challenge was to optimize voltage scaling by measuring the power and speed of each IC, finding the lowest functional voltage possible, and saving the unique settings in each device. Moortec also supports closed-loop Adaptive Voltage Scaling (AVS) by placing multiple Voltage Monitors (VM) or Process Monitors on each chip.
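As a rough sketch of the per-device voltage search described above, the following steps the supply down until the part stops passing, then records the last good value. Everything here is an assumption for illustration: `passes_at` stands in for whatever functional or speed test a real flow would run, and the step size and guard band are invented values, not figures from the webinar.

```python
from typing import Callable, Optional


def find_min_voltage_mv(passes_at: Callable[[int], bool],
                        v_max_mv: int,
                        v_min_mv: int,
                        step_mv: int = 10,
                        guard_band_mv: int = 20) -> Optional[int]:
    """Find the lowest passing supply voltage (mV) plus a guard band.

    passes_at(v) is a hypothetical callback that returns True when the
    device meets its speed target at v millivolts.
    Returns None if the device fails even at v_max_mv.
    """
    if not passes_at(v_max_mv):
        return None
    lowest_pass = v_max_mv
    v = v_max_mv - step_mv
    # Step down until the first failure or the allowed minimum.
    while v >= v_min_mv and passes_at(v):
        lowest_pass = v
        v -= step_mv
    # Add margin (an assumed value), but never exceed the nominal maximum.
    return min(lowest_pass + guard_band_mv, v_max_mv)


# Hypothetical device that works at 735 mV and above.
setting_mv = find_min_voltage_mv(lambda v: v >= 735, v_max_mv=900, v_min_mv=600)
```

For this made-up device the search stops at 740 mV, the last passing step, and stores 760 mV after the guard band. In a closed-loop AVS scheme the same decision would be driven continuously by on-chip monitor readings rather than by a one-time test.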

There was even a use case where an enterprise data center used embedded chip monitoring for real-time temperature measurement to allow power optimization, predict device failures, and protect each CPU with a safety shutoff limit. This is a big deal for data centers because they are such large consumers of power from our electrical grid, and their projected growth is staggering. Today, about 2% of our total electricity is consumed by data centers, and with a CAGR of 12% they are projected to be responsible for more greenhouse gas than airlines by 2020.
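A safety shutoff limit of the kind mentioned above amounts to comparing each temperature reading against thresholds. The sketch below is a minimal illustration; the state names and the 95 °C and 110 °C thresholds are assumptions chosen for the example, not values from Moortec or the data center in question.

```python
from enum import Enum


class ThermalState(Enum):
    NORMAL = "normal"
    THROTTLE = "throttle"
    SHUTOFF = "shutoff"


def classify_temperature(temp_c: float,
                         throttle_c: float = 95.0,
                         shutoff_c: float = 110.0) -> ThermalState:
    """Map a temperature reading (Celsius) to a protective action.

    Both thresholds are hypothetical defaults for illustration only.
    """
    if temp_c >= shutoff_c:
        # Hard limit reached: power the device down to protect it.
        return ThermalState.SHUTOFF
    if temp_c >= throttle_c:
        # Early warning: reduce load before the hard limit is hit.
        return ThermalState.THROTTLE
    return ThermalState.NORMAL


# Hypothetical readings sampled from one CPU over time.
states = [classify_temperature(t) for t in (72.0, 97.5, 111.0)]
```

The intermediate throttle level is a design choice added here to show how a monitor can act before the shutoff limit is reached.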

The actual monitoring IP from Moortec has both hard macros and a soft PVT controller as shown below:

This IP is already used in many nodes: 40nm, 28nm, 16nm, 7nm.

The number of monitors and their placement depend on each application, so the engineers at Moortec are happy to advise on where to place Process, Voltage and Temperature monitors.

Summary

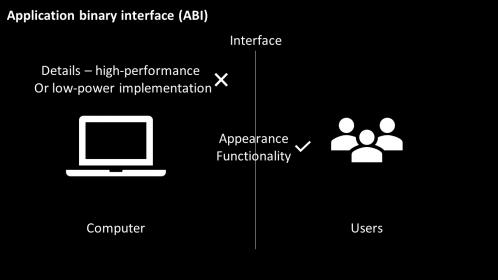

The challenges in modern SoCs can be met through the use of PVT in-chip monitors. You could try to create your own IP to do this, or simply task the experts who have been doing this for over a decade and re-use their silicon-proven monitoring IP.

Q&A

Q: How do I test your IP?

A: Thermal – a reference is required to test accuracy, so this is done by probing on die, and we have test chip programs to ensure there is no self-heating. There’s only a 0.003°C temperature rise from adding a sensor. Yes, we have correlated silicon versus simulation data.

Q: Where do you store info coming out of PVT sensors?

A: Register sets in the control block. You would store output in your own SoC design, not in our IP.

Q: Is the voltage monitor immune to Vdd fluctuations?

A: Our voltage monitor looks at the Vdd supply across its full range. It is designed to be immune to ripple, and it’s robust.

Webinar Recording

To view the entire 42-minute webinar, visit this link.