The recent fatal accident involving an Uber autonomous car was reportedly not caused, as initially assumed, by a failure of the car's many sensors to recognize the cyclist. It was instead caused by a failure of the software to make the right decision regarding that “object”. The system apparently treated it as a false positive, “something” not important enough to stop or slow down the vehicle for, as if it were a newspaper page blown by the wind.

The released (and rather disturbing) dashcam footage indeed shows the car's system “not bothering” with the cyclist crossing the road.

After all, this was an odd scenario. The system was probably trained to recognize cyclists on their bikes riding along the road rather than across it, since crossing should only happen at pedestrian crossings, and most probably during the day.

One must consider that such Artificial Intelligence systems are trained on actual frames and footage recorded in previous rides, which mostly capture the most common cases. If something is not pre-classified as deserving attention, the car might well just move on.

At the Cadence CDNLive EMEA conference, held in Munich in early May, Prof. Philipp Slusallek of Saarland University and the Intel Visual Computing Institute highlighted the critical role of verifying such AI systems. Pre-recorded footage is good for testing the routine and trivial cases (e.g. a cyclist riding along the road), he said, but not for complete coverage of the long tail of “critical situation with low probability” cases that the system may not implement or may not be tested for.

(I apologize for the quality of the photos of the slides; the presentation was held in a large, well-attended hall.)

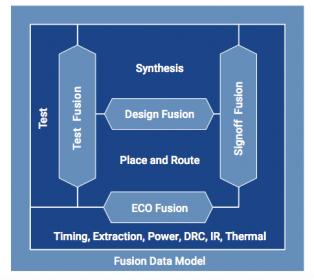

The solution he proposed was a High Performance Computing (HPC) system that analyzes the existing stimuli data and generates additional frames for such missing cases, to achieve a “high variability of input data”, just as one would do with constraint-driven randomization of inputs in the verification of VLSI systems. Such a system should take the “real” reality, as recorded in actual footage, as a basis and augment it with a Digital Reality of additional scenario instances, as described in the following slide:

However, such a system faces multiple challenges and requirements in analyzing the existing scenarios and creating additional valid ones:

Given this extensive list of requirements, it is no wonder that the first part of the presentation focused on the HPC platform needed to run such analysis and simulation. Since AI for Autonomous Driving (and possibly other use cases) is meant to be ubiquitous (and therefore cost-sensitive), I wonder whether this heavy-lifting computing system will be a viable solution for the “masses”.

Furthermore, a verification engineer looking at some of the slides above might be tempted to think constrained-random is the solution. A researcher might see Monte-Carlo simulation in it, and others might see their own domain-specific solution. The real solution would most likely be all of those and none of them, as the problem at hand definitely requires a new paradigm.

Speaking from a verification engineer's standpoint, constrained-random, while good at generating extremely varied stimuli, always requires a set of rules, i.e. the constraints. Its native field of application was generating unexpected combinations within well-defined protocols. In autonomous driving there are really no hard rules, as the Uber incident demonstrates quite well. Rather than starting from constraints, building a well-defined solution space and then trying to pick the most varied and interesting points in it, this problem requires starting from real-life scenarios and then augmenting them with interesting variance that follows only soft rules.

Instead of fighting the last war, verification engineers should probably start looking elsewhere for inspiration, new technologies and new methodologies for generating stimuli, maybe in the machine-learning domain. On the other hand, machine-learning algorithms are often a “black box” (with these inputs, those are the outputs), giving neither the system nor the person designing it enough insight into what can be improved and how.
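To make this contrast a bit more concrete, here is a toy Python sketch. It is not taken from the presentation, and `Scenario`, `plausibility`, `is_crossing` and all parameter values are hypothetical simplifications. The first generator is constrained-random: it samples from a space bounded by a hard rule (cyclists only ride along the road), so a crossing cyclist can never appear, however many samples are drawn. The second starts from a few recorded scenarios, perturbs them, and keeps each variant with a probability given by a soft plausibility score, so unusual situations show up less often but are never excluded.

```python
import math
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """Hypothetical, highly simplified description of one road scenario."""
    cyclist_heading_deg: float  # 0 or 180 = riding along the road, 90 = crossing it
    speed_kmh: float            # cyclist speed
    daylight: bool              # True for daytime footage


def constrained_random(rng: random.Random, n: int) -> list[Scenario]:
    """Constrained-random style: rejection-sample a space defined by hard rules."""
    out = []
    while len(out) < n:
        s = Scenario(rng.uniform(0, 180), rng.uniform(5, 35), rng.random() < 0.5)
        # Hypothetical hard rule: heading within 10 degrees of the road direction.
        if min(s.cyclist_heading_deg, 180 - s.cyclist_heading_deg) <= 10:
            out.append(s)
    return out


def plausibility(s: Scenario) -> float:
    """Soft rule: unusual scenarios get a lower score, but never zero."""
    crossing = math.sin(math.radians(s.cyclist_heading_deg))  # 0 along, 1 across
    return math.exp(-1.5 * crossing) * (1.0 if s.daylight else 0.6)


def augment(recorded: list[Scenario], rng: random.Random, n: int) -> list[Scenario]:
    """Perturb recorded scenarios; keep each variant with probability = plausibility."""
    out = []
    while len(out) < n:
        base = rng.choice(recorded)
        variant = replace(
            base,
            cyclist_heading_deg=(base.cyclist_heading_deg + rng.gauss(0, 45)) % 180,
            speed_kmh=max(0.0, base.speed_kmh + rng.gauss(0, 5)),
            daylight=base.daylight if rng.random() < 0.8 else not base.daylight,
        )
        if rng.random() < plausibility(variant):
            out.append(variant)
    return out


def is_crossing(s: Scenario) -> bool:
    """A cyclist moving roughly across the road."""
    return 60 <= s.cyclist_heading_deg <= 120


if __name__ == "__main__":
    rng = random.Random(0)
    recorded = [Scenario(0.0, 20.0, True), Scenario(180.0, 15.0, True)]
    cr = constrained_random(rng, 1000)
    aug = augment(recorded, rng, 1000)
    print("crossing cyclists, constrained-random:", sum(map(is_crossing, cr)))
    print("crossing cyclists, augmented:", sum(map(is_crossing, aug)))
```

The first count is always zero, because the hard constraint rules out the very case that matters; the second is small but non-zero. The acceptance step in `augment` is plain rejection sampling with a hand-written score; in a real system such soft rules would presumably be learned from data, which is where the machine-learning domain might come in.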

Meanwhile, Intel/Mobileye just announced a large deal to supply its self-driving technology for 8 million cars of a European automaker, starting as soon as 2021, and released footage of autonomous driving in busy Jerusalem. And the debate about how many and what types of sensors (cameras, LIDARs and radars) a fully autonomous vehicle needs is still on.

However, as discussed above, no matter how many “eyes” such cars have, the true challenge will be to verify that the “brains” behind such eyes are making the right decisions at the critical moments.

(Disclaimer: This early post by Intel claims that the Mobileye system would have correctly detected and prevented the fatal accident).

Moshe Zalcberg is CEO of Veriest Solutions, a leading ASIC Design & Verification consultancy, with offices in Israel and Serbia.

*My thanks to Avidan Efody, HW/SW Verification expert, for reviewing and contributing to an earlier version of this article.