While I was writing “Mobile Unleashed: The Origin and Evolution of ARM Processors In Our Devices,” it was very clear to me that ARM was an IP phenomenon that would never be repeated. Clearly I was wrong: we now have RISC-V, with an incredible adoption rate, a full-fledged ecosystem, and top-tier implementers that now include eSilicon.

I spoke with Mike Gianfagna and Lou Turnullo from eSilicon about their recent announcement. It is a small-world story: Mike and I worked together at Zycad, and Lou and I worked together at Virage Logic. Virage took over the Zycad building, so neither my commute nor the color of my office changed. Mike and Lou are very approachable guys with a wealth of experience, so if you have the opportunity, definitely approach them.

This announcement is really about SerDes, which has changed quite a bit over the years. You will be hard pressed to find a leading-edge chip without SerDes inside, so this is a big deal for semiconductors. Earlier SerDes were analog, and in an analog SerDes all characteristics are “hard coded” and cannot be changed. Newer SerDes are more complex and must operate in a wider variety of system configurations, so they are often DSP-based rather than analog.

The eSilicon SerDes is DSP-based. With this architecture, firmware can control the characteristics of the SerDes, and that is the job of the SiFive E2 embedded processor. New SerDes must operate in a wide variety of system configurations: different backplanes, temperature and humidity extremes, and connector types. All of this requires configuring the SerDes equalization functions to match the operating environment so that the SerDes delivers the best power and performance.
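To make the idea of firmware-configured equalization concrete, here is a minimal Python sketch. It is not eSilicon's firmware or architecture, which the announcement does not describe. It assumes a toy channel (`channel_taps`), a 5-tap feed-forward equalizer, a known training sequence, and a textbook LMS update; every name and number in it is hypothetical and chosen only for illustration.

```python
import random

PAM4_LEVELS = (-3.0, -1.0, 1.0, 3.0)

def fir(samples, coeffs):
    """Causal FIR filter; used here for both the channel and the equalizer."""
    out = []
    for n in range(len(samples)):
        out.append(sum(c * samples[n - k]
                       for k, c in enumerate(coeffs) if n - k >= 0))
    return out

def lms_train(received, training, num_taps=5, mu=0.005):
    """Adapt equalizer taps with LMS against a known training sequence."""
    taps = [1.0] + [0.0] * (num_taps - 1)  # start as a pass-through
    for n in range(len(received)):
        window = [received[n - k] if n - k >= 0 else 0.0
                  for k in range(num_taps)]
        y = sum(t * x for t, x in zip(taps, window))
        err = training[n] - y
        taps = [t + mu * err * x for t, x in zip(taps, window)]
    return taps

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(1)
channel_taps = [1.0, 0.4, 0.2]  # hypothetical channel with post-cursor ISI

def transmit(count):
    """Send random PAM4 symbols through the toy channel with mild noise."""
    symbols = [rng.choice(PAM4_LEVELS) for _ in range(count)]
    received = [r + rng.gauss(0.0, 0.05) for r in fir(symbols, channel_taps)]
    return symbols, received

# "Firmware" tunes the taps for this particular channel.
train_symbols, train_received = transmit(5000)
taps = lms_train(train_received, train_symbols)

# Compare untuned (pass-through) and tuned settings on fresh data.
test_symbols, test_received = transmit(2000)
mse_default = mse(test_symbols, fir(test_received, [1.0]))
mse_tuned = mse(test_symbols, fir(test_received, taps))
print("untuned MSE:", round(mse_default, 4))
print("tuned MSE:  ", round(mse_tuned, 4))
```

Changing `channel_taps` and re-running the training step stands in for moving the same SerDes to a different backplane or connector: the filter structure stays fixed and only the programmed coefficients change.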

Bottom line: DSP-based SerDes can be “tuned” to the operating environment whereas analog SerDes cannot. Another interesting application is continuous calibration, where the SerDes is tuned and re-tuned over time to adapt to changes in the operating environment and even to changes in the SerDes itself as it ages.
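Continuous calibration can be sketched in the same spirit. The example below is an assumption-laden illustration, not a description of the eSilicon design: it models a channel whose single post-cursor coefficient `h1` drifts slowly (standing in for temperature change or aging) and compares equalizer taps that are frozen after initial tuning with taps that keep adapting through a decision-directed LMS update. The function `run_link`, the drift profile, and all constants are hypothetical.

```python
import random

PAM4_LEVELS = (-3.0, -1.0, 1.0, 3.0)

def slicer(y):
    """Decide the nearest PAM4 level for an equalized sample."""
    return min(PAM4_LEVELS, key=lambda level: abs(level - y))

def run_link(adapt, num_symbols=20000, mu=0.002, seed=7):
    """Simulate a drifting channel; return the MSE over the last 2000 symbols."""
    rng = random.Random(seed)
    taps = [1.0, -0.2, 0.04, -0.008]  # tuned for the initial channel (h1 = 0.2)
    history = [0.0] * len(taps)       # recent received samples, newest first
    prev_symbol = 0.0
    tail_errors = []
    for n in range(num_symbols):
        # Hypothetical slow drift of the post-cursor term from 0.2 to 0.5.
        h1 = 0.2 + 0.3 * n / (num_symbols - 1)
        symbol = rng.choice(PAM4_LEVELS)
        received = symbol + h1 * prev_symbol + rng.gauss(0.0, 0.03)
        prev_symbol = symbol
        history = [received] + history[:-1]
        y = sum(t * x for t, x in zip(taps, history))
        if adapt:
            # Decision-directed LMS: no training data, use the slicer output.
            err = slicer(y) - y
            taps = [t + mu * err * x for t, x in zip(taps, history)]
        if n >= num_symbols - 2000:
            tail_errors.append((symbol - y) ** 2)
    return sum(tail_errors) / len(tail_errors)

frozen_mse = run_link(adapt=False)
tracking_mse = run_link(adapt=True)
print("frozen taps MSE:  ", round(frozen_mse, 4))
print("tracking taps MSE:", round(tracking_mse, 4))
```

Both runs use the same seed, so they see identical symbols and noise; the only difference is whether the taps keep following the channel as it drifts.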

eSilicon and SiFive put out a good press release so I have included it here. You can read more about the SiFive E2 Core HERE.

eSilicon Licenses Industry-Leading SiFive E2 Core IP for Next-Generation SerDes IP

Configurability of industry’s lowest-area, lowest-power core provided optimal solution for eSilicon

SAN MATEO, Calif. and SAN JOSE, Calif. – Aug. 7, 2018 – SiFive, the leading provider of commercial RISC-V processor IP, and eSilicon, an independent provider of FinFET-class ASICs, market-specific IP platforms and advanced 2.5D packaging solutions, today announced that, after extensive review and testing of available options in the market, eSilicon has selected the SiFive E2 Core IP Series as the best solution for its next-generation SerDes IP at 7nm.

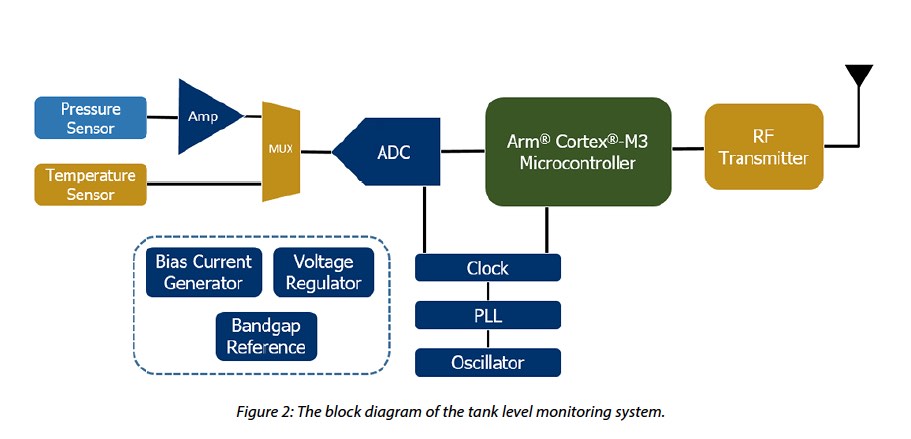

eSilicon’s 7nm SerDes IP represents a new breed of performance and versatility based on a novel DSP-based architecture. Two 7nm PHYs support 56G and 112G NRZ/PAM4 operation to provide the best power efficiency tradeoffs for server, fabric and line-card applications. The clocking architecture provides extreme flexibility to support multi-link and multi-rate operations per SerDes lane.

“Today’s high-performance networking applications require the ability to balance power and density to effectively address increasing performance demands,” said Hugh Durdan, vice president of strategy and products at eSilicon. “SiFive’s E2 Core IP allows eSilicon to provide the flexibility and configurability that our customers require while achieving industry-leading power, performance, and area.”

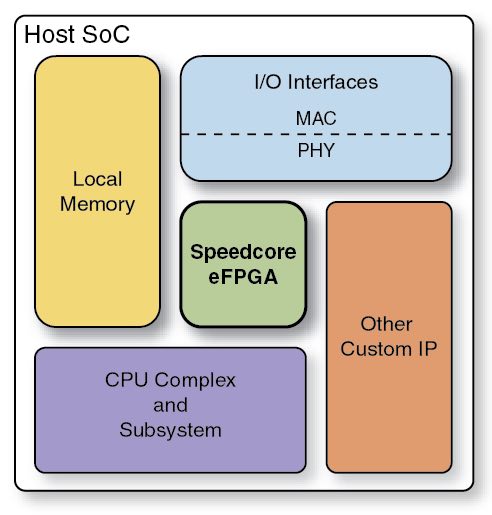

The SiFive E2 Core IP is designed for markets that require extremely low-cost, low-power computing, but can benefit from being fully integrated within the RISC-V software ecosystem. At one-third the area and one-third the power consumption of similar competitor cores, the SiFive E2 Core series is the natural selection for companies like eSilicon that are looking to address the challenges of advanced ASIC designs.

“eSilicon has a successful track record for leveraging the most advanced technologies to develop high-bandwidth, power-efficient IP for ASIC design,” said Brad Holtzinger, vice president of sales, SiFive. “Our E2 Core Series IP takes advantage of the inherent scalability of RISC-V to bring the highest performance possible to the demands of advanced ASICs. We look forward to working with eSilicon on its next-generation SerDes to address these demands.”

About SiFive

SiFive is the leading provider of market-ready processor core IP based on the RISC-V instruction set architecture. Led by a team of industry veterans and founded by the inventors of RISC-V, SiFive helps SoC designers reduce time-to-market and realize cost savings with customized, open-architecture processor cores, and democratizes access to optimized silicon by enabling system designers to build customized RISC-V based semiconductors. SiFive is located in Silicon Valley and has venture backing from Sutter Hill Ventures, Spark Capital, Osage University Partners and Chengwei Capital, along with strategic partners Huami, SK Telecom, Western Digital and Intel Capital. For more information, visit www.sifive.com.

About eSilicon

eSilicon is an independent provider of complex FinFET-class ASICs, market-specific IP platforms and advanced 2.5D packaging solutions. Our ASIC+IP synergies include complete 2.5D/HBM2 and TCAM platforms for FinFET technology at 16/14/7nm as well as SerDes, specialized memory compilers and I/O libraries. Supported by patented knowledge base and optimization technology, eSilicon delivers a transparent, collaborative, flexible customer experience to serve the high-bandwidth networking, high-performance computing, artificial intelligence (AI) and 5G infrastructure markets. www.esilicon.com