Mr. Venkata Sudhakar Simhadri is a serial entrepreneur with a proven track record in the semiconductor industry. He was the Founder, President & CEO of Gigacom Semiconductor LLC and the Founder/Director of Gigacom India (both companies were acquired by MosChip), and the driving force behind building their IP licensing and design services business with leading semiconductor companies.

Before Gigacom, Venkata was the Founder, President & CEO of Time-to-Market (TTM) from 1998 until its acquisition by Cyient in 2008, and then headed its Hi-Tech Business Unit until 2012. Venkata has more than 30 years of experience, primarily in the USA and India.

Mr. Venkata is currently the MD & CEO of MosChip Technologies Limited, Chairman of the IESA Semiconductor Manufacturing CIG (Core Interest Group), and Vice-Chairman of the IESA Hyderabad Chapter. He is also the mind behind the IESA AI Summit, launched in 2021, and was the General Chair of the recent blockbuster VLSI Design & Embedded Systems Conference 2023.

Tell us about your company.

MosChip Technologies is a publicly traded (BSE: 532407) semiconductor and embedded system design services company headquartered in Hyderabad, India, with more than 1,300 engineers located in Silicon Valley (USA), Hyderabad, Bengaluru, Ahmedabad, and Pune. MosChip provides turn-key digital and mixed-signal ASICs, design services, SerDes IP, and embedded system design solutions. Over the past two decades, MosChip has developed and shipped millions of connectivity ICs. For more information, visit moschip.com.

What problems are you solving?

MosChip is a semiconductor design and solutions company that offers a wide range of products, services, and intellectual property (IP) to serve various industries. The problems MosChip is solving across industries include:

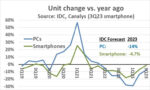

Semiconductor Design Challenges: The semiconductor industry has grown rapidly over the last few decades, driven by demand in emerging markets such as automotive, AI, and IoT. As a result, there is a severe shortage of experienced chip design engineers. MosChip has focused on training and developing chip design talent and on providing design services to the top semiconductor companies in the world.

Embedded System Development: MosChip has more than twenty years of track record in embedded product design and has built many products (both hardware and software) for defense, communications, and IoT applications. MosChip helps customers build prototypes, validate them, and release their products to market.

IP Portfolio: MosChip's IP portfolio includes pre-designed and verified intellectual property blocks, which can save time and resources for companies looking to integrate proven technology into their products. This is especially useful for shortening product development cycles.

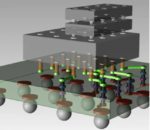

Industry-Specific ASIC Platforms/Solutions: MosChip has been investing in RISC-V based platforms for IoT, industrial, and automotive applications that include both analog/mixed-signal and digital building blocks. Leveraging these platforms, MosChip can provide a quick turnaround in building custom silicon (turn-key ASICs) for customers looking to optimize their products.

In summary, MosChip addresses a wide range of challenges across industries by offering semiconductor design services, a diverse IP portfolio, and embedded system design services. These services aim to help companies innovate, improve product quality, reduce development time and cost, and stay competitive in their respective markets.

What application areas are your strongest?

Our strongest application areas include consumer electronics, networking, industrial automation, automotive electronics, smart cities, government, and healthcare. In these domains, MosChip has a proven track record of delivering customized semiconductor and embedded solutions that enhance performance, reliability, and efficiency.

What does the competitive landscape look like and what are your collaborations across various sectors?

Our competitors are spread across the world and include some Indian IT services companies that offer similar design services. However, our collaborations with leading foundries (MosChip is a TSMC DCA partner), EDA companies such as Cadence, Synopsys, and Siemens, and IP companies give us a competitive edge. Most importantly, MosChip's dedicated focus on the semiconductor industry and its 20+ year track record set us apart.

What new features/technology are you working on?

We have been investing in technologies such as Artificial Intelligence (AI) and Machine Learning (ML) to enhance our semiconductor solutions. Additionally, we are expanding our capabilities in edge computing and edge applications to provide clients with more comprehensive and efficient solutions.

MosChip is also working on VIDYUT, a cutting-edge, fully integrated polyphase energy meter IC designed specifically for energy meter OEMs. The RISC-V based platform we are building for this IC has the potential to address many other applications.

How do customers normally engage with your company?

As mentioned, MosChip is one of India's semiconductor companies publicly traded on the Bombay Stock Exchange (BSE), which gives us basic exposure to customers. Beyond this, customers discover us through our personal business network and our digital presence (LinkedIn | Twitter | Instagram | Facebook | YouTube | Koo | website). MosChip is also an active member of multiple organizations such as IESA, and my role as Chairman of the IESA Semiconductor Manufacturing Core Interest Group (CIG) gives us great visibility. We also actively participate in the Semicon India conferences and other events related to embedded systems, AI/ML, and more.
