In a Washington Post column this past Sunday, Barry Ritholtz, a Wall Street money manager who writes a blog called The Big Picture, recounts the destruction that Apple has inflicted on a wide swath of technology companies (see “And then there were none”). He calls it “creative destruction writ large.” Ritholtz, though, is only accounting for what has occurred to date. I would contend that we are about to start round two, and the changes coming will be just as significant. If I were to guess, HP will soon decide to farm out its server business to Intel, and Intel will soon realize that it needs to step up to the plate for a number of reasons.

When HP hired Leo Apotheker, the ex-CEO of software giant SAP, the Board of Directors (which includes Marc Andreessen and Ray Lane, formerly of Oracle) implicitly fired the flare guns, signaling that the company was in distress and that radical changes were coming as it reoriented itself toward the software sphere of Oracle and IBM. To do this, HP had to follow in IBM’s footsteps by first stripping out PCs. IBM, however, sold its PC group to Lenovo back in 2004, before the last downturn. Unfortunately for HP, it will get much less for its PC business than it paid for Compaq.

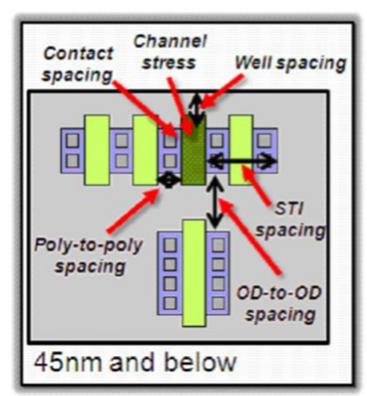

The next step for HP is risky but necessary. They need to consolidate server hardware development under Intel. Itanium-based servers are selling at a run rate of $500M a quarter at HP and now account for less than 5% of the overall server market, compared to IBM Power and Oracle SPARC, which together account for nearly 30% of server dollars. Intel and AMD x86 servers make up the rest (see the chart). In addition, IBM’s mainframe and Power server businesses are growing while HP’s Itanium is down 10% year over year.

Oracle’s acquisition of Sun always intrigued me: was it meant as a short-term effort to force HP to retreat on Itanium, or as a much longer-term strategy of giving away hardware with every software sale? When Oracle picked up Sun, Sun still held a solid #2 position in the RISC world, next to IBM. By taking on Sun, Oracle guaranteed SPARC’s survival and at the same time put a damper on HP gaining more share. New SPARC processors were not falling behind Itanium, as Intel had scaled back on timely deliveries of new cores at new process nodes. More importantly, the acquisition was a signal to ISVs (Independent Software Vendors) not to waste their time porting apps to yet another platform, namely Itanium. Oracle made sure that HP was seen as an orphaned child when it announced earlier this year that it was withdrawing support for Itanium.

There is only one architecture, at this moment, that can challenge SPARC and Power, and it is x86. It is in HP’s interest to consolidate on x86 and reduce its hardware R&D budget. If needed, a software translator can be written to get any remaining Itanium apps running on x86. Since the latest Xeon processors are three process nodes ahead of Itanium, there should be little performance difference. But what about Intel? Do they want to be the box builder for HP?

I would contend that Intel has to get into the box business and is already headed there. The chief issue holding them back is the reaction from HP, Dell and IBM. None of them is generating great margins on x86 servers. With regard to Dell, Intel could buy them off with a processor discount on the standard PC business, especially since Dell will now be the largest-volume PC maker. IBM is trickier.

But why does Intel want to go into the server systems business? The answer has several parts. From a business perspective, they need more silicon dollars as well as sheet metal dollars. Intel sees another $20-$30B opportunity in ramping up, and they will need it to counteract any flatness or drop in processor sales on the client side of the business. Earlier this year, Intel bought Fulcrum. If they build the boxes for the data center, then they have the potential to eat away at Broadcom’s $1B switch chip business.

A more interesting angle is the data center power consumption problem. Servers consume 90% of the power in a data center. Processors used to account for the majority of that power, but with the performance gap growing between processors and DRAM, and with the rise of virtualization, it has become a processor and memory problem. Intel is working on platform solutions to minimize power, but they expect to get paid for their inventions.

Intel has started to increase prices on server processors, justifying the increases by the reduction in a data center’s power bill. Over the course of the next few years they will let processor prices creep up, even with the looming threat of ARM. This is a new value proposition that can be taken one step further. If they build the entire data center box with processors, memory, networking and eventually storage (starting with SSDs), then they can maximize the value proposition to data centers, which may not have alternative suppliers.

In some ways Intel is at risk if they just deliver silicon without building the whole data center rack. There are plenty of design groups at places like Google, Facebook and others who understand the tradeoffs of power and performance and would like to keep cranking out new systems based on the best available technology. By putting its big foot down, Intel could eliminate these design groups and make it more difficult for a new processor entrant (AMD or ARM-based) to enter the game.