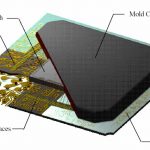

Since the introduction of Apple's iPhone and then the follow-on iPad, Wall Street's frame of reference has been that Intel would be playing defense as the PC market slid into oblivion, and that a terminal value should therefore be placed on the company. Intel's Q4 2011 earnings conference call gave the analysts a nice jolt, as Paul Otellini signaled that a massive home run into the upper, upper decks is coming. As a result, a whole slew of fabless companies are about to see their functionality integrated into Intel's smartphone, tablet, and ultrabook platforms, courtesy of the low-power 22nm and 14nm trigate processes.

The old model was that fabless was safe because it required little upfront capex as the semiconductor market rotated in and out of boom and bust cycles. Therefore the higher P/E ratios accrued to Qualcomm, Broadcom, Altera, Marvell, and Xilinx, while Intel sat at a lowly P/E of 10. In addition, the sovereign debt crisis, which had its beginnings over 10 years ago, struck fear into ordinary investors, who fled equities for the absolute safety of low-yielding government bonds, resulting in further P/E compression. However, as governments begin to print their way out of their massive liabilities, we may see a new safe haven for investors: American export-oriented companies that sit on massive cash hoards and will be able to borrow at lower rates than the broke sovereigns or their would-be competitors.

Intel knows this and is leveraging its strong financial position into an even bigger 2012 investment in 14nm capacity and R&D, which can only mean that it is on a path to implement a mobile and datacenter roadmap that completely cannibalizes companies like Broadcom and Marvell in networking and the ARM camp in mobile. The only possible holdout will be Qualcomm, with its communications IP.

On top of last year's massive $10.8B in capex spending, Intel plans to spend $12.5B to build and outfit two 14nm fabs, and thereby have in place by late 2013 twice the fab footprint it had at 32nm in 2011. In addition, R&D spending is increasing 21% to $10.3B. That implies a lot of tapeouts coming at 22nm and 14nm this year alone.

Intel has guided this year's revenue to grow by only "high single digits," which means it will add only about $5B to last year's $54B. However, this guidance takes into account neither the likely addition of Apple as a customer by the end of the year nor Intel's very conservative assumption about ultrabook uptake relative to today's notebooks. A scenario in which ultrabooks heavily cannibalize notebooks, or add incremental growth, would mean much higher ASPs at the same volume, since Intel charges a nice premium for low power.
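As a rough check on the guidance arithmetic, here is a minimal sketch that assumes "high single digits" means somewhere between 7% and 9% growth on the $54B base (the range is an assumption, not Intel's stated figure):

```python
def projected_revenue(base_billions: float, growth_rate: float) -> float:
    """Revenue after one year of growth at the given rate."""
    return base_billions * (1 + growth_rate)


base = 54.0  # last year's revenue in $B, per the text
# Assumed reading of "high single digits": 7% to 9%.
for rate in (0.07, 0.08, 0.09):
    added = projected_revenue(base, rate) - base
    print(f"{rate:.0%} growth -> +${added:.1f}B, total ${base + added:.1f}B")
```

Only the top of that assumed range gets close to the roughly $5B increment, which is why any Apple or ultrabook upside would sit on top of the guidance.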

Going into 2013, Intel plans to launch Haswell, a new microarchitecture that, I would strongly speculate, will take the TDP of the ULV parts down from 17W to under 10W. In the 1990s, Intel's business model paid for higher MHz. Now the model pays for lower and lower TDP and higher integration. With a lower TDP, Intel will open up the next big revenue driver in tablets and ultrabooks: an Intel 3G/4G/LTE baseband common across all mobile devices. Who wants to be limited by Wi-Fi when cellular is everywhere? Intel will argue that its communications solution, built in the latest trigate process, overcomes the power problems of the silicon coming out of the fabless players, problems that ruin battery life and make enclosures more difficult to design.

Against this backdrop, it is important to remember that the other components in the tablet and ultrabook continue to drop in price (e.g., screens, HDDs, and flash), which enables Intel to increase its relative BOM content in a market with rising unit volume. Therefore, Intel's revenue growth should be more than linear with PC or tablet unit growth.
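The "more than linear" point follows from revenue being units times Intel content per device, so the two growth rates compound. A minimal sketch; the 10% unit growth and 5% content growth below are hypothetical inputs for illustration, not figures from Intel or this article:

```python
def revenue_growth(unit_growth: float, content_growth: float) -> float:
    """Revenue = units x Intel content per device, so the growth rates multiply."""
    return (1 + unit_growth) * (1 + content_growth) - 1


# Hypothetical inputs, chosen only to illustrate the compounding.
units = 0.10    # assumed unit growth
content = 0.05  # assumed growth in Intel BOM content per device
g = revenue_growth(units, content)
print(f"units +{units:.0%}, content/device +{content:.0%} -> revenue +{g:.1%}")
```

Any positive growth in content per device pushes revenue growth above unit growth, which is the sense in which it is more than linear.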

If Intel succeeds with its plans, it will put tremendous pressure on TSMC and its ability to keep up, since TSMC relies on Qualcomm, nVidia, Altera, and Broadcom to fund leading-edge production. It also raises a question about Samsung's plans to use Intel-based solutions versus its internal ARM-based chips. Samsung has long been a PC player and will need to use Intel x86 in corporate tablets running Win 8. Its smartphone and consumer tablet business is not just open to question but open to Intel commoditization.

To accelerate the market's move to Intel silicon in smartphones and tablets, Intel has just announced agreements with Motorola and Lenovo, the former home of Rory Read (now CEO of AMD). Lenovo has been gaining share in the PC market at the expense of Dell and HP. With this deal, Intel is using the world's lowest-cost supplier to threaten the existing Android tablet and smartphone leaders, HTC and Samsung, and it will put extreme pressure on ARM processor suppliers later this year. Because Motorola Mobility is being acquired by Google, the Motorola deal means that Intel will get preferential treatment in the tuning of the latest Google Android O/S for x86. ARM will now move to the back of the O/S bus.

At the end of the day, the real story is that Moore's Law is an even more destructive force than it was two years ago, as the cost for the fabless guys to play in Intel's court has risen dramatically. The barbed-wire fences have just been moved out, and Intel's ranch has grown.

FULL DISCLOSURE: I am Long AAPL, INTC, ALTR and QCOM