Most IC designers I talk to really enjoy the creative process of developing a new SoC design, debugging it, and then watching it go into production. They don’t much like spending time learning how to make their EDA tools work together in an optimal IC design flow, where they may have a dozen tools, each with dozens of options. Fortunately for Synopsys tool users, there is now a shortcut in the form of the Lynx Design System, which has already captured the digital design flow so you can focus on design instead of CAD integration.

Continue reading “How Is Your IC Design Flow Glued Together?”

The Semiconductor Landscape In A Few Years?

The huge gap between the revenue of semiconductor design and manufacturing (~$300B) and that of EDA tools, services and silicon IP combined (~$6B) inspired me to look more deeply into the overall semiconductor arena in today’s context and to try to decipher some trends that should emerge in the near future. Although this revenue gap has always existed, with SoCs and the ever-increasing complexity on a single wafer over the last few years, the gap seems to be justified and may open up new chapters in the semiconductor arena. I have been watching developments in this space for a few years, and the industry seems to be at an inflection point. This prompted me to write this article.

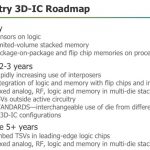

Against the backdrop of ever-increasing demand for higher density at lower nodes, which brings ever more intricate design rules, process rules and manufacturability constraints, it is evident that design needs to move much closer to manufacturing. Some of this is being addressed by semiconductor IP, specialized components targeted at particular nodes. Possibly because of this, the IP business has grown considerably; as Eric Esteve rightly pointed out in his January 2012 article, Q3 2011 silicon IP revenue ($568M) surpassed the overall revenue of CAE ($566M). With geometry reduction, power has become critical and taken the front seat, giving rise to high-k metal gate and vertical-gate (FinFET) transistors on the technology side, and to CAE techniques such as power domains on top of clock gating. There are other manufacturing complexities too: shrinking metal pitch gives rise to coupling effects and signal integrity issues, and at 20nm and below it calls for double (and higher) patterning, which in turn calls for new routers that can assign shapes on the same layer to different masks. The common theme emerging is that lower geometries (20nm and below), once made to work, can deliver large savings in area and power as well as very high speed. To increase capacity, and possibly to reduce assembly complexity, 3D ICs are on the horizon.
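Mask assignment for double patterning is commonly modeled as two-coloring a conflict graph: any two shapes on the same layer that are closer than the same-mask minimum spacing must go on different masks, and an odd cycle of such conflicts cannot be split with two masks at all. The Python sketch below illustrates only that idea; the shape IDs, the `conflicts` list and the `assign_masks` function are hypothetical, and production routers use far more elaborate decomposition.

```python
from collections import deque


def assign_masks(shapes, conflicts):
    """Two-color a double-patterning conflict graph with breadth-first search.

    shapes:    iterable of hypothetical shape IDs on one metal layer
    conflicts: pairs (a, b) spaced closer than the same-mask minimum,
               so a and b must be printed on different masks
    Returns a dict shape -> mask (0 or 1), or None if an odd conflict
    cycle makes the layout non-decomposable with two masks.
    """
    adjacency = {s: [] for s in shapes}
    for a, b in conflicts:
        adjacency[a].append(b)
        adjacency[b].append(a)

    mask = {}
    for start in adjacency:
        if start in mask:
            continue
        mask[start] = 0
        queue = deque([start])
        while queue:
            s = queue.popleft()
            for n in adjacency[s]:
                if n not in mask:
                    mask[n] = 1 - mask[s]  # neighbor goes on the other mask
                    queue.append(n)
                elif mask[n] == mask[s]:
                    return None  # odd cycle: a same-mask conflict is unavoidable
    return mask


# Three wires in a row: decomposable (A and C share a mask).
print(assign_masks(["A", "B", "C"], [("A", "B"), ("B", "C")]))  # {'A': 0, 'B': 1, 'C': 0}
# Three mutually close wires form a triangle: not decomposable with two masks.
print(assign_masks(["A", "B", "C"], [("A", "B"), ("B", "C"), ("A", "C")]))  # None
```

A `None` result means the layout itself has to change, for example by moving a wire, which is one reason double patterning reaches into routing rather than being purely a post-processing step.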

Now let us look at the economic and business scenario in this arena. Foundries have led the way in semiconductor technology development, so they will naturally hold the largest share. Next come the dominant application players. Wireless is the fastest-growing segment, and in that market Qualcomm is the giant, with huge cash reserves. However, Qualcomm does not have a foundry and hence has to share its profit margin with its foundries. Samsung is present in home appliances, entertainment, smartphones and more. Then there are memory and storage players like Micron and ST. Silicon IP, a very important element, is spread across all of these, with ARM the dominant player in that space. Then there is EDA (CAE), serving all of these spaces according to their needs. While large technology advances have taken place and more are under way, economic pressure has increased. The number of players in each of these spaces has grown while consumer purchasing power has declined, resulting in depressed profit margins, mostly in single digits. There is clearly a case for consolidation in each of these spaces. What seems obvious is that high-end foundries combined with design will lead the overall semiconductor landscape. Here are some of the possible scenarios I can think of:

Foundries – Intel and TSMC will lead the way, being ahead of the rest in process nodes and hence best placed to enable high-end SoCs. Samsung, GlobalFoundries and SMIC will follow.

Appliances and storage – In home appliances, automotive, entertainment and storage, Samsung, Micron and ST will remain the leaders.

Wireless and mobile applications – Qualcomm will continue to lead the way. It needs to find a way to reduce or eliminate the margin paid to the foundry, and given Qualcomm’s financial muscle, that could bring a big surprise.

Semiconductor IP – This brainchild will continue to grow in the design IP space. ARM, dominant in processors, can grow further, and Qualcomm, the communication IP player, will continue to grow. Other IP players may consolidate with ARM and Qualcomm.

EDA – Synopsys, Cadence and possibly Mentor will continue to lead the EDA tools space. It has often been suggested that an EDA company should get a share of each chip produced with its tools. However, the important point to note is that an EDA company does not provide any design IP; it provides tools whose development is driven by foundry requirements. Hence, in a broader sense, EDA is a service to the foundry: new foundry technology drives enhancements to EDA tools to cater to or accommodate it. Moreover, in the SoC age, design is more and more IP- and foundry-driven, so it makes sense for EDA tools to work in association with foundries. When foundries consolidate, it will not be a surprise if major players like Intel and TSMC acquire big EDA players like Synopsys, Cadence and Mentor.

I have not talked about the computing space (PCs, notebooks, etc.). With the arrival of the iPad and media tablets, that space will be led by experts like Apple, Intel and IBM. Of course, in the overall ecosystem and design chain there will remain multiple players in every domain, but they will operate as services to the consolidated giants.

Apple blows away their numbers

Well, it looks like everyone (including me) was way too conservative about Apple’s iPhone sales last quarter. Analysts were expecting Apple to sell 30M iPhones and 13M iPads. In fact, they sold 37M iPhones, almost a quarter more than expected, and over 15M iPads. Apple even sold more iPads than HP, the largest PC manufacturer, sold PCs. Yes, that’s an apples-to-oranges comparison, but it is clear that the tablet market will have a real impact on the PC market as we move into the post-PC world. And Apple didn’t do badly in that space either, selling 5.2M Macs too.

Apple have now sold 350M iOS devices, 62M of them (nearly 18%) in Q4 of last year alone. I still think that last quarter was somewhat anomalous, following the low Q3 numbers, since a lot of people waited for the iPhone 4S. The market share numbers seem to be Apple at 43% and Android at 47%. Respected analysts are still predicting that WP7 phones (mainly through Nokia) will surpass iPhone sales in a couple of years, but I’m not sure I find that credible.

On their earnings call, Apple said that there were supply chain limitations during the quarter, so presumably they could have sold even more iPhones and iPads.

Apple is now the #1 computer manufacturer by volume and nearly #1 by revenue (HP is still a bit bigger). It may well be the most valuable company in the world tomorrow: when the market opens it is expected to surpass ExxonMobil, as it did for a short time last year. They are sitting on nearly $100B in cash. They are also now the largest purchaser of semiconductors in the world, at $17B (according to Gartner).

To put Apple’s achievement in perspective, they grew revenue by $47B in 2011 versus 2010. That’s like creating an Intel from scratch in one year (Intel’s 2011 revenue should be a bit over $50B).

Apple’s Blowout Earnings: Welcome to 2012!

Apple’s blowout earnings for the quarter that just ended have huge ramifications for the entire semiconductor industry, as suppliers either align much more closely with Apple or figure out how to minimize the damage to come through the rest of 2012. The immediate implication is that Wall St. will likely toss to the sidelines any semiconductor vendor or foundry that is not clearly in the Apple camp today or in the very near future. Second, and more important, this enhances my confidence that Intel will be supplying silicon to Apple by the end of the year and will have put in place a multi-year processor development partnership.

In the past quarter, Apple was able to close the gap with Android smartphones by increasing iPhone market share by 17% (see graph to the right). They are likely on a path to overtake Android sometime in the next two quarters. Combined with the strong growth in iPads and the Mac line, Apple has carved out a position that will be hard to assail. Looking at the stock prices of various semiconductor vendors after Apple reported, one sees that Qualcomm and Broadcom are viewed as big beneficiaries. On the flip side, nVidia was down, as its market opportunity with Tegra in phones and tablets will now shrink. In addition, it will now have to battle AMD for Apple’s Mac business.

As mentioned in several previous blogs (here and here), Intel’s large capex spending in 2011 to build out 22nm, and this year’s even larger capex to build two new 14nm fabs, thus doubling capacity, only make sense if they have Apple as a customer. In addition, the huge 21% increase in Intel’s R&D budget is likely to go beyond the development of purely x86 processors and 4G/LTE baseband communications chips. Apple has plenty of reasons to want to leverage an Intel relationship. First and foremost, it must reduce the risk of depending only on Samsung; second, there is a lot Intel can offer in terms of future processor design and technology.

Last summer, I speculated that a joint x86-ARM processor effort between Apple and Intel, supporting simultaneous iOS and OS X operating systems on tablets and MacBook Air PCs, could be in the works. The benefit to the user is that iOS can run most of the time (non-Office mode) for the best battery life and fastest response time. With the x86 functionality, Apple could sell the corporate world a much higher-priced tablet that ticks the boxes for both iOS applications and Office applications, and therefore blunt the Windows 8 tablet attack coming from PC manufacturers like Dell and HP starting in late 2012. Dell and HP’s only roadblock to keeping Apple out of the corporate market would be gone, and they would in fact be at a disadvantage, lacking support for iOS applications on their tablets. Suddenly the corporate world shifts to Apple. Microsoft will be the big loser.

For consumers, the story also gets stronger as Apple offers the same CPU, but with the x86 disabled, in entry-level models. With the click of a button in iTunes, users will be given the opportunity to upgrade at a reasonable price point that provides generous margins to Apple. The reconfiguration will, of course, be done remotely.

Apple, as one can see, is in the driver’s seat, and although they are now the most valuable company in the world in terms of market cap, they have only just begun to tap into the rich veins of the consumer and corporate computing markets. To get where they are going, they will need to enter into (if they haven’t already) a heavy engineering collaboration with Intel on new processors on the leading-edge process combined with the latest packaging technology. To maximize impact, this collaboration must already be in place for new processors arriving 2, 4 and maybe as far as 6 years down the road.

FULL DISCLOSURE: I am Long AAPL, INTC, QCOM, ALTR

Manage Your Cadence Virtuoso Libraries, PDKs & Design IPs (Webinar)

Users of Cadence Virtuoso tools for IC layout and schematics can make their design flow easier by using design data management tools from ClioSoft. Keeping track of versions across schematics, layout, IP libraries and PDKs can be daunting. Come and learn more about this at a webinar hosted by ClioSoft next Tuesday.


Going up…3D IC design tools

3D and 2.5D (silicon interposer) designs create new challenges for EDA, and not all of them are in the most obvious areas. Mentor has an interesting presentation on what is required for verification and testing of these types of designs. Obviously it is somewhat Mentor-centric, but in laying out the challenges it is pretty much vendor-agnostic.

The four big challenges identified are:

- physical verification for multi-chip packages using silicon interposers and through-silicon vias (TSVs)

- layout-versus-schematic (LVS) checking of 3D stacks, including inter-die connectivity

- parasitic extraction for silicon interposers and TSVs

- manufacturing test of 3D stack from external pins

The first three challenges, which involve updating the physical verification flow to handle 3D, are incremental improvements on existing technology. One complication is that the technologies (and hence the rules) used on each die may be different. But fundamentally, physical verification can be done one die at a time; LVS still compares netlists, just bigger and more complex ones; and parasitic extraction can also be done one die at a time, although inter-die effects may need to be modeled. One area that does require a lot more attention is ensuring that the TSVs on one die really do line up with the appropriate connection points on the die underneath, which is why circuit extraction cannot be done entirely one die at a time.
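To make the TSV alignment point concrete, here is a minimal Python sketch of an inter-die landing check. It assumes, hypothetically, that both dies’ pins have already been transformed into one stack coordinate system and that net names have been matched across dies; the `Pin` type, `check_tsv_alignment` and the 1 µm tolerance are illustrative, not any tool’s API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pin:
    net: str
    x: float  # stack coordinates in microns (assumed common origin)
    y: float


def check_tsv_alignment(upper_tsvs, lower_pads, tolerance_um=1.0):
    """Report upper-die TSVs that do not land on a same-net lower-die pad."""
    errors = []
    for tsv in upper_tsvs:
        # Nearest landing pad on the die underneath (None if there are none).
        nearest = min(
            lower_pads,
            key=lambda p: math.hypot(p.x - tsv.x, p.y - tsv.y),
            default=None,
        )
        if nearest is None or math.hypot(nearest.x - tsv.x, nearest.y - tsv.y) > tolerance_um:
            errors.append(f"{tsv.net}: TSV at ({tsv.x}, {tsv.y}) has no landing pad")
        elif nearest.net != tsv.net:
            errors.append(f"{tsv.net}: TSV at ({tsv.x}, {tsv.y}) lands on net {nearest.net}")
    return errors


upper = [Pin("VDD", 0.0, 0.0), Pin("D0", 10.0, 0.0), Pin("D1", 20.0, 0.0)]
lower = [Pin("VDD", 0.2, 0.0), Pin("D1", 10.0, 0.0)]
for err in check_tsv_alignment(upper, lower):
    print(err)
```

The brute-force nearest-pad search is quadratic, which is fine for a sketch but not for real designs with many thousands of TSVs.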

The entire manufacturing process is obviously impacted by 3D in a major way. But here’s one less obvious area that is impacted: wafer sort. When a wafer comes out of the fab, and before it is cut up into individual die, it is tested to identify which die are good and which are bad. But beyond some point, more testing is counterproductive: it is cheaper to waste money packaging up a few bad die and then discarding them at final test than to have a much longer wafer test (perhaps even requiring more testers). When you discard a bad die at final test, you are just wasting the cost of the package and the cost of assembly; the die itself was always bad.

With 3D, this tradeoff point moves. If you package up a bad die along with several good die in a stack, then you are discarding not only the bad die, the package and the assembly cost, but also all the other die in the stack, which are most likely good. So it makes sense to put a lot more effort into wafer sort. To make it worse, this is also more likely to happen: with several die in the package, the chance that all of them are good is lower than the chance that any one die is good.
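The tradeoff above can be put into numbers with a small Python sketch. It assumes independent defects, the same post-sort yield `y` for every die, and hypothetical costs in arbitrary units. Following the accounting in the text, it counts what a failed part throws away beyond the bad die itself: the package, the assembly and the good die bonded alongside it. The expected number of good die per part is `n*y`; those in passing parts (`n*y**n`) ship, so on average `n*(y - y**n)` good die are scrapped.

```python
def stack_yield(y, n):
    """Probability that all n die in a stack are good (independent defects)."""
    return y ** n


def expected_waste(y, n, die_cost, package_cost, assembly_cost):
    """Expected cost wasted per assembled part, excluding the bad die itself."""
    p_fail = 1 - stack_yield(y, n)
    # Good die that end up in a failing part and are scrapped along with it.
    good_dies_scrapped = n * (y - y ** n)
    return p_fail * (package_cost + assembly_cost) + good_dies_scrapped * die_cost


# Hypothetical costs: die 10, package 2, assembly 1.
print(f"{expected_waste(0.90, 1, 10, 2, 1):.2f}")  # 0.30  single die, 90% of sorted die good
print(f"{expected_waste(0.90, 4, 10, 2, 1):.2f}")  # 10.79 4-die stack, same sort quality
print(f"{expected_waste(0.99, 4, 10, 2, 1):.2f}")  # 1.29  4-die stack, more thorough sort
```

With a single die the scrapped-good-die term is zero, which is exactly why a shorter wafer sort can pay off there but not in a stack.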

Once the die are packaged up, the challenge is to get test vectors to any die that is not directly connected to package pins. A very disciplined approach is required to ensure that vectors can be elevated from the lowest die (typically the one connected to the package) to the upper levels.
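One way to picture this is a serial test path that enters at the bottom die and climbs the stack. The minimal Python sketch below assumes, hypothetically, that die 0 is bonded to the package pins and that each die below the target is switched into a 1-bit bypass that forwards data upward through TSVs, similar in spirit to the JTAG bypass register. Under that assumption, reaching an upper die costs only one extra bit per intermediate die. The `Die` type, chain lengths and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Die:
    name: str
    wrapper_chain_bits: int  # length of this die's (hypothetical) test wrapper chain


def scan_path(stack, target):
    """Segments a serial test vector passes through to reach stack[target].

    Assumes stack[0] is bonded to the package pins, each die below the
    target forwards data upward via a 1-bit bypass, and dies above the
    target are not in the path.
    """
    path = [(die.name, 1) for die in stack[:target]]
    path.append((stack[target].name, stack[target].wrapper_chain_bits))
    return path


def shift_cycles(stack, target, n_patterns):
    """Shift cycles needed to load n_patterns vectors (capture cycles ignored)."""
    bits_per_pattern = sum(bits for _, bits in scan_path(stack, target))
    return n_patterns * bits_per_pattern


stack = [Die("logic", 1000), Die("dram0", 200), Die("dram1", 200)]
print(scan_path(stack, 2))         # [('logic', 1), ('dram0', 1), ('dram1', 200)]
print(shift_cycles(stack, 2, 10))  # 2020
```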

Future challenges that are identified are:

- architectural exploration. 3D offers another degree of freedom, and all the usual floorplanning issues have to be extended to cover multiple floors

- thermal issues and signoff. TSVs and multiple die spread out heat to some extent, but nevertheless all the heat from the middle of the stack needs to get out

- physical stress especially in areas around TSVs (where the manufacturing process can affect transistor threshold voltages)
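The thermal point can be illustrated with a one-dimensional series thermal-resistance model. This is a deliberate simplification: it assumes all heat leaves through a heat sink on the top die, with no lateral spreading and no path through the package substrate. Under those assumptions, heat generated in the lower die has to cross every interface above it, so the die farthest from the sink run hottest. The function name and all resistance and power values are hypothetical; real thermal signoff uses much more detailed models.

```python
def stack_temperatures(powers_w, r_between_k_per_w, r_sink_k_per_w, t_ambient_c):
    """Steady-state 1D die temperatures, bottom (index 0) to top.

    r_between_k_per_w[i] is the thermal resistance between die i and die i+1;
    the top die sits on a heat sink with resistance r_sink_k_per_w to ambient.
    """
    n = len(powers_w)
    assert len(r_between_k_per_w) == n - 1
    temps = [0.0] * n
    # Every watt generated anywhere in the stack crosses the heat-sink resistance.
    temps[-1] = t_ambient_c + r_sink_k_per_w * sum(powers_w)
    for i in range(n - 2, -1, -1):
        # Heat generated in die 0..i must cross the interface above die i.
        heat_through = sum(powers_w[: i + 1])
        temps[i] = temps[i + 1] + r_between_k_per_w[i] * heat_through
    return temps


print(stack_temperatures([1.0, 1.0, 1.0], [0.5, 0.5], 1.0, 25.0))  # [29.5, 29.0, 28.0]
```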

The Mentor presentation is here.

High Speed USB 3.0 to reach Smartphone & Tablets in 2012… but which USB 3.0?

If you are not familiar with the SuperSpeed USB standard (USB 3.0), you may read this press release from Rahman Ismail, chief technology officer of the USB Implementers Forum, as simply claiming that USB 3.0 will be used in smartphones and media tablets this year… but if you are familiar with the new standard, you are just confused! In fact, the nickname for USB 3.0 is “SuperSpeed”, just as the nickname for USB 2.0 is “High Speed”. Calling USB 3.0 “High Speed USB 3.0” is a good way to confuse the reader!

Let’s try to clarify the story.

- High Speed USB (USB 2.0 running at 480 Mbit/s) has been supported in wireless handsets for a while, allowing them to exchange data with external devices (PC, laptop) and to charge the battery (up to 500 mA). USB 2.0 is also used inside the handset purely for chip-to-chip communication: this is HSIC (High-Speed Inter-Chip). Some chip makers use it, for example, to interface the application processor with the modem, as TI does in OMAP5.

- SuperSpeed USB (USB 3.0 running at 5 Gbit/s) offers a theoretical maximum data rate of 4 Gbit/s, or 500 MB/s (because of the PHY’s 8b/10b encoding scheme, as in PCIe Gen 2), and almost twice the battery charging current, up to 900 mA. Similarly, SSIC (SuperSpeed Inter-Chip), a version of USB 3.0 defined for chip-to-chip communication, can be used inside the handset.

- Adopting USB 3.0 also brings other benefits (SuperSpeed USB is a “Sync-N-Go” technology that minimizes user wait time, and with no device polling and lower active and idle power requirements it provides optimized power efficiency), but we will concentrate on data rate and battery charging here.

- A very interesting opportunity arose when the MIPI Alliance and the USB-IF decided that MIPI M-PHY (a specification for a high-speed serial physical layer, supporting data rates from 1.25 Gbit/s to 5 Gbit/s) could be used to support the USB 3.0 function. That is, a wireless chip maker can integrate the (digital) USB 3.0 controller in the core and use M-PHY instead of the USB 3.0 PHY. Because this chip maker is probably already using (or will use) MIPI M-PHY to support other MIPI specifications such as UFS, DigRF or LLI, it will already have acquired the technology expertise and can avoid developing or acquiring a new, complex PHY (USB 3.0), thus saving time and money!
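The SuperSpeed figures in the list above follow from simple arithmetic: 8b/10b encoding sends 10 line bits for every 8 data bits, so the 5 Gbit/s line rate carries at most 4 Gbit/s, or 500 MB/s, of payload. A quick Python check (the helper names are just for illustration):

```python
def payload_rate_gbps(line_rate_gbps, data_bits=8, coded_bits=10):
    """Usable bit rate after line coding (8b/10b: 10 line bits per 8 data bits)."""
    return line_rate_gbps * data_bits / coded_bits


def gbps_to_mbytes_per_s(gbps):
    """Convert Gbit/s to MB/s (decimal units, 8 bits per byte)."""
    return gbps * 1000 / 8


superspeed = payload_rate_gbps(5.0)       # USB 3.0 SuperSpeed line rate: 5 Gbit/s
print(superspeed)                         # 4.0
print(gbps_to_mbytes_per_s(superspeed))   # 500.0
print(900 / 500)                          # 1.8: USB 3.0 vs USB 2.0 charging current
```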

So far, the picture looks clear. Then comes the above-mentioned press release from the USB-IF, saying: “The data transfer rates will likely be 100 megabytes per second, or roughly 800 megabits per second (Mbps). Mobile devices currently use the older USB 2.0 technology, which is slower. However, the USB 3.0 transfer speed on mobile devices is much slower than the raw performance of the USB 3.0 technology on PCs, which can reach 5Gbps (gigabits per second). But transferring data using the current USB 3.0 technology at such high data rates requires more power, which does not fit the profile of mobile devices.” “It’s not the failure of USB per se, it’s just that in tablets they are not looking to put the biggest, fastest things inside a tablet,” Ismail said.

To me, this PR from the USB-IF is yet another way to limit the attractiveness of SuperSpeed USB, which can offer a 500 MB/s transfer rate, whereas this “High Speed USB 3.0” offers only 100 MB/s. Coming after Intel’s ever-delayed launch of a PC chipset with native USB 3.0 support, now expected in April this year even though the USB 3.0 specification was frozen in November 2008, it is as if a (malicious) wizard had decided to put a curse on SuperSpeed USB!

Will this standard have a chance to see the same adoption as the previous USB specification? Hmm…

By Eric Esteve – IPNEST – See also “USB 3.0 IP Survey”

Analog Panel Discussion at DesignCon

DesignCon is coming up, and the panel discussions look very interesting this year. The panel session I recommend most is “Analog and Mixed-Signal Design and Verification”, moderated by Brian Bailey, one of my former Mentor Graphics buddies and a fellow Oregonian.


Acquiring Great Power

“Before we acquire great power we must acquire wisdom to use it well”

Ralph Waldo Emerson

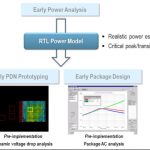

Making good architectural decisions about controlling power consumption and ensuring power integrity requires a good analysis of the current requirements and how they vary. Low-power designs (and today there really aren’t any other types) make this harder, since both clock gating and power gating can cause much bigger current transitions (especially when restarting a block) than designs where power is delivered in a more continuous way.
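Why bigger current transitions matter: the supply droop across the inductance of the package and power grid scales with how fast the current changes, V = L·di/dt. The Python sketch below uses a hypothetical 0.1 nH effective inductance and a hypothetical 1 A step to compare a block that wakes up abruptly with one whose current ramps over ten times as long. Resistive (IR) drop and on-die decoupling capacitance are ignored.

```python
def inductive_droop_v(inductance_h, delta_current_a, rise_time_s):
    """Supply droop across an effective inductance: V = L * di/dt."""
    return inductance_h * delta_current_a / rise_time_s


L_EFF_H = 0.1e-9  # hypothetical package + grid inductance, 0.1 nH
STEP_A = 1.0      # hypothetical current step when a gated block restarts

abrupt = inductive_droop_v(L_EFF_H, STEP_A, 1e-9)    # current ramps in 1 ns
gradual = inductive_droop_v(L_EFF_H, STEP_A, 10e-9)  # same step over 10 ns
print(f"{abrupt * 1e3:.0f} mV vs {gradual * 1e3:.0f} mV")  # 100 mV vs 10 mV
```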

The biggest challenge is that good decisions must be made early, at the architectural level, but the fully detailed design data required to make them accurately is not all available until the design is finished. And, of course, the design cannot be finished until the power architecture has been finalized. So the key question is whether early power analysis can deliver sufficient accuracy to guide power grid prototyping and chip-package co-design, and so break the cycle of this chicken-and-egg problem.

Early analysis at the RTL level seems to offer the best balance between capacity and accuracy. Levels above RTL don’t really support realistic full-chip power budgeting, and levels below RTL come too late in the design cycle and also depend on the power architecture. But even at RTL, the analysis must take account of libraries, process, clock gating, power domains and so on.
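As a rough illustration of how libraries, clock gating and power domains enter an RTL-level estimate, here is a first-order Python sketch using the textbook dynamic-power relation P = α·C·V²·f plus leakage. The `Block` fields, all numeric values, and the single shared supply voltage and clock are hypothetical simplifications; this is not how any particular RTL power tool computes power.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    activity: float         # average toggles per clock, e.g. from RTL simulation
    switched_cap_f: float   # effective switched capacitance (library/process data)
    gated_fraction: float   # fraction of cycles with the block's clock gated off
    leakage_w: float        # leakage while the block's power domain is on
    domain_on: bool = True  # False when the block's power domain is shut down


def block_power_w(b, vdd_v, freq_hz):
    """First-order power of one block: alpha*C*V^2*f over ungated cycles, plus leakage."""
    if not b.domain_on:
        return 0.0  # power-gated; residual leakage ignored in this sketch
    dynamic = b.activity * b.switched_cap_f * vdd_v ** 2 * freq_hz * (1 - b.gated_fraction)
    return dynamic + b.leakage_w


def chip_power_w(blocks, vdd_v, freq_hz):
    return sum(block_power_w(b, vdd_v, freq_hz) for b in blocks)


blocks = [
    Block("cpu", activity=0.1, switched_cap_f=1e-9, gated_fraction=0.5, leakage_w=0.01),
    Block("modem", activity=0.2, switched_cap_f=0.5e-9, gated_fraction=0.0,
          leakage_w=0.005, domain_on=False),
]
print(f"{chip_power_w(blocks, vdd_v=1.0, freq_hz=1e9):.3f} W")  # 0.060 W
```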

Getting a good estimate of overall power is one key parameter, but it is also necessary to discover the design’s worst current demands across all operating modes. Doing this at the gate level is ideal from an accuracy point of view but leaves it too late in the design cycle. Again, moving up to RTL is the solution. Clever pruning of the millions of vectors can locate the power-critical subset of cycles that consume the worst transient and peak power, and dramatically reduce the amount of analysis that needs to be done.
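The pruning step can be sketched in a few lines of Python. This is one simple heuristic, assumed here for illustration and not the method from the referenced analysis: given a hypothetical per-cycle power trace from RTL simulation, keep the `k` highest-power cycles (peak) and the `k` cycles with the largest change from the previous cycle (a proxy for transient current), and analyze only their union.

```python
import heapq


def peak_cycles(trace, k):
    """Indices of the k cycles with the highest power."""
    return sorted(heapq.nlargest(k, range(len(trace)), key=lambda i: trace[i]))


def transient_cycles(trace, k):
    """Indices of the k cycles with the largest change from the previous cycle."""
    return sorted(
        heapq.nlargest(k, range(1, len(trace)), key=lambda i: abs(trace[i] - trace[i - 1]))
    )


def critical_cycles(trace, k):
    """Union of peak and transient cycles: the subset kept for detailed analysis."""
    return sorted(set(peak_cycles(trace, k)) | set(transient_cycles(trace, k)))


power_mw = [1, 4, 2, 10, 3, 3, 8, 2]  # hypothetical per-cycle power from RTL simulation
print(critical_cycles(power_mw, 2))   # [3, 4, 6]
```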

Once all this is identified, it is possible to create an RTL Power Model that can be used for architectural power decisions, in particular planning the power delivery network (PDN) and doing true chip-package co-design. Doing this early avoids late iterations and the associated schedule slips, which are always incredibly costly in the consumer marketplace (where most SoCs are targeted today).

See Preeti Gupta’s full analysis here.

EDA Tool Flow at MoSys Plus Design Data Management

I’ve read about MoSys over the years, and this week I had the chance to interview Nani Subraminian, Engineering Manager, about the types of EDA tools they use and how design data management has been deployed to keep the design process organized. My background includes both DRAM and SRAM design, so I’ve been curious about how MoSys offers embedded DRAM as IP. They’ve basically made the DRAM look like an SRAM from an interface viewpoint (so no more complex RAS, CAS and OE timing).
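For readers who haven’t worked with DRAM, the point of an SRAM-style interface can be shown with a toy Python model. The wrapper below accepts a plain address and data and issues the row activate (RAS), column access (CAS) and precharge commands itself, so the user never sees them. This is a hypothetical sketch, not MoSys’s design; refresh, timing, banking and the output-enable signal are not modeled.

```python
class ToyDram:
    """Toy DRAM array: a column is only accessible while its row is open."""

    def __init__(self, rows, cols):
        self.cells = [[0] * cols for _ in range(rows)]
        self.open_row = None
        self.commands = []  # log of RAS/CAS-style commands actually issued

    def activate(self, row):  # RAS: open a row
        assert self.open_row is None, "precharge before opening another row"
        self.open_row = row
        self.commands.append(("ACT", row))

    def precharge(self):  # close the open row
        self.open_row = None
        self.commands.append(("PRE",))

    def read(self, col):  # CAS read from the open row
        assert self.open_row is not None, "no row open"
        self.commands.append(("RD", col))
        return self.cells[self.open_row][col]

    def write(self, col, value):  # CAS write to the open row
        assert self.open_row is not None, "no row open"
        self.commands.append(("WR", col))
        self.cells[self.open_row][col] = value


class SramStyleMemory:
    """SRAM-like interface (address in, data in/out) over the toy DRAM.
    The wrapper sequences precharge/activate itself; refresh is not modeled."""

    def __init__(self, rows, cols):
        self.cols = cols
        self.dram = ToyDram(rows, cols)

    def _select(self, addr):
        row, col = divmod(addr, self.cols)
        if self.dram.open_row != row:
            if self.dram.open_row is not None:
                self.dram.precharge()
            self.dram.activate(row)
        return col

    def write(self, addr, data):
        self.dram.write(self._select(addr), data)

    def read(self, addr):
        return self.dram.read(self._select(addr))


mem = SramStyleMemory(rows=4, cols=8)
mem.write(10, 0xAB)       # row 1, column 2
mem.write(3, 0x07)        # row 0: the wrapper closes row 1 first
print(hex(mem.read(10)))  # 0xab
print(mem.dram.commands)  # the RAS/CAS sequence the user never had to issue
```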
