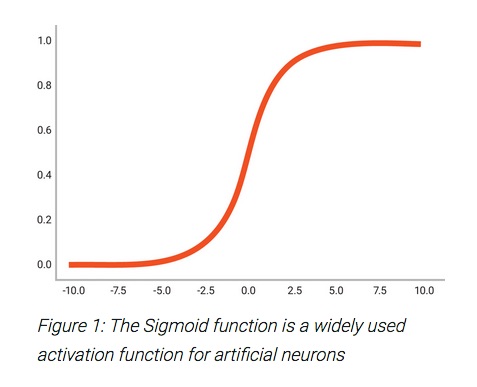

The field of artificial intelligence has relied on heavy inspiration from the world of natural intelligence, such as the human mind, to build working systems that can learn and act on new information based on that learning. In natural networks, neurons do the work, deciding when to fire based on huge numbers of inputs. The relationship… Read More

Tag: artificial intelligence

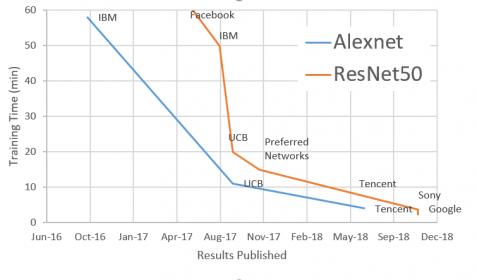

Let The AI Benchmark Wars Begin!

Why benchmark competition enables breakthrough innovation in AI. Two years ago I inadvertently started a war. And I couldn’t be happier with the outcome. While wars fought on the battlefield of human misery and death never have winners, this “war” is different. It is a competition of human ingenuity to create new technologies … Read More

AI at the Edge

Frequent Semiwiki readers are well aware of the industry momentum behind machine learning applications. New opportunities are emerging at a rapid pace. High-level programming language semantics and compilers to capture and simulate neural network models have been developed to enhance developer productivity. Researchers… Read More

AI and the Domain Specific Architecture

Last month I attended the 2018 U.S. Executive Forum, where Wally Rhines was one of the keynote speakers. I was also lucky enough to have lunch with Wally afterwards and talk about his presentation in more detail, and he sent me his slides, which are attached at the end of this blog.

The nice thing about Wally’s presentations is that they are not … Read More

Cloud FPGA Optimal Design Closure, Synthesis, and Timing Using Plunify’s AI Strategies

Plunify, powered by machine learning and the cloud, delivers cloud-based solutions and optimization software to enable a better quality of results, higher productivity and better efficiency for design. Plunify is a software company in the Electronic Design Market with a focus on FPGA. It was founded in 2009, has its HQ in Singapore… Read More

DesignWare IP as AI Building Blocks

AI is disruptive and transformative to many status quos. Its manifestation can be increasingly seen in many business transactions and various aspects of our lives. While machine learning (ML) and deep learning (DL) have acted as its catalysts on the software side, GPU and now ML/DL accelerators are spawning across the hardware… Read More

Deep learning fueling the AI revolution with Interlaken IP Subsystem

AI is revolutionizing and transforming virtually every industry in the digital world. Advances in computing power and deep learning have enabled AI to reach a tipping point toward major disruption and rapid advancement. However, these applications require much higher performance and bandwidth, requiring new kinds of IP and… Read More

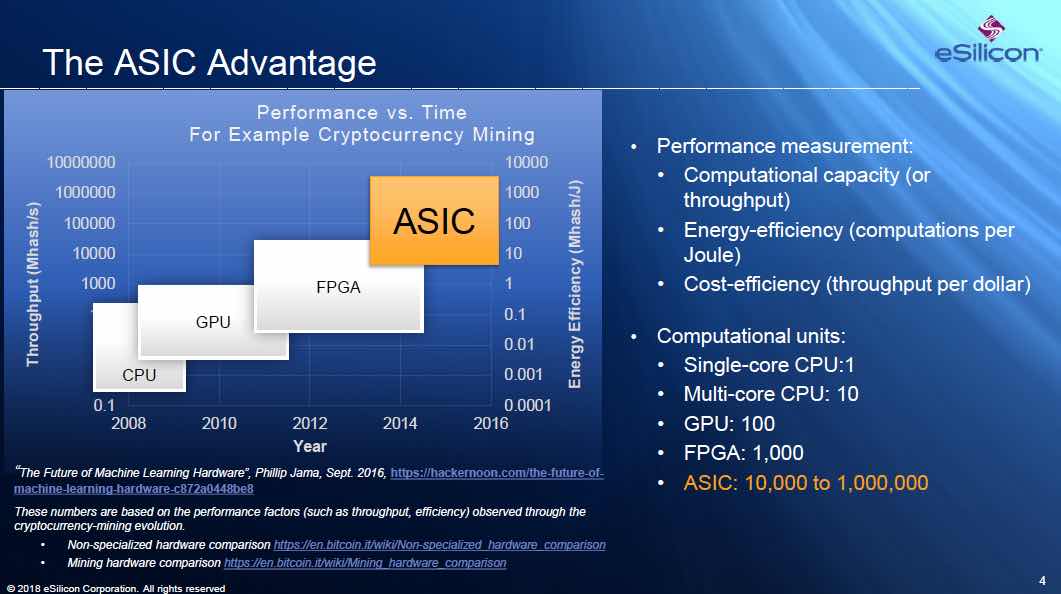

Being Intelligent about AI ASICs

The progression from CPU to GPU, FPGA and then ASIC affords an increase in throughput and performance, but comes at the price of decreasing flexibility and generality. Like most new areas of endeavor in computing, artificial intelligence (AI) began with implementations based on CPUs and software. And, as have so many other applications,… Read More

Machine Learning Drives Transformation of Semiconductor Design

Machine learning is transforming how information processing works and what it can accomplish. The push to design hardware and networks to support machine learning applications is affecting every aspect of the semiconductor industry. In a video recently published by Synopsys, Navraj Nandra, Sr. Director of Marketing, takes… Read More

AI processing requirements reveal weaknesses in current methods

The traditional ways of boosting computing throughput are either to increase operating frequency or to use multiprocessing. The industry has done a good job of applying these techniques to maintain a steady increase in performance. However, there is a discontinuity in the needs for processing power. Artificial Intelligence… Read More