And just like that, the AI PC arrived. It will be hard to miss high-profile advertising campaigns touting AI PCs, like the one just launched by Microsoft. Gartner said this September that AI PCs will be 43% of all PC shipments in 2025 (with 114M units projected) and that by 2026, AI PCs will be the only choice for business laptop users. Other analysts are going with even bigger numbers. The idea of an AI-enabled personal assistant is intriguing, and with AI PC momentum building fast, waiting to create and adopt implementations may prove an expensive mistake. We spoke with Ceva’s Roni Sadeh, VP of Technologies in their CTO office, to get some insight. Let’s look at what an AI PC might do for users and how designers might construct one quickly.

What would an AI PC do for users?

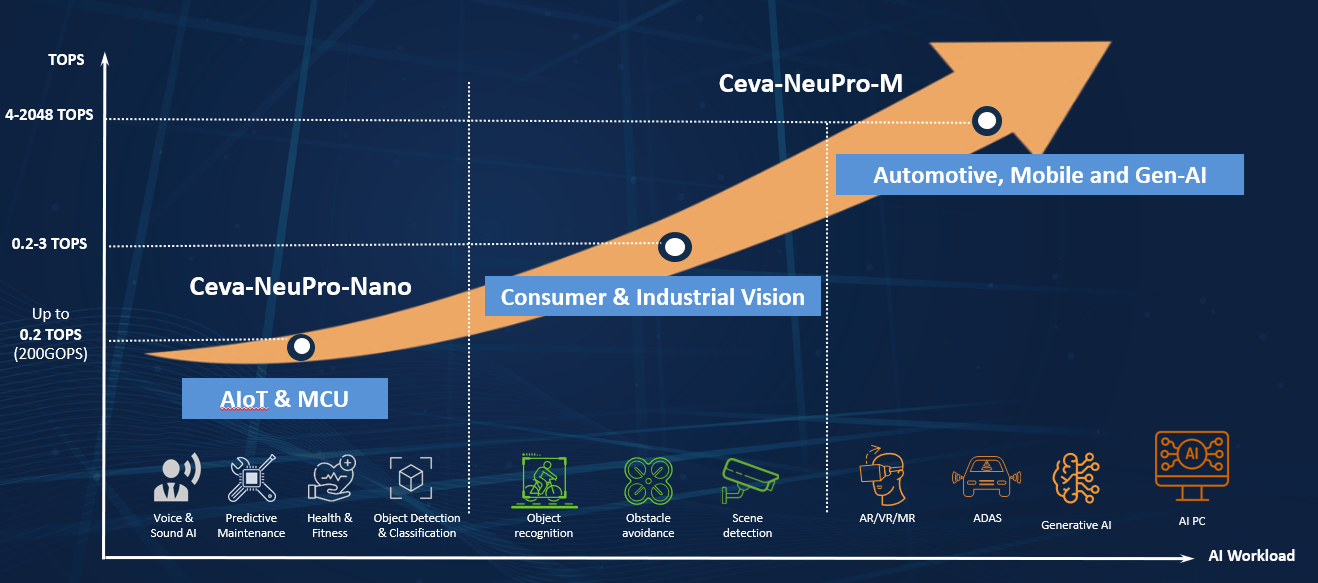

So far, the push for AI implementations has been primarily in two areas: server-class implementations running GPU clusters or large NPU chips in the cloud, and embedded NPU chip implementations in autos, defense systems, drones, vision systems, and other applications. The debate continues between GPUs, which are powerful for AI training and inference but far less power-efficient, and tuned NPU accelerators designed for efficient inference.

This bifurcation developed because embedded systems must be much smaller, draw less power, need less cooling, and render decisions in real time with low latency. The cloud, as powerful as it can be, also has some inherent challenges: an application might be down temporarily, latency can spike when many users hit the same platform simultaneously, and privacy and data security guarantees are unclear. However, tight constraints on embedded systems limit how much processing power they can offer.

Now, something in the middle is developing, provided it can get enough computing power: the AI PC as a productivity assistant for personal or business use. Three immediate advantages are clear: an AI PC would be available anytime, analysis works without sending sensitive data to the cloud, and PCs are inherently single-user, so there is no competition for resources. “It’s a great opportunity for a dedicated AI accelerator,” says Sadeh. “It would be like talking to a person, gathering data stored on an AI PC, and responding to a prompt in around a second.”

This use case already exists for personal use with mobile phones, but they rely heavily on a cloud connection for the data behind their conclusions. An AI PC could handle more data in various formats without cloud resources. It could be transformative for data analysts, researchers, and Excel power users who are used to grinding through analysis in search of something and who need to produce professional-quality documents with results rapidly.

How could designers construct AI PCs?

Of course, there are a couple of catches that merit design attention. Sadeh indicates that 40 TOPS, toward the upper end of embedded NPU chip capability today, won’t be enough for AI PCs to be useful as users throw more complex queries and more data at them. “We’ll need a few hundred TOPS in AI PCs soon and, ultimately, a few thousand,” says Sadeh. However, the power budget for designers is more or less fixed, so scaling TOPS can’t come at the expense of battery life.
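A rough arithmetic sketch shows why a fixed power budget makes this hard. The 10 W NPU budget below is a hypothetical figure chosen only for illustration, and 300 and 3,000 TOPS are stand-ins for “a few hundred” and “a few thousand”; none of these numbers come from Sadeh or Ceva.

```python
# Hypothetical NPU power budget in watts (an assumption for illustration only).
NPU_POWER_BUDGET_W = 10.0


def required_tops_per_watt(target_tops: float,
                           power_budget_w: float = NPU_POWER_BUDGET_W) -> float:
    """Efficiency (TOPS/W) needed to reach target_tops within a fixed power budget."""
    if power_budget_w <= 0:
        raise ValueError("power budget must be positive")
    return target_tops / power_budget_w


# 40 TOPS is the figure quoted in the article; 300 and 3000 are
# illustrative stand-ins for "a few hundred" and "a few thousand".
TARGETS_TOPS = [40, 300, 3000]

if __name__ == "__main__":
    for tops in TARGETS_TOPS:
        print(f"{tops:>5} TOPS -> {required_tops_per_watt(tops):.0f} TOPS/W")
```

Whatever the real budget turns out to be, the relationship is linear: if power stays flat, every multiple of TOPS has to come entirely from better efficiency.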

There is also the PC lifecycle and the question of upgrades. NPU designs for AI PCs will probably iterate very quickly, keeping pace with the speed of new AI model introductions. This pace suggests that, for at least the first couple of rounds, AI PC designers will probably want to keep the NPU on an expansion module, such as M.2 or Mini PCIe, instead of putting chips down on the motherboard.

Unlike embedded NPUs, which sell into various applications and require reconfigurability, Sadeh sees a closed AI PC solution. “Users will likely be unaware of the specifics of the AI model running,” he says. “Currently, 6GB of memory runs a large model, but it’s not hard to project memory needs getting larger.” The NPU, its memory, and its AI model would be fixed as shipped by the manufacturer but could be upgraded if housed on an expansion module.
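For a feel of how memory needs scale, here is a minimal sketch of the standard weights-only estimate: parameter count times bits per weight. The 8-billion-parameter model size is a hypothetical example, not a model named by Sadeh, and the estimate ignores activations, the KV cache, and runtime overhead, so real requirements would be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical model size for illustration (an assumption, not from the article).
HYPOTHETICAL_PARAMS = 8e9

for bits in (16, 8, 4):
    gb = weight_memory_gb(HYPOTHETICAL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: about {gb:.1f} GB")
```

Under these assumptions, only the more aggressively quantized variants would leave headroom within a 6GB module, which is one reason support for more data types matters to an NPU design.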

So, NPU chips must be small enough to fit an expansion module form factor, fast enough to support LLMs with response times users perceive as a good experience, and low enough in power not to drain a battery too quickly. That’s where Ceva enters the picture with its IP product experience in AI applications.

Remember that hardware IP and software advances work together in reaching higher TOPS performance. For instance, Meta has just released a new, more efficient version of its Llama model. As these newer models emerge, improved NPU tuning follows. Ceva’s NeuPro-M IP combines heterogeneous multi-core processing with orthogonal memory bandwidth reduction techniques and optimization for more data types, larger batch sizes, and improved sparse data handling. Ceva is already working with customers on creative NPU designs using NeuPro-M.

If businesses adopt the technology quickly, as Gartner anticipates, growth in AI PCs could take off. Read more of Sadeh’s thoughts on AI PC momentum in his recent Ceva blog post:

The AIPC is Reinventing PC Hardware
