FPGAs have become an important part of system design, a far cry from how they started out: as glue logic between discrete logic devices in the early days of electronic design. Modern-day FPGAs are practically SoCs in their own right. They frequently come with embedded processor cores, sophisticated I/O cells, and DSP, video, audio and other specialized processing cores. All of this makes them suitable, even necessary, for building major portions of systems products.

Early FPGAs were tiny compared with their contemporaneous ASICs. The tools for implementing designs were often cobbled together by the FPGA vendors themselves, although a few commercial offerings improved on the vendor-specific solutions. Nevertheless, these tools always took a back seat in capacity, performance and sophistication when compared with the synthesis and place-and-route flows for custom ASICs.

In today’s market, the previous generation of FPGA tools would not stand a chance. Requirements and expectations are much higher. Fortunately, there has been significant innovation here. In fact, it’s astounding how far FPGA tools have come.

I was reading a white paper published by Synopsys that delves into the state of the art in FPGA design, and it makes a good read. It is written by Joe Mallett, Product Marketing Manager for FPGA design at Synopsys, and is titled “Shift Left Your FPGA Design for Faster Time to Market”.

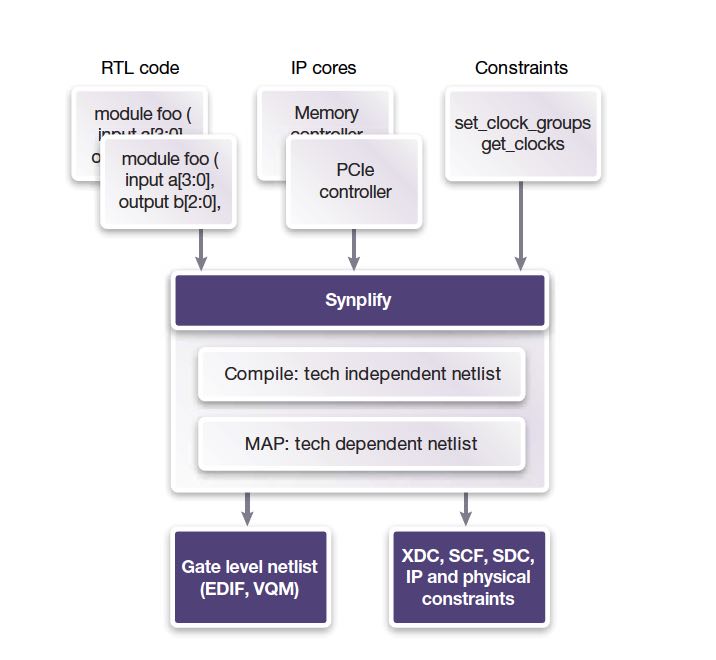

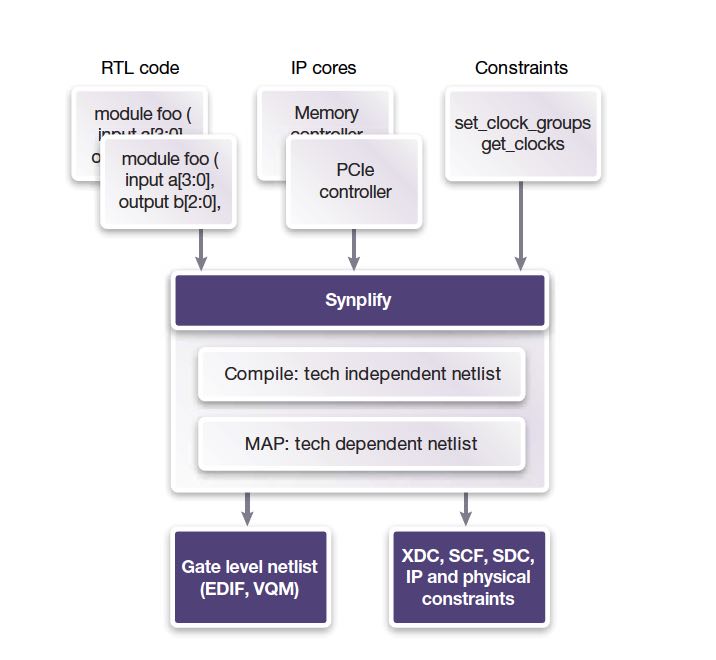

Just as with ASICs, FPGA designs rely heavily on third-party IP. Such IP comes with RTL and constraint files, which FPGA tools need to digest easily. The same is true for the application-specific RTL and constraints developed for each project. FPGAs have also evolved complex clock structures, in large part to meet the power and performance requirements of the designs they are used in. Designers need tools that easily handle multicycle and false path definitions.
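
To make the multicycle and false path idea more concrete, here is a minimal Python sketch of how a static timing check treats such constraints. It is a simplification under stated assumptions: it models only the setup check and ignores clock skew, jitter and hold checks, and the `TimingPath` class and `setup_slack_ns` function are hypothetical illustrations, not part of Synplify or any other vendor tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimingPath:
    # Hypothetical, simplified model of one register-to-register path.
    name: str
    data_delay_ns: float       # launch clock-to-Q plus logic and routing delay
    setup_ns: float            # setup time of the capturing register
    multicycle: int = 1        # setup multiplier; 1 means an ordinary single-cycle path
    false_path: bool = False   # True if the path is declared a false path


def setup_slack_ns(path: TimingPath, clock_period_ns: float) -> Optional[float]:
    """Return setup slack in ns, or None for a false path, which is not timed.

    Simplification (assumption): skew, jitter and hold checks are ignored.
    """
    if path.false_path:
        # A false path is excluded from timing analysis entirely.
        return None
    # A multicycle constraint moves the capture edge N clock periods after launch.
    required_ns = path.multicycle * clock_period_ns - path.setup_ns
    return required_ns - path.data_delay_ns


period = 10.0  # 100 MHz clock
single = TimingPath("mul_to_acc", data_delay_ns=14.5, setup_ns=0.5)
double = TimingPath("mul_to_acc", data_delay_ns=14.5, setup_ns=0.5, multicycle=2)
print(setup_slack_ns(single, period), setup_slack_ns(double, period))
```

With a 10 ns clock, the 14.5 ns path fails as a single-cycle path (slack of -5.0 ns) but passes with 5.0 ns of slack once declared a two-cycle multicycle path. That relaxation is only valid if the design really samples the result every other cycle, which is why tools need accurate multicycle and false path definitions rather than blanket exceptions.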

Once the baseline design specification is in place, the design can move to First Hardware. While it is almost certain not to meet all the performance requirements of the final design, it is a significant milestone that leads to further optimization on the way to the final deliverable.

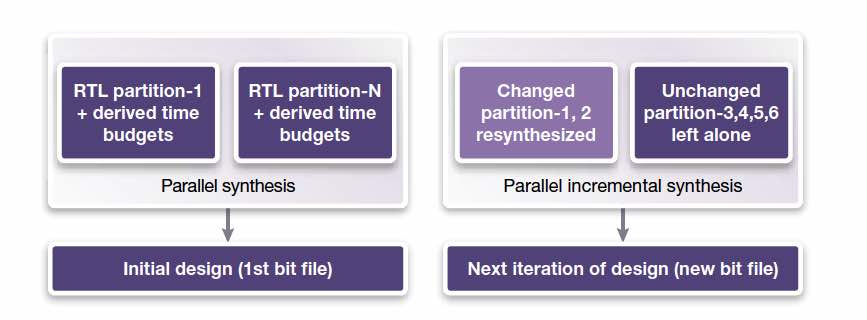

In the Synopsys white paper, Joe talks at length about the ways their Synplify tool can accelerate each step in the FPGA design flow, the first of which is getting to First Hardware. Joe discusses initial design setup and mentions accelerating synthesis through automatic partitioning and execution on multi-core machines and/or compute farms. This approach has several benefits, ranging from a lower per-machine memory footprint to support for incremental design.

The next milestone is debug. Synopsys has developed clever ways of adding extensive design visibility, which greatly accelerates debug. Even better, and a unique advantage of FPGAs, debug can run in real time and at speed in the system-level environment. The incremental design capabilities shorten turnaround time at this stage too.

Last comes tuning and optimization. Not surprisingly, the means to accomplish this exist at every stage of the design process. Highly optimized timing needs to be coupled with design size optimization, and synthesis, placement and routing all play a role in the final outcome. Rather than spoil the details, I recommend reading the white paper itself. There is also a companion paper on distributed synthesis in Synopsys’ Synplify FPGA design tool that I found very interesting.
