For about five years now, Synopsys has held an HSPICE SIG event in conjunction with DesignCon. It features a small vendor fair with companies that partner with Synopsys on HSPICE flows. There is also a dinner with industry and customer speakers, followed by an update on HSPICE development. The evening closes with a Q&A where customers get to ask questions. I attended this year’s event last week. Synopsys has posted a video of this interesting session here.

There were guest speakers from TSMC, Xilinx, and Micron. Raed Sabbah from Micron spoke about how he uses HSPICE to characterize SRAM bit cells. His talk covered the 6T bit cell, a bi-stable latch that, as he pointed out, is vulnerable to process variation. His analysis method requires more than 10 million simulations, so he relies heavily on the Large Scale Monte Carlo feature of HSPICE and runs it with distributed processing. He checks the write and read margins using transient analysis with split T and V.
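To give a feel for why sample counts run into the millions, here is a back-of-the-envelope sketch. It assumes a single Gaussian-distributed margin with a one-sided failure criterion, which is a simplification for illustration and not the method described in the talk. The function names are hypothetical.

```python
import math


def gaussian_tail(sigma_level):
    """One-sided probability that a standard normal variable exceeds sigma_level."""
    return 0.5 * math.erfc(sigma_level / math.sqrt(2.0))


def expected_failures(n_samples, sigma_level):
    """Expected number of samples beyond sigma_level in n_samples independent draws."""
    return n_samples * gaussian_tail(sigma_level)


if __name__ == "__main__":
    # Assumed run count of 10 million, matching the figure quoted in the talk.
    for k in (3, 4, 5, 6):
        print(f"{k} sigma: tail={gaussian_tail(k):.3e}, "
              f"expected failures in 10M runs={expected_failures(10_000_000, k):.2f}")
```

Under these assumptions, 10 million runs produce only a handful of events near 5 sigma and essentially none at 6 sigma, which is why a bit cell analysis that targets very low failure rates needs so many samples.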

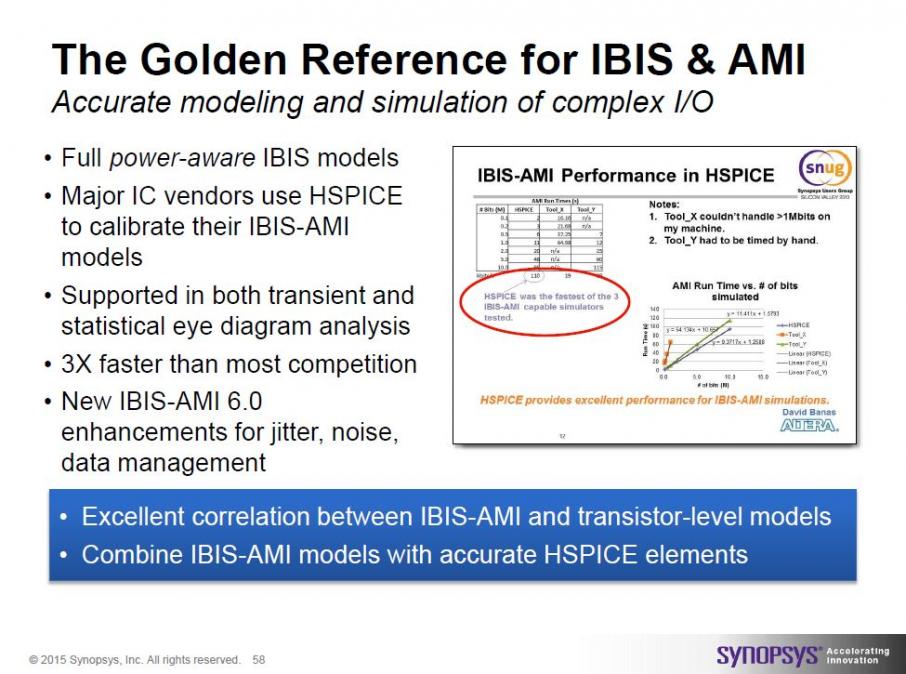

Next up was Brandon Jiao from Xilinx, who spoke about their 28G SerDes verification with channel models. They have created their own IBIS-AMI 5.0 models to perform linear time-invariant (LTI) time-domain simulations. The models support clock/data recovery and DFE, and they also account for impairments and non-idealities found in silicon. For the channel they use an s-parameter model that has ports for coupled lines. They run this first with the coupled lines grounded and then with TX/RX data.

PRBS (pseudo-random binary sequence) data is used to ensure good coverage of states. The last challenge is to get past the ignore bits while the system stabilizes and then record the data bits of interest. They use HSPICE heavily in this entire process. In their design the pulse time is on the order of 35 picoseconds, while the response time is about three orders of magnitude longer at ~75 nanoseconds. To get coverage of all the possible bit patterns they need to run 20e20 bits.
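As a small illustration of how a pseudo-random binary sequence (PRBS) covers bit patterns, here is a sketch of a PRBS7 generator built from a linear feedback shift register with the polynomial x^7 + x^6 + 1. The talk did not say which PRBS length Xilinx uses; PRBS7 is chosen here only because it is short enough to inspect, and the helper names are hypothetical. The last function simply divides the quoted response time by the quoted pulse time.

```python
from itertools import islice


def prbs7(seed=0x7F):
    """Yield bits of a PRBS7 sequence (x^7 + x^6 + 1) from a 7-bit Fibonacci LFSR."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero, or the LFSR stays stuck at zero")
    while True:
        # Feedback is the XOR of the two most significant bits of the register.
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        state = ((state << 1) | new_bit) & 0x7F
        yield new_bit


def unit_intervals_spanned(response_time_s, pulse_time_s):
    """Number of pulse widths that fit inside the channel response time."""
    return response_time_s / pulse_time_s


if __name__ == "__main__":
    period = list(islice(prbs7(), 127))
    # Slide a 7-bit window cyclically over one period and count distinct patterns.
    doubled = period + period
    windows = {tuple(doubled[i:i + 7]) for i in range(127)}
    print("distinct 7-bit patterns in one period:", len(windows))
    print("pulse widths per response time:",
          round(unit_intervals_spanned(75e-9, 35e-12)))
```

One period of PRBS7 contains every non-zero 7-bit pattern exactly once. With a response that spans roughly two thousand pulse widths, far more preceding bits influence each sample, which is why the required bit counts grow so large.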

In his talk, Min-Chie Jeng of TSMC spoke about their partnership with Synopsys in developing the TSMC Model Interface (TMI). This is an API that offers advanced modeling capabilities that are out of reach of traditional models. Newer process nodes are offering relatively less process improvement, putting increased pressure on design margins. One area that is becoming problematic is layout-dependent effects. Traditional SPICE models do not handle them well, which creates a gap between pre- and post-layout simulation results. One source of this gap is the differences between fingers within a single device.

Another important use of TMI is in predicting aging effects. TMI can include an aging model based on self-heating, which in turn depends on the specific waveform used in the simulation. In a comprehensive flow it is possible to identify the hottest device in a design, which would consequently suffer the greatest self-heating-induced degradation and pose the greatest reliability risk.

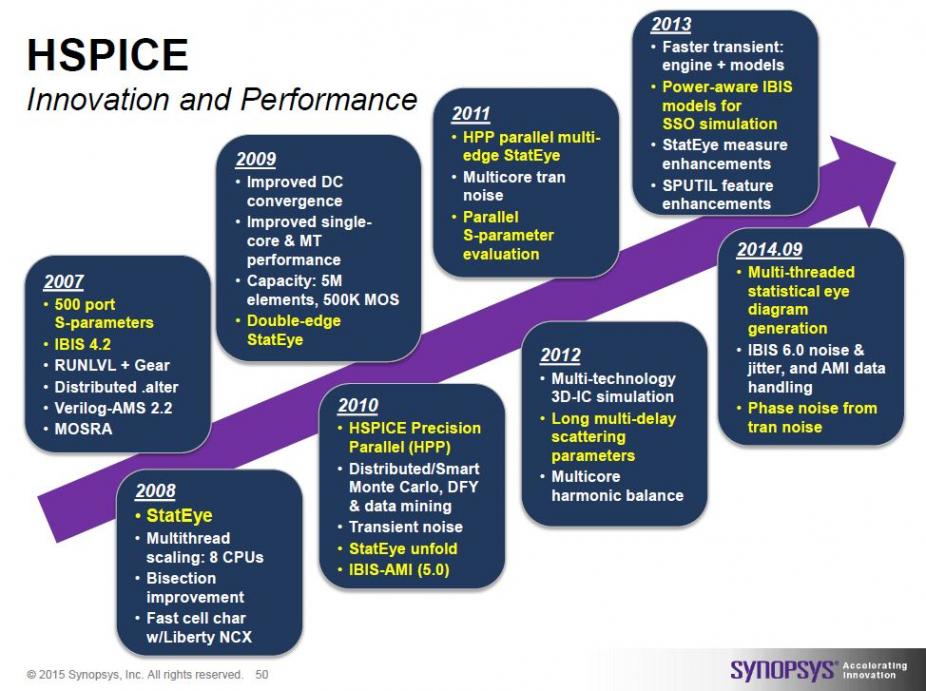

In closing, Scott Wedge from Synopsys spoke about recent HSPICE development. He said that they have been achieving roughly a 1.5X performance improvement with each release. This is significant because the gains compound over many successive releases, yielding much better performance than in years gone by. Scott maintains that they have been very consistent in the themes of the prior releases: capacity, coverage, improved s-parameter support, multi-core, and transient noise.

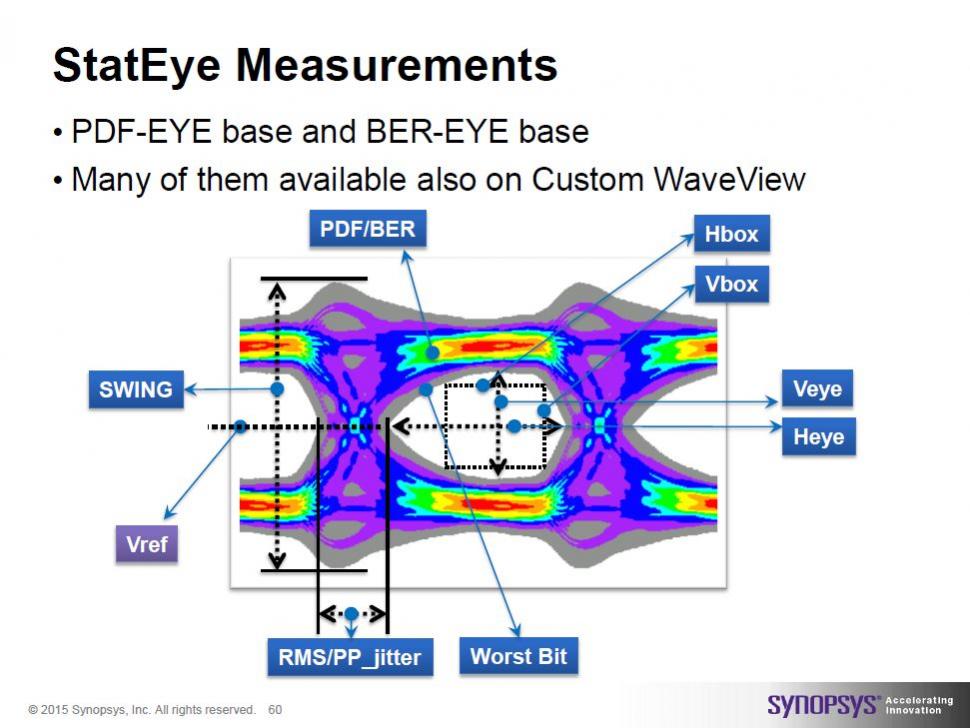

In the talks that preceded his, and in his own, there was a lot of focus on stat-eye support in HSPICE. This is clearly a significant feature, and it has just been enhanced further with additional metrics.

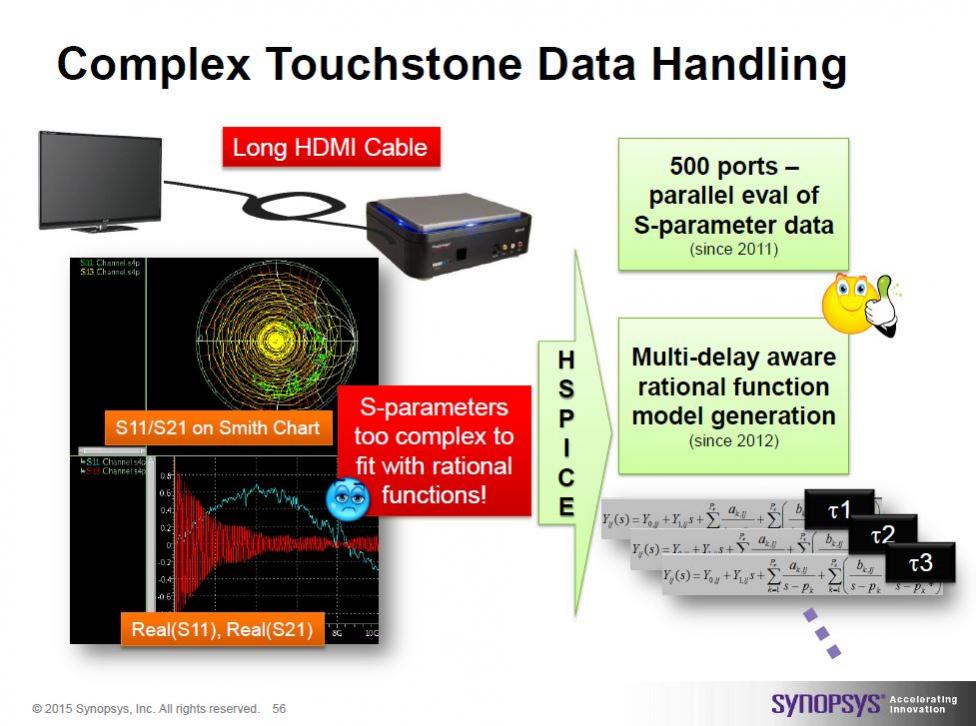

The Q&A started off with a few easy questions, but inevitably the issue of s-parameter support came up. At an event like DesignCon, where board SI is a hot topic, s-parameter support is essential. SI solvers now produce s-parameter models with hundreds of ports for present-day boards and packages. S-parameters for on-chip devices are relatively easy to work with, but many members of the audience are facing hundreds of ports.

Scott was up front regarding the challenges, citing passivity and causality as issues in creating rational functions. Realistically there is no magic bullet. But Scott committed to work with customers as corner cases come up to ensure solutions.
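For readers unfamiliar with the passivity issue: a passive network cannot generate energy, which for s-parameters means the largest singular value of the S matrix must not exceed 1 at any frequency. The sketch below checks that condition on sampled 2-port data using a closed-form 2x2 expression. It is an illustration only, not how HSPICE performs the check; the function names and sample matrices are made up, and a model with hundreds of ports would need a numerical SVD instead.

```python
import math


def max_singular_value_2x2(s):
    """Largest singular value of a 2x2 complex matrix [[s11, s12], [s21, s22]]."""
    (a, b), (c, d) = s
    # Trace and determinant of the Hermitian matrix S^H S.
    trace = abs(a) ** 2 + abs(b) ** 2 + abs(c) ** 2 + abs(d) ** 2
    det = abs(a * d - b * c) ** 2
    disc = max(trace * trace - 4.0 * det, 0.0)  # clamp tiny negative round-off
    return math.sqrt((trace + math.sqrt(disc)) / 2.0)


def is_passive(samples, tol=1e-9):
    """True if every frequency sample has a largest singular value <= 1 (within tol)."""
    return all(max_singular_value_2x2(s) <= 1.0 + tol for s in samples)


if __name__ == "__main__":
    # Hypothetical samples: an ideal through line, a matched attenuator, and a
    # matrix with forward gain greater than 1.
    through = [[0, 1], [1, 0]]
    attenuator = [[0, 0.5], [0.5, 0]]
    with_gain = [[0, 2], [0.1, 0]]
    print("through + attenuator passive:", is_passive([through, attenuator]))
    print("with gain passive:", is_passive([with_gain]))
```

Measured or solver-generated data can violate this bound slightly because of noise or numerical error, and a rational-function fit has to preserve the bound across all frequencies, which is part of what makes the problem hard.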

All in all HSPICE has been the so-called gold standard for SPICE since its inception. It has fared well under Synopsys’ stewardship. HSPICE has been a crucial tool for electronics designers for many years. By Scott’s own admission he has been involved with HSPICE for over 25 years. Synopsys is still clearly tracking the trends that are driving the market.
