I recently published a post on LinkedIn titled “Sometimes, you gotta throw it all out” in reference to the innovation process and getting beyond good to better. A prime example has crossed my desk: the new ProtoCompiler software for Synopsys HAPS FPGA-based prototyping systems.

Last week, I spoke with Troy Scott, product marketing manager for FPGA-based prototyping software at Synopsys, and I was a bit surprised to hear him use the words “completely rewritten” in describing ProtoCompiler. After all, Synopsys is known for FPGA synthesis and partitioning tools, and it would seem their current stuff would be pretty solid – why would they start over?

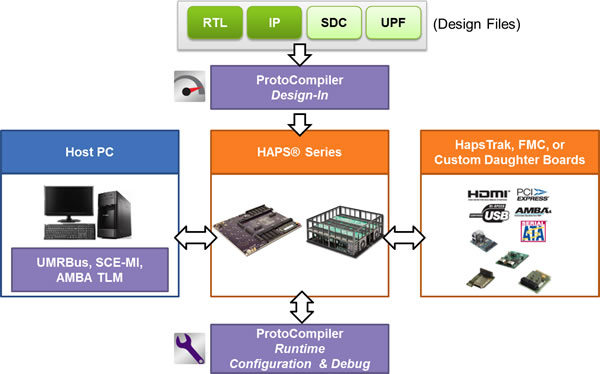

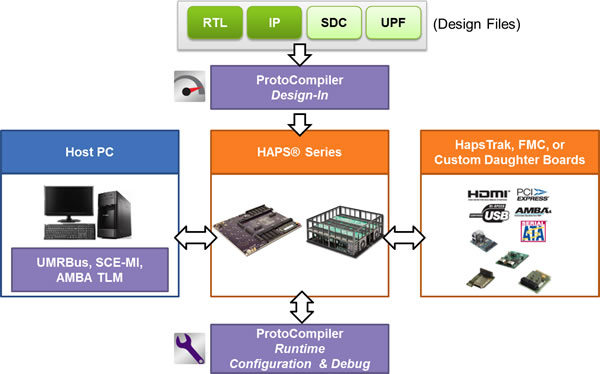

The answer lies in defining the problem correctly. Getting closure on a dedicated FPGA-based prototyping system, such as the HAPS-DX we learned about previously, isn’t the same as getting closure on a generic FPGA with generic tools. The nuances of clock distribution, handshaking, and pin multiplexing – all designed to enhance the partitioning and debug visibility of large ASIC designs represented in FPGA form – mean optimization can only be achieved when the tools have intimate knowledge of the prototyping hardware.

Synopsys was looking for results in minutes, not hours, even on massive designs. Part of the answer is better multicore processing on a Linux host, but the real effort is a new partitioning engine coupled with improved HDL compilation, tackling the problem of projecting a design into a HAPS. A more optimized synthesis strategy must account for the topology of the hardware prototyping system – this is what sets ProtoCompiler running on HAPS apart from using third-party FPGA development boards, which are typically more focused on providing a habitat for the FPGA hardware designer.

ProtoCompiler uses a strategy Scott calls a “deferred I/O plan”, using knowledge about the HAPS design and the Xilinx FPGA. There is no dedicated interconnect on HAPS; all I/O is accessible in a bank of connectors, with 50 signals on each connector that can be flexibly routed and cabled. The shape of the logic implementation is also important – is it a “rat’s nest” like a GPU connected to everything, or is it more of a regular pattern like a subsystem of AMBA AHB-connected peripherals?

With two sets of defined constraints, accounting for both the HAPS platform and the ASIC design, the synthesis tool can then take over and come to closure with decent timing much more quickly. Typically, the bottleneck in closure is pin-sharing and the necessary time multiplexing of interconnects. “Usually, what you’re doing is chasing the pins with the highest mux ratios, and trying to squash those down in iterations,” Scott said.
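To make the “chasing the highest mux ratios” idea concrete, here is a minimal sketch in Python. It is not ProtoCompiler's algorithm; the link names, signal counts, and the greedy spare-pin strategy are all assumptions made up for illustration. The only figure borrowed from the discussion above is the 50 signals per connector, used here as the starting pin budget for each inter-FPGA link.

```python
import math


def mux_ratio(signals: int, pins: int) -> int:
    """Number of logical signals that must share each physical pin, rounded up."""
    return math.ceil(signals / pins)


def squash_worst(links: dict, spare_pins: int) -> dict:
    """Greedy illustration: hand each spare pin to the link whose
    signals-per-pin load is currently the highest, then report the
    resulting mux ratio of every link."""
    pins = {name: link["pins"] for name, link in links.items()}
    for _ in range(spare_pins):
        worst = max(pins, key=lambda n: links[n]["signals"] / pins[n])
        pins[worst] += 1
    return {name: mux_ratio(links[name]["signals"], pins[name]) for name in links}


# Hypothetical partition: three inter-FPGA links, each starting with one
# 50-signal connector's worth of pins.
links = {
    "A-B": {"signals": 400, "pins": 50},
    "A-C": {"signals": 120, "pins": 50},
    "B-C": {"signals": 900, "pins": 50},
}

before = {name: mux_ratio(l["signals"], l["pins"]) for name, l in links.items()}
after = squash_worst(links, spare_pins=50)
print(before)
print(after)
```

In this made-up case, all of the spare pins end up on the B-C link, because it stays the worst offender throughout. A real flow would also have to weigh timing, connector placement, and cabling, which this sketch ignores.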

Scott gives another concrete example of intimate knowledge a synthesis tool should leverage: on HAPS, there is a high-speed time-domain multiplexing (TDM) scheme using the SERDES channels on the Xilinx Virtex-7 XC7V2000T. The flight times have been fully characterized, taking into account trace lengths, cable delays, and more, and Synopsys knows exactly how the clocking and gearing logic is set up.

Trying to pull the same thing off using FPGA development boards and generic synthesis tools on large, fast designs leaves designers to reconcile all these details, often with precious little structural knowledge beyond the internals of the FPGA itself. This is the core of the minutes-versus-hours tradeoff ProtoCompiler and HAPS target, and why Synopsys rewrote their tools.

A complete introduction to ProtoCompiler, including a datasheet and a video with Scott talking about the platform, is on the Synopsys web site. The path of iterative innovation Synopsys is on with the HAPS platform has been fascinating to watch. They aren’t locked in and satisfied with things; instead, they are taking rapid cycles of learning and real-world experience into each successive enhancement of FPGA-based prototyping.
