By now, you’ve probably seen the news on Synopsys acquiring Coverity, along with a few thoughts in commentary from our own Paul McLellan and Daniel Payne, whom I respect deeply. I’m guessing there are many like them out there in the EDA community scratching their heads a little or a lot at this. I’m not from corporate, but I am here to help.

Coverity and other purveyors of C/C++ static code analysis tools come from my happy place – embedded – and I’m a relative noob in EDA circles who can’t carry laundry for my colleagues here. However, I’ve been carefully observing the disciplines of EDA and embedded coming together for a few years now, way before coming to SemiWiki; it is why I was excited to be invited here and participate in the dialog, and maybe help shape the future.

From my perspective, this is more than just a sidestep by Synopsys into a new space to diversify beyond its EDA roots. I also don’t see it solely as a competitive response to Mentor Embedded efforts, which are broader right now, with a range of embedded software tools and operating systems. No, this is certainly a strategic maneuver, not just a tangential probe.

First, a bit of explanation: what is static code analysis? EDA types might recognize its foundation by another name: design rule checking, in which a tool looks at source files and their relationships against a set of rules to find defects in code. Many folks are familiar with lint, the most basic of tools for checking C/C++ code. Coverity and other embedded tool sets go much further than that, with heuristics and algorithms to not only shake out defects in C/C++ code, but also reduce the annoying “false positives”. These tools provide control over the rule sets in play, and over what gets checked and reported, so actual errors are highlighted while warnings and other benign differences of opinion based on requirements and experience can be categorized or filtered out entirely.

Now, I’ll go back to the comments on “not firmly in EDA space” and “zero synergy” posed by my esteemed counterparts. I agree with them that this is not traditional EDA territory; allow me to make the case for the JJ Abrams-esque alternate strategy timeline. Aart de Geus and Anthony Bettencourt both focused on quality and security as their message, but it goes deeper than that, way deeper. Here are 6 reasons to think about the vision and where Synopsys is likely headed.

Time is money. Of course, you write perfect C/C++ code. Sure you do. I know I do. (Ha ha. HAHAHA. HAHAHAHAHA …) Okay, maybe every once in a while, it’s not perfect. More than likely, you have policies involving coding standards, peer reviews, and objective testing to expose errors. Those all likely involve one thing: a human READING code, line by thousands or millions of lines. Eyeballs. Caffeine. LASIK. Late nights. Wasted days. Time that could be spent better. Static analysis tools not only read through code, but they catalog and check interprocedural relationships and other constructs for less obvious errors, pointing reviewers to the problem areas quickly.
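To make "interprocedural" a little more concrete, here is a minimal sketch in C of the kind of defect that is easy to miss when reading one function at a time. Everything in it is invented for illustration: the `sensor` table, `find_sensor`, and the two `scaled_reading_*` functions are hypothetical names, not taken from Coverity or from any real code base. The unchecked caller looks reasonable in isolation; the problem only shows up when you follow the call into the helper and notice it can return `NULL`.

```c
#include <stddef.h>

/* Hypothetical sensor table, invented for this illustration. */
struct sensor {
    int id;
    int scale;
};

static const struct sensor sensors[] = { { 1, 10 }, { 2, 100 } };

/* Returns a pointer to the matching entry, or NULL when the id is unknown. */
static const struct sensor *find_sensor(int id)
{
    for (size_t i = 0; i < sizeof sensors / sizeof sensors[0]; i++) {
        if (sensors[i].id == id) {
            return &sensors[i];
        }
    }
    return NULL;
}

/*
 * Defect: the result of find_sensor() is dereferenced without a check.
 * This works for ids 1 and 2 and is undefined behavior for any other id.
 * Nothing in this function alone hints at the NULL path; it is only
 * visible by looking across the call boundary.
 */
int scaled_reading_unchecked(int id, int raw)
{
    return raw * find_sensor(id)->scale;
}

/*
 * One possible repair: check the pointer and report failure to the caller.
 * Returns 0 on success and stores the result in *out, or -1 if id is unknown.
 */
int scaled_reading_checked(int id, int raw, int *out)
{
    const struct sensor *s = find_sensor(id);
    if (s == NULL) {
        return -1;
    }
    *out = raw * s->scale;
    return 0;
}
```

A reviewer skimming `scaled_reading_unchecked` has to remember what `find_sensor` does on a miss; a tool that tracks possible return values across function calls can, in principle, point straight at that dereference. In a real code base the helper and its caller are often in different files, written by different people, which is where the eyeball approach tends to run out of steam.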

All code is critical. We often associate code quality with safety-critical applications. Certainly, the first advocates of embedded static code analysis have come from defense, industrial, medical, automotive, and other areas with stricter compliance and liability issues, and in some cases defined industry-wide coding standards. However, any code defect can make or break any application, and as the LOC count rises, the risk goes way up. This gets magnified in a typical SoC today, with different types of cores all running together. A high-profile bug can torpedo any product quickly, something no developer can afford.

Code is the product. Microcontrollers, SoCs, and microprocessors do nothing but sit there and burn watts without software running on them – silicon is just the enabler, not the product. Synopsys may not be huge in the operating systems and tools business yet, but they are big in the embedded business; the popularity of the ARC processor core and DesignWare IP means there has to be verified software, somewhere. Synopsys has to create and deliver quality C/C++ code making this stuff work, and provide confidence it has been checked.

Co-verification and the golden DUT. Most folks think of EDA testing as RTL simulation, pattern generation and scan chains, but in today’s world, that is just the beginning. Real SoCs are co-verified, with the actual software running on a simulator or emulator. Think Apple A7 running iOS 7. In a complex part, without actual code running, errors can sneak through. Here’s a question: if you have new IP with both new hardware and new software, which one is the problem? That golden software may not be as golden as you think, and many users report that running static code analysis tools spotted actual problems they had missed in software review and test.

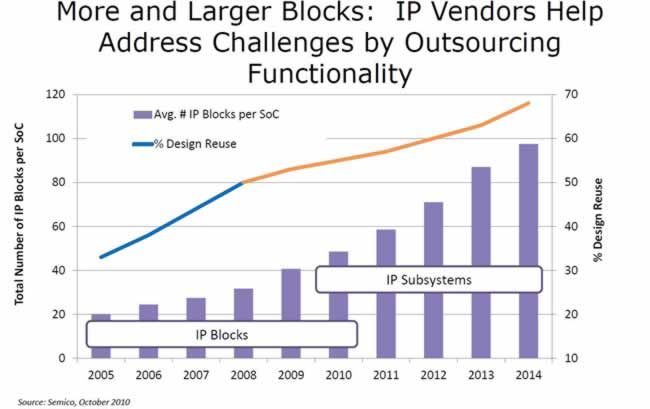

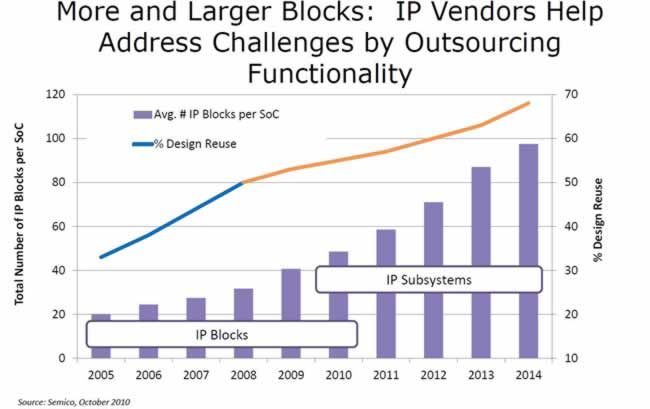

IP is coming from everywhere. This is not a make-versus-buy world anymore; it’s build-borrow-buy. Here’s my favorite chart I stole from Semico Research via Synopsys, with the message that a complex SoC is approaching 100 or more IP blocks with both hardware and software, and reuse is key to productivity. If you write all your own IP, congratulations, but more than likely you get some IP from either open source communities or commercial suppliers. Guess what? The software IP from outside sources very likely doesn’t conform to your coding standards. Is it broke? Will it break, or will it break your IP, at integration? Would you like to read all that code line-by-line, or would you rather have it scanned – using your internal rule set, filters, and customized reports – to pinpoint where potential problems may lie?

In the end, there can only be one. “Yeah, but we’re talking about C/C++ here; we don’t design chips with C/C++.” (There are RTL static code analysis tools out there; same idea, but a story for another time.) True, but you likely design chips for C/C++, and again, your chips don’t do much without software. While the end game may be a generation away, at some point silicon will be optimized for the code it runs. If we believe in the ultimate vision for high level synthesis, design realizations will come directly from C/C++ application code – in order to do that with confidence, the code has to be not only defect-free, but well-formed and very well-understood. In the interim, it would certainly be interesting for Synopsys to adapt the Coverity tools for SystemC, not a gigantic stretch.

Like I said, this may be the JJ Abrams version of the story – but I think it will play out as the right one in an evolving EDA industry, and I strongly suspect Synopsys has already seen the benefits of Coverity tools internally as well as externally. A big congrats to the Coverity team, and to Synopsys for being brave enough to step out of the box further into embedded space.

More Articles by Don Dingee…..
