Synopsys just released a white paper, a backgrounder on CDC. You’ve read enough of what I’ve written on this topic that I don’t need to retread that path. However, this is tech, so there’s always something new to talk about. This time I’ll cover an update from a Synopsys survey on the number of clock domains in designs, and an update on ways to validate CDC constraints.

First the survey, drawn from their 2018 Global User Survey. Some designs, around 31%, still use five or fewer clock domains. The largest segment, 58%, has between 21 and 80 domains. And as many as 23% have between 151 and 1,000 domains. Why so many? Some of this will continue to come from external interfaces, of course.

Clearly a lot will be a result of power management: running certain functions faster or slower to trade off performance against overheating. And a growing number of designs, notably really large ones such as datacenter-bound AI accelerators, are so big that it is no longer practical to balance clock trees across the whole design. Instead, designers are using GALS (globally asynchronous, locally synchronous) techniques, in which each local domain is synchronous and crossings between domains are effectively asynchronous.

All of which means that CDC analysis, like everything else in verification, continues to grow in complexity.

Turning to constraints, I’ve mentioned before that CDC analysis naturally depends on having information about clocks, and the best source for that information is the SDC timing constraints. You have to build those files anyway for synthesis, timing analysis and implementation, so you might as well build them right in one set of files and use that set everywhere, including for CDC. That’s a lot trickier than it sounds for a CDC tool that isn’t already tied into the implementation tools, because getting the interpretation of Tcl-based constraints right can be very challenging. Not a problem for Synopsys, of course.

Along those lines, it’s much easier to figure out whether one clock is a divided-down or multiplied-up version of another if you understand the native constraints. That helps a lot in suppressing false errors: there’s no need to report problems between two clocks if one is derived from the other as an integral multiple.
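To make the point concrete, here is a minimal Python sketch of how clock definitions can tell you whether two clocks are integrally related. It assumes a tiny, hypothetical subset of SDC in which every flag takes a value and each generated clock names its master with `-master_clock` after that master is defined; real SDC and its Tcl semantics are far richer, and this is not how any Synopsys tool works. The helper names (`parse_clocks`, `integrally_related`) and the clock names are illustrative only.

```python
import shlex
from dataclasses import dataclass
from fractions import Fraction
from typing import Dict, Optional


@dataclass
class Clock:
    name: str
    period: Fraction              # period, assumed to be in ns
    master: Optional[str] = None  # None for a primary clock


def _options(tokens):
    """Map each -flag to the token after it (assumes every flag takes a value)."""
    return {tok: tokens[i + 1] for i, tok in enumerate(tokens[:-1]) if tok.startswith("-")}


def parse_clocks(sdc_text: str) -> Dict[str, Clock]:
    """Parse a hypothetical subset of SDC: create_clock and create_generated_clock."""
    clocks: Dict[str, Clock] = {}
    for line in sdc_text.splitlines():
        tokens = shlex.split(line)
        if not tokens:
            continue
        opts = _options(tokens)
        if tokens[0] == "create_clock":
            clocks[opts["-name"]] = Clock(opts["-name"], Fraction(opts["-period"]))
        elif tokens[0] == "create_generated_clock":
            master = clocks[opts["-master_clock"]]  # master must already be defined
            if "-divide_by" in opts:
                period = master.period * int(opts["-divide_by"])
            else:
                period = master.period / int(opts["-multiply_by"])
            clocks[opts["-name"]] = Clock(opts["-name"], period, master.name)
    return clocks


def root(clocks: Dict[str, Clock], name: str) -> str:
    """Follow master links back to the primary clock."""
    while clocks[name].master is not None:
        name = clocks[name].master
    return name


def integrally_related(clocks: Dict[str, Clock], a: str, b: str) -> bool:
    """True if a and b share a primary clock and one period is an integer multiple of the other."""
    if root(clocks, a) != root(clocks, b):
        return False
    ratio = clocks[a].period / clocks[b].period
    return ratio.denominator == 1 or ratio.numerator == 1


SDC = """
create_clock -name core_clk -period 2 [get_ports clk]
create_generated_clock -name div2_clk -source [get_ports clk] -master_clock core_clk -divide_by 2 [get_pins u_div2/q]
create_generated_clock -name div3_clk -source [get_ports clk] -master_clock core_clk -divide_by 3 [get_pins u_div3/q]
create_clock -name io_clk -period 4 [get_ports io_clk]
"""

clocks = parse_clocks(SDC)
print(integrally_related(clocks, "core_clk", "div2_clk"))  # True: 2 ns vs 4 ns, same source
print(integrally_related(clocks, "div2_clk", "div3_clk"))  # False: 4 ns vs 6 ns
print(integrally_related(clocks, "div2_clk", "io_clk"))    # False: different primary clocks
```

Note that `div2_clk` and `io_clk` have the same 4 ns period but come from different primary clocks, so the sketch still treats them as asynchronous; matching periods alone say nothing about phase.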

Other prolific generators of false errors are those pesky resets, configuration signals and other signals which switch very infrequently, usually under controlled conditions allowing sufficient time for the signals to settle. In fact, at Atrenta we found that in many cases these could be the dominant source of noise in CDC analysis.

Static analysis tools have no idea these signals are in any way special. They see them crossing from one clock domain to another and assume they must be synchronized like every other signal. In most cases that would waste power, area or latency on something that doesn’t need it.

So we invented quasi-static constraints (honestly, I don’t know that we truly invented the concept; I just don’t know that we didn’t) to tag signals that could be ignored in CDC analysis. These have no meaning in implementation, so they aren’t cross-checked, but they are amazingly effective: noise rates plummet. There’s just one problem. Because they aren’t cross-checked elsewhere, if you mislabel a signal as quasi-static, you could miss real errors.
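A minimal sketch of that filtering effect, assuming a CDC tool has already extracted each crossing along with whether a synchronizer was found on it. The `Crossing` record, the `cdc_violations` function and the signal names are hypothetical, chosen only to show how a quasi-static tag removes a crossing from the report.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Crossing:
    signal: str
    src_clock: str
    dst_clock: str
    synchronized: bool  # True if a recognized synchronizer sits on the path


def cdc_violations(crossings: List[Crossing], quasi_static: Set[str]) -> List[Crossing]:
    """Flag unsynchronized domain crossings, skipping signals declared quasi-static."""
    return [
        c for c in crossings
        if c.src_clock != c.dst_clock        # a real domain crossing
        and not c.synchronized               # no synchronizer found
        and c.signal not in quasi_static     # not waived by a quasi-static tag
    ]


crossings = [
    Crossing("cfg_mode", "apb_clk", "core_clk", synchronized=False),
    Crossing("data_valid", "io_clk", "core_clk", synchronized=False),
    Crossing("fifo_wptr", "io_clk", "core_clk", synchronized=True),
    Crossing("alu_out", "core_clk", "core_clk", synchronized=False),
]

print([c.signal for c in cdc_violations(crossings, set())])         # ['cfg_mode', 'data_valid']
print([c.signal for c in cdc_violations(crossings, {"cfg_mode"})])  # ['data_valid']
```

Tag `data_valid` as quasi-static too and the report comes back empty, which is exactly the risk described above: the waiver hides a crossing that really does need a synchronizer.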

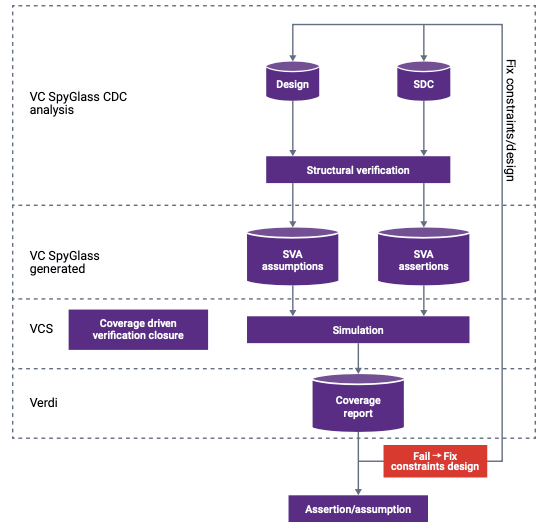

Synopsys figured out a way to cross-check meta-constraints like these. They convert them into SVA assertions, which you can then pull into simulation regressions to check that each claim really holds up. The flow is diagrammed in the figure above. Pretty neat. I think Synopsys really has CDC verification covered thanks to this extension. Click here to read Synopsys’ white paper.
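The white paper describes this flow; the post doesn’t show the assertions Synopsys actually generates, so what follows is only a sketch of the idea. It assumes a hypothetical `cfg_done` signal that marks the end of configuration and claims each quasi-static signal holds steady from then on, using the standard SVA `$stable` function. The generator functions and all signal names are illustrative, not part of any Synopsys tool.

```python
from typing import List


def quasi_static_assertion(signal: str, clock: str, reset_n: str, config_done: str) -> str:
    """Emit an SVA assertion claiming `signal` does not change once configuration is done."""
    return (
        f"a_qs_{signal}: assert property (@(posedge {clock}) "
        f"disable iff (!{reset_n}) {config_done} |-> $stable({signal}))\n"
        f'  else $error("quasi-static signal {signal} changed after configuration");'
    )


def quasi_static_checks(signals: List[str], clock: str, reset_n: str, config_done: str) -> str:
    """One assertion per quasi-static signal, for inclusion in a simulation checker module."""
    return "\n".join(quasi_static_assertion(s, clock, reset_n, config_done) for s in signals)


print(quasi_static_checks(["cfg_mode", "cfg_div_sel"], "core_clk", "rst_n", "cfg_done"))
```

If a regression ever toggles `cfg_mode` after `cfg_done` rises, the assertion fires, turning a silent CDC waiver into a visible simulation failure.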

