SSD memory is enjoying a resurgence in datacenters through NVMe. It is not replacing traditional hard disk drives (HDDs), which, though slower, are still much cheaper. Instead, NVMe storage has become a “warm” storage cache between the hot DRAM close to processors and the “cold” HDD storage. I commented last year on why this has become important for the hyperscalers: cloud throughput, and therefore revenue, is heavily affected by storage latency, which makes a fast storage cache a high priority. That in turn has implications for verifying warm memory, that is, proving your solution will deliver what it promises.

You start to wonder what other operations you could offload into storage, SQL serving for example. Database operations touch large volumes of data, and having to drag all of it over to the processor first can dominate latency (and power). It’s faster and lower power to do the bulk of the heavy lifting right in the NVMe unit. I’ve even seen a recent suggestion that linear algebra could be moved into SQL, from which it would be a short jump to push it into NVMe. Another paper proposes an architecture that uses this kind of approach to accelerate big data computation.
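To make the data-movement argument concrete, here is a minimal Python sketch comparing how many bytes cross the host interface when a selective filter runs on the host versus inside the storage device. The `ScanQuery` type, table size, row width and selectivity are illustrative assumptions, not figures from any of the papers mentioned, and the model ignores command overhead and compute time.

```python
from dataclasses import dataclass


@dataclass
class ScanQuery:
    """Hypothetical filter query over a table stored on the SSD."""
    rows: int           # number of rows in the table
    row_bytes: int      # bytes per row
    selectivity: float  # fraction of rows matching the predicate (0..1)


def bytes_moved_host_filter(q: ScanQuery) -> int:
    # Host-side filtering: every row must cross PCIe before the
    # predicate can be evaluated on the processor.
    return q.rows * q.row_bytes


def bytes_moved_in_storage_filter(q: ScanQuery) -> int:
    # In-storage filtering: the device evaluates the predicate locally
    # and returns only the matching rows to the host.
    return round(q.rows * q.selectivity) * q.row_bytes


if __name__ == "__main__":
    q = ScanQuery(rows=100_000_000, row_bytes=128, selectivity=0.01)
    host = bytes_moved_host_filter(q)
    device = bytes_moved_in_storage_filter(q)
    print(f"host filter:       {host / 1e9:.2f} GB moved")
    print(f"in-storage filter: {device / 1e9:.2f} GB moved")
    print(f"reduction:         {host / device:.0f}x")
```

In this simple model the reduction in transferred bytes is just the inverse of the selectivity, which is why highly selective queries are the natural first candidates for offload.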

Architecture complexity

It seems there is no limit to what we can do with computation close to storage when we put our minds to it, all of which makes NVMe storage much more powerful. The downside is that verifying warm memory implementations, already complex, becomes even more complex.

First there’s the architecture complexity. One of these devices may service multiple hosts and many I/O queues. It must also provide a level of security similar to that offered by the hosts, including at least encryption, perhaps a hardware root of trust, and other features to harden the device against attacks.
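Servicing many I/O queues means the controller needs an arbitration policy for fetching commands. The NVMe specification defines round-robin arbitration across submission queues, with weighted round robin as an option; the Python sketch below models only the plain round-robin case, one command per queue per turn, and ignores arbitration burst, priority classes and multi-host details. The queue IDs and command strings are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def round_robin_arbitrate(queues: Dict[int, Deque[str]]) -> List[Tuple[int, str]]:
    """Drain submission queues in round-robin order, one command per turn.

    Simplified model: real NVMe arbitration can fetch several commands per
    turn (arbitration burst) and may apply weighted priorities.
    Note: the input deques are consumed in place.
    """
    order: List[Tuple[int, str]] = []
    active = deque(qid for qid in sorted(queues) if queues[qid])
    while active:
        qid = active.popleft()
        order.append((qid, queues[qid].popleft()))
        if queues[qid]:  # queue still has work, so it rejoins the rotation
            active.append(qid)
    return order


if __name__ == "__main__":
    sqs = {
        1: deque(["read A0", "read A1", "read A2"]),  # e.g. a queue from host A
        2: deque(["write B0"]),                        # e.g. a queue from host B
        3: deque(["read C0", "read C1"]),
    }
    for qid, cmd in round_robin_arbitrate(sqs):
        print(f"SQ{qid}: {cmd}")
```

Even this toy shows why verification needs varied traffic: a busy queue cannot starve a lightly loaded one, and a test should confirm that property under realistic mixes.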

Implementation complexity

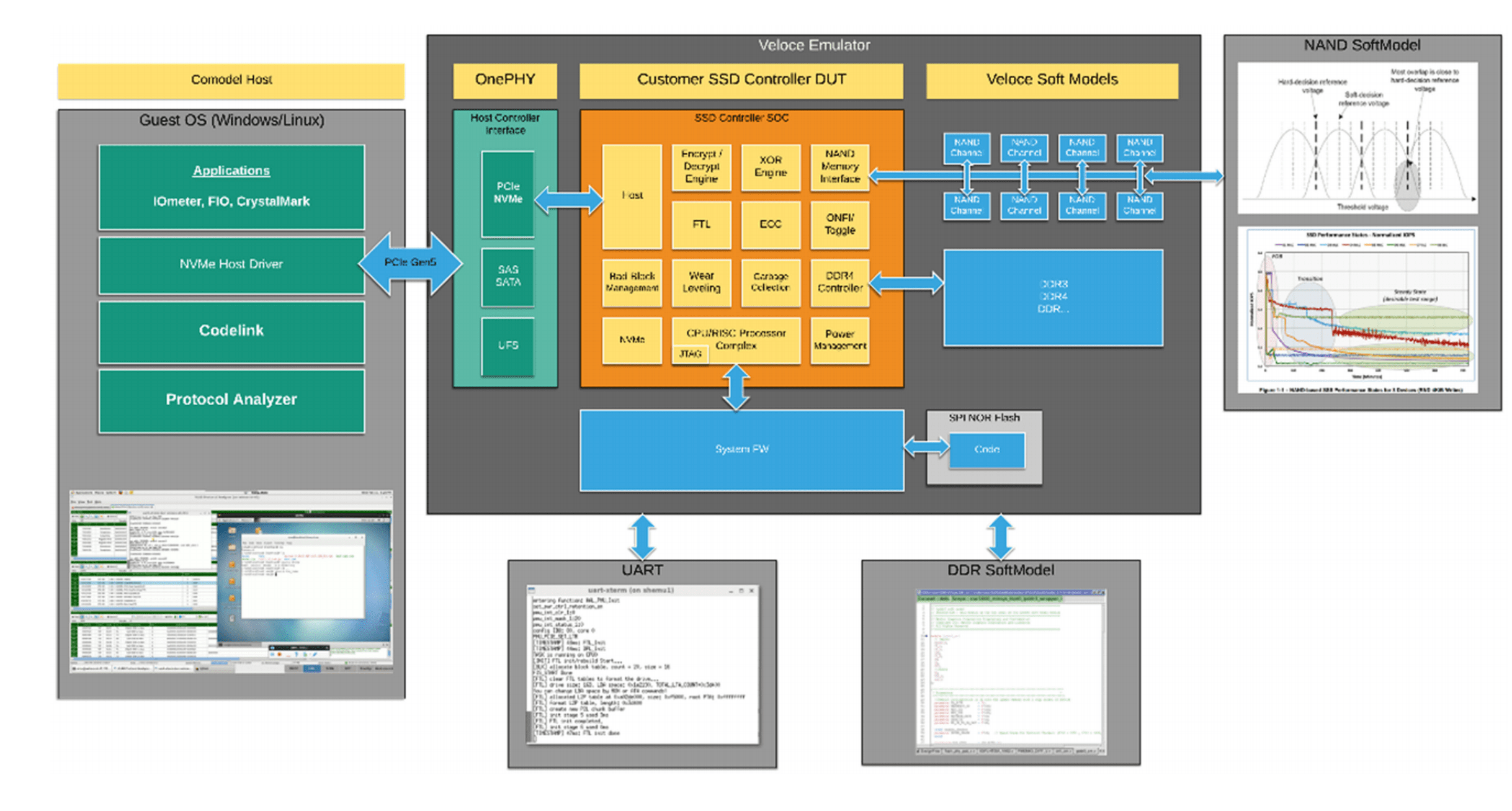

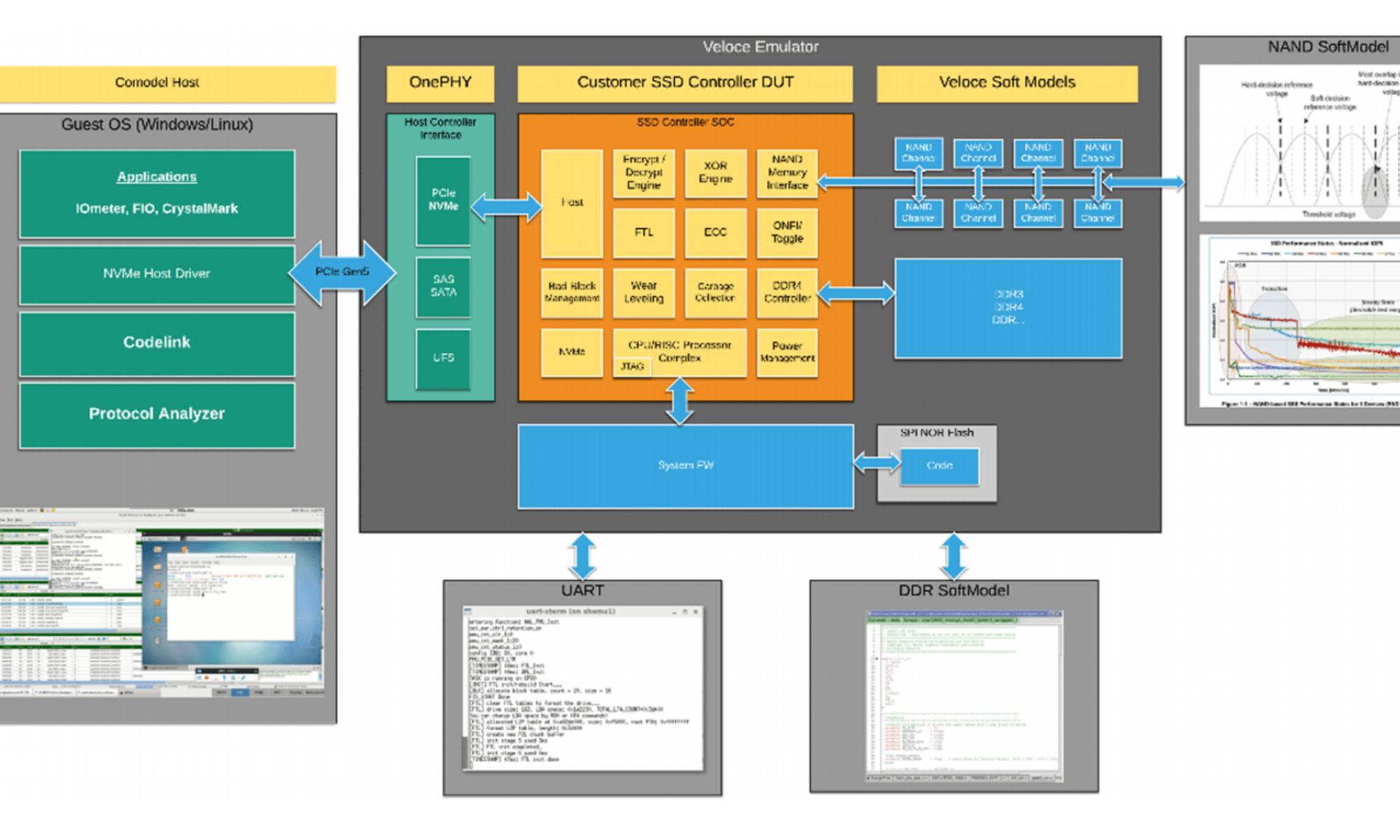

Then there’s the implementation complexity. The controller must handle the NVMe interface, encryption, logical-to-physical address mapping, wear-leveling, garbage collection, the interface to local DRAM through DDR (to hold data while it is doing garbage collection) and so on. It is a full-blown processor in its own right. As if that weren’t enough, you can’t just model the flash as perfect memory. A read can return soft errors, to which the controller must adapt. According to the Mentor Veloce team, design teams need to model flash bit behavior down to this level of accuracy to have full confidence in their system-level testing. Mentor provides soft models for NAND, NOR and DDR to represent these components.
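To illustrate why “perfect memory” is not enough, here is a toy behavioral model in Python. It is not Mentor’s NAND model; the names `NoisyNand`, `controller_read` and `TinyFtl` are hypothetical. Assumptions: each read flips every bit independently at a raw bit error rate (RBER); ECC is idealized as “corrects up to `ecc_bits` errors” by comparing against the stored golden data; read-retry is modeled as a re-read at a lower RBER; and garbage collection, wear-leveling and encryption are left out.

```python
import random
from typing import Dict, List, Optional, Set, Tuple


class UncorrectableError(Exception):
    """Raised when no read attempt is within the (idealized) ECC limit."""


class NoisyNand:
    """Toy NAND array whose reads flip each bit with probability rber."""

    def __init__(self, num_pages: int, rber: float, seed: int = 0) -> None:
        self.pages: List[Optional[bytes]] = [None] * num_pages
        self.rber = rber
        self.rng = random.Random(seed)

    def program(self, ppa: int, data: bytes) -> None:
        # NAND cannot overwrite a programmed page in place; it must be erased first.
        if self.pages[ppa] is not None:
            raise ValueError(f"physical page {ppa} already programmed")
        self.pages[ppa] = bytes(data)

    def read(self, ppa: int, rber: Optional[float] = None) -> bytes:
        stored = self.pages[ppa]
        if stored is None:
            raise ValueError(f"physical page {ppa} is erased")
        p = self.rber if rber is None else rber
        out = bytearray(stored)
        for i in range(len(out)):
            for bit in range(8):
                if self.rng.random() < p:
                    out[i] ^= 1 << bit  # inject a soft bit error
        return bytes(out)


def controller_read(nand: NoisyNand, ppa: int, ecc_bits: int = 8,
                    retry_rbers: Tuple[float, ...] = (1e-3, 1e-4)) -> Tuple[bytes, int]:
    """Read with idealized ECC plus read-retry; returns (data, retries used)."""
    golden = nand.pages[ppa]  # stands in for what an ideal ECC decoder recovers
    for attempt, rber in enumerate((None, *retry_rbers)):
        raw = nand.read(ppa, rber)
        bit_errors = sum(bin(a ^ b).count("1") for a, b in zip(raw, golden))
        if bit_errors <= ecc_bits:
            return golden, attempt
    raise UncorrectableError(f"page {ppa}: uncorrectable after {len(retry_rbers)} retries")


class TinyFtl:
    """Minimal logical-to-physical (L2P) mapping with out-of-place writes.
    No garbage collection or wear-leveling: it simply runs out of pages."""

    def __init__(self, nand: NoisyNand) -> None:
        self.nand = nand
        self.l2p: Dict[int, int] = {}
        self.stale: Set[int] = set()  # pages garbage collection would reclaim
        self.next_free = 0

    def write(self, lpa: int, data: bytes) -> None:
        if self.next_free >= len(self.nand.pages):
            raise RuntimeError("out of free pages (no garbage collection modeled)")
        ppa = self.next_free
        self.nand.program(ppa, data)
        self.next_free += 1
        old = self.l2p.get(lpa)
        if old is not None:
            self.stale.add(old)  # the previous copy becomes invalid
        self.l2p[lpa] = ppa

    def read(self, lpa: int) -> Tuple[bytes, int]:
        return controller_read(self.nand, self.l2p[lpa])


if __name__ == "__main__":
    ftl = TinyFtl(NoisyNand(num_pages=16, rber=2e-2, seed=1))
    ftl.write(7, b"x" * 64)
    ftl.write(7, b"y" * 64)  # the rewrite lands on a new physical page
    data, retries = ftl.read(7)
    print(ftl.l2p[7], sorted(ftl.stale), retries)
```

The point of the sketch is the interaction: the same logical read can take zero or several retries depending on injected bit errors, which is exactly the kind of timing variation a perfect-memory model would never exercise.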

Traffic complexity

Finally, there’s traffic complexity. A verification plan must also model traffic with all the variations you might expect to see in the loads from the host (one or more servers) connected through a PCIe interface. For benchmarking, this means running a standard I/O load such as IOmeter, FIO or CrystalMark and measuring throughput, latency and all the other factors you aim to improve by using warm memory.
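Whichever load generator drives the virtual host, the headline figures reduce to simple calculations over per-command submit and completion timestamps. The Python sketch below assumes a hypothetical `IoRecord` trace format (timestamps in microseconds) and uses nearest-rank percentiles; tools such as FIO report similar statistics but compute and bucket them in their own way.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class IoRecord:
    submit_us: float    # time the host submitted the command (microseconds)
    complete_us: float  # time the completion was posted (microseconds)
    nbytes: int         # transfer size in bytes


def percentile(sorted_vals: List[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_vals)))
    return sorted_vals[rank - 1]


def summarize(records: List[IoRecord]) -> Dict[str, float]:
    """Throughput and latency summary for one measurement window."""
    latencies = sorted(r.complete_us - r.submit_us for r in records)
    start = min(r.submit_us for r in records)
    end = max(r.complete_us for r in records)
    elapsed_s = (end - start) / 1e6
    total_bytes = sum(r.nbytes for r in records)
    return {
        "iops": len(records) / elapsed_s,
        "throughput_MBps": total_bytes / elapsed_s / 1e6,
        "lat_avg_us": sum(latencies) / len(latencies),
        "lat_p50_us": percentile(latencies, 50),
        "lat_p99_us": percentile(latencies, 99),
    }


if __name__ == "__main__":
    trace = [IoRecord(0, 100, 4096), IoRecord(0, 200, 4096),
             IoRecord(100, 400, 4096), IoRecord(200, 1000, 4096)]
    for name, value in summarize(trace).items():
        print(f"{name:>16}: {value:.1f}")
```

Tail percentiles such as p99 matter as much as the average here, since a cache tier that is fast on average but occasionally stalls (for example during garbage collection) undermines the latency benefit.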

Put all of this together and you have a big verification task: a virtual host and an SSD simulation model, which you have to run in emulation to deliver the throughput needed for this volume of verification. Ben Whitehead, Storage Products Specialist at Mentor, has written a white paper, “Virtual Verification of Computational Storage Devices”, describing the Veloce solution Mentor has assembled to address this need, including a number of application-specific features for measurement, checking and debug. It’s an interesting read for anyone working in this hot domain.
