The first thing to realize about Dassault’s Simulation Lifecycle Management platform is that in the non-IC world where Dassault primarily operates, simulation doesn’t just mean functional verification or running Spice. It is anything during the design that produces analytical data. All of that data is important if you are strictly tracking whether a design meets its requirements. So yes, functional coverage and Spice runs, but also early timing data from synthesis, physical verification, timing and power data from design closure, and so on. All of this is what the automotive, aerospace and other worlds call “simulation.” To them, in the mechanical CAD (MCAD) world, anything done on the computer as opposed to on a machine tool is simulation. With that world view, anything done with a chip design other than taping it out and fabricating it is also simulation.

So Simulation Lifecycle Management (SLM) is an integrated process management platform for semiconductor new product introduction. The big idea is to take the concepts and processes used in MCAD to design cars and planes and push them down into the semiconductor design process. In particular, that means keeping track of pretty much everything.

In automotive, for example, there is ISO 26262, which covers specification, design, implementation, integration, verification, validation, and production release. In practice this means that you need to focus on traceability of requirements:

- document all requirements

- document everything that you do

- document everything that you did to verify it

- document the environment that you used to do that

- keep track of requirement changes and document that they are still met

That’s a lot of documenting and the idea is to make almost all of it happen automatically as a byproduct of the design process. To do that, SLM needs to be the cockpit from where the design process is driven.
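To make "documenting as a byproduct" a little more concrete, here is a minimal Python sketch. It is not SLM's API; `TraceLog`, `RunRecord`, the requirement ID and the stand-in task are all hypothetical names invented for illustration. The point is only that if every task is launched through one wrapper, the requirement, the environment and the result get recorded without anyone writing anything down.

```python
import platform
import sys
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


@dataclass
class RunRecord:
    """One traceability entry: what was run, for which requirement, and where."""
    requirement_id: str
    task_name: str
    environment: Dict[str, str]
    started_at: str
    result: Any


@dataclass
class TraceLog:
    """Hypothetical in-memory log; a real system would persist this somewhere."""
    records: List[RunRecord] = field(default_factory=list)

    def run(self, requirement_id: str, task_name: str,
            task: Callable[[], Any]) -> Any:
        # Capture the environment up front so the run can be reproduced later.
        env = {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        }
        started = datetime.now(timezone.utc).isoformat()
        result = task()
        self.records.append(
            RunRecord(requirement_id, task_name, env, started, result)
        )
        return result


log = TraceLog()
# The lambda is a stand-in for launching a real tool (assumption for illustration).
violations = log.run("REQ-DRC-001", "drc_check", lambda: 3)
```

The engineer just gets `violations` back, while `log.records` quietly accumulates the who, what and where needed for an audit.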

There are really two halves to the process. One is primarily used by management to define processes and keep track of the state of the design. This core management environment has three primary functions:

- dynamic traceability: the heart of the platform, documenting what you did, how you know you did it and the environment you used to do it

- process management: knowledge that everything that has been done is, in fact, documented

- work management: see results, keep track of where you are in metrics like functional coverage, percentage of paths meeting timing during timing closure and so on.

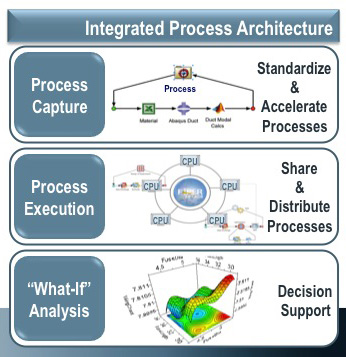

The other half is primarily used by the engineers actually doing the design: running tools and scripts and making judgement calls. This is called the integrated process architecture and also consists of three parts:

- process capture: a way to standardize and accelerate design processes and flows for specific tasks

- process execution: integrating the processes and flows into the load-leveling environment, server farms, clouds or whatever other execution environments are in use

- decision support: automation of what-if analysis for tasks like area-performance tradeoffs where many runs at many different points may need to be created and then the mass of data analyzed to select the best tradeoff
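As a rough illustration of the decision-support idea, the sketch below filters a batch of what-if runs down to the ones worth a human look: the Pareto front of area versus delay, where smaller is better on both. This is an assumption about how such an analysis could be done, not a description of what SLM does internally, and the run names and numbers are made up.

```python
from typing import List, NamedTuple


class Run(NamedTuple):
    name: str
    area_um2: float
    delay_ns: float


def pareto_front(runs: List[Run]) -> List[Run]:
    """Return the runs not dominated by any other run (smaller is better)."""
    front = []
    for r in runs:
        # r is dominated if some other run is no worse on both metrics
        # and strictly better on at least one.
        dominated = any(
            o.area_um2 <= r.area_um2 and o.delay_ns <= r.delay_ns
            and (o.area_um2 < r.area_um2 or o.delay_ns < r.delay_ns)
            for o in runs
        )
        if not dominated:
            front.append(r)
    return sorted(front, key=lambda r: r.area_um2)


# Illustrative data only.
runs = [
    Run("a", 100.0, 2.0),
    Run("b", 120.0, 1.5),
    Run("c", 130.0, 1.8),  # bigger and slower than "b", so dominated
    Run("d", 90.0, 2.5),
]
front = pareto_front(runs)
```

With this data the front is `d`, `a`, `b`; run `c` drops out because `b` beats it on both area and delay. Choosing among the survivors is still a judgement call.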

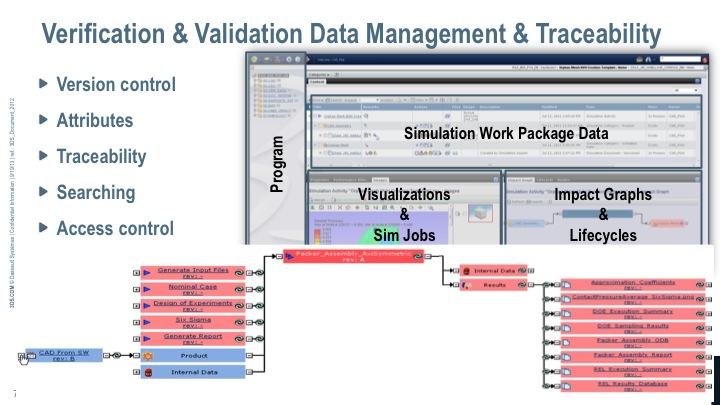

There is obviously a lot more detail depending on which task you dig down into. But to get a flavor of it, the above screen capture shows some of the requirements traceability. A requirement may generate many more sub-requirements that, in turn, generate tasks that are required to demonstrate that the requirement is met (and keep track of everything to be able to reproduce the run too). Again, don’t forget that where the above diagram says “simulation” it might mean keeping track of how many DRC violations remain to be fixed.
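The requirement, sub-requirement and task hierarchy can be sketched as a small tree. This is a hedged illustration, not SLM's data model: `Requirement`, `Task` and the IDs are hypothetical, and a real system would also store the run environment alongside each task.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    passed: bool


@dataclass
class Requirement:
    req_id: str
    tasks: List[Task] = field(default_factory=list)
    children: List["Requirement"] = field(default_factory=list)

    def is_met(self) -> bool:
        # Met only if every task passed and every sub-requirement is met.
        # Note: a requirement with no tasks and no children counts as met.
        return (all(t.passed for t in self.tasks)
                and all(c.is_met() for c in self.children))

    def open_items(self) -> List[str]:
        # Collect failing tasks here and in all sub-requirements.
        items = [f"{self.req_id}/{t.name}" for t in self.tasks if not t.passed]
        for c in self.children:
            items.extend(c.open_items())
        return items


timing = Requirement("REQ-TIMING", tasks=[Task("sta_signoff", True)])
physical = Requirement("REQ-PHYSICAL", tasks=[Task("drc_clean", False)])
chip = Requirement("REQ-CHIP", children=[timing, physical])

chip_met = chip.is_met()
todo = chip.open_items()
```

Here the top-level requirement is not met because the DRC task under the physical sub-requirement is still failing, which is exactly the kind of "simulation" status the diagram is tracking.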

Subsequent blogs will go into more detail of just how SLM works in practice.
