Around the mid-2000s the performance component of Moore’s Law started to tail off. That slack was nicely picked up by architecture improvements, which continue to march forward but add a new layer of complexity in performance optimization and verification. Nick Heaton (Distinguished Engineer and Verification Architect at Cadence) and Colin Osbourne (Senior Principal System Performance Architect and Distinguished Engineer at Arm) have co-written an excellent book, Performance Cookbook for Arm®, explaining the origins of this complexity and how best to attack performance optimization/verification in modern SoC and multi-die designs. This is my takeaway based on a discussion with Nick and Colin, complemented by reading the book.

Who needs this book?

It might seem that system performance is a problem for architects, not designers or verification engineers. Apparently this is not entirely true; after an architecture is delivered to the design team, those architects move on to the next design. From that point on, or so I thought, design team responsibilities are to assemble all the necessary IPs and connectivity as required by the architecture spec, to verify correctness, and to tune primarily for area, power, and timing closure.

There are a couple of fallacies in this viewpoint. The first is the assumption that the architecture spec alone locks down most of the performance, and the design team need not worry about performance optimizations beyond implementation details defined in the spec. The second is that real performance measurement, and whatever optimization is still possible at that stage, must be driven by real workloads – perhaps applications running on an OS, running on firmware, running on the full hardware model.

But an initial architecture is not necessarily perfect, and there are still many degrees of freedom left to optimize (or get wrong) in implementation. Yet many of us, certainly junior engineers, have insufficient understanding of how microarchitecture choices can affect performance and how to find such problems. Worse still, otherwise comprehensive verification flows lack structured methods to regress performance metrics as a design evolves. Which can lead to nasty late surprises.

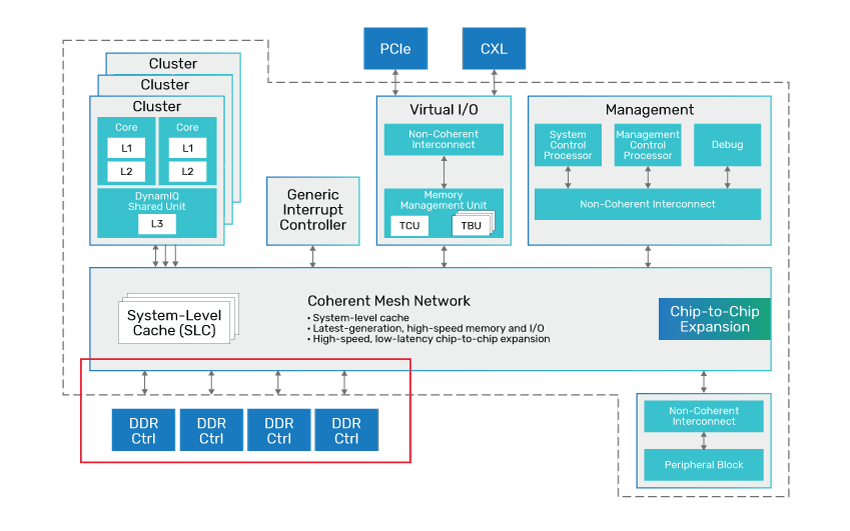

The book aims to inform design teams on the background and methods in design and verification for high performance Arm-based SoCs, especially around components that can dramatically impact performance: the memory hierarchy, CPU cores, system connectivity, and the DRAM interface.

A sampling of architecture choices affecting performance

I can’t do justice to the detail in the book, but I’d like to give a sense of the big topics. First, the memory hierarchy has a huge impact on performance. Anything that isn’t idling needs access to memory all the time. Off-chip/chiplet DRAM can store lots of data but has very slow access times. On-chip memory is much faster but is expensive in area. Cache memory, relying on typical locality of reference in memory addresses, provides a fast on-chip proxy for recently accessed addresses, needing to update from DRAM only on cache misses or a main memory update. All this is generally understood; however, sizing and tuning these memory options is a big factor in performance management.
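To give a feel for why cache sizing matters so much, here is a minimal sketch of the textbook average memory access time (AMAT) formula for a single cache level in front of DRAM. The function name and all the numbers are my own illustrative assumptions, not figures from the book.

```python
def amat(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time for one cache level backed by DRAM.

    AMAT = hit time + miss rate * miss penalty (standard textbook formula).
    """
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical values: 1 ns cache hit, 80 ns penalty to go out to DRAM.
for miss_rate in (0.01, 0.05, 0.10):
    print(f"miss rate {miss_rate:.0%}: AMAT = {amat(1.0, miss_rate, 80.0):.1f} ns")
```

Even under these made-up numbers, moving from a 1% to a 10% miss rate multiplies the average access time several times over, which is why a cache configuration choice made in implementation can show up directly in system performance.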

Processors are running faster, outpacing even fast memories. To hide latencies in fetching they take advantage of tricks like pre-fetch and branch prediction to request more instructions ahead of execution. In a multi-core system this creates more memory bandwidth demand. Virtual memory support also adds to latency and bandwidth overhead. Each can impact performance.

On-chip connectivity is the highway for all inter-IP traffic in the system and should handle target workloads with acceptable performance through a minimum of connections. This is a delicate performance/area tradeoff. For a target workload, some paths must support high bandwidth, some low latency, while others can allow some compromise. Yet these requirements are at most guidelines in the architecture spec. Topology will probably be defined at this stage: crossbar, distributed NoC, or mesh, for example. But other important parameters can also be configured, say FIFO depths in bridges and regulator options to prioritize different classes of traffic. Equally, endpoint IPs connected to networks often support configurable buffer depths for read/write traffic. All these factors affect performance, making connectivity a prime area where implementation is closely intertwined with architecture optimization.

Taking just one more example, interaction between DRAM and the system is also a rich area for performance optimization. Intrinsic DRAM performance has changed little over many years, but there have been significant advances in distributed read-write access to different banks/bank groups allowing for parallel controller accesses, and prefetch methods where the memory controller guesses what range of addresses may be needed next. Both techniques are supported by continually advancing memory interface standards (e.g. in DDR) and continually more intelligent memory controllers. Again, these optimizations have proven critical to continued advances in performance.
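As a rough illustration of why spreading accesses across banks matters, the sketch below uses a deliberately simple, hypothetical address-to-bank mapping in which consecutive 64-byte lines rotate across 8 banks. Real memory controllers use configurable and often hashed mappings, so treat `bank_of` and its parameters purely as assumptions for the example.

```python
def bank_of(addr, line_bytes=64, num_banks=8):
    """Hypothetical mapping: consecutive cache lines rotate across banks."""
    return (addr // line_bytes) % num_banks


# A sequential stream touches every bank, so accesses can overlap.
sequential = [i * 64 for i in range(8)]
print([bank_of(a) for a in sequential])

# A stride equal to line_bytes * num_banks hits the same bank every time,
# so accesses serialize on that one bank.
strided = [i * 64 * 8 for i in range(8)]
print([bank_of(a) for a in strided])
```

The same traffic volume can therefore see very different DRAM behavior depending on how the access pattern interacts with the mapping, which is one reason this area rewards tuning.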

A spec will suggest IP choices of course, and initial suggestions for configurable parameters but based on high-level sims; it can’t forecast detailed consequences emerging in implementation. Performance testing on the implementation is essential to check performance remains within spec, and quite likely tuning may at times be needed to stay within that window. Which requires that you have some way to figure out if you have created a problem, then have some way to isolate a root cause, and finally understand how to correct the problem.
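Checking that performance stays within the spec window can be as simple in principle as comparing measured metrics against allowed ranges on every regression run. The following is a minimal sketch of that idea; the metric names, limits, and the `check_metrics` helper are all hypothetical and not taken from the book or from any Cadence tool.

```python
def check_metrics(measured, spec):
    """Compare measured metrics against a spec window.

    spec maps metric name -> (min_allowed, max_allowed); None means unbounded.
    Returns a list of human-readable failure strings (empty if all pass).
    """
    failures = []
    for name, (lo, hi) in spec.items():
        value = measured.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif (lo is not None and value < lo) or (hi is not None and value > hi):
            failures.append(f"{name}: {value} outside [{lo}, {hi}]")
    return failures


# Hypothetical spec: at least 12 GB/s read bandwidth, at most 150 ns read latency.
spec = {"read_bw_gbps": (12.0, None), "read_latency_ns": (None, 150.0)}
measured = {"read_bw_gbps": 11.2, "read_latency_ns": 140.0}

for failure in check_metrics(measured, spec):
    print("PERF FAIL:", failure)
```

Running a check like this alongside functional regressions flags that a problem has been created; isolating the root cause and correcting it still needs the debug methods the authors describe.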

Finding, fixing, and regressing performance problems

First, both authors stress that performance checking should be run bottom-up. Should be a no-brainer but the obvious challenge is what you use for test cases in IP or subsystem testing, even perhaps as a baseline for full system testing. Real workloads are too difficult to map to low-level functions, come with too much OS and boot overhead, and lack any promise of coverage however that should be defined. Synthetic tests are a better starting point.

Also you need a reference TLM model, developed and refined by the architect. This will be tuned especially to drive architecture optimization on the connectivity and DDR models.

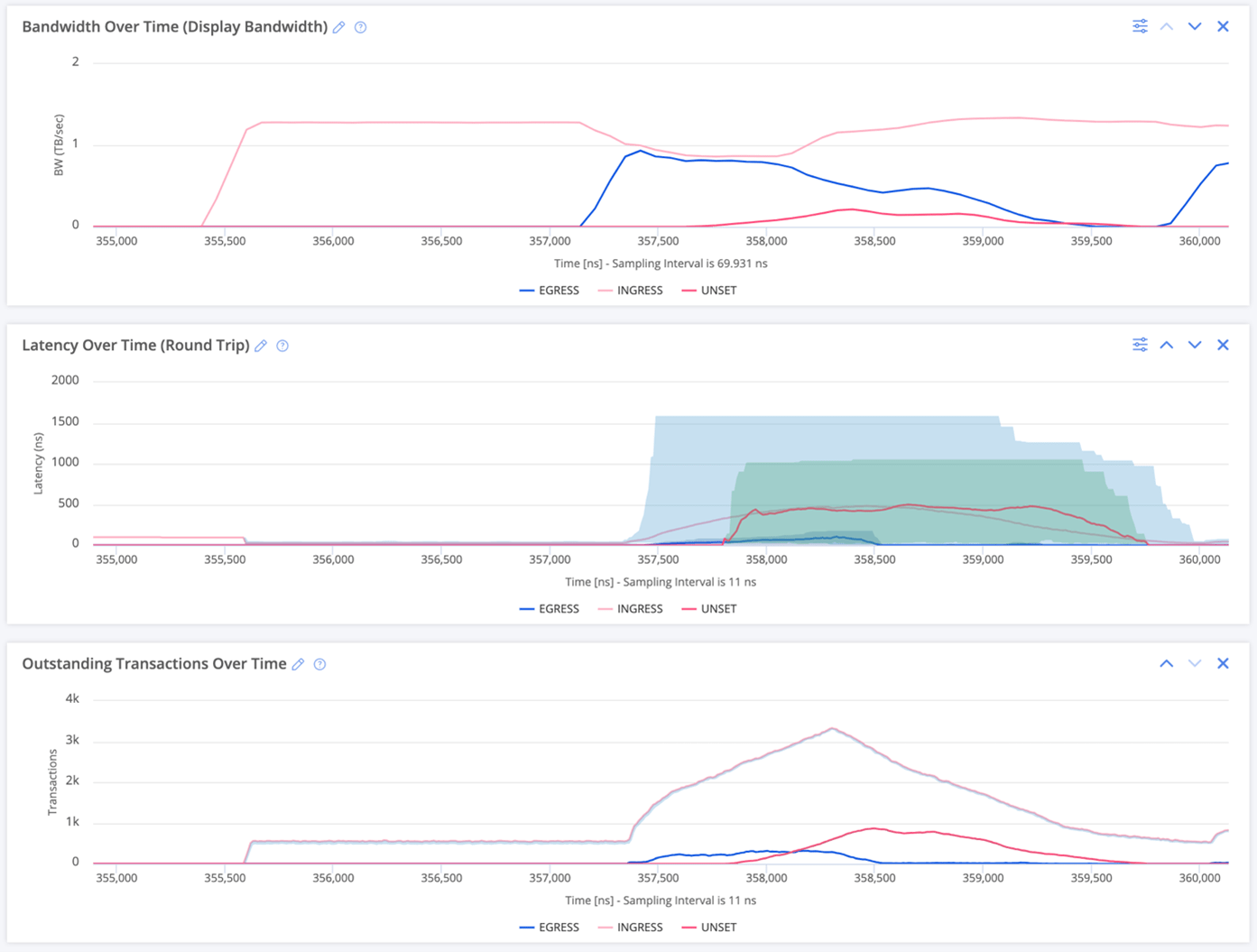

Then bottom-up testing can start, say with a UVM testbench driving the interconnect IP connected to multiple endpoint VIPs. Single path tests (one initiator, one target) provide a starting point for regression-ready checks on bandwidth and latencies. Also important is a metric I hadn’t considered, but which makes total sense: Outstanding Transactions (OT). This measures the amount of backed up traffic. Cadence provides their System Testbench Generator to automate building these tests, together with Max Bandwidth, Min Latency and Outstanding Transaction Sweep tests, more fully characterizing performance than might be possible through hand-crafted tests.
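To make these three metrics concrete, here is a minimal sketch that derives bandwidth, latency, and peak outstanding transactions from a list of transaction records for a single path. I am assuming OT means the number of transactions issued but not yet completed; the record format and the `summarize` function are my own invention for illustration, not the output format of any Cadence tool.

```python
def summarize(transactions):
    """Summarize a single-path trace.

    transactions: list of (issue_ns, complete_ns, num_bytes) tuples.
    """
    start = min(issue for issue, _, _ in transactions)
    end = max(complete for _, complete, _ in transactions)
    total_bytes = sum(num_bytes for _, _, num_bytes in transactions)
    latencies = [complete - issue for issue, complete, _ in transactions]

    # Sweep issue (+1) and complete (-1) events in time order to find the
    # peak number in flight. At equal timestamps, -1 sorts before +1, so a
    # completion is counted before a new issue at the same instant.
    events = sorted(
        [(issue, 1) for issue, _, _ in transactions]
        + [(complete, -1) for _, complete, _ in transactions]
    )
    in_flight = peak = 0
    for _, delta in events:
        in_flight += delta
        peak = max(peak, in_flight)

    return {
        "bandwidth_bytes_per_ns": total_bytes / (end - start),
        "avg_latency_ns": sum(latencies) / len(latencies),
        "max_latency_ns": max(latencies),
        "peak_outstanding": peak,
    }


# Hypothetical trace: four 64-byte reads from one initiator to one target.
trace = [(0, 100, 64), (10, 120, 64), (20, 90, 64), (130, 200, 64)]
print(summarize(trace))
```

Numbers like these, captured per path and per run, are the kind of thing that can then be tracked from one regression to the next.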

The next level up is subsystem testing. Here the authors suggest using Cadence System VIP and their Rapid Adoption Kits (RAKs). These are built around the Cadence Perspec System Verifier augmented by the System Traffic Library, AMBA Adaptive Test Profile (ATP) support and much more. Perspec enables bare metal testing (without need for drivers etc.), with easy system-level scenario development. Very importantly, this approach makes extensive test reuse possible (as can be seen in available libraries). RAKs leverage these capabilities for out-of-the-box test solutions and flows, for an easy running start.

The book ends with a chapter on a worked performance debug walkthrough. I won’t go into the details other than to mention that it is based on an Arm CMN mesh design, for which a performance regression test exhibits a failure because of an over-demanding requester forcing unnecessary retries on a cache manager.

My final takeaway

This is a very valuable book, and also very readable. These days I have a more theoretical than hands-on perspective, yet it opened my eyes to the complexity of performance optimization and verification, while for the same reasons making it seem more tractable. Equally important, this book charts a structured way forward to make performance a first-class component in any comprehensive verification/regression plan, with all the required elements: traffic generation, checks, debug, scoreboarding, and the beginnings of coverage.

You can buy the book on Amazon – definitely worth it!
