I attended much of the Jasper users’ group a week ago. There were several interesting presentations that I can’t just blog about because companies are shy, and some that would only be of interest if you were a user of Jasper’s products on a daily basis.

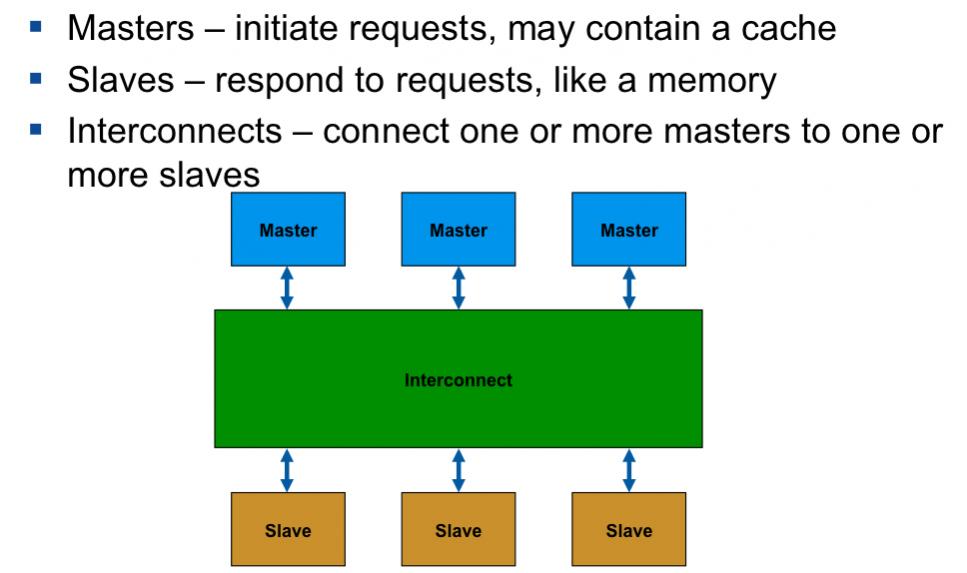

But for me the most interesting presentations were several on an area that I didn’t realize this sort of formal verification was being used for. The big driver is that modern multi-core processors now require much more sophisticated cache control than before. ARM in particular has created some quite sophisticated protocols under the AMBA4 umbrella that they announced at DAC.

In the old days, cache management was largely done in software, invalidating large parts of the cache to ensure no stale data could get accessed, and forcing the cache to gradually be reloaded from main memory. There are several reasons why this is no longer appropriate. Caches have got very large and the penalty for off-chip access back to main memory is enormous. Large amounts of data flowing through a slow interface is bad news.

As a historical note, the first cache memory I came across was on the Cambridge University Titan computer. It had 32 words of memory accessed using the bottom 7 bits as the key and was only used for instructions (not data). This architecture sounds too small to be useful, but in fact it ensures that any loop of less than 32 instructions runs out of the cache and so that trivial amount of additional memory made a huge performance difference.

Anyway, caches now need to have more intelligence. Instead of invalidating lots of data that might turn out to be stale, the cache controllers need to invalidate on a line-by-line basis in order to ensure that anybody reading an address gets the latest value written. This even needs to be extended to devices that don’t even have caches since a DMA device cannot simply go to the main memory due to delayed write-back.

Obviously these protocols are pretty complicated, so how do you verify them? I don’t mean how do you verify that a given RTL implementation of the protocol is good, that is the normal verification problem that formal and simulation techniques have been used on for years. I mean how do you verify that the protocol itself is correct. In particular, that the caches are coherent (any reader correctly gets the last write) and deadlock-free (all operations will eventually complete, or a weaker condition, at least one operation can always make progress).

Since this is a Jasper User Group Meeting it wouldn’t be wild to guess that you use formal verification techniques. The clever part is that there is a table driven product called Jasper ActiveModel. This creates a circuit that is a surrogate for the protocol. Yes, it has registers and gates but these are not something implementable, they capture the fundamental atomic operations in the protocol. Then, using standard proof techniques, this circuit can be analyzed to make sure it has the good properties that it needed.

It was a very worthwhile exercise. It turned out that the original spec contain some errors. Of course they were weird corner cases, but that is what formal verification is so good at. Simulation hits the stuff you already thought of. There was one circumstance under which the whole cache infrastructure could deadlock, and another in which it was possible to get access to stale data.

Similar approaches have been taken for verifying communication protocols, which also have similar issues: they might deadlock, the wrong data might get through and so on.

TSMC vs Intel Foundry vs Samsung Foundry 2026