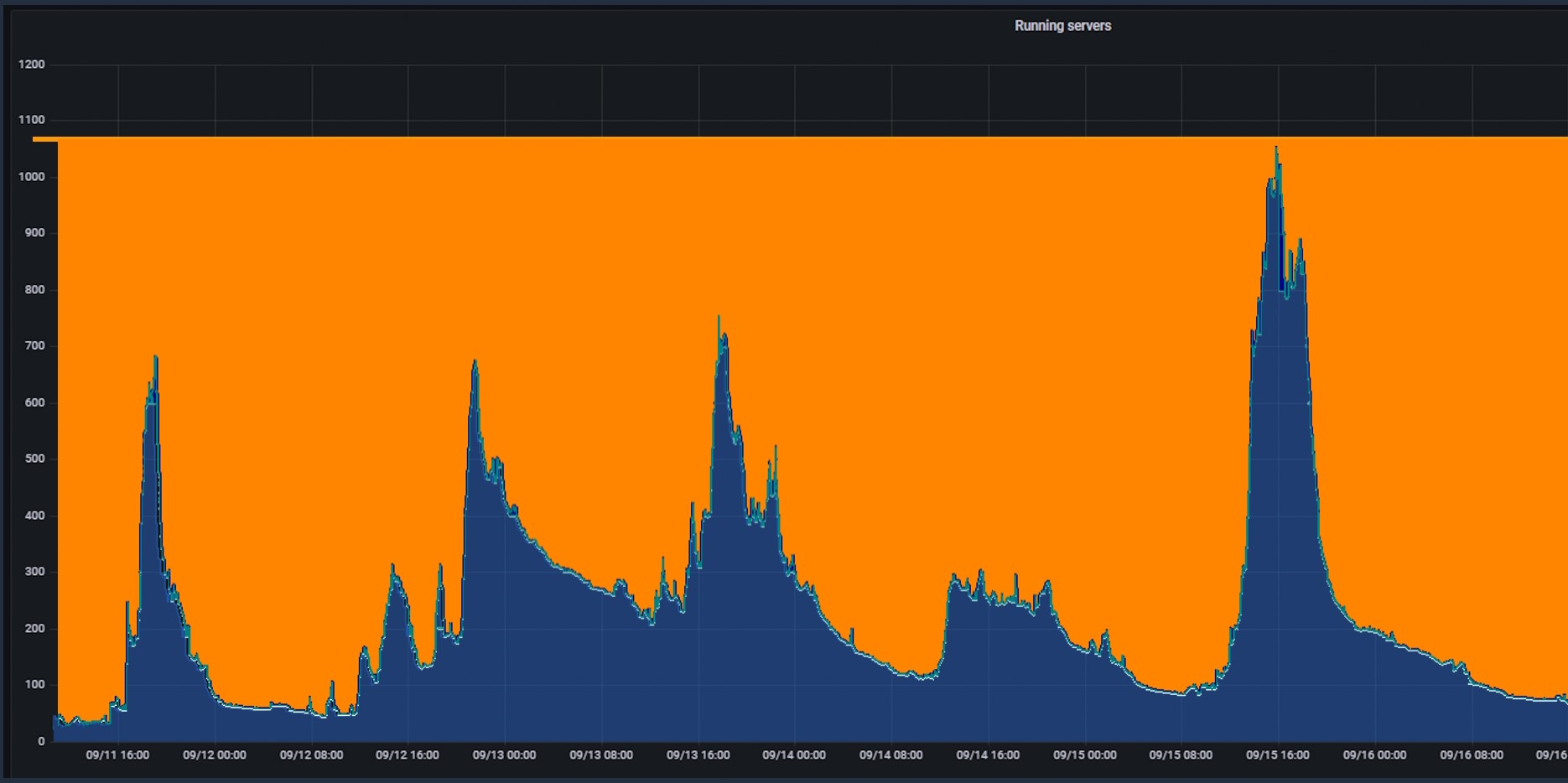

In my previous article, we touched on ways to pull in the schedule. This time I’d like to analyze how peak usage affects project timeline and cost. The graph above (Figure 1) is based on a real usage pattern taken from one development week of the Annapurna Labs 5nm Graviton project.

The graph shows the number of variable servers in use for each hour of each day. A baseline of “always on” compute was removed from the graph to focus on how usage varies over the week. We’ll cover how to address that baseline with Savings Plans or Reserved Instances in a different article.

Navigating the Uncertain Waters of On-Premises Compute

When planning the next compute refresh cycle, estimating the required number of CPUs, memory size, and CPU type for diverse projects involves a significant amount of guesswork, often leading to inefficient resource allocation. While future articles will dive deeper into these specific aspects, this piece focuses on the limitations that are removed when you add AWS to your on-premises cluster.

When using on-premises compute, companies are forced to choose between oversizing the cluster (creating waste but enabling engineers) or undersizing it (saving money but leaving engineers and licenses waiting). Imagine purchasing compute resources to accommodate the highest peak in Figure 1. The orange areas surrounding the peak represent unused, paid-for compute. This highlights the crucial tradeoff companies face: balancing the cost of resources against the potential impact on schedule, quality, and license utilization.

The Bottleneck Conundrum

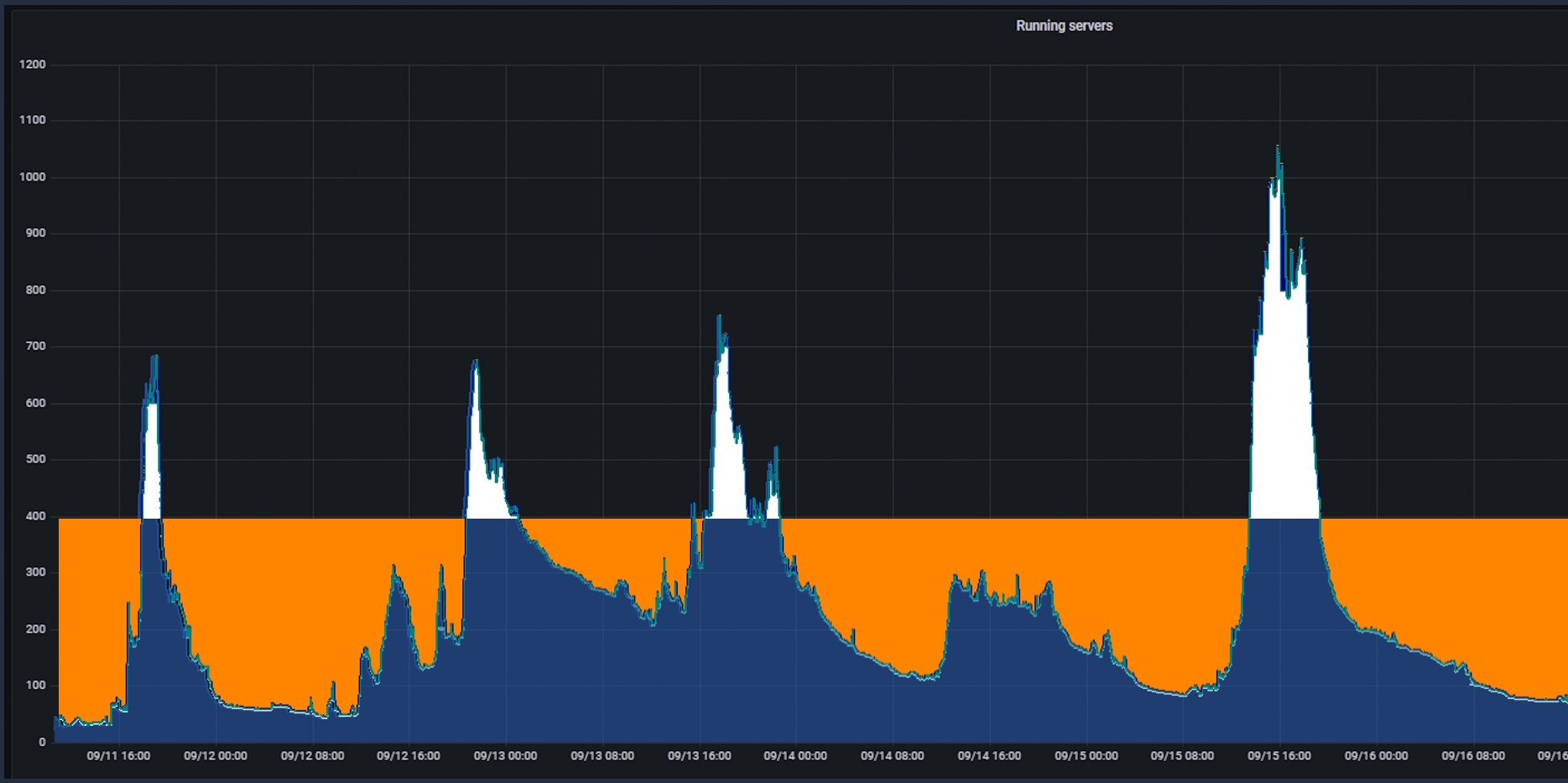

So the IT team, together with R&D leadership, works to estimate the right amount of compute and reduce the unused compute (the upper orange block). Nevertheless, a significant portion of unused compute still remains, representing purchased but unutilized resources, as you can see in Figure 2.
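To make the two areas in Figure 2 concrete, here is a minimal Python sketch that, for a fixed cluster size, counts paid-but-idle server-hours (the orange area) and demand above capacity (the white area). The hourly demand list is a hypothetical, made-up profile, not the Graviton data behind the figures, and `capacity_gap` is an illustrative helper name.

```python
from typing import Sequence, Tuple


def capacity_gap(demand: Sequence[int], capacity: int) -> Tuple[int, int]:
    """Return (idle, unserved) server-hours for a fixed cluster size.

    idle:     capacity that was paid for but not used (orange area)
    unserved: demand above capacity, i.e. work left waiting (white area)
    """
    idle = sum(max(capacity - d, 0) for d in demand)
    unserved = sum(max(d - capacity, 0) for d in demand)
    return idle, unserved


# Hypothetical hourly demand for variable servers (illustrative only).
demand = [20, 35, 80, 120, 60, 30, 25, 90, 40, 20]

# Compare sizing the cluster to the peak with a smaller cluster.
for capacity in (max(demand), 60):
    idle, unserved = capacity_gap(demand, capacity)
    print(f"capacity={capacity}: idle={idle}, unserved={unserved} server-hours")
```

With these made-up numbers, sizing to the peak (120 servers) leaves 680 of 1,200 paid server-hours idle, while shrinking to 60 servers cuts idle time to 190 server-hours but leaves 110 server-hours of work above capacity. Hourly buckets simplify things: in practice that unserved work queues and spills into later hours rather than disappearing.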

However, this is not the most critical issue.

The true challenge lies in daily peak usage (white areas). During these periods, projects may face delays because insufficient on-premises compute capacity leaves engineers and licenses waiting for jobs to run. This forces companies to make difficult choices, potentially compromising on crucial aspects like:

- Test coverage: Running fewer tests can lead to undetected issues, impacting product quality and potentially resulting in costly redesigns, backend reruns, or ECOs after tapeout.

- Number of parallel backend trials: Limiting parallel trials slows down the development process, hindering overall project progress. In the new era of AI-driven runs, where EDA tools such as Cerebrus (Cadence) or ai (Synopsys) explore many trials in parallel, available compute can directly affect schedule and PPA (Power Performance Area) results.

- Simulations: Reduced simulations or regression testing may lead to problems later in the development cycle. As we all know, bugs found early in the product cycle have minimal impact on schedule. Bugs found late in the process often cause redesign, new place & route, timing, … and add extra cycles to the critical path to tapeout.

- Formal methodologies: Similar to reduced simulations, cutting back on formal methodologies increases the risk of design flaws and errors. The same problems discussed above for simulations apply here as well.

These compromises can add risk to both tapeout quality and time to market (posing a potential tapeout delay). Both factors are critical in today’s fiercely competitive landscape.

Some companies attempt to address peak usage issues by shifting capacity from other projects. While this might seem like a quick fix, it has cascading effects, impacting the schedule and quality of the other projects as well. Ultimately, if resources are limited, shifting them around is not a sustainable solution. If you find yourself without the compute resources your project needs, your schedule will probably slip, or you may have to compromise on specifications, quality, and PPA.

Embracing the AWS Cloud

Fortunately, there is a simple yet powerful solution: AWS Cloud computing eliminates both the guesswork and the waiting for jobs. You pay only for the resources you use, which ensures efficient utilization and eliminates the “orange cost” of unused resources. The AWS Cloud helps you overcome capacity limitations, so no license on your engineering team should sit waiting for a compute node. This increases both EDA license utilization and engineering productivity.
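A minimal sketch of this hybrid model, assuming the on-premises cluster keeps a fixed size and any hourly demand above it is sent to cloud instances that are billed only for the hours they run. `burst_plan` is a hypothetical helper, the demand values are made up, and real-world details (instance start-up time, billing granularity, license checkout) are ignored.

```python
from typing import List, Sequence


def burst_plan(demand: Sequence[int], onprem_capacity: int) -> List[int]:
    """Cloud servers needed each hour so no job waits for on-prem capacity."""
    return [max(d - onprem_capacity, 0) for d in demand]


# Hypothetical hourly demand for variable servers (illustrative only).
demand = [20, 35, 80, 120, 60, 30, 25, 90, 40, 20]
cloud = burst_plan(demand, onprem_capacity=60)

print("cloud servers per hour:", cloud)
print("cloud server-hours billed:", sum(cloud))  # pay only for burst hours
print("peak cloud servers:", max(cloud))         # capacity never purchased on-prem
```

In this made-up profile, the 110 server-hours of peak work run immediately instead of queuing, and the 60 extra servers needed at the top of the peak never have to be bought and left idle for the rest of the week.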


Addressing the License Challenge

One valid concern regarding cloud adoption is the question of licenses: “While the cloud solves the compute issue, what about EDA licenses?”

Your company might not have enough licenses, in which case you’ll be constrained by them anyway.

This is a legitimate point. Addressing run issues requires a comprehensive approach that considers both compute and licenses. Fortunately, all key EDA vendors now offer innovative licensing models as well as technical solutions specifically designed for cloud usage. By collaborating with your EDA vendors (Cadence, Synopsys, Mentor–Siemens), you can explore these options and find the solution that best suits your needs.

However, it’s crucial to remember that you may also have EDA licenses sitting idle while their jobs wait for compute. Those also represent a potentially large hidden cost. Idle engineers waiting for runs translate to money wasted on licenses (…and engineering) as well as lost time to market. Just as unused compute is waste in Figure 2, the white area also carries a licensing cost, which can significantly impact your bottom line and time to market. We will explore this topic further in future articles.

Unlocking the Potential of the Cloud for Faster Time to Market

By embracing the cloud, companies can secure several key benefits:

- Avoid delays: Cloud computing ensures access to the necessary compute power during peak usage periods. This prevents project delays and ensures smooth development.

- Optimize resource allocation: With the cloud’s pay-as-you-go model, companies can eliminate the waste associated with unused on-premises resources.

- Variety of compute types: Beyond access to sufficient capacity without waiting, there is also the machine type itself: memory size, CPU type, etc. These parameters affect performance as well, and we’ll touch on this topic in a future article.

- Faster time to market: By eliminating delays and optimizing resources, cloud computing helps companies bring their products to market faster, giving them a competitive edge. ARM reported doing 66% more projects with the same engineering team.

The Cloud: Beyond Technology, a Mindset Shift

To sum up, the cloud is about enabling your team to achieve its full potential and optimize its workflow without having to guess capacity. The ability to handle peak usage has a direct effect on quality, schedule, and engineering/license utilization. In my next article, I’ll talk about more cloud aspects that result in faster time to market and better utilization of your engineering team and licenses.

Disclaimer: My name is Ronen, and I’ve been working in the semiconductor industry for the past ~25 years. Early in my career, I was a chip designer and manager, then moved to the vendor side (Cadence), and I now spend my days helping companies on their journey to the cloud with AWS. Having examined our industry’s pain points over the years, as both a customer and a service provider, I plan to write a few articles to shed some light on how chip design can benefit from the cloud revolution taking place these days.

C U in the next article.
