So… we’re 4 months before tapeout. You’ve been assigned to close place & route on three complex key blocks. You have 15 machines for the job, 5 per block.

You send your first batch, 5 runs per block. You’re not very surprised when it fails. You modify the scripts and run another batch. And… surprise 🙂 the runs do not converge. You have lots of ideas to improve the scripts, so you make some changes and… another run… and another… and another… and here we are, two months before tapeout. Still negative slack.

What do you do?

Compromise on PPA (Power, Performance, Area)?

Delay the tapeout?

Similar questions are also relevant for verification. Think about the RTL freeze and your coverage. Think about formal verification and the properties you have proved so far.

The same goes for analog, characterization, …

At one EDA vendor’s event, there was a presentation about AI for backend design: how it saved a few weeks of work and resulted in better PPA for the tested blocks. It required 900+ CPUs. One of the engineers in the audience said… “Hmm… 900 CPUs!? I don’t have that compute capacity. All I have is 32 CPUs, or 96 CPUs if I’m lucky.”

Well…

Nowadays it has become easy to get as many CPUs as you need for a specific run by using the AWS cloud.

You may rightfully say… “but I don’t have 150 (or 900) licenses just for my job.”

There are two things you could do about your licenses:

- Run faster and make sure you never wait in a queue for machines (I’ll talk about this in one of the next articles). This lets you make better use of the licenses you already have.

- Talk to your EDA vendors. Each of them has ways to offer flexible licensing for cloud use.

If verification, place & route, or any other run happens to be on your project’s critical path to tapeout, wouldn’t it make sense to pull in the schedule by using extra resources exactly when you need them most?

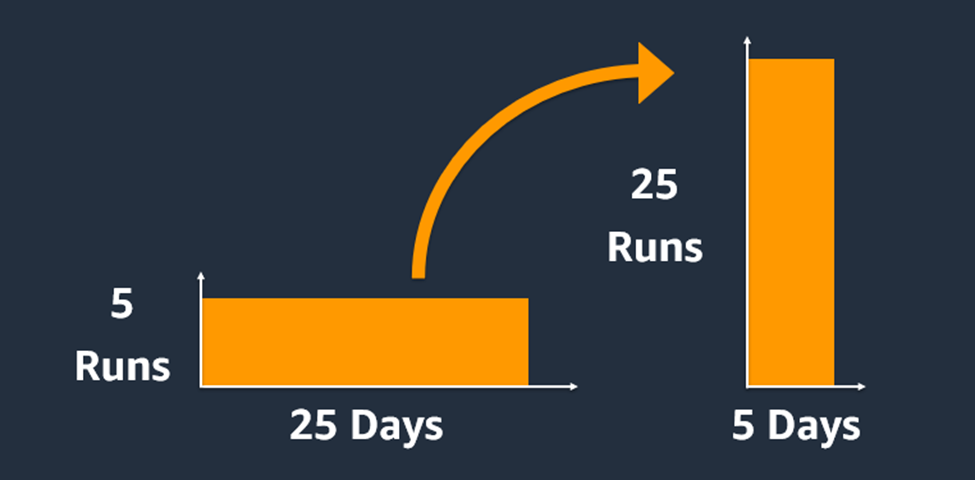

Back to the backend example (or a verification regression): assume each run/regression takes ~5 days, you need 25 runs in total, and you happen to have only 5 machines at your disposal on premises. That is 5 batches back to back, or 25 days. With 25 machines or more, all the runs go in parallel and you finish in 5 days instead of 25.
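The arithmetic behind that comparison can be sketched in a few lines of Python. This is only a back-of-the-envelope model: it assumes 25 runs in total (the number implied by 5 machines taking 25 days), one run per machine at a time, and enough licenses for every machine. The function name `wall_clock_days` and the constants are made up for this illustration.

```python
import math


def wall_clock_days(total_runs: int, machines: int, days_per_run: float) -> float:
    """Estimate calendar days to finish all runs, one run per machine at a time.

    Simplified model (an assumption, not a scheduler): runs go out in
    batches of `machines`, and every run takes the same `days_per_run`.
    """
    if total_runs < 0 or machines <= 0 or days_per_run < 0:
        raise ValueError("need total_runs >= 0, machines > 0, days_per_run >= 0")
    batches = math.ceil(total_runs / machines)  # a partial batch still costs a full run
    return batches * days_per_run


# Hypothetical numbers matching the example in the text.
TOTAL_RUNS = 25
DAYS_PER_RUN = 5

for machines in (5, 25, 50):
    days = wall_clock_days(TOTAL_RUNS, machines, DAYS_PER_RUN)
    print(f"{machines:>3} machines -> {days} days")
```

Note that going beyond 25 machines does not help in this model: once every run has its own machine, the schedule is bounded by the length of a single run.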

We should be seeing more and more usage of AI tools such as Cerebrus and DSO.ai. Those tools usually require more compute than you might have available on premises. Teams that use them may cut their time to tapeout / time to market as well as improve PPA compared to manual runs.

The more widely AI tools are adopted, the more competitive the market will become.

With compute and AI, the same engineering teams could do more projects, faster, with better PPA.

Here are some public references.

Disclaimer: My name is Ronen, and I’ve been working in the semiconductor industry for the past ~25 years. In the early days of my career I was a chip designer, then I moved to the vendor side (Cadence), and now I spend my days in the cloud with AWS. Having watched our industry over the years and examined its pain points as both a customer and a service provider, I am planning to write a few articles to shed some light on how chip design could benefit from the cloud revolution that is taking place these days.

In the coming articles I’ll cover more aspects of performance & cost for chip design in the cloud.

C U soon.
