A paper was published last month on the Acuerdo Consultancy Services website, authored by Joe Convey of Acuerdo and Bryan Dickman of Valytic Consulting. Joe and Bryan have spent decades between them in the semiconductor and EDA world, which gives them a first-hand understanding of hardware bugs.

Here is a quick summary with a link to get the full paper at the end:

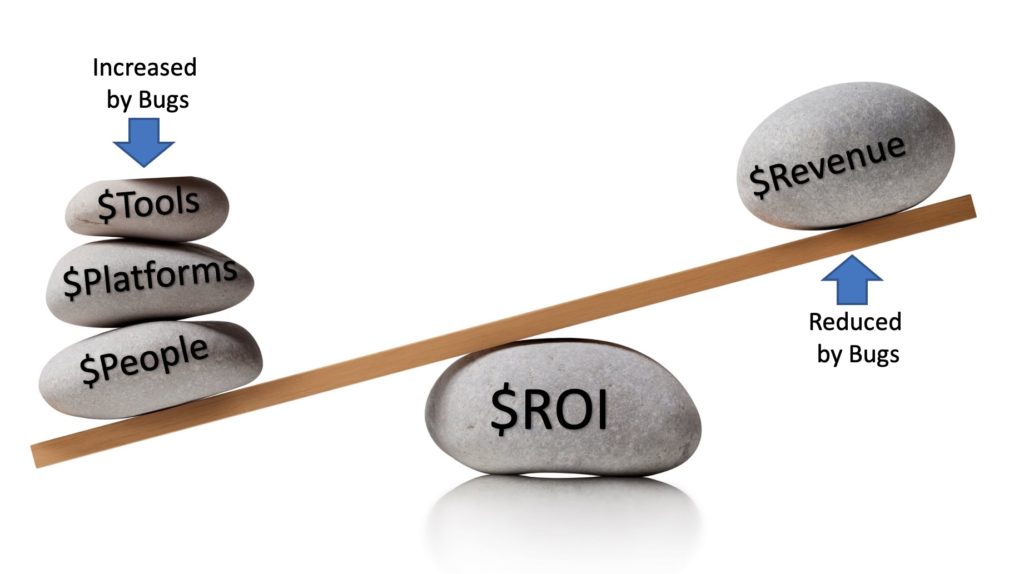

Whether you are developing a hardware product, a software product or both, understanding bugs (what causes them, how to avoid them, the cost of finding them and the cost of not finding them) is one of the biggest drivers shaping how teams develop products. Understanding all of these aspects will help you reason about the balance between delivered product quality and ROI: are you investing too much or too little?

Economics of Bugs

In IP hardware product development, bugs drive a large part of the development cost and getting the investment balance wrong will have a big impact. How do teams deliver on time, with functionality, performance and integrity and what are the costs involved in ensuring an absence of bugs and the delivery of high-quality products?

Getting the investment vs risk balance right is hard…

Nobody wins if a design team gets it wrong. Market reality means that costs will often be passed down the customer/consumer food chain and eventually impact end-users. Bug escapes can drive up mitigation and development costs and subsequently drive down ROI and customer confidence.

Striking the balance between investing too little (with unacceptably high risk) and “too much” (low risk, but lousy ROI) is a long-standing challenge that design teams and the CFO have to grapple with. Engineering usually wins the investment battle with the CFO, because few in senior management challenge the assertion that “you can never spend too much on finding bugs”. This results in a spiraling investment in compute and EDA tools as designs get more complex. Sound familiar?

People are your most valuable asset

The success of the products comes down to the quality of the staff: how well trained, how experienced and how innovative they are, and how well equipped they are with the ‘best-in-class’ tools and resources to do their jobs. Engineers love to solve challenging problems, innovate, build ‘cool’ things, be experts and craftsmen, have access to the latest and best-in-class platforms and tools and work with teams of talented colleagues. Do they really understand how much this costs?

Get engineering teams on-side by growing a shared understanding of engineering platform costs

An interesting challenge for any business developing complex products that consume costly tools and resources is how to educate engineering teams to be cost-conscious and use the available resources sparingly and effectively. Of course, engineers understand cost, but providing the teams with clear data showing the relationship between the cost of providing the engineering platform and the process of designing IP can create positive perspectives on how to create cost efficiencies:

Wow, did we really spend that much? Surely, we can do something about that by improving xyz?

A huge part of improving product ROI is the drive for design efficiencies. Sharing the business challenge can provide a very positive environment for encouraging innovations in methodologies and deployment of tools, at a cost profile that fits the company’s business plans and provides engineering with best-in-class facilities.

Work with engineering to drive great partnerships with EDA and other platform vendors

Having engineering as a willing partner makes a difference to the success of tool evaluations and subsequent negotiations with vendors. Creating a culture of partnership that transcends internal barriers but also extends to the company’s EDA and IT suppliers is really valuable, as it encourages vendors to become “partners” working with engineering in a joint effort to confront the technical challenges involved in a process like IP verification and debug.

Compute, compute and even more compute…

We need more compute, otherwise there will be product delays and missed revenue targets… Over the last two decades the industry has seen the compute requirement for Verilog simulation grow by several orders of magnitude and the compute infrastructures have scaled up accordingly. Businesses are migrating compute capacities from on-prem to cloud, which offers much greater capacity agility and enables teams to reason better about cost versus time to delivery.

We’re going to spend more money over a shorter time and deliver earlier.

For many, this means operating a hybrid on-prem/cloud environment. EDA vendors are adapting their tools and their business models to cope with this new hybrid-cloud world.

For complex IP products such as processors, most of this simulation consumption is taken up by constrained-random verification strategies where the ability to consume cycles is open-ended.

But I ONLY want to run GOOD verification cycles!

So, when is a verification cycle a “good cycle”? Only if “Verification Progress” has been made. By that we mean that it has either found a new bug, or it has measurably increased the testing space i.e. demonstrated correctness. The former is easy to track (we can count bugs), but the latter is harder because we don’t know precisely how many bugs are present. Instead, we track endless metrics such as coverage and cycles of testing.
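As a rough illustration of the "good cycle" idea, the sketch below counts a run's cycles as good only if the run found a bug not seen before or hit a coverage point not hit before. The `RunResult` record, its field names and the use of new coverage points as a stand-in for "measurably increased the testing space" are all assumptions made for this example, not something taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class RunResult:
    """Outcome of one simulation run (hypothetical record format)."""
    cycles: int
    bug_ids: set = field(default_factory=set)
    coverage_points: set = field(default_factory=set)


def good_cycle_fraction(runs):
    """Return the fraction of simulated cycles that made verification progress.

    Assumption: a run makes progress if it reports a bug or a coverage
    point that no earlier run in the sequence has already reported.
    """
    seen_bugs, seen_cov = set(), set()
    good = total = 0
    for run in runs:
        new_bugs = run.bug_ids - seen_bugs
        new_cov = run.coverage_points - seen_cov
        if new_bugs or new_cov:
            good += run.cycles
        total += run.cycles
        seen_bugs |= run.bug_ids
        seen_cov |= run.coverage_points
    return good / total if total else 0.0


# Illustrative data only: the second run repeats what the first already showed.
runs = [
    RunResult(1000, {"BUG-1"}, {"a", "b"}),
    RunResult(1000, set(), {"a", "b"}),
    RunResult(2000, set(), {"b", "c"}),
]
print(good_cycle_fraction(runs))
```

In practice coverage is only a proxy for correctness, so a figure like this is best read as a trend indicator rather than a precise measure of progress.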

Extensive operational analytics are needed in order to operate a resilient and performant platform service delivering the appropriate QoS. These analytics will monitor system performance and capacities, track operational metrics over time and may exploit machine learning prediction algorithms to alert to pending failures so that mitigations can be deployed ahead of a critical failure point.

Conclusion

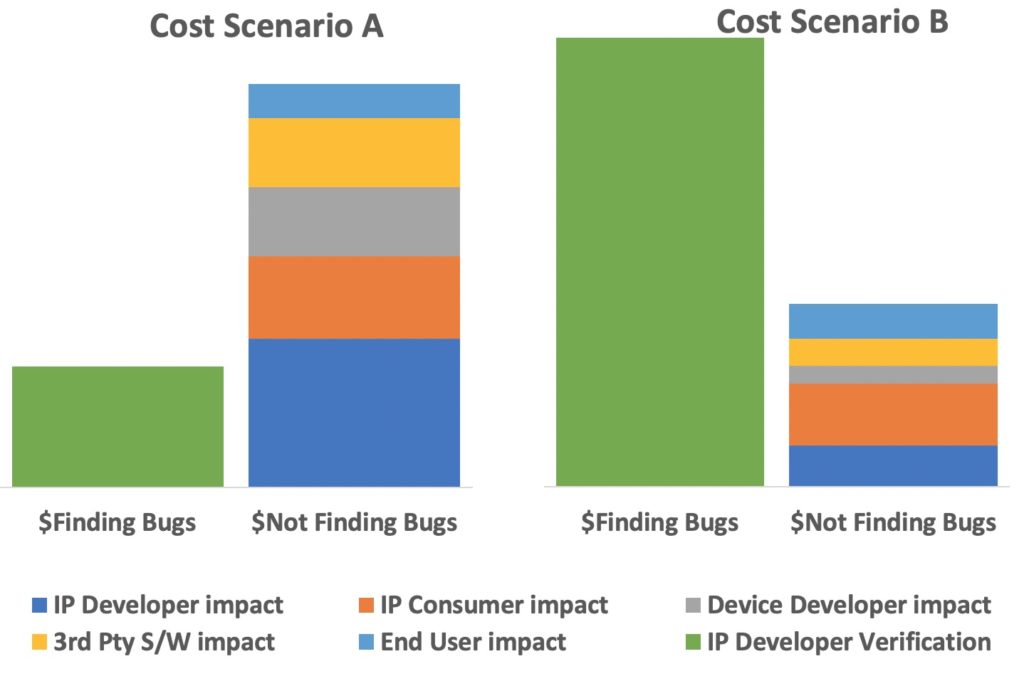

It boils down to understanding the total cost of bugs expressed as the costs expended in finding bugs, versus the cost of not finding bugs, i.e. what is the total impact cost when bugs are missed?

Which of the above 2 scenarios are you in? Do you have enough data to be able to reason about this and decide which scenario applies to your operations? If not, then what actions are you going to take to find out where you are and what action to take in each situation?

If your analysis shows that you are in scenario A, it looks like you need to address product quality urgently. You’re not really investing sufficiently in IP Product Verification and the impact costs are significantly reducing your products’ ROI. Scenario B certainly feels more comfortable, but you might have nagging doubts about the efficiency of your operations…
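The arithmetic behind this comparison can be sketched in a few lines. The function names and the simple "which cost is larger" rule are assumptions for illustration; the paper's own scenario definitions may be more nuanced, and the figures are placeholders rather than real data.

```python
def total_cost_of_bugs(finding_cost, impact_cost):
    """Total cost = cost spent finding bugs + impact cost of bugs that were missed."""
    return finding_cost + impact_cost


def dominant_cost(finding_cost, impact_cost):
    """Report which side of the balance dominates (assumed, simplified rule)."""
    if impact_cost > finding_cost:
        return "impact"   # resembles scenario A: missed bugs cost more than verification
    return "finding"      # resembles scenario B: verification spend dominates


# Placeholder figures in arbitrary currency units, not real data.
print(total_cost_of_bugs(3.0, 5.0))
print(dominant_cost(3.0, 5.0))
```

Even a crude split like this requires tracking both costs separately, which is the data the questions above ask you to gather.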

Now you have the right information to make the appropriate investments and get the balance right – and by the way, don’t lose your job!

Get the full paper “On the Cost of Bugs”.
