Last week Mentor hosted a virtual event on designing an AI accelerator with HLS, integrating it with an Arm Corstone SSE-200 platform, and characterizing and optimizing it for performance and power. Though in some ways a recap of earlier presentations, this session added some insights, particularly in characterizing various architecture options.

Accelerator Design

Mike Fingeroff kicked off with high-level design for the accelerator, showing a progression from a naïve software implementation of a 2D image convolution with supporting functions (e.g. pooling, ReLU). This delivered 14 seconds per inference, where the final goal was 1 second. His first step was to unroll loops and pipeline. New here (to me at least) is that Catapult generates a Gantt chart, giving a nice schedule view to guide optimization. So Mike unrolls and finds he has memory bottlenecks, also highlighted by a Silexica analysis. Not surprising, since he’s using a 1-port memory, again with naïve reads and writes. He switches to a shift-register and line-buffer architecture supporting a 3×3 sliding window in convolution, and the bottleneck problem is solved. He also looks at Silexica analyses to decide how/if to buffer weights. Now he’s down to just over a second per inference, with bias, ReLU and pooling still in software (running on the embedded CPU).
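
To make the windowing idea concrete, here is a minimal behavioral sketch in Python (not the HLS C++ from the talk, and not Mike's actual design). It assumes a single-channel image streamed in raster order, a 3×3 kernel, and no padding; the function names and the read counter are purely illustrative. The point is that the line-buffer version touches the image memory once per pixel, while the naïve version reads nine pixels for every output.

```python
def conv3x3_naive(image, kernel):
    """Naive 3x3 convolution: nine image-memory reads per output pixel."""
    height, width = len(image), len(image[0])
    out = [[0] * (width - 2) for _ in range(height - 2)]
    reads = 0
    for y in range(height - 2):
        for x in range(width - 2):
            acc = 0
            for r in range(3):
                for c in range(3):
                    acc += image[y + r][x + c] * kernel[r][c]
                    reads += 1
            out[y][x] = acc
    return out, reads


def conv3x3_line_buffer(image, kernel):
    """Same result, but each pixel is read from image memory exactly once."""
    height, width = len(image), len(image[0])
    row_above2 = [0] * width  # line buffer holding row y-2
    row_above1 = [0] * width  # line buffer holding row y-1
    window = [[0] * 3 for _ in range(3)]  # 3x3 shift-register window
    out = [[0] * (width - 2) for _ in range(height - 2)]
    reads = 0
    for y in range(height):
        for x in range(width):
            pixel = image[y][x]  # the only image-memory read this cycle
            reads += 1
            # Shift the window one column to the left.
            for r in range(3):
                window[r][0] = window[r][1]
                window[r][1] = window[r][2]
            # Fill the new right-hand column from the line buffers and the new pixel.
            window[0][2] = row_above2[x]
            window[1][2] = row_above1[x]
            window[2][2] = pixel
            # Age the line buffers at this column.
            row_above2[x] = row_above1[x]
            row_above1[x] = pixel
            # The window is fully valid once two rows and two columns have streamed in.
            if y >= 2 and x >= 2:
                out[y - 2][x - 2] = sum(
                    window[r][c] * kernel[r][c] for r in range(3) for c in range(3)
                )
    return out, reads
```

On a 5×6 test image the naïve version performs 108 reads against 30 for the line-buffer version, with identical outputs. In hardware the line buffers and window would be small local memories and registers, which is what relieves the pressure on a single-port memory.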

Then he runs MatchLib simulations for a more comprehensive analysis (a couple of hours) and finds some outliers, such as one inference taking 4 minutes, principally caused by delays in CPU computations. He pushes these software functions into the hardware (which adds little overhead) and that problem goes away. While he’s met the performance goal, Mike also talks briefly about ways to further increase performance, through added output parallelism (compute 2 outputs per cycle) and input parallelism (fetch and compute on 2 inputs per cycle, since the input bus is 64-bit and he only wants 32-bit accuracy in the inference).
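
The input-parallelism idea can be sketched the same way. The following is a rough Python model, assuming unsigned 32-bit values and a low-word-first lane order on the 64-bit bus; both are my assumptions for illustration, and the helper names are hypothetical rather than anything from the presentation.

```python
MASK32 = 0xFFFFFFFF


def pack_pair(lo, hi):
    """Pack two 32-bit values into one 64-bit bus word (low lane first, an assumption)."""
    return ((hi & MASK32) << 32) | (lo & MASK32)


def unpack_pair(word):
    """Split a 64-bit bus word back into its two 32-bit lanes."""
    return word & MASK32, (word >> 32) & MASK32


def to_bus_words(values):
    """Group a stream of 32-bit values into 64-bit bus words, two per word."""
    if len(values) % 2:
        raise ValueError("this sketch expects an even number of values")
    return [pack_pair(values[i], values[i + 1]) for i in range(0, len(values), 2)]


def dot_two_per_beat(bus_words, weights):
    """Multiply-accumulate consuming two inputs per bus beat."""
    acc = 0
    for beat, word in enumerate(bus_words):
        lo, hi = unpack_pair(word)
        acc += lo * weights[2 * beat] + hi * weights[2 * beat + 1]
    return acc
```

Four inputs arrive in two bus beats instead of four, so the fetch side no longer wastes half of each 64-bit transfer. Whether that translates into a full 2× gain depends on the datapath also being widened to process both lanes per cycle.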

Arm subsystem integration

Korbus Marneweck from Arm followed, introducing Arm IoT solutions with Corstone (the Mentor demo is integrated into this platform). Corstone provides reference designs for secure IoT implementation, with TrustZone, security IP and lots of other goodies, set up for an easy path to PSA certification. There’s quite a lot more detail on Corstone which I’ll skip in the interest of a quick read. Korbus did talk about methods to connect an accelerator: through a memory-mapped path, as a co-processor, or through custom instructions. That raised some Q&A on working with custom instructions, which may be interesting if you want to dig deeper into the video.

Characterization

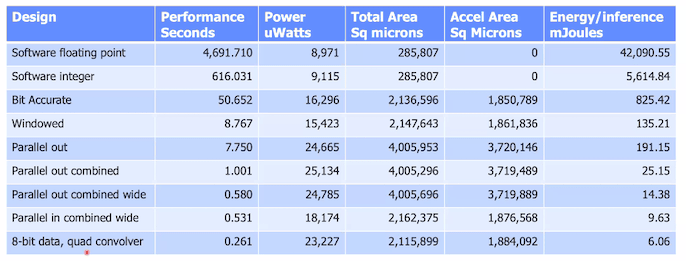

Russ Klein took the last part of the presentation, talking about integrating this all together and especially characterizing for performance, area, and power/energy per inference. This for me was the most interesting part of the talk because it puts hard numbers behind the benefits of an HLS-based approach to designing these AI accelerators. Quick clarification here: they measured characteristics just for the implemented accelerator, not the Corstone subsystem. However, within the accelerator they are running a full implementation (based on Mentor tools) and using parasitics from that implementation. The table opening this blog shows the results.

The first row is for a very naïve software-only implementation using floating point. That’s just a reference for grins. The second uses integers rather than floating point, delivering ~10 minutes/inference at ~5 joules/inference. The first-pass unoptimized CNN accelerator plummets to ~50 seconds and 800 mJ/inference. Windowing (shift registers and line buffers) drops that to 9 seconds and 135 mJ/inference. Analysis continues through various combinations (parallel out, moving the ReLU etc. functions into the kernel, and parallel in) until they get down to 8-bit data running through a quad convolver, delivering a quarter second and 6 mJ per inference. That’s a lot of architecture options they explored, all enabled by starting with an HLS model and looking at tradeoffs in pipelining, windowing, memory architectures, and input and output parallelism. None of that is feasible on the network model side (which doesn’t understand hardware constraints and options) or on the RTL side (which would be impossibly painful to keep rearchitecting).
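
To put those figures side by side, here is a small back-of-envelope calculation in Python. It uses only the approximate numbers quoted above, takes the integer software row as the baseline, and reads the first-pass energy figure as 800 millijoules; that reading and the row labels are my assumptions.

```python
# (label, seconds per inference, joules per inference), approximate values from the text
results = [
    ("integer software", 600.0, 5.0),
    ("first-pass accelerator", 50.0, 0.8),
    ("windowed", 9.0, 0.135),
    ("8-bit quad convolver", 0.25, 0.006),
]


def relative_gains(rows):
    """Return {label: (speedup, energy reduction)} relative to the first row."""
    _, base_time, base_energy = rows[0]
    return {
        label: (base_time / seconds, base_energy / joules)
        for label, seconds, joules in rows
    }


if __name__ == "__main__":
    for label, (speedup, energy_gain) in relative_gains(results).items():
        print(f"{label}: {speedup:.1f}x faster, {energy_gain:.1f}x less energy")
```

By this rough reckoning the final design is about 2400× faster than the integer software baseline and uses around 830× less energy per inference. The inputs are all rounded, so treat the ratios as order-of-magnitude indicators rather than measured results.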

Good stuff.

You can check out more on this topic through Mentor’s on-demand webinars. See for example their webinar on sliding window memory architecture for performance.
