Most embedded programming strategies involve decomposing the embedded application into chunks, which can then be executed as independent tasks. More advanced applications involve some type of data flow, and may attempt to execute operations in parallel where possible.

Both of these strategies view real-time applications as event-driven, where each event must be responded to within a given time window. For the application to remain in control, the response has to be deterministic, meaning it starts promptly and finishes as predicted. Requirements for these event-driven applications are often stated in a simple state diagram or data flow diagram, and coding the tasks from them is straightforward.
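
To make "start promptly and finish as predicted" concrete, here is a minimal sketch, assuming a simple state diagram has been turned into a transition table annotated with a predicted response time per transition. The states, events, and microsecond costs are hypothetical, and the clock is simulated rather than read from hardware.

```python
from dataclasses import dataclass

# Hypothetical transition table taken from a simple state diagram:
# (current_state, event) -> (next_state, predicted_response_time_us)
TRANSITIONS = {
    ("IDLE", "button_press"): ("ACTIVE", 50),
    ("ACTIVE", "timeout"): ("IDLE", 20),
    ("ACTIVE", "button_press"): ("ACTIVE", 10),
}


@dataclass
class Event:
    name: str
    arrival_us: int   # when the event arrives
    deadline_us: int  # the response must finish this long after arrival


def run(events, state="IDLE"):
    """Handle events in arrival order on a simulated microsecond clock.

    Returns the final state and a list of (event name, deadline met) pairs.
    """
    clock = 0
    results = []
    for ev in events:
        # A response starts when the event arrives or when the previous
        # response finishes, whichever is later.
        start = max(clock, ev.arrival_us)
        # Events with no transition are ignored at zero cost.
        next_state, cost = TRANSITIONS.get((state, ev.name), (state, 0))
        clock = start + cost  # the response finishes as predicted
        results.append((ev.name, clock - ev.arrival_us <= ev.deadline_us))
        state = next_state
    return state, results


print(run([Event("button_press", 0, 100), Event("timeout", 30, 30)]))
# ('IDLE', [('button_press', True), ('timeout', False)])
```

The timeout arrives at 30 us but cannot start until the first response finishes at 50 us, so it completes at 70 us and misses its 30 us window. With a single core and a known cost per transition, this kind of check is easy to reason about.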

In the modern multicore SoC, the reality is things are not quite so straightforward. Cores are asymmetric: processing cores, graphics cores, audio and video cores, networking cores, security cores, and more. More “moving parts” mean the simple problem of synchronization becomes more challenging, and slinging more code at the problem often just makes things worse. To prevent a scenario where fixing one problem results in creating several others, the act of synchronization must be reexamined.

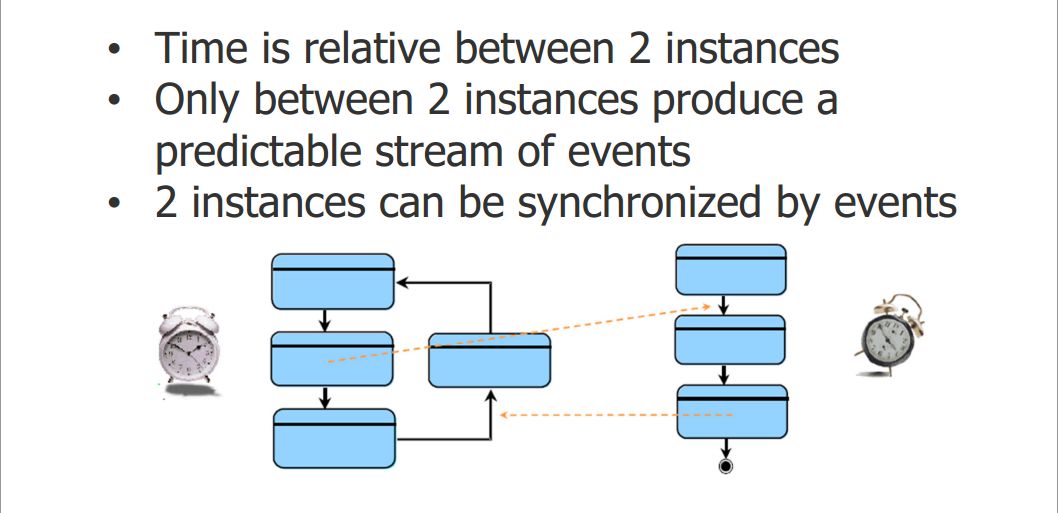

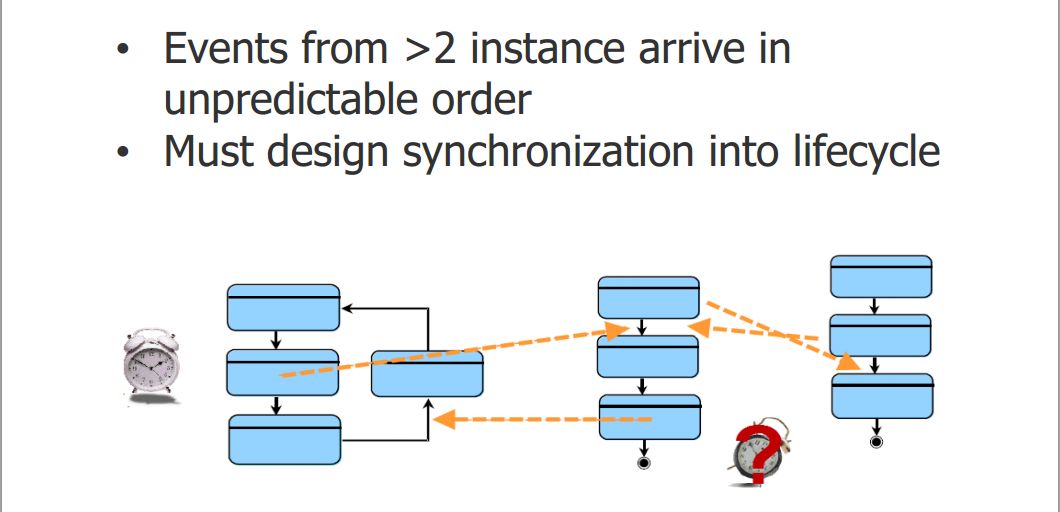

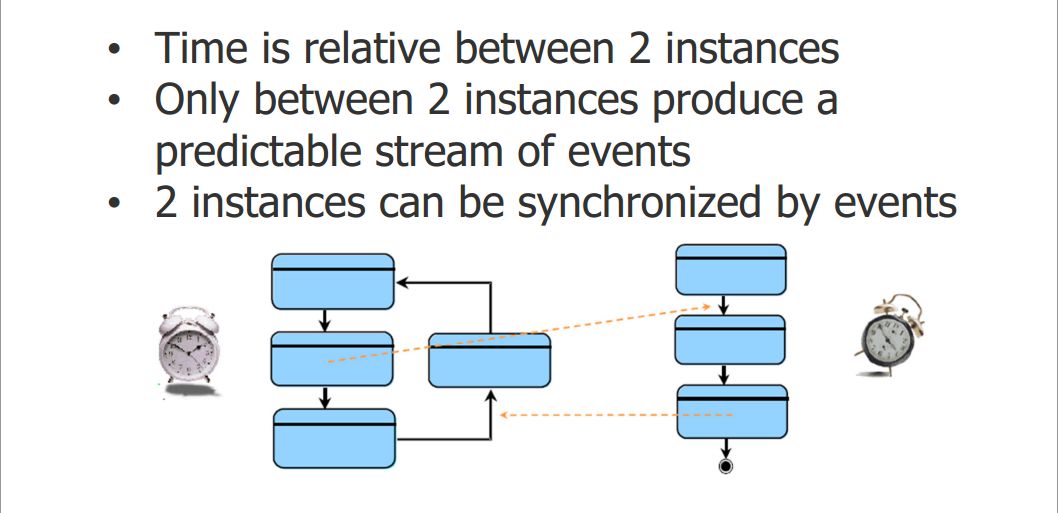

As more cores running different threads of operation get involved, and more than two instances come into play, predictability starts to break down because events don’t necessarily arrive in the order they were expected. This is in fact typical: as workloads and power-saving modes change, so does the time it takes to respond to an event. This variability means time must be accounted for, both in when events arrive relative to each other and in how much time a response consumes.
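
A small illustration of why arrival order matters: the sketch below enumerates every order in which three events could reach a consumer and collects the distinct results. The event kinds and values are hypothetical. Reordering by production timestamp restores a single answer, but only under the assumption that all cores share a common timebase and the whole batch is available before processing, which real systems cannot always guarantee.

```python
from itertools import permutations

# Hypothetical events: (timestamp_us, kind, value). Timestamps record when each
# event was produced; arrival order at the consumer may differ by platform.
EVENTS = [(10, "set_gain", 2), (20, "sample", 5), (30, "set_gain", 3)]


def process(events):
    """Apply events in the given order and return the scaled samples."""
    gain = 1
    out = []
    for _, kind, value in events:
        if kind == "set_gain":
            gain = value
        else:  # "sample"
            out.append(gain * value)
    return out


def outcomes(order_by_timestamp):
    """Collect the distinct outputs over every possible arrival order."""
    seen = set()
    for arrival in permutations(EVENTS):
        batch = sorted(arrival) if order_by_timestamp else list(arrival)
        seen.add(tuple(process(batch)))
    return seen


print(sorted(outcomes(False)))  # [(5,), (10,), (15,)]
print(outcomes(True))           # {(10,)}
```

Handling events strictly in arrival order yields three different answers for the same inputs; that spread is exactly the unpredictability that appears once timing varies between cores.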

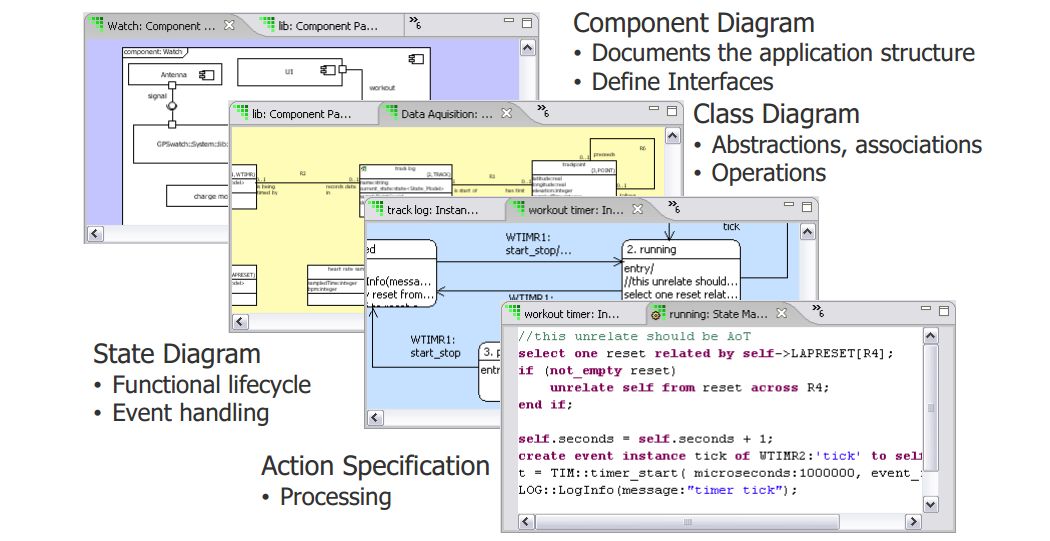

This is the thinking behind a recent Mentor Graphics webinar, “xtUML and the Notion of Time”. We’re already familiar with UML as a model-driven design language; xtUML adds structures that allow time to be treated as part of the model, and recasts the concepts of order and pair-wise synchronization. Mentor’s Dean McArthur describes how BridgePoint xtUML can be used to create more platform-independent models and avoid timing issues such as the dreaded race condition.

In an xtUML executable model, the behavior of components and classes is built from actions, the fundamental increment of processing: an action provides the response to an event and cannot be interrupted. Actions are collected into activities, each of which encapsulates a state in the state machine. Activities execute concurrently, but the webinar makes an interesting point: because concurrency can vary between platforms, model validation must itself be non-deterministic, exercising different execution orders rather than assuming one.
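
The "cannot be interrupted" property is commonly called run-to-completion. The sketch below shows the idea in plain Python; it is not BridgePoint's generated code, and the class, states, and events are hypothetical. Each state's action finishes before the next event is taken from the queue, and an event generated inside an action is queued rather than dispatched recursively. It uses a single FIFO queue as a simplification; xtUML runtimes may define additional ordering rules.

```python
from collections import deque


class StateMachine:
    """Minimal run-to-completion state machine (illustrative sketch)."""

    # Hypothetical transition table: (state, event) -> next state
    TRANSITIONS = {
        ("Idle", "go"): "Working",
        ("Working", "done"): "Idle",
    }

    def __init__(self):
        self.state = "Idle"
        self.queue = deque()
        self.trace = []  # human-readable log of what happened, in order

    def send(self, event):
        self.queue.append(event)

    def action(self, state):
        # Entry action for a state; it may generate further events.
        if state == "Working":
            self.send("done")  # queued, not handled immediately
            self.trace.append("Working action finished")

    def dispatch_all(self):
        while self.queue:
            event = self.queue.popleft()
            nxt = self.TRANSITIONS.get((self.state, event))
            if nxt is None:
                self.trace.append(f"ignored {event} in {self.state}")
                continue
            self.state = nxt
            self.trace.append(f"enter {nxt} on {event}")
            self.action(nxt)  # runs to completion before the next dequeue


sm = StateMachine()
sm.send("go")
sm.dispatch_all()
print(sm.trace)
# ['enter Working on go', 'Working action finished', 'enter Idle on done']
```

The trace shows the `Working` action completing before the `done` event it generated is processed, so no action ever observes another action half-finished.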

Incorporating a sense of time into a modeling approach allows an up-front model of the entire system to be established independent of the underlying hardware, with the main exceptions being the interrupt and timer resources most platforms provide. Actions and activities are abstracted to a level that allows code to be generated while maintaining synchronization between elements under varying system conditions. This cuts down on surprises later in the process, when subtle timing bugs emerge that can be very difficult to solve.
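
One way to picture "independent of the hardware, except for timers" is to let the model logic see time only through a narrow interface, with the platform supplying the implementation. The sketch below assumes a hypothetical `Timer` interface, a host-side `SimulatedTimer` for executing the model off-target, and a `Blinker` standing in for model logic; none of these names come from BridgePoint.

```python
import heapq
from typing import Callable, Protocol


class Timer(Protocol):
    """Hypothetical platform timer service: the only time source the model sees."""

    def start(self, delay_us: int, callback: Callable[[], None]) -> None: ...


class SimulatedTimer:
    """Host-side stand-in that advances a virtual clock on request."""

    def __init__(self):
        self.now_us = 0
        self._pending = []  # heap of (due_us, sequence, callback)
        self._seq = 0       # tie-breaker so callbacks are never compared

    def start(self, delay_us, callback):
        heapq.heappush(self._pending, (self.now_us + delay_us, self._seq, callback))
        self._seq += 1

    def advance(self, until_us):
        # Fire every timer due at or before until_us, in due-time order.
        while self._pending and self._pending[0][0] <= until_us:
            due, _, callback = heapq.heappop(self._pending)
            self.now_us = due
            callback()
        self.now_us = until_us


class Blinker:
    """Platform-independent model logic: toggles an output every period."""

    def __init__(self, timer: Timer, period_us: int):
        self.timer = timer
        self.period_us = period_us
        self.on = False
        self.toggles = 0
        timer.start(period_us, self._tick)

    def _tick(self):
        self.on = not self.on
        self.toggles += 1
        self.timer.start(self.period_us, self._tick)  # re-arm for the next period


t = SimulatedTimer()
b = Blinker(t, 100)
t.advance(350)
print(b.toggles, b.on)  # 3 True
```

Only the timer implementation changes when moving from host to target; the `Blinker` logic, and its timing behavior relative to the timer, stays the same.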

Have you tried xtUML for a project? What are your thoughts on the potential and how it handles concurrency? Are model-driven design and multicore programming a good match for your needs?
