Sequential approaches to power reduction work well on logic implemented using standard cells. But part of every SoC, sometimes a very large part, is taken up by embedded memories, for which alternative approaches are required. Not only do these memories occupy up to half of the chip area, they also account for as much as 75% of the power dissipation, a mixture of static (leakage) and dynamic power.

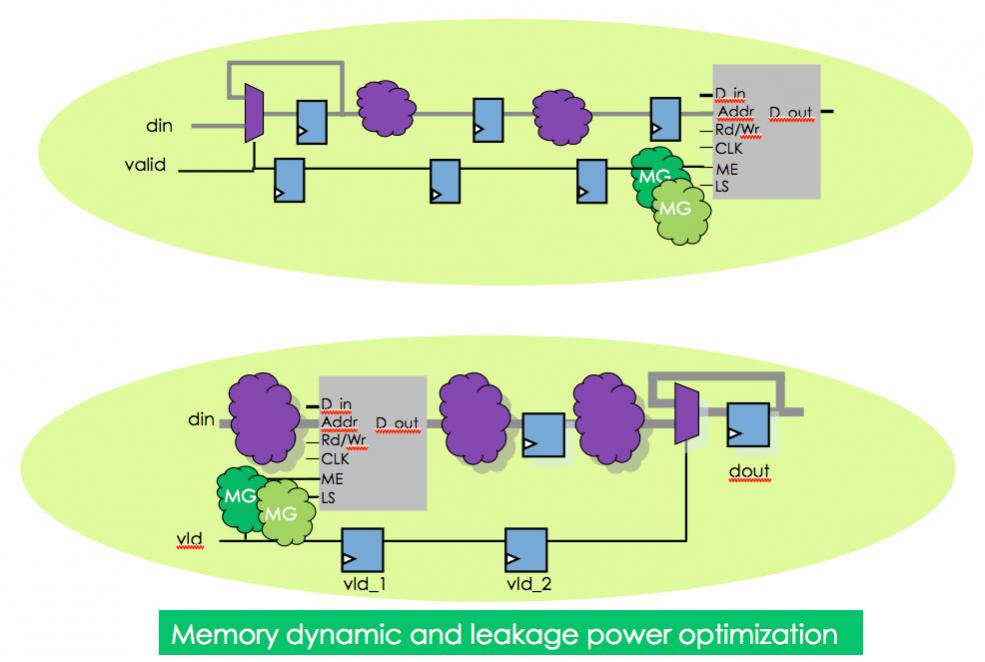

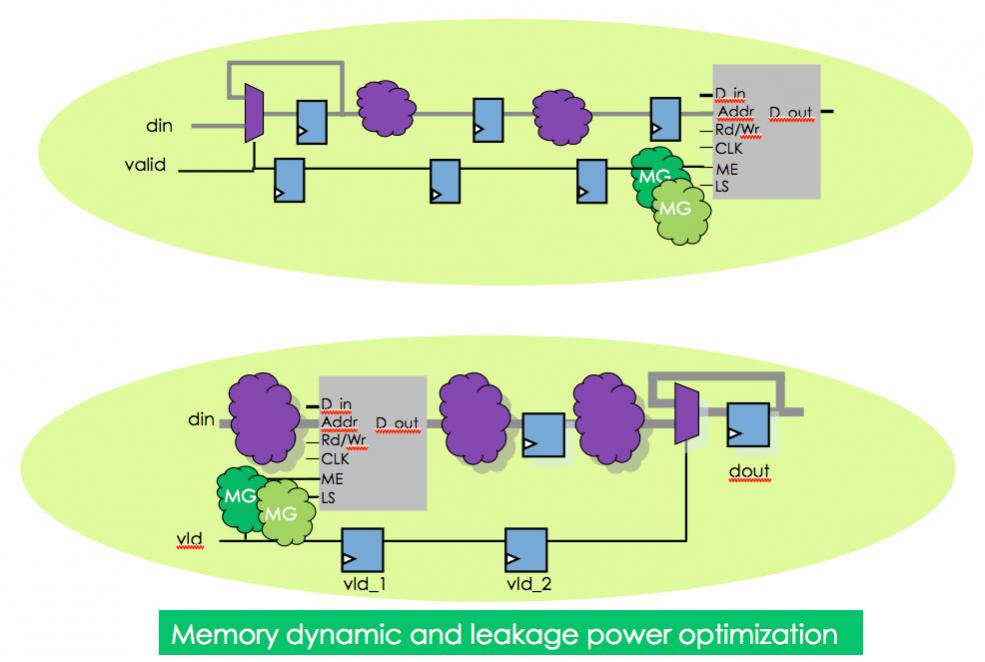

The basic idea of how to reduce dynamic power in memories is simple: if you are going to read the same address as last time, then don’t bother to do the read, just use the old latched value. Similarly, if you are going to write the same value as last time to the same location, then don’t bother with the write, since it has no effect. Of course, both of these save the most power when the address remains stable for large numbers of clock cycles.
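The two rules above can be illustrated with a small behavioral sketch. It is a toy software model, not how any tool or memory actually implements the check: the trace format, the function name `find_redundant_accesses`, and the assumption that a write which changes the latched location invalidates the latched read value are all hypothetical choices made for the example.

```python
from typing import Dict, List, Optional, Tuple

# One access is (op, address, data); data is None for reads.
Access = Tuple[str, int, Optional[int]]


def find_redundant_accesses(trace: List[Access]) -> List[bool]:
    """Return one flag per access: True if the access could be skipped.

    Assumed model (hypothetical): the memory has a single output latch that
    holds the data of the most recent read, and a write that changes the
    latched location makes that latched value stale.
    """
    last_written: Dict[int, int] = {}    # last value written to each address
    latched_addr: Optional[int] = None   # address whose data is in the latch
    flags: List[bool] = []

    for op, addr, data in trace:
        if op == "read":
            # Same address as the previous read and the latch is still valid.
            redundant = addr == latched_addr
            latched_addr = addr
        elif op == "write":
            # Same value as the last write to this location: no effect.
            redundant = addr in last_written and last_written[addr] == data
            last_written[addr] = data
            if addr == latched_addr and not redundant:
                latched_addr = None      # latched read data is now stale
        else:
            raise ValueError(f"unknown operation: {op!r}")
        flags.append(redundant)
    return flags


trace = [
    ("read", 4, None),
    ("read", 4, None),   # repeat read of address 4
    ("write", 4, 7),
    ("read", 4, None),   # must be performed: address 4 changed
    ("write", 4, 7),     # repeat write of the same value
    ("read", 4, None),   # repeat read, latch still valid
    ("read", 5, None),
]
print(find_redundant_accesses(trace))
```

In this trace three of the seven accesses are flagged as skippable. In real hardware the same decision has to be made from the control logic rather than from a recorded trace, which is where the analysis described in the rest of this post comes in.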

Designers know this, of course, and over time they try to analyze the registers for redundant accesses and look for opportunities to shut them off. Doing this automatically is beyond the scope of RTL synthesis tools, but doing it manually can be error-prone. Missed opportunities to shut off redundant accesses result in less than the maximum power saving. Worse, shutting off an access that turns out not to be redundant will result in an error (reading the wrong value, for example) and probably a very obscure system failure.

Sequential analysis involves analyzing the entire design, including memories. It looks over a window of several clock cycles to find which values are propagated, which changes are not observable, and which registers are known to remain unchanged. This is the basis for power optimization: shutting off unused or unobservable computations, preventing “new” values from propagating when they are known to be the same as the old value, and so forth.

The same approach can be used with embedded memories. At first glance every read and write to memory may seem essential, but depending on the control sequence of the design, some of them may not be required. Removing such redundant accesses typically results in a significant reduction in the dynamic power consumption of memories.

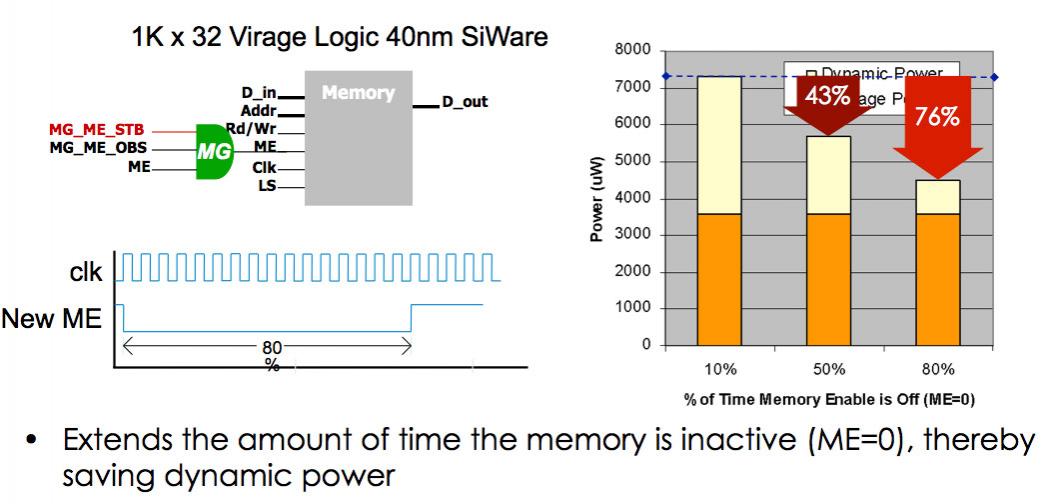

For a specific example, with the Synopsys/Virage 40nm memory above, the memory enable can potentially be held low for long periods, saving dynamic power.

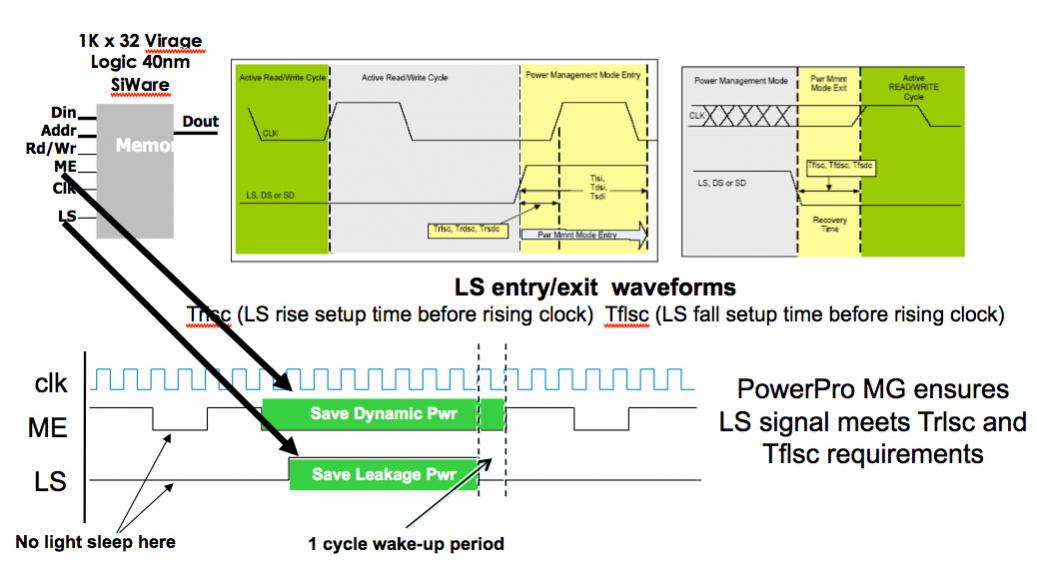

Embedded memory vendors provide capabilities to reduce not just dynamic power but also static leakage power in memories that are not in use. These involve sleep and wake signals but, in turn, that means logic to create those signals. Of course this is a tradeoff: the power saving from the sleep mode has to be greater than the power taken up generating those signals, but as long as memories are sometimes put to sleep for many clock cycles, this balance is likely to be positive.
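The tradeoff can be made concrete with a back-of-the-envelope calculation. The sketch below is only an illustration: the function names, the per-cycle leakage figures, and the single lumped `overhead` number (the energy attributed to generating the sleep and wake signals for one idle period) are hypothetical, and all quantities are in arbitrary integer energy units.

```python
def sleep_net_saving(idle_cycles: int, active_leak: int,
                     sleep_leak: int, overhead: int) -> int:
    """Net energy saved by sleeping through one idle period.

    active_leak / sleep_leak: leakage energy per cycle when awake / asleep.
    overhead: energy spent generating the sleep and wake signals (assumed
    to be a single lumped cost per idle period).
    """
    return idle_cycles * (active_leak - sleep_leak) - overhead


def break_even_cycles(active_leak: int, sleep_leak: int, overhead: int) -> int:
    """Smallest idle period (in cycles) for which sleeping is a net win."""
    delta = active_leak - sleep_leak
    if delta <= 0:
        raise ValueError("sleep mode must leak less than active mode")
    # Smallest n with n * delta > overhead (strictly positive saving).
    return overhead // delta + 1


# Hypothetical numbers: 10 units/cycle awake, 2 units/cycle asleep,
# 300 units of control overhead per idle period.
print(sleep_net_saving(100, 10, 2, 300))  # long idle period
print(sleep_net_saving(10, 10, 2, 300))   # short idle period
print(break_even_cycles(10, 2, 300))
```

With these made-up numbers, a 100-cycle idle period saves energy while a 10-cycle one costs more than it saves, which is the point of the paragraph above: the balance only turns positive once the memory sleeps for enough cycles.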

Again, using the same Synopsys/Virage memory, further savings are possible by generating appropriate sleep signals, taking into account the requirement that the memory takes an extra clock cycle to wake.
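As a rough illustration of the wake-up requirement, the sketch below derives a per-cycle sleep signal from a per-cycle list of required accesses. It is an abstract model, not the behavior or pin interface of the Synopsys/Virage memory: the function name `generate_sleep`, the boolean signal encoding, and the assumption that upcoming accesses are known in advance (in a real design this knowledge would have to come from the control logic) are all hypothetical.

```python
from typing import List


def generate_sleep(enable: List[bool], wake_cycles: int = 1) -> List[bool]:
    """Return a per-cycle sleep signal for a given access schedule.

    enable[i] is True when the memory must perform an access in cycle i.
    The memory is kept awake during every access cycle and during the
    wake_cycles cycles immediately before it, so that it has finished
    waking by the time the access arrives. It sleeps in all other cycles.
    """
    sleep = [True] * len(enable)
    for i, needed in enumerate(enable):
        if needed:
            # Stay awake for the access and for the wake-up time before it.
            for j in range(max(0, i - wake_cycles), i + 1):
                sleep[j] = False
    return sleep


# Accesses in cycles 0 and 4; the memory must already be waking in cycle 3.
enable = [True, False, False, False, True, False]
print(generate_sleep(enable))
```

Here the memory sleeps in cycles 1, 2 and 5 only. Cycle 3 is idle but cannot be used for sleeping, because the memory needs that cycle to wake up for the access in cycle 4.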

These two optimizations work together powerfully. The more redundant accesses are suppressed, the more the memory is idle and the more often it can be put into a sleep mode, saving even more dynamic and leakage power.

Calypto’s PowerPro is a sequential power optimization tool capable of doing deep sequential analysis of the whole design, including memories. It either guides designers to make manual changes safely or automatically updates the RTL to produce a power-optimized version.

There will be a webinar on reducing dynamic and leakage power in memories on Tuesday February 12th at 10am Pacific time. Webinar details, including registration, are here. There is a white paper on the same topic on the Calypto download page here (look for Memory Power Reduction in SoC Designs Using PowerPro MG).
