In an era where artificial intelligence workloads are growing in scale, complexity, and diversity, chipmakers are facing increasing pressure to deliver solutions that are not only fast, but also flexible and programmable. Semidynamics recently announced Cervell™, a fully programmable Neural Processing Unit (NPU) designed to handle scalable AI compute from the edge to the datacenter. Cervell represents a fundamental shift in how AI processors are conceived and deployed. It is the culmination of the evolution of Semidynamics’ IP offerings, from modular IP components to a tightly integrated, unified architecture rooted in the open RISC-V ecosystem.

The Roots of Cervell: A Modular Foundation

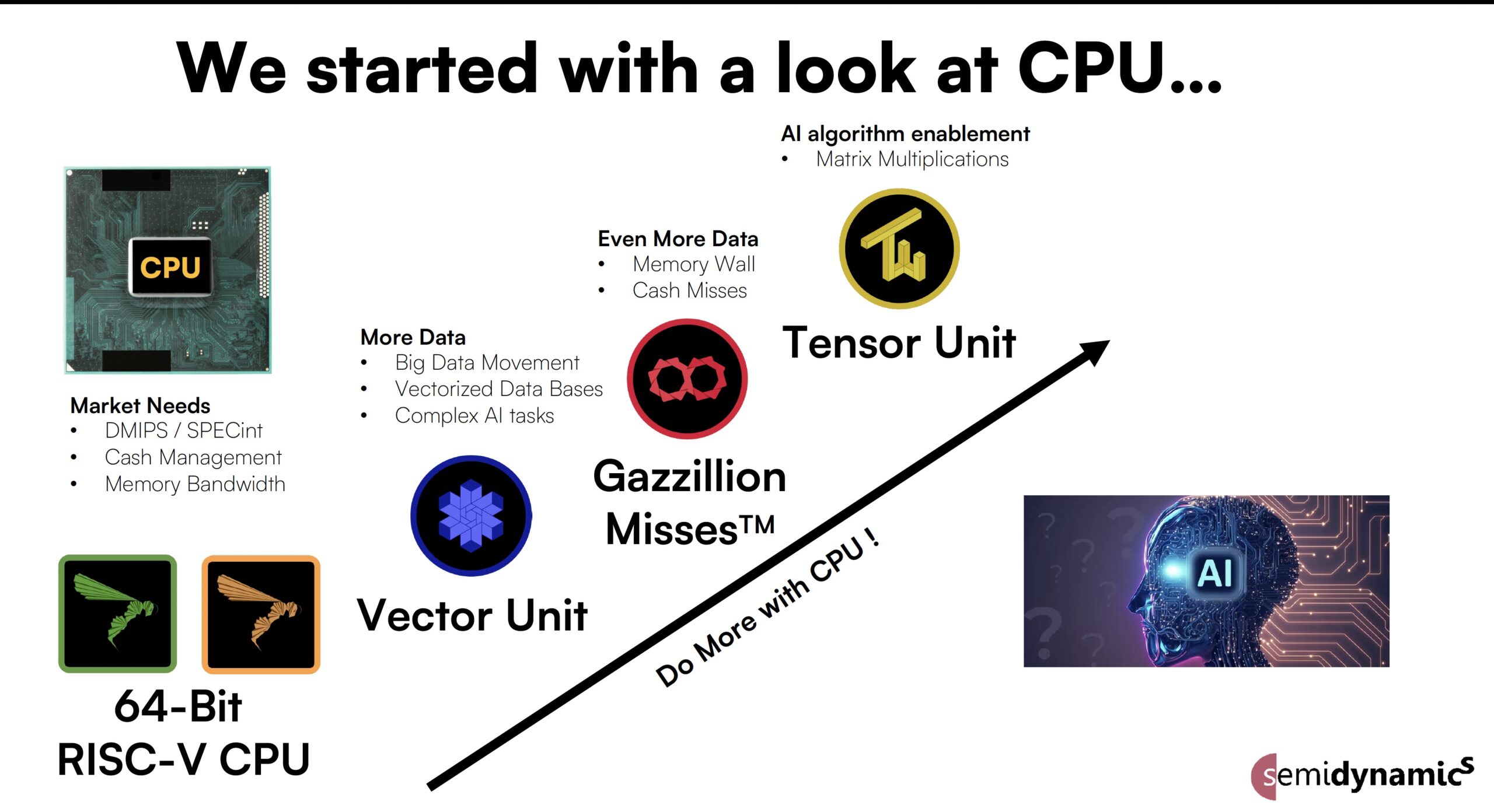

Cervell’s architectural DNA can be traced to Semidynamics’ earlier innovations in customizable processor components. The company began by developing highly configurable 64-bit RISC-V CPU cores that allowed customers to tailor logic and instruction sets to their unique requirements. These cores served as the foundation for control flow and orchestration in AI and data-intensive systems.

As AI workloads evolved, Semidynamics introduced vector and tensor units to extend the performance of its RISC-V platforms. The vector unit enabled efficient parallel processing across large data sets, making it well-suited for signal processing and inference tasks. Meanwhile, the tensor unit brought native support for matrix-heavy computations, such as those central to deep learning. Importantly, both units were designed to share the same register file and memory system, reducing latency and improving integration.

These components formed the basis of what the company called its “All-in-One” IP architecture—a modular approach that gave chip designers the freedom to assemble compute units tailored to their application. However, it still required developers to integrate, manage, and orchestrate these units at the system level. Cervell changes that.

Why Semidynamics Chose to Build an Integrated NPU

As AI models became larger and more complex, the need for a more unified compute platform became clear. Traditional approaches—where CPUs handle orchestration and discrete accelerators handle AI inference—were increasingly hindered by memory bottlenecks, data movement latency, and software complexity. Fragmented architectures that required separate cores for control, vector operations, and tensor math no longer met the performance and efficiency demands of modern AI workloads.

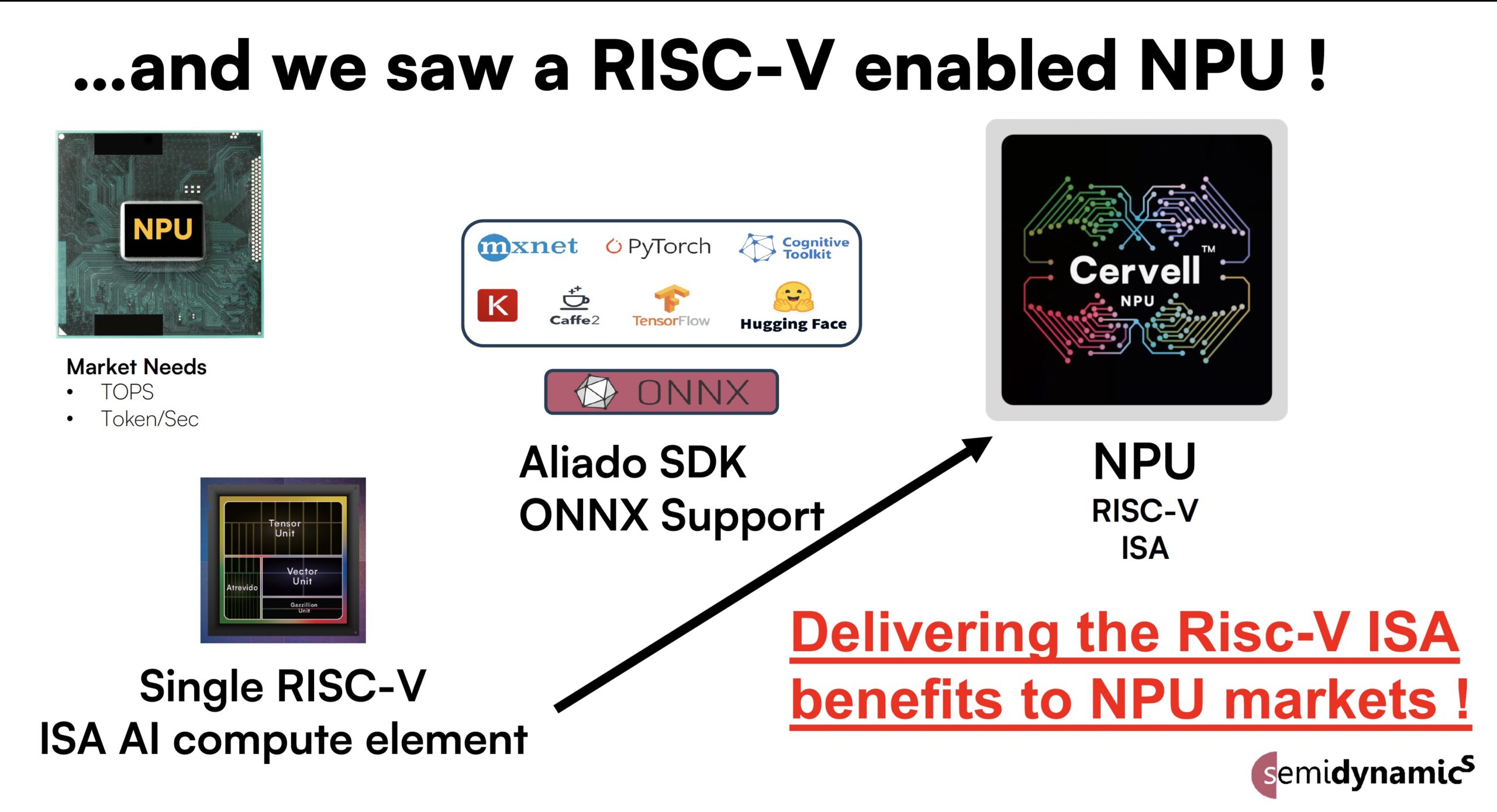

Moreover, as customers began to prioritize programmability and long-term flexibility, it became evident that an off-the-shelf NPU with fixed functionality would no longer suffice. Semidynamics saw the opportunity to converge its modular IP blocks into a single, coherent compute architecture. The result is Cervell, a RISC-V NPU that is not only scalable and programmable, but also designed to eliminate the need for fallback or offload operations.

A New Category of NPU: Coherent, Programmable, and Scalable

What distinguishes Cervell from traditional NPUs is its unification of compute components under one roof. Rather than treating the CPU, vector unit, and tensor engine as separate blocks requiring coordination, Cervell integrates all three within a single processing entity. Each element operates within a shared, coherent memory model, meaning data flows seamlessly between control logic, vector processing, and matrix operations without needing DMA transfers or synchronization barriers.
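
To make the data-movement argument concrete, here is a minimal toy model in Python. It is only a sketch: the stage functions, `run_discrete`, and `run_unified` are hypothetical names invented for illustration, not a Semidynamics API, and the byte count is simple bookkeeping rather than a measurement of any real hardware.

```python
# Toy model only: contrasts a pipeline of discrete units (each with its own
# memory) against units that share one buffer. All names are hypothetical.

def vector_stage(data):
    """Element-wise work, standing in for a vector unit."""
    for i in range(len(data)):
        data[i] = data[i] * 2

def tensor_stage(data):
    """A reduction, standing in for a tensor unit; result goes to data[0]."""
    data[0] = sum(data)

def control_stage(data):
    """A data-dependent branch, standing in for scalar control code."""
    if data[0] > 10:
        data[0] = 10

STAGES = [vector_stage, tensor_stage, control_stage]

def run_discrete(host_buf, elem_bytes=4):
    """Each stage copies the buffer in and out, like a DMA round trip."""
    bytes_moved = 0
    for stage in STAGES:
        device_buf = list(host_buf)                   # copy in
        bytes_moved += len(device_buf) * elem_bytes
        stage(device_buf)
        host_buf[:] = device_buf                      # copy out
        bytes_moved += len(device_buf) * elem_bytes
    return bytes_moved

def run_unified(shared_buf):
    """Every stage works in place on the same buffer; nothing is copied."""
    for stage in STAGES:
        stage(shared_buf)
    return 0

a, b = [1, 2, 3], [1, 2, 3]
moved_discrete = run_discrete(a)
moved_unified = run_unified(b)
print(a == b, moved_discrete, moved_unified)
```

Both paths compute the same result; the only difference in this model is the copy traffic, which grows with the number of hand-offs between units in the discrete case.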

This integration enables Cervell to execute a full range of AI tasks without falling back to an external CPU. Tasks that traditionally caused performance bottlenecks—such as control flow in transformer models or non-linear functions in recommendation engines—are now handled within the same core. With support for up to 256 TOPS in its highest configuration, Cervell achieves datacenter-level inference performance while remaining flexible enough for low-power edge deployments.
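
The cost of falling back to an external CPU can be illustrated with a short Python sketch. It assumes a hypothetical operator list and hypothetical sets of supported operators; none of these reflect Cervell's actual operator coverage. The function counts how often execution would switch between an NPU and a host CPU.

```python
# Illustrative only: the operator names and support sets are assumptions.

def count_handoffs(ops, npu_supported):
    """Count device switches when unsupported ops fall back to the host CPU."""
    handoffs = 0
    current = None
    for op in ops:
        device = "npu" if op in npu_supported else "cpu"
        if current is not None and device != current:
            handoffs += 1
        current = device
    return handoffs

# A made-up operator sequence mixing matrix math with other kinds of work.
model_ops = ["matmul", "softmax", "matmul", "topk", "matmul"]

fixed_function = {"matmul"}     # hypothetical NPU that only runs matrix ops
programmable = set(model_ops)   # hypothetical NPU that runs every op itself

fixed_handoffs = count_handoffs(model_ops, fixed_function)
programmable_handoffs = count_handoffs(model_ops, programmable)
print(fixed_handoffs, programmable_handoffs)
```

In this toy example the fixed-function case switches devices on every operator boundary, while the programmable case never leaves the NPU. Each switch in a real system would add synchronization and data-transfer overhead.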

Cervell’s Market Impact

By removing the artificial boundaries between compute types, Cervell delivers a simplified software stack and more predictable performance for AI developers. Its design challenges the status quo of traditional NPUs, which often rely on closed, fixed-function pipelines and suffer from limited configurability. In contrast, Cervell empowers companies to tailor the architecture to their algorithms, allowing for truly differentiated solutions.

The Role of RISC-V in Enabling the Cervell Vision

None of this would be possible without the open RISC-V instruction set architecture. RISC-V allows developers and chip designers to deeply customize the ISA, add proprietary instructions, and maintain compatibility with open software ecosystems. In the case of Cervell, RISC-V serves not only as a technical enabler, but as a strategic differentiator.

Unlike proprietary ISAs that limit innovation to a vendor’s roadmap, RISC-V allows Cervell’s capabilities to evolve alongside customer needs. The openness of RISC-V means companies can build processors that match their business and technical requirements without being locked into closed ecosystems. This flexibility is crucial in AI, where workloads shift quickly and the ability to adapt can be a competitive advantage.

Cervell vs. Semidynamics’ Earlier All-in-One IP

While the All-in-One IP architecture laid the groundwork for what Cervell has become, the differences are profound. Previously, customers had to select and integrate CPU, vector, and tensor components on their own, often dealing with toolchain and memory integration complexity. Cervell consolidates these elements into a pre-integrated NPU that is ready to deploy and scale.

Cervell is a holistic product that includes a coherent memory subsystem, programmable logic across all compute tiers, and a software-ready interface supporting common AI frameworks. Furthermore, Cervell introduces performance scaling configurations, from C8 to C64, ensuring the same architecture can serve everything from ultra-low-power IoT devices to multi-rack datacenter inference systems.

Summary: The Future of Scalable AI Compute

Cervell brings programmability, performance, flexibility, and scalability together for AI solutions. By building on RISC-V and eliminating the barriers between CPU, vector, and tensor processing, Semidynamics has delivered a unified architecture that scales elegantly across deployment tiers.

Cervell is a trademark of Semidynamics. Learn more at the Cervell product page.

