Systolic arrays, with their ability to highly parallelize matrix operations, are at the heart of many modern AI accelerators. Their regular structure is ideally suited to matrix/matrix multiplication, a repetitive sequence of row-by-column multiply-accumulate operations. But that regular structure is less than ideal for inference, where the real money will be made in AI. Here users expect real-time response and very low per-inference cost. In inference not all steps in acceleration can run efficiently on systolic arrays, even less so after aggressive model compression. Addressing this challenge is driving a lot of inference-centric innovation and Arteris NoCs are a key component in enabling that innovation. I talked with Andy Nightingale (Vice President of Product Management & Marketing, Arteris) and did some of my own research to learn more.

Challenges in inference

Take vision applications as an example. We think of vision AI as based on convolutional networks. Convolution continues to be an important component and now models are adding transformer networks, notably in Vision Transformers (ViT). To reduce these more complex trained networks effectively for edge application is proving to be a challenge.

Take first the transformer component in the model. Systolic arrays are ideally suited to accelerate the big matrix calculations central to transformers. Even more speedup is possible through pruning, zeroing weights which have low impact on accuracy. Calculation for zero weights can be skipped, so in principle enough pruning can offer even more acceleration. Unfortunately, this only works in scalar ALUs (or in vector ALUs to a lesser extent). But skipping individual matrix element calculations simply isn’t possible in a fixed systolic array.

The second problem is accelerating convolution calculations, which map to nested loops with at least four levels of nesting. The way to accelerate loops is to unroll the loops, but a two-dimensional systolic array can only support two levels of unrolling. That happens to be perfect for matrix multiplication but is two levels short of the need for convolution.

There are other areas where systolic arrays are an imperfect fit with AI model needs. Matrix/vector multiplications for example can run on a systolic array but leave most of the array idle since only one row (or column) is needed for the vector. And operations requiring more advanced math, such as softmax, can’t run on array nodes which only support multiply-accumulate.

All these issues raise an important question: “Are systolic arrays accelerators only useful for one part of the AI task or can they accelerate more, or even all needs of a model?” Architects and designers are working hard to meet that larger goal. Some methods include restructuring sparsity to enable skipping contiguous blocks of zeroes. For convolutions, one approach restructures convolutions into one dimension which can be mapped into individual rows in the array. This obviously requires specialized routing support beyond the systolic mesh. Other methods propose folding in more general SIMD computation support in the systolic array. Conversely some papers have proposed embedding a systolic array engine within a CPU.

Whatever path accelerator teams take, they now need much more flexible compute and connectivity options in their arrays.

Arteris NoC Soft Tiling for the New Generation of Accelerators

Based on what Arteris is seeing, a very popular direction is to increase the flexibility of accelerator arrays, to the point that some are now calling these new designs coarse-grained reconfigurable arrays (CGRA). One component of this change is in replacing simple MAC processing elements with more complex processing elements (PEs) or even subsystems, as mentioned above. Another component extends current mesh network architectures to allow for various levels of reconfigurability, so an accelerator could look like a systolic array for an attention calculation, or a 1D array for a 1D convolution or vector calculation.

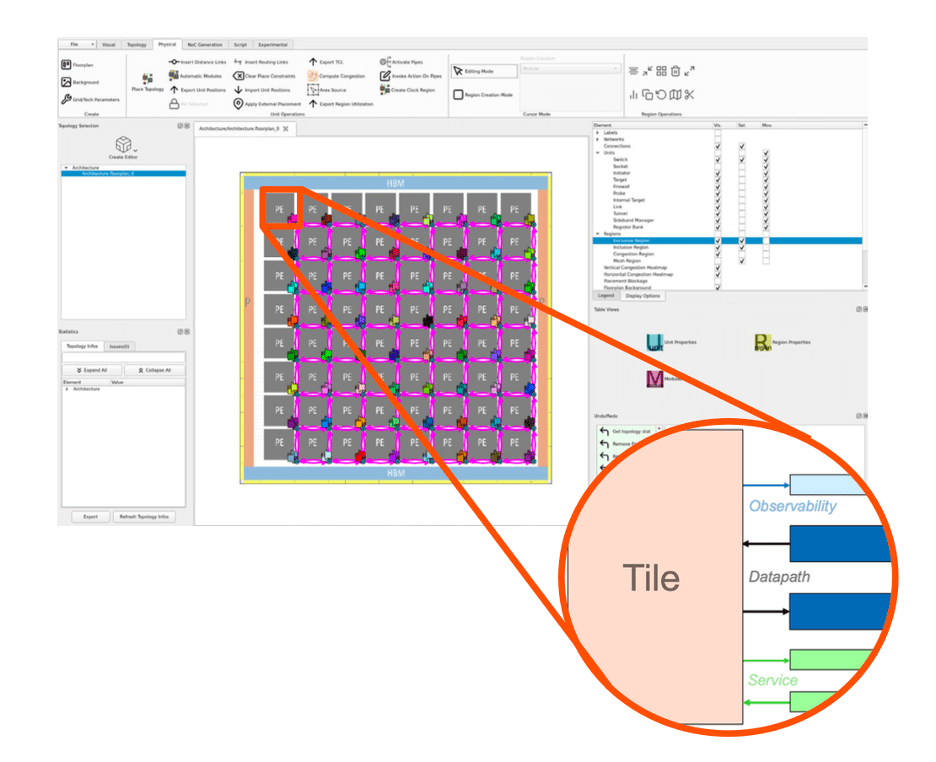

You could argue that architects and designers could do this themselves today without additional support, but they are seeing real problems in their own ability to manage the implied compexity. Leading them to want to build these more complex accelerators bottom-up. First build a basic tile, say a 4×4 array of PEs, then thoroughly validate, debug and profile that basic tile. Within a tile, CPUs and other (non-NoC) IPs must connect to the NoC through appropriate network interface units, for AXI as an example. A tile becomes in effect rather like an IP, requiring all the care and attention needed to fully validate an IP.

Once a base tile is ready, then it can be replicated. The Arteris framework for tiling allows for direct NoC-to-NoC connections between tiles, without need for translation to and from a standard protocol (which would add undesirable latency). Allowing you to step and repeat that proven 4×4 tile into an 8×8 structure. Arteris will also take care of updating node IDs for traffic routing.

More room for innovation

I also talked to Andy about what at first seemed crazy – selective dynamic frequency scaling in an accelerator array. Turns out this is not crazy and is backed by a published paper. Note that this is frequency scaling only, not frequency and voltage scaling since voltage scaling would add too much latency. The authors propose switching frequency between layers of a neural net and claim improved frames per second and reduced power.

Equally intriguing, some work has been done on multi-tasking in accelerator arrays for handling multiple different networks simultaneously, splitting layers in each into separate threads which can run concurrently in the array. I would guess that maybe this could also take advantage of partitioned frequency scaling?

All of this is good for Arteris because they have had support in place for frequency (and voltage) scaling within their networks for many years.

Fascinating stuff. Hardware evolution for AI is clearly not done yet. Incidentally, Arteris already supports tiling in FlexNoC for non-coherent NoC generation and plans to support the same capability for Ncore coherent NoCs later in the year.

You can learn more HERE.

Also Read:

Arteris at the 2024 Design Automation Conference

Arteris is Solving SoC Integration Challenges

Arteris Frames Network-On-Chip Topologies in the Car

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.