On the heels of Arm’s 2024 automotive update, Arteris and Arm announced an extension of their partnership. It now covers the latest AMBA 5 protocol for coherent operation (CHI-E), in addition to already supported options such as CHI-B, ACE and others. Two points are noteworthy here. First, Arm’s new Automotive Enhanced (AE) cores upgraded protocol support from CHI-B to CHI-E, and Arm and Arteris have collaborated to validate the Arteris Ncore coherent NoC generator against the CHI-E standard. Second, Arteris has also done the work to certify Ncore-generated networks with the CHI-E protocol extension for ASIL B and ASIL D. (Ncore-generated networks are already certified for earlier protocols, as are FlexNoC-generated non-coherent NoC networks.) In short, Arteris coherent and non-coherent NoC generators are already aligned with the latest Arm AE releases and ASIL safety standards. Which prompts the question: where are coherent and non-coherent NoCs required in automotive systems? Frank Schirrmeister (VP Solutions and Business Development at Arteris) helped clarify my understanding.

Automotive, datacenter/HPC system contrasts

Multi-purpose datacenters are highly optimized for task throughput per watt per dollar. CPU and GPU designs exploit highly homogeneous architectures for high levels of parallelism, connecting through coherent networks to maximize the advantages of that parallelism while ensuring that individual processors do not trip over each other on shared data. Data flows into and out of these systems through regular network connections, and power and safety are not primary concerns (though power has become more important).

Automotive system architectures are more diverse. Most of the data comes from sensors (drivetrain monitoring and control, cameras, radars, lidars, etc.), streaming live into one or more signal processing stages, commonly implemented in DSPs or (non-AI) GPUs. Processing stages for object recognition, fusion and classification follow. These stages may be implemented through NPUs, GPUs, DSPs or CPUs. Eventually, processed data flows into central decision-making, typically a big AI system that might equally be at home in a datacenter. These long chains of processing must be distributed carefully through the car architecture to meet critical safety goals, low power goals and, of course, cost goals. As an example, it might be too slow to ship a whole frame from a camera through a busy car network to the central AI system and only then begin to recognize an imminent collision. In such cases, initial hazard detection might happen closer to the camera, reducing what the subsystem must send to the central controller to a much smaller packet of data.

Key consequences of these requirements are that AI functions are distributed as subsystems through the car system architecture and that each subsystem is composed of a heterogeneous mix of functions: CPUs, DSPs, NPUs and GPUs, among others.

Why do we need coherence?

Coherence is important whenever multiple processors are working on common data, like pixels in an image, where there is opportunity for at least one processor to write to a logical address in a local cache and another processor to read from the same logical address in a different cache. The problem is that, without some mechanism to keep the caches in sync, the second processor doesn’t see the update made by the first processor and works on stale data. This danger is unavoidable in multiprocessor systems sharing data through hierarchical memory caches.
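To make the hazard concrete, here is a minimal Python sketch of two processors with private caches in front of a shared memory. Everything in it is an illustrative assumption: the `Cache` class, the `run` helper, the address `0x100` and the values are made up, and the "coherent" mode is a toy write-through, write-invalidate scheme, not how CHI-E or Ncore actually work.

```python
class Cache:
    """Toy private cache in front of a shared memory (hypothetical model)."""

    def __init__(self, memory, coherent):
        self.memory = memory      # shared backing store: dict of addr -> value
        self.coherent = coherent  # False models a cache with no coherence support
        self.lines = {}           # locally cached copies
        self.peers = []           # other caches that may hold the same address

    def read(self, addr):
        # On a miss, fetch from shared memory; on a hit, trust the local copy.
        if addr not in self.lines:
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        if self.coherent:
            # Toy coherence: push the value to memory and invalidate
            # any copies held by peer caches.
            self.memory[addr] = value
            for peer in self.peers:
                peer.lines.pop(addr, None)


def run(coherent):
    """Processor B caches a value, processor A updates it, B reads again."""
    memory = {0x100: 10}
    cache_a = Cache(memory, coherent)
    cache_b = Cache(memory, coherent)
    cache_a.peers, cache_b.peers = [cache_b], [cache_a]

    cache_b.read(0x100)        # B now holds the old value locally
    cache_a.write(0x100, 99)   # A updates the same logical address
    return cache_b.read(0x100)


print("non-coherent read:", run(coherent=False))  # 10, the stale value
print("coherent read:    ", run(coherent=True))   # 99, the updated value
```

In the non-coherent run, B keeps returning its old local copy because nothing tells it the data changed. In the coherent run, the write invalidates B's copy, so the next read fetches the new value.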

Coherent networks were invented to ensure disciplined behavior in such cases, through behind-the-scenes checking and control between caches. A popular example can be found in coherent mesh networks common in many-core processor servers. These networks are highly optimized for regular structures, to preserve the performance advantages of using shared cache memory while avoiding coherence conflicts.

Coherence needs are not limited to mesh networks threading through arrays of homogeneous processors. Most of the subsystems in a car are heterogeneous, connecting the multiple different types of functions already discussed. Some of these subsystems equally need coherence management when processing images through streaming operations. Conversely, some functions may not need that support if they can operate in separate logical memory regions, or if they do not need to operate concurrently. In these cases, non-coherent networks will meet the need.
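The rule of thumb in that last point can be written as a simple check: two functions are candidates for coherent interconnect only if they touch overlapping memory and run at the same time. The sketch below is an assumption-laden illustration; the `Agent` type, the address ranges and the time slots are all hypothetical, and a real architecture decision involves far more than this. It also ignores the explicit cache maintenance that software would typically still need when data is handed off between non-concurrent phases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """Hypothetical description of one function in a subsystem."""
    name: str
    region: range   # logical address range the function touches
    active: range   # time slots in which the function runs


def overlaps(a: range, b: range) -> bool:
    """True if two step-1 ranges share at least one value."""
    return a.start < b.stop and b.start < a.stop


def needs_coherence(x: Agent, y: Agent) -> bool:
    """Coherence matters only for concurrent access to shared memory."""
    return overlaps(x.region, y.region) and overlaps(x.active, y.active)


# Made-up example agents: two stages sharing a frame buffer at the same time,
# plus a logger working in its own memory region.
isp = Agent("image signal processor", range(0x1000, 0x2000), range(0, 10))
npu = Agent("object recognition NPU", range(0x1800, 0x2800), range(5, 15))
logger = Agent("diagnostics logger", range(0x8000, 0x9000), range(0, 15))

print(needs_coherence(isp, npu))     # True: shared region, concurrent
print(needs_coherence(isp, logger))  # False: separate memory regions
```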

A key consequence is that an automotive chip must combine both coherent and non-coherent NoCs for optimal performance.

Six in-car NoC topologies

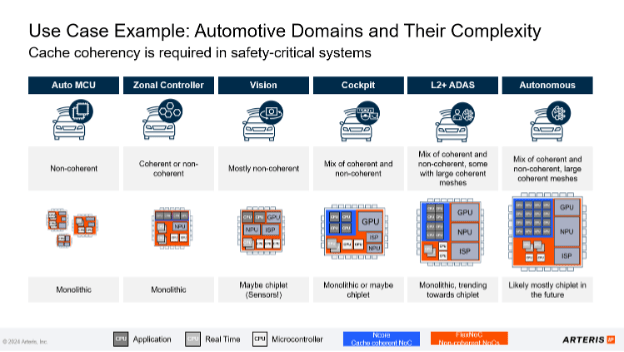

Frank illustrated with Arm’s use cases from their recent AE announcement, overlaid with the Arteris view of NoC topologies on those use cases (see the opening figure in this blog).

Small microcontrollers at the edge (drivetrain and window controllers, for example) don’t need coherency support. That doesn’t mean they don’t use AI; predictive maintenance support is an active trend in MCUs. But there is no need for high-performance data sharing, so non-coherent NoCs are ideal for these applications. Since these MCUs must sit right next to whatever they measure or control, they are located far from central or zonal controllers and are implemented as standalone (monolithic) chips.

Per Frank, zonal controllers may be non-coherent or may support some coherent interconnect, which I guess reflects differences in OEM architecture choices. Maybe streaming image processing is handled in sensor subsystems, or some processing is handled in the zonal controller. Then again, he sees vision/radar/lidar processing typically needing mostly non-coherent networks with limited coherent network requirements. While streaming architectures will often demand coherence support, any given sensor may generate only one or a few streams, needing at most a limited coherent core for initial recognition. Zonal controllers, by definition, are distributed around the car, so they are also monolithic chip solutions.

Moving into the car cockpit, infotainment (IVI) is likely to need more of a mix of coherent and non-coherent support, say for imaging overlaid with object recognition. These systems may be monolithic but also lend themselves to chiplet implementations. Centralized ADAS control (fusing inputs from sensors for lane recognition, collision detection, etc.) for SAE level 2+ and beyond will require more coherence support, while still needing significant non-coherent networks. Such systems may be monolithic today but are trending to chiplet implementations.

Finally, as I suggested earlier, the central AI controller in a car is fast becoming a peer to big datacenter-like systems. Arm has already pointed to AE CSS-based Neoverse many-core platforms (2025) front-ending AI accelerators (as they already do in Grace Hopper and, I’m guessing, Blackwell). To that big engine, add more specialized engines (DSPs, NPUs and other accelerators) in support of higher levels of autonomous driving, to centrally synthesize inputs from around the car and to take intelligent action on those inputs. Such a system will demand a mix of big coherent mesh networks wrapping processor arrays, a distributed coherent network to connect to some of those other accelerators and non-coherent networks to connect elsewhere. These designs are also trending to chiplet-based systems.
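The six use cases walked through above can be condensed into a small lookup structure. This is only a sketch of the descriptions in this section, not an Arteris or Arm classification. The `UseCase` type, the field names and the informal labels are my own assumptions, and where the text does not state a packaging choice the entry says so.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """Hypothetical summary record for one in-car use case."""
    name: str
    coherent: str       # informal label for how much coherent NoC is needed
    non_coherent: bool  # whether non-coherent NoC is part of the mix
    packaging: str      # monolithic vs. chiplet, as described in the text


USE_CASES = [
    UseCase("edge MCU", "none", True, "monolithic"),
    UseCase("zonal controller", "optional", True, "monolithic"),
    UseCase("vision/radar/lidar processing", "limited", True, "not stated"),
    UseCase("infotainment (IVI)", "mixed", True, "monolithic or chiplet"),
    UseCase("centralized ADAS", "significant", True,
            "monolithic, trending to chiplet"),
    UseCase("central AI controller", "mesh plus distributed", True,
            "trending to chiplet"),
]


def may_use_coherent_noc(use_case: UseCase) -> bool:
    """True for every use case where some coherent network may appear."""
    return use_case.coherent != "none"


for uc in USE_CASES:
    print(f"{uc.name:32} coherent: {uc.coherent:22} packaging: {uc.packaging}")
```

Laid out this way, the pattern is easy to see: every use case includes non-coherent networks, and the coherent share grows as you move from the edge toward the central controller.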

In summary, while there is plenty of complexity in evolving car architectures and the consequent impact on subsystem chip/chiplet designs, connected on-chip through both coherent and non-coherent networks, the intent behind these systems is quite clear. We just need to start thinking in terms of total car system architecture rather than individual chip functions.

Listen to a podcast in which Dan Nenni interviews Frank on this topic. Also read more about Arteris coherent network generation HERE and non-coherent network generation HERE.
