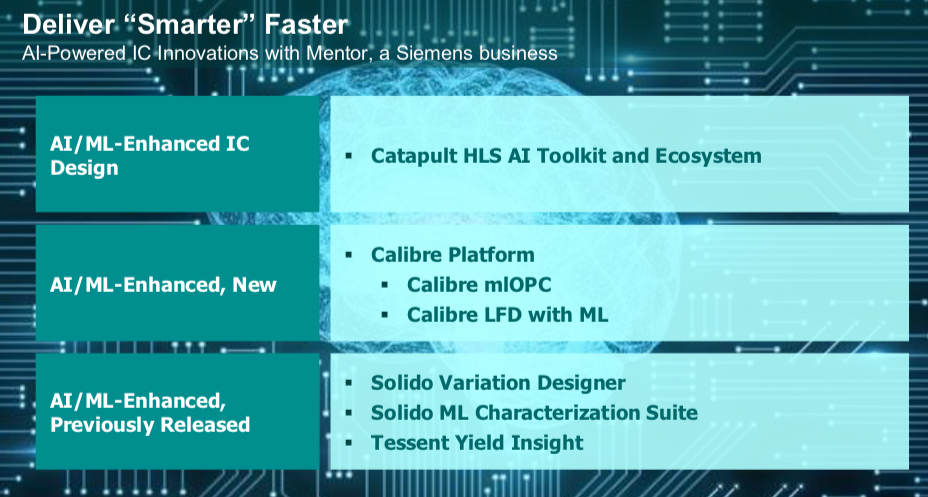

Mentor are stepping up their game in AI/ML. They already had a well-established start through the Solido acquisition (Variation Designer and the ML Characterization Suite) and through Tessent Yield Insight. They have also made progress in prior releases towards supporting the design of ML accelerators using Catapult HLS. Now they have gone further, rounding out (in my view) Catapult support and introducing new ML-enabled capabilities in Calibre.

Joe Sawicki (who needs no introduction but, for completeness, is EVP of IC EDA at Mentor/Siemens) kicked off this announcement with some background on AI/ML, starting with a nice infographic on startups in AI (over 2,000, with $27B in funding) and the AI chip market, estimated to reach $195B by 2027. Will all, or even most, of these startups make it? Of course not; startups have a significant fallout rate in any field. But the practical applications (computer vision, keyword/phrase recognition, localization and mapping for robots, among others) are real and have massive potential in many markets. Siemens in particular is very interested in the Industry 4.0 opportunities. Joe also noted that over half the fabless venture funding since 2012 has gone into AI startups, most of it relatively recently, which is even more impressive.

Joe sees challenges in this area in four domains: optimizing ML accelerator architectures, managing power, handling huge designs (up to reticle size) and providing high-speed I/O for fast memory access and communication. This is driven in part by winner-take-all competition in these application domains, which demands hardware architectures differentiated towards application-specific goals at the edge versus ultimate performance in data centers (DCs). Edge nodes need ultra-low power for long battery life, and DCs still need manageable power (no-one wants to scale out power hogs). Performance requirements in DC ML accelerators demand deeply intermixed logic with multiple levels of embedded memory, driving massive die sizes and the need for fast access to off-die working memory through interfaces such as HBM2 and GDDR6.

For Joe, this maps onto several design needs: top-down optimization through HLS; higher-capacity, faster, scalable tools everywhere (he noted that he sees this domain driving huge growth in emulation, particularly for power verification); power budget management; and a flexible AMS flow, especially at the edge, where you need to optimize from sensors straight into inference engines (aka smart sensors).

Ellie Burns (Marketing Director for digital design implementation solutions) followed, describing the progress they have made in Catapult HLS for AI/ML design. I first wrote about what they are doing in this area about a year ago. The value proposition is pretty clear. HLS works well with neural net architectures, and ML designers for edge applications want to differentiate functionally while also squeezing PPA as hard as they can (especially power, e.g. for wake-word/phrase detection), so fast analysis and verification through the HLS cycle is a great fit.

The Catapult team have been working for a while with customers such as Chips and Media, optimizing the architecture and flow, and they now have an updated release that includes (again in my view) some important advances. First, they now have a direct link to TensorFlow. Previously you had to work out for yourself how to map a trained network (almost certainly trained in TensorFlow) to your Catapult input; do-able, but not for the timid. Now that is automated, a big step forward. Second, they now have HLS toolkits for four working AI applications. Finally, they provide an FPGA demonstrator kit compatible with a Xilinx UltraScale board. You can check out and adapt the reference design and prove out your ML recognition changes from an HDMI camera through to an HDMI display. The kit provides scripts to build and download your design to the board; the board and Xilinx IP such as HDMI are not included.

Steffen Schulze (VP, Calibre product management) followed with the latest ML-driven release information for Calibre OPC and Calibre LFD. To me, almost anything in implementation is a natural fit for ML: analysis, optimization and accelerated time to closure are all good candidates for improvement through learning. Steffen said they have done a lot of infrastructure work under the Calibre hood, including adding APIs for the ML engine, and he sees potential for other applications to leverage this new capability as well.

On ML-enabled OPC, Steffen first presented an interesting trend graph: the predicted number of cores required to maintain a similar OPC turnaround time versus feature size. His example, for critical-layer OPC on a 100mm² die using EUV and multiple patterning, starts at around 10k cores at 7nm and trends more or less linearly to around 50k cores at 2nm.

He said that, as always, tool scalability helps, but customers are looking for more performance and increased accuracy through algorithmic advances to cope with these significantly diffraction-challenged feature sizes. As an interesting example of ML in a critical real-world application, they use the current OPC model to drive training. Then, when applying OPC to the real design, they use one ML (inference) pass to get close, followed by two traditional OPC passes to resolve inconsistencies and problems with unexpected configurations (presumably configurations not encountered in training). This approach delivers a 3X runtime reduction and, better yet, improved edge placement error (a key metric of OPC accuracy).
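To make the shape of that hybrid flow concrete, here is a toy one-dimensional sketch in Python. Everything in it is an assumption for illustration, not Calibre's algorithm: `litho()` is a made-up printing model, `opc_pass()` is a generic damped model-based correction step, and a linear regression (`np.polyfit`) stands in for the ML model, trained on the converged output of the traditional passes. It only shows why a learned first guess lets two conventional passes land closer than two passes from scratch; it says nothing about real runtimes.

```python
import numpy as np

# HYPOTHETICAL 1-D printing model: where each edge prints, given where it is drawn on the mask.
def litho(mask):
    return 0.8 * mask + 0.1 * np.tanh(mask)

def opc_pass(mask, target, step=0.5):
    """One traditional model-based OPC iteration: nudge each mask edge against its EPE."""
    epe = litho(mask) - target  # edge placement error per edge
    return mask - step * epe

def run_opc(target, mask=None, passes=2):
    """Run `passes` traditional iterations, starting from the drawn target unless a mask is given."""
    mask = target.copy() if mask is None else mask.copy()
    for _ in range(passes):
        mask = opc_pass(mask, target)
    return mask

def max_epe(mask, target):
    return float(np.max(np.abs(litho(mask) - target)))

rng = np.random.default_rng(0)

# 1) Training: the converged output of the traditional OPC model supplies the labels.
train_targets = rng.uniform(-3.0, 3.0, 500)
train_masks = run_opc(train_targets, passes=50)
slope, intercept = np.polyfit(train_targets, train_masks, 1)  # linear regression as the "ML" model

# 2) New design: one inference pass to get close, then two traditional passes.
design = rng.uniform(-3.0, 3.0, 200)
ml_mask = slope * design + intercept
hybrid_mask = run_opc(design, mask=ml_mask, passes=2)
baseline_mask = run_opc(design, passes=2)  # same two passes, but without the ML starting point

print(f"max EPE, ML pass only:         {max_epe(ml_mask, design):.4f}")
print(f"max EPE, ML + 2 OPC passes:    {max_epe(hybrid_mask, design):.4f}")
print(f"max EPE, 2 OPC passes, no ML:  {max_epe(baseline_mask, design):.4f}")
```

Running it prints the worst-case edge placement error after the ML pass alone, after the ML pass plus two traditional passes, and after two traditional passes with no ML start. The learned model does the bulk of the correction in one cheap step, and the traditional passes clean up what it got wrong.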

For Calibre LFD (lithography-friendly design), let me start with a quick explanation, since I’m certainly no expert in this area. The dummies’ guide, at least as this dummy understands it, is that processes and process variability today are so complex that the full range of potentially yield-limiting constructions can no longer be completely captured in design rule decks. Details that fall outside the scope of DRC rules require simulation to model potential differences between as-drawn and as-built geometries. The purpose of Calibre LFD is to do that analysis, based on an LFD kit supplied by the foundry.

The ML-based flow here is fairly similar: training on labeled data, followed by inference on target designs. The trained model identifies high-risk layout patterns, and only these are passed through for detailed simulation. This delivers a 10-20X performance improvement over full-chip simulation. Steffen also said that with this approach they have been able to find yield limiters that were not previously detected. Here too, ML delivers greatly increased throughput and higher accuracy.
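The screening idea can be sketched the same way. Again this is a hypothetical toy, not Calibre LFD: `litho_sim_fails()` stands in for detailed lithography simulation, each pattern is reduced to a drawn width and space, and the "model" is a deliberately simple learned classifier (the tightest axis-aligned box around the labeled hotspots, widened by a safety margin). The cost model at the end, with screening at 1% of the cost of simulation, is also an assumption; the point is that the speedup is governed by what fraction of patterns the screener passes on to simulation, plus the screening overhead.

```python
import numpy as np

# HYPOTHETICAL stand-in for detailed lithography simulation (the expensive step):
# a pattern "fails" if its drawn width and space are both too small.
def litho_sim_fails(width_nm, space_nm):
    return (width_nm < 22.0) & (space_nm < 24.0)

def train_box(width, space, labels, margin_nm=4.0):
    """Training: tightest box around the labeled hotspots, widened by a safety margin."""
    return width[labels].max() + margin_nm, space[labels].max() + margin_nm

def screen(width, space, box):
    """Inference: flag only patterns that fall inside the learned high-risk box."""
    w_max, s_max = box
    return (width < w_max) & (space < s_max)

rng = np.random.default_rng(7)

# 1) Labeled training: simulate a sample of patterns once to get ground-truth labels.
tw, ts = rng.uniform(18, 60, 5000), rng.uniform(18, 60, 5000)
box = train_box(tw, ts, litho_sim_fails(tw, ts))

# 2) Full-chip run: screen every pattern, run detailed simulation only on flagged ones.
w, s = rng.uniform(18, 60, 100_000), rng.uniform(18, 60, 100_000)
flagged = screen(w, s, box)
confirmed = litho_sim_fails(w[flagged], s[flagged])

# Ground truth for all patterns, computed here only to check that nothing was missed.
truth = litho_sim_fails(w, s)
recall = confirmed.sum() / truth.sum()

# ASSUMED cost model: detailed simulation = 1 unit per pattern, screening = 0.01 unit.
n = len(w)
speedup = n / (0.01 * n + flagged.sum())
print(f"simulated {flagged.sum()} of {n} patterns, recall = {recall:.3f}, speedup ~ {speedup:.1f}x")
```

The margin is the knob that trades recall against speed: a wider box is less likely to miss a hotspot but sends more patterns on to the expensive simulation.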

To learn more about what Mentor is doing in AI/ML in Catapult and Calibre, see them at DAC.