The need to exchange ever larger amounts of data between a system and the external world, or internally within an application, has pushed for the standardization of interconnect protocols. Standardization allows Integrated Circuits (ICs) from different vendors to be interconnected. Some protocols have been defined to best fit certain types of applications, like Serial ATA (SATA) for storage, while others cover a wide range of applications, like Universal Serial Bus (USB) or PCI-Express (PCIe). The wide deployment of these High Speed Serial Interconnects (HSSI) started at the beginning of the 2000s (even if the first ones appeared during the 1990s with USB, Ethernet and 1394), and the different standards organizations are constantly working to define the next step, resulting in an increase of the effective data bandwidth. This evolution has been the guarantee of an escape from product commoditization: a new generation of a protocol is defined every 18 to 30 months. Thus, an IP vendor has the opportunity to release an upgrade of a standard-based product frequently enough to keep the selling price in the high range. This is true for almost all protocols, at least for the standards that will be used for a decent period (say at least 5 to 10 years).

There are so many protocols, and so many ways of using them, that the real question is not whether a product will use a High Speed Serial Interconnect to exchange data, but which protocol to use, and which generation of that protocol. If you want to secure your investment, you had better choose a protocol supported by a solid roadmap. If your product needs to be interoperable, you need to select a widely deployed protocol, supported by many IP vendors, with Commercial Off-The-Shelf (COTS) products available.

Quick overview of the existing protocols

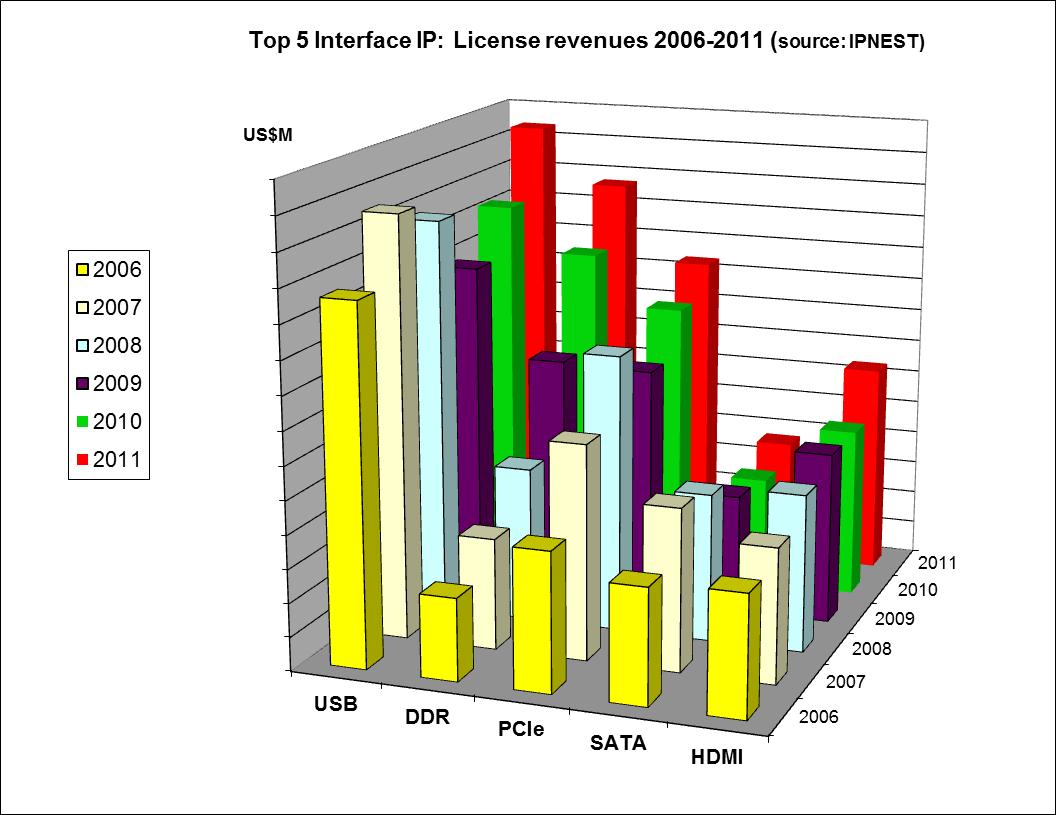

The IP market analysis is organized to start with the well-established markets (USB, then PCIe, SATA, HDMI and DDR), for which Gartner has reported results for several years. It then looks at emerging protocols like MIPI or DisplayPort, and finishes with the smallest, like Serial RapidIO, HyperTransport or InfiniBand, whose IP markets are too small to be reported separately and are consolidated by Gartner into “others”.

Universal Serial Bus (USB)

The USB standard is by far the oldest protocol, and the most widely used, across various application segments, the most important being PC and Consumer. Even before USB 3.0 was deployed, the market for USB IP was above $60M in 2008. USB 3.0 represents a technology break: the USB 2.0 bit rate was limited to 480 Mb/s, whereas the USB 3.0 PHY supports 5 Gb/s while remaining backward compatible with USB 2.0. Note that the specification is very close (but not exactly the same…) to the PCIe Gen-2 specification, not only because the PHY frequency is the same, but also because the USB 3.0 protocol has been derived from PCI Express.
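To put the two bit rates in perspective, here is a minimal sketch of the arithmetic, assuming the 8b/10b line coding used by both USB 3.0 at 5 Gb/s and PCIe Gen-2 (10 line bits carry 8 data bits). The function name and parameters are illustrative only, and the figures ignore packet framing and other protocol overhead, so real throughput is lower.

```python
from fractions import Fraction

# 8b/10b line coding: every 8 data bits travel as 10 bits on the wire.
ENCODING_8B10B = Fraction(8, 10)


def data_rate_mbytes_per_s(line_rate_mbps, efficiency=Fraction(1)):
    """Return the data rate in MB/s for a line rate given in Mb/s.

    `efficiency` is the fraction of line bits that carry data
    (1 means no line-coding overhead is taken into account).
    Hypothetical helper: protocol overhead is deliberately ignored.
    """
    return float(Fraction(line_rate_mbps) * efficiency / 8)


# USB 2.0: 480 Mb/s signalling rate, raw figure with no coding overhead applied.
usb2 = data_rate_mbytes_per_s(480)

# USB 3.0 (same PHY rate as a PCIe Gen-2 lane): 5 Gb/s with 8b/10b coding.
usb3 = data_rate_mbytes_per_s(5000, ENCODING_8B10B)

print(f"USB 2.0 raw rate:           {usb2:.0f} MB/s")
print(f"USB 3.0 rate after 8b/10b:  {usb3:.0f} MB/s")
print(f"Ratio:                      {usb3 / usb2:.1f}x")
```

Even after the 20% line-coding overhead is removed, the 5 Gb/s PHY leaves roughly eight times the raw USB 2.0 rate available to the protocol layer.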

PCI-Express (PCIe)

Following the wide adoption of the PCI and PCI-X standards, the PCI-SIG proposed PCI-Express, based on a high speed bidirectional differential serial link and software backward compatible with PCI. The early adopters, in 2004, were the PC chipset and GPU IDMs, followed by the Add-In-Card manufacturers using the ExpressCard, then by many OEMs in various segments, using either FPGA or ASSP solutions, or ASIC technology. Targeted applications for ASIC or ASSP are: PC GPU, PC Add-In-Card and External Disk Drives, Storage Blade servers with Bridges to other standards, Networking (Routers, Switches, Security Internet checks or IDS/IPS), and High End Consumer (Gaming consoles). Through the use of FPGA technologies, PCIe has also spread into segments like Vision and Imaging, Test and Measurement, and Industrial, Scientific and Medical.

The main reason for the success of PCIe is the guarantee of interoperability between different systems, based on the availability of numerous Commercial Off-The-Shelf (COTS) products, whether FPGA or ASSP.

Serial ATA (SATA)

Serial ATA is by nature an extension of ATA, an application-specific standard for disk drive control. SATA is obviously used in HDDs, and also in Set Top Boxes (STB), Blu-ray Disc players and Optical Video Disc players. The emerging Solid State Disk (SSD) segment initially used the SATA protocol, alongside PCI Express, to interface to the host, but the recently defined NVM Express standard probably indicates that SATA will disappear in the medium term as the preferred protocol for SSD. The Serial Attached SCSI (SAS) and Network Attached Storage (NAS) IP business is included in SATA.

Fibre Channel (FC)

As no specific market data are available for FC, it is counted as part of the “Others Interconnect” category, or included in the SATA report. This standard supports applications that combine storage and networking, like Storage Area Networks (SAN). FC is also evolving toward Fibre Channel over Ethernet (FCoE). As we can see, FC is also an application-specific standard, serving a niche market if we consider SATA as the wider parent market.

The full article is continued here (search for this picture on the page to find the continuation).

Eric Esteve from IPNEST