My new iPad lasts about 10 hours on a single charge, and its A5X processor is built on a 45nm process from Samsung. Processor chips for tablets like this use a multi-voltage IC design flow to reduce total power.

Oct. 18 Non-Volatile Memory Webinar, IBM validates OTP for Foundry program, & New Low-Risk Evaluation License

As the temperature drops and the bright red maple leaves pile up, so does the stack of projects at Novocell. If you expect to use Novocell's high-reliability, easy-to-integrate OTP in a project taping out in late Q4 or early Q1, NOW is the time to contact them.

Novocell and global IP partner, ChipStart, will host an informational WEBINAR next Thursday, October 18, at 9:00am Pacific (12:00pm Eastern).

The 90-minute webinar will provide an introductory overview of antifuse one-time programmable (OTP) memory, review the specific technical advantages of Novocell’s Smartbit™ bit cell relative to other antifuse technologies and to efuse and polyfuse technologies, and compare our hybrid OTP/MTP to EEPROM for multi-time write applications.

Whether or not you have used, or plan to use, Novocell IP in future projects, you are invited to sit in and learn how Novocell provides guaranteed 100% yield and reliability and the easiest drop-in NVM integration available in the category, while avoiding inefficient ECC and bit redundancy and costly post-programming “testing” to reprogram bad bits. Novocell OTP: Simply Done.

The webinar will include time for questions, and a lead engineer will be on hand to answer them.

Overviews of our Novobits, NovoBytes, and NovoHD products will be provided. Feel free to contact us with any questions.

NOVOCELL SEMICONDUCTOR, founded in 2001, was an early innovator in the commercialization of nonvolatile memory design using antifuse technology. In 2005, Novocell moved its headquarters to the recently constructed LindenPointe Innovative Business Campus, a 115-acre planned technical park.

Novocell holds some of the most innovative patents in its industry segment, giving it the ability to claim the highest reliability of any competitor for its OTP NVM product IP, and it has an enviable customer list that includes some of the largest electronics and avionics firms in the world.

Novocell maintains a strong network of technical experts throughout the world, which it leverages to drive innovation in its solutions. Included in this network are researchers and developers from Carnegie Mellon University, Penn State University, the University of Pittsburgh and other TTC member companies.

After 10 years promoting crossbar switches for interconnects, Sonics finally admits that NoC is better

Network on Chip (NoC) technology is probably one of the most fascinating new concepts to have been developed and implemented in real chips. A NoC can be integrated into various Systems on Chip (SoC) targeting several market segments: Video Processing, Consumer Electronics, Automotive, Networking and Multimedia (digital TV). Above all, the vast majority of Application Processor SoCs targeting the mobile segment (smartphones and media tablets) include a NoC. Let’s look at the semiconductor market dynamics for 2012, as forecast by Gartner in December 2011:

Application Processor is clearly the segment with the highest growth (+117%). This means that the design teams in charge of such designs, working at Qualcomm, TI, Nvidia, Samsung, Mediatek or Rockchip (to name just a few), will face the highest Time To Market (TTM) pressure you can imagine. And not only that: they are also required to deliver the best possible optimization in terms of power, performance and area. That is, before releasing their design to prototyping (or even layout), they will have done many architectural passes. This is where the NoC comes in.

Some of these teams prefer to use an internally developed solution, but we can now see that a majority tend to use an existing solution available as an Intellectual Property (IP) function. The chosen solution is clearly FlexNoC from Arteris, as we have shown in a previous post. Semiconductor customers such as Samsung, Qualcomm and TI have standardized on the Arteris NoC for their application processors and more complex modem basebands.

A NoC makes it possible to interconnect (multiple) CPUs, GPUs, DSPs, on-chip memories, off-chip DRAM through the DDRn memory controller, and the various functions from video accelerator to audio processor and from SuperSpeed USB to MIPI DSI or HDMI. If the NoC's only function were to interconnect all these pieces, it would already be useful, considering that in 2012 an Application Processor SoC integrates about one hundred IP blocks, as TI's OMAP5 does! The real power of the NoC, however, is to create an autonomous communication network within the chip, as we have explained in an earlier post.

Implementing NoC technology as a customer-ready product requires years of close customer interaction and integration work with tools in the EDA design flow. Arteris is way ahead here, since it has been working with customers on highly sophisticated chips since 2006 and has very demanding customers like Qualcomm, TI and Samsung. Creating a complete NoC product requires not only fully verified RTL output but also advanced internal simulation for quick what-if analysis, “Virtual Prototyping” for system-level design and performance verification, and links with all the EDA tools, especially an automated verification testbench environment like Arteris FlexVerifier.

Moreover, because of this high level of integration, the Arteris NoC allows faster SoC integration; I should rather say a better-optimized SoC, integrated faster. Indeed, when the SoC architect is working to optimize the IC architecture, he can do “Virtual Prototyping”, using for example Carbon Design Systems or the integrated FlexExplorer set of simulation tools to optimize a fabric (another name for the NoC), and literally play with the SoC architecture: change it, improve it, and explore the various possibilities. He can also export SystemC models for use within Synopsys Platform Architect or Carbon SoCDesigner to build a system-level virtual prototype more quickly, one that also runs far faster than traditional RTL simulation. Don’t forget: power, performance and area are the three magic words the design architect has to keep in mind during chip integration. Virtual prototyping the SoC has allowed him to optimize performance. In fact, you can select the fastest CPU core on the market, but if you don’t manage the data flows properly, you will end up with only a fraction of the expected performance.

For example, why would you run your NoC in the GHz range when the limiting factor is the memory controller, running at half a GHz at most? If you run your NoC at 1 GHz while the memory controller runs at 500 MHz or so, you just waste power! Of course it is possible to create a 1 GHz+ NoC, because the technology is based on synthesizable RTL and is limited only by the chosen semiconductor fabrication process. It just isn’t smart to do it.
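The frequency argument can be made concrete with the standard CMOS dynamic-power relation P = αCV²f. This is a minimal sketch, assuming the traffic that matters is bound for DRAM, so delivered bandwidth is capped by the slower of the NoC and the memory controller. The link widths, activity factor, switched capacitance and supply are hypothetical, and voltage is held fixed (raising it to close timing at 1 GHz would make the penalty worse).

```python
def dynamic_power_w(alpha: float, cap_f: float, vdd: float, freq_hz: float) -> float:
    """Standard CMOS switching power: P = alpha * C * V^2 * f."""
    return alpha * cap_f * vdd ** 2 * freq_hz


def delivered_bandwidth_gbps(noc_hz: float, noc_bits: int,
                             mem_hz: float, mem_bits: int) -> float:
    """Throughput to DRAM is limited by the slower of the two paths."""
    noc_bw = noc_hz * noc_bits / 1e9
    mem_bw = mem_hz * mem_bits / 1e9
    return min(noc_bw, mem_bw)


# Hypothetical figures: 128-bit NoC links and a 128-bit memory-controller port at 500 MHz.
MEM_HZ, WIDTH = 500e6, 128
ALPHA, CAP, VDD = 0.15, 2e-9, 0.9  # illustrative activity factor, switched capacitance, supply

for noc_hz in (500e6, 1e9):
    bw = delivered_bandwidth_gbps(noc_hz, WIDTH, MEM_HZ, WIDTH)
    p = dynamic_power_w(ALPHA, CAP, VDD, noc_hz)
    print(f"NoC at {noc_hz / 1e6:.0f} MHz: {bw:.0f} Gb/s to DRAM, {p * 1e3:.0f} mW dynamic")
```

In this toy model, doubling the NoC clock doubles dynamic power while the bandwidth reaching DRAM stays the same; a fuller comparison would also account for leakage and any latency benefit of a faster fabric.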

What about area? Remember that these Application Processors are sold in tens of millions of units, so you can imagine the value of every single square millimeter you can save! Very often a picture is worth more than words: in the picture above, you can see on the same SoC design that the routing congestion (left) caused by a crossbar architecture used for interconnects disappeared after moving to a NoC-based architecture. Everybody who has been involved in real chip design knows the cost of layout iterations in terms of TTM. You can lose months if you have to iterate through the full process again and again.
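To put a rough number on the value of a single square millimeter, here is a back-of-the-envelope sketch. Every input (wafer cost, wafer size, yield, annual volume) is a hypothetical placeholder rather than a figure from this post, and the model ignores edge loss and the fact that yield itself improves as die area shrinks.

```python
import math


def cost_per_mm2(wafer_cost_usd: float, wafer_diameter_mm: float, yield_frac: float) -> float:
    """Cost of one mm^2 of good silicon, ignoring wafer edge loss (a simplification)."""
    wafer_area_mm2 = math.pi * (wafer_diameter_mm / 2) ** 2
    return wafer_cost_usd / (wafer_area_mm2 * yield_frac)


def annual_savings_usd(mm2_saved: float, units_per_year: float, usd_per_mm2: float) -> float:
    """Silicon cost saved per year by shrinking every die by mm2_saved."""
    return mm2_saved * units_per_year * usd_per_mm2


# Hypothetical inputs: $5,000 per 300 mm wafer, 80% yield, 50 million units per year.
c = cost_per_mm2(5000.0, 300.0, 0.8)
savings = annual_savings_usd(1.0, 50e6, c)
print(f"~${c:.3f} per good mm^2 -> ~${savings / 1e6:.1f}M per year for each mm^2 saved")
```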

So, are you now convinced that NoC (or chip fabric) is one of the best technologies to have emerged over the last 5 to 10 years?

Then you have joined Sonics, a company that has sold crossbar-switch-based chip fabrics for the last ten years and has suddenly discovered that NoC-based architecture is far better, as it recently communicated on DeepChip. I think this communication from Sonics is the best tribute that could be paid to NoC architecture, as it comes from a company claiming that 1 billion ICs have been shipped in production by Sonics customers… all of them using crossbar switches! Arteris claims that (only) 100 million ICs designed by Arteris customers have been shipped, but all of them without exception use the FlexNoC Network on Chip.

Bravo Sonics, better too late than never!

Eric Esteve

Apple and The Road Ahead to Building an x86 Processor

A small blurb last week announced that Apple had hired Jim Mergard away from Samsung after just 15 months on the job. Before that, he was a 16-year AMD veteran who headed up AMD's low-power x86 Brazos processor team. In near synchronicity, AMD hired famed Apple designer Jim Keller to be its chief microprocessor architect. When all is said and done, we might be witnessing the biggest realignment in the processor industry, as Apple embarks on an audacious path to build a family of x86 processors to power its Mac PCs and iCloud servers, relieving it of Intel’s high prices and putting further daylight between itself and its competitors. Both individuals may end up working together to drive the vision.

The steady stream of talent hemorrhaging from AMD’s Austin design facility since the summer of 2011 has been readily scooped up by the likes of Samsung, ARM, Apple and Altera. It is, as one friend remarked to me, an incredibly deep pool of talent. And yet, as we witness the demise of Intel’s greatest competitor, the door is opening to a new threat whose only goal is to serve its own vast internal needs. If Apple were to form a team of several hundred engineers, or perhaps collaborate with the remnants of AMD serving as the design house and the legal, Intel-cross-licensed manufacturer, there could be a new high-volume product and business model that advances Apple’s goals. Specifically, Apple is looking four years out and sees a $10B payment to Intel for processors that could be reduced dramatically. After spending $500M to buy PA Semi and develop the custom A6, would it be out of the question to drop another several hundred million in order to save billions a year?

For the past several years, Apple has been hammering Intel about the high thermal design power (TDP) of its mobile x86 processors. Intel has finally responded, but only marginally. The current Ivy Bridge measures in at 17W, which is 4 times higher than what is ideal for the Mac Air form factor. Next year, Haswell will arrive with what looks to be about a 9-10W TDP. Again, this is too high for what Apple wants, and it leads to CPU throttling and performance degradation.

Moreover, what sticks in Apple’s craw is that Intel ULV mobile chips sell for over $220 vs. sub-$50 for standard chips. If Apple could have its way and had such a chip today, it would probably build something that performs between an A6 and Intel’s Ivy Bridge but consumes less than 5W TDP max. With this lower-TDP chip, Apple could push the envelope on its enclosures as it has with the iPhone 5. Ultimately, this design philosophy drives premium prices with no competitors in sight. Note that the driver of premium prices today is form factor, whereas in the 1990s it was processor MHz. This is the result of Moore’s Law over-delivering for the past decade despite Intel’s attempts to price based on performance.

Intel operates on a plan that purposely keeps Apple from getting ahead of the pack. It relies on an even playing field and on being able to prep the market a year or more in advance, with processor roadmaps shared equally with everyone so that all mechanical designs are in flight simultaneously. Hence the Ultrabook campaign.

In the game to come, the shakeout in the PC industry will result in the major software and e-commerce players owning the hardware, or should we say handing out hardware like the AOL disks that floated in mailboxes in the 1990s. Silicon, in the end, wants to be free and mobile. Microsoft has already tipped its hand to a business model where Windows and Office will be preloaded on tablets selling into corporations, even at the expense of its customers. Google’s acquisition of Motorola is a precursor to a similar model, if Google believes that vendors like Lenovo and Samsung are caught in the snares of Apple’s legal team.

But what will Apple do about the treadmill?

Intel has been notorious over the years for minimizing competition from AMD, Cyrix and others by turning the treadmill several times a year. It continues to do so with the Tick-Tock model. However, Apple has shown that in mobile it likes to run at a pace slower than the 2-year cadence of Moore’s Law, so that the digital pieces (i.e. processors, NAND, DRAM, etc.) eventually follow an asymptotic price curve towards $0. A slowing treadmill is death to Intel in the mobile space, but perhaps an accelerator on the cloud side, as more devices lead to more servers and storage.

If we assume Intel will continue to ship x86 processors into legacy PCs and the high-performance, high-priced x86 treadmill continues in the cloud, then it makes sense that an Apple x86 effort would push Intel into the arms of someone like Google or Microsoft, because they would, in the end, need a low-cost supply of cloud and client processors, and Intel could deliver based on its process technology lead.

Apple’s market share gains in the PC space have continued despite the fact that product refreshes have slowed and a strict price umbrella has been imposed at $999 and above. The calls by many ARM enthusiasts to convert the Mac Air to an ARM processor have gone unheeded for good reasons: maintaining strong brand separation and letting users migrate naturally to the iPad. The coming year will be decisive in understanding the true size of the PC market, especially within corporations. Either way, the vertical compute model calls for a single company to drive both the x86 processor platform and the software stack more economically than Wintel plus the PC OEMs can.

Side note: AMD’s current market cap is now less than $2B, and it will be interesting to see whether a suitor shows up at its door soon to acquire the rights to build x86 processors.

Full Disclosure: I am Long AAPL, INTC, ALTR, QCOM

A Brief History of Mobile: Generations 1 and 2

Mobile is one of the biggest markets for semiconductors, especially if you count not just mobile handsets but also the base-station infrastructure. No technology has ever been adopted so fast and so completely. There are approximately as many mobile phone accounts as there are people in the world. A few people have more than one, of course, and a few have none. Incredibly, however, almost every person in the world can make a phone call or send a text to almost every other person. Anecdotally, more people use a cell-phone than a toothbrush, or even pencil and paper.

This has all happened in around 25 years. In that time, mobile phones have gone from being a luxury for a few rich bankers to something that every salesperson had in their car. Then, in the mid-1990s, the market really took off as phones became cheap enough for everybody. Most people under 30 will never have a landline phone, since they have had a mobile phone ever since they were teenagers. Of course, as with all of electronics, this has largely been driven by changes in semiconductor technology that have made computer power exponentially cheaper, until we have affordable smartphones (already half the market worldwide) that, apart from screen size, are fully featured personal computers.

The computer power was not used just for Angry Birds or surfing the web. Under the hood in the cell-phones, increased computer power has made it feasible to build radio interfaces that make more efficient use of the available spectrum, compressing voice into fewer and fewer bits and fitting more and more phones into the same radio bandwidth.

The first true cell-phone call (as opposed to simply using walkie-talkie type radio technology from a car) was made in 1973 from Motorola to Bell Labs. Japan launched the first cellular network in 1979. In 1981, in what would turn out to be an important first step towards standardization, the Nordic countries of Finland, Sweden, Norway and Denmark launched a network that worked in all four countries. Nokia and Ericsson, based in Finland and Sweden respectively, became leaders in handsets and base-stations. Many other countries, especially in eastern Europe, also adopted the Nordic standard, giving even more economies of scale. The first US network launched in 1983.

The first generation of cell-phones was all analog. Also, there were few economies of scale, since many countries decided to have their own proprietary standards. Motorola, with years of experience in radio communications, became the market leader. In the early days of cell-phones, getting the radios to work efficiently was one of the big challenges.

The details vary depending on the precise standard, but at a high level, cell-phones all work the same way. There is a network of base-stations, mounted on big towers or on buildings, with large antennas. Sometimes these are disguised as trees or cacti, but often they are clearly visible, especially along freeways.

The radio interface between the cell-phone and the base-station consists of a number of channels, usually fairly large, that are used for calls (voice or data transmission). But there are also at least two other channels that are important: the control channel and the paging channel. The control channel is used by cell-phones to communicate with the base-station (and a second control channel is used by base-stations to communicate back to the cell-phones). The paging channel is used to tell cell-phones when there is an incoming call.

At any time, each powered-up cell-phone is communicating with one base-station. In fact, most likely it isn’t communicating much: it is powered down most of the time, waking up occasionally to check for an incoming call by briefly listening to the paging channel. It also monitors any other base-stations in range and measures their signal strength. If a neighboring base-station has a stronger signal, as will happen when a phone moves in a vehicle, then the phone disconnects from the original base-station and picks up the new one, which is called a handover. Making this work cleanly during a call is not straightforward, since it requires not just the phone to connect to a new base-station but also the call in progress to be rerouted to the new base-station.
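The handover rule described above (move to a neighbor whose signal is stronger) can be sketched as a small decision function. The hysteresis margin is an assumption added here: real networks use margins and timers so that a phone sitting at a cell boundary does not bounce back and forth, and in GSM the network, not the phone, makes the final decision from the phone's measurement reports. Base-station IDs and signal levels are hypothetical.

```python
from typing import Dict, Optional


def handover_target(serving_id: str, rssi_dbm: Dict[str, float],
                    margin_db: float = 3.0) -> Optional[str]:
    """Return the neighbor to hand over to, or None to stay on the serving cell.

    rssi_dbm maps (hypothetical) base-station IDs to measured signal strength.
    A neighbor must beat the serving cell by margin_db (hysteresis, an assumption).
    """
    serving = rssi_dbm[serving_id]
    neighbors = {k: v for k, v in rssi_dbm.items() if k != serving_id}
    if not neighbors:
        return None
    best_id = max(neighbors, key=neighbors.get)
    return best_id if neighbors[best_id] > serving + margin_db else None


# A phone driving away from cell "A" toward cell "B".
print(handover_target("A", {"A": -95.0, "B": -88.0, "C": -101.0}))  # B
print(handover_target("A", {"A": -90.0, "B": -89.0}))               # None (within margin)
```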

The most common reason for cell-phone calls to be dropped is that when this handover takes place there is no free channel on the new base-station to switch to. A similar problem can happen when trying to initiate a call. If a channel doesn’t free up within a given time then the call is abandoned and “call failed” or something similar appears on the phone.
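How often “no free channel” happens can be estimated with the Erlang B formula, the classic teletraffic model for a pool of channels with no queueing. It is not mentioned in the original text and is swapped in here as an illustration; it assumes Poisson call arrivals and that blocked calls are simply lost. The sketch uses the standard numerically stable recursion, and the cell size and traffic levels are hypothetical.

```python
def erlang_b(offered_erlangs: float, channels: int) -> float:
    """Probability that an arriving call finds all channels busy (Erlang B).

    Recursion: B(E, 0) = 1 and B(E, m) = E*B(E, m-1) / (m + E*B(E, m-1)).
    """
    if channels < 0 or offered_erlangs < 0:
        raise ValueError("channels and offered traffic must be non-negative")
    b = 1.0
    for m in range(1, channels + 1):
        b = offered_erlangs * b / (m + offered_erlangs * b)
    return b


# Hypothetical cell with 7 voice channels as busy-hour traffic rises.
for traffic in (2.0, 4.0, 6.0):
    print(f"{traffic:.0f} E on 7 channels -> {erlang_b(traffic, 7):.1%} of calls blocked")
```

The same blocking probability applies to a handover arriving at a busy neighboring cell, which is why congested cells drop more calls.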

A third channel is transmitted by the base-station so that it can be located. When a phone is first turned on, it searches all channels for this special channel, typically by looking for the channel with the highest transmitted power. This then allows it to find the control channel, and the phone then sends a message to the base-station announcing its existence.
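The power-on search can be sketched as scanning a set of channel measurements and picking the strongest. This is a simplification: the channel numbers, power readings and the minimum usable level are hypothetical, and a real handset must also decode the candidate to confirm it is a valid beacon before reading the control-channel information.

```python
from typing import Dict, Optional


def find_beacon(scan_dbm: Dict[int, float], min_dbm: float = -110.0) -> Optional[int]:
    """Return the channel with the highest received power, or None if all are below min_dbm."""
    candidates = {ch: p for ch, p in scan_dbm.items() if p >= min_dbm}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


scan = {12: -104.0, 37: -71.5, 58: -88.0, 90: -115.0}  # hypothetical channel -> dBm
print(find_beacon(scan))  # 37
```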

In normal use the phone wakes up every few seconds at a carefully timed moment and listens to the paging channel. If there is an incoming call for the phone, the base station will inform the handset of this during this moment when it is fully powered up. The handset then uses the control channel to set up the call (all calls, even incoming ones, are really initiated from the handset).
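Why this sleep-and-listen pattern matters for battery life is easiest to see as a duty-cycle calculation. All currents, timings and the battery capacity below are hypothetical round numbers chosen for illustration, not measured values for any phone or standard.

```python
def average_current_ma(sleep_ma: float, awake_ma: float,
                       awake_ms: float, period_ms: float) -> float:
    """Time-weighted average current for a radio that wakes for awake_ms every period_ms."""
    duty = awake_ms / period_ms
    return duty * awake_ma + (1.0 - duty) * sleep_ma


# Hypothetical: 1 mA asleep, 100 mA while listening, a 10 ms listen every 2 s.
idle = average_current_ma(sleep_ma=1.0, awake_ma=100.0, awake_ms=10.0, period_ms=2000.0)
always_on = average_current_ma(sleep_ma=1.0, awake_ma=100.0, awake_ms=2000.0, period_ms=2000.0)
battery_mah = 1000.0
print(f"paging duty cycle: {idle:.3f} mA -> about {battery_mah / idle:.0f} h standby")
print(f"always listening:  {always_on:.0f} mA -> about {battery_mah / always_on:.0f} h")
```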

Of course, the other way to initiate a call is that the user dials a number. Again, a message is sent on the control channel to set up the call and get a channel to transmit and receive the call itself.

In addition to voice networks, which get a dedicated channel during a call, there are packet-switched data networks used for email and internet access.

In 1982 the mish-mash of different cellular standards was clearly suboptimal. For one thing, a phone from, say, France would not work in Germany. And because French and German cell-phones were different, each was manufactured in comparatively low volumes. Work started on a new European standard in a committee originally called Groupe Spécial Mobile (GSM). In 1987 a large number of countries signed onto the standard, and over the years many more, not only from Europe, adopted it as the most viable standard. Notable countries that did not adopt GSM were Korea and Japan, along with some networks in the US that went with proprietary standards. The GSM standards were finally published in 1990. There is also a packet-switched data network, called GPRS.

Almost every country in the world adopted GSM, and so the volumes involved in the industry grew to be enormous; GSM reached approximately 80% share worldwide. Since Japan, Korea and the US did not adopt GSM, the Europeans ended up with a huge advantage. Motorola had to protect its domestic market and completely failed to become an early force in GSM. The same was true of Samsung and other Korean suppliers, and of all the Japanese suppliers, who were focused on their domestic markets. As a result, European handset manufacturers, especially Nokia and Ericsson, became world leaders.

GSM became a huge market for semiconductors too, as handset sales rose to huge volumes. For example, around 2000 about a billion cell-phones a year were being sold, one third of them by Nokia, which was thus shipping about a million phones every day. By the mid-1990s a GSM baseband (pretty much everything except the radio) would fit on about 5 chips, but by 2000 it was down to a single chip. This drove prices down and volumes up.

Power Integrity Challenges for High Speed and High Frequency Designs

There is an interesting discussion in the LinkedIn SoC Power Integrity Group regarding the power integrity challenges of high-speed and high-frequency designs, and more specifically the additional attention an on-chip power delivery network (PDN) requires as the operating frequency of ICs and SoCs increases.

The PDN has to provide stable power to the circuit across a broad frequency range, with sufficiently low voltage fluctuations at circuit supply pins. This means that PDN input impedance has to be below a certain value, from DC up to the highest frequency of interest (e.g. ~2X the fundamental switching frequency).
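The “certain value” is commonly expressed as a target impedance, a widely used power-integrity rule of thumb: Z_target = V_dd × ripple / ΔI, where ripple is the allowed fractional supply fluctuation and ΔI is the transient current step. The sketch below computes it and flags impedance samples that exceed it up to about 2× the switching frequency. The supply, ripple budget, current step and impedance samples are all hypothetical.

```python
from typing import Dict


def target_impedance_ohm(vdd: float, ripple_frac: float, i_step_a: float) -> float:
    """Rule-of-thumb PDN target: allowed voltage droop divided by the transient current."""
    return vdd * ripple_frac / i_step_a


def violations(z_ohm_by_hz: Dict[float, float], z_target: float,
               f_max_hz: float) -> Dict[float, float]:
    """Frequencies up to f_max_hz at which the PDN impedance exceeds the target."""
    return {f: z for f, z in z_ohm_by_hz.items() if f <= f_max_hz and z > z_target}


# Hypothetical: 0.9 V core, 5% ripple budget, 2 A current step, 1 GHz switching.
zt = target_impedance_ohm(0.9, 0.05, 2.0)
f_sw = 1e9
z_samples = {1e6: 0.005, 1e8: 0.012, 8e8: 0.031, 1.5e9: 0.018, 3e9: 0.060}  # Hz -> ohm
print(f"Z_target = {zt * 1e3:.1f} mOhm")
print("violations:", violations(z_samples, zt, 2 * f_sw))
```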

Typically, decoupling capacitors (decaps) are shunt-connected at several locations in the on-chip PDN to shape the impedance characteristic to the desired level. Proper decap design (size and layout placement), which is of utmost importance and dominates the performance of the PDN, calls for a very accurate high-frequency model of the PDN metallization across several layers. That model is the key component of PDN analysis, but it is also very difficult to obtain with conventional extraction and simulation methods…
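A decap is not an ideal capacitor: its series resistance (ESR) and series inductance (ESL) make it a series RLC, so it only lowers impedance effectively near and below its self-resonant frequency 1/(2π√(LC)). This minimal sketch uses hypothetical on-chip values to show the capacitive, resonant and inductive regions; it deliberately ignores the distributed metal grid, which the text identifies as the hard part to model.

```python
import math


def decap_impedance(freq_hz: float, c_f: float, esr_ohm: float, esl_h: float) -> complex:
    """Series R-L-C model of one decoupling capacitor: Z = R + j(wL - 1/(wC))."""
    w = 2 * math.pi * freq_hz
    return complex(esr_ohm, w * esl_h - 1.0 / (w * c_f))


# Hypothetical on-chip decap: 1 nF, 10 mOhm ESR, 10 pH ESL.
C, R, L = 1e-9, 0.01, 10e-12
f_res = 1.0 / (2 * math.pi * math.sqrt(L * C))
print(f"self-resonance ~ {f_res / 1e9:.2f} GHz")
for f in (1e8, f_res, 1e10):
    print(f"{f / 1e9:6.2f} GHz: |Z| = {abs(decap_impedance(f, C, R, L)) * 1e3:8.2f} mOhm")
```

Below resonance the capacitor term dominates, and above it the ESL term does, which is why the inductance of the surrounding metallization matters so much at GHz frequencies.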

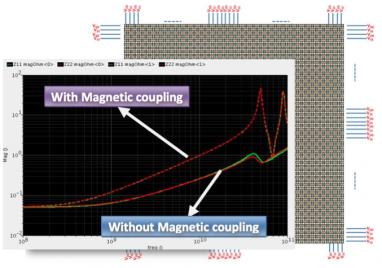

In most cases, designers resort to either an RC or an RLC grid model, neglecting magnetic coupling effects (represented by K elements in SPICE syntax), since these are very hard to extract and simulate. An accurate RLCK model of a medium-complexity PDN structure (e.g. 5 metal levels, 2mm x 2mm) would contain millions of K elements. A conventional electromagnetic (EM) solver, typically producing S-parameter models, would be an alternative, but most EM tools fail to handle such highly complex structures efficiently. But can we really afford to neglect magnetic coupling effects?
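The “millions of K elements” follows from simple counting: if the grid is broken into n inductive segments and every pair is allowed to couple, there are n(n−1)/2 mutual terms. The segment counts below are hypothetical, and practical extractors prune weak couplings, which this sketch does not attempt.

```python
def mutual_pairs(n_segments: int) -> int:
    """Number of K elements if every pair of n inductors is mutually coupled."""
    return n_segments * (n_segments - 1) // 2


for n in (1_000, 5_000, 20_000):  # hypothetical segment counts
    print(f"{n:>6} segments -> {mutual_pairs(n):>12,} K elements")
```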

One possible solution: Helic’s VeloceRaptor/X™ tool was used to model a medium-complexity PDN (2 metal layers, occupying 1mm x 1mm total area with ~2000 via transitions per net) in a 28nm CMOS node. VeloceRaptor/X is the industry’s highest-capacity extraction tool for high-frequency and RF design. RLC and RLCK models were extracted for the PDN, and the resulting impedances were compared over a wide frequency range. The PDN layout and impedance plots for the RLC/RLCK (i.e. without/with magnetic coupling) scenarios are shown below. The plots clearly show that magnetic coupling has a significant impact on the PDN impedance characteristic beyond 1 GHz. Therefore, for high-speed and high-frequency designs in the GHz range, magnetic coupling can no longer be ignored. A high-capacity extraction solution is needed to accurately model the PDN, so that a proper supply decoupling scheme can then be designed.
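Why RLC and RLCK results diverge at high frequency can be seen with a two-conductor toy: a supply line and its return. For opposite currents the loop inductance is L1 + L2 − 2M, and since reactance grows as ωL, neglecting M barely matters while resistance dominates at low frequency but becomes a large error in the GHz range. All values are hypothetical, this is not a model of the PDN in the study above, and in a real grid coupling between same-direction currents raises effective inductance instead, so neglecting K elements can err in either direction.

```python
import math


def loop_impedance_ohm(freq_hz: float, r_ohm: float,
                       l1_h: float, l2_h: float, m_h: float) -> float:
    """|Z| of a supply/return pair carrying opposite currents: R + j*w*(L1 + L2 - 2M)."""
    reactance = 2 * math.pi * freq_hz * (l1_h + l2_h - 2 * m_h)
    return math.hypot(r_ohm, reactance)


R = 0.05          # hypothetical loop resistance, 50 mOhm
L1 = L2 = 50e-12  # hypothetical self-inductance of each conductor
M = 30e-12        # hypothetical mutual inductance (k = M / sqrt(L1 * L2) = 0.6)

for f in (1e7, 1e8, 1e9, 5e9):
    z_rlc = loop_impedance_ohm(f, R, L1, L2, 0.0)  # coupling neglected (RLC)
    z_rlck = loop_impedance_ohm(f, R, L1, L2, M)   # coupling included (RLCK)
    print(f"{f / 1e9:5.2f} GHz: RLC {z_rlc * 1e3:8.1f} mOhm  RLCK {z_rlck * 1e3:8.1f} mOhm  "
          f"difference {100 * (z_rlc - z_rlck) / z_rlc:5.1f}%")
```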

Helic CTO Dr. Sotiris Bantas will be at the TSMC OIP Forum this week. Before Helic, he was a Research Engineer with the Institute of Communications and Computer Systems of the National Technical University of Athens (NTUA), where he was involved in the design of silicon RF circuits, high-frequency integrated filters and data converters in the course of EU-funded research. Working closely with leading European semiconductor companies during his research years, he developed a deep understanding of design automation needs, which he later translated into Helic’s innovative EDA products. Sotiris is an engaging guy, so please stop by the Helic booth and introduce yourself.

Advanced Node Design Webinar Series

At advanced process nodes, variation and its effects on the design become a huge challenge. Join Cadence® Virtuoso® experts for a series of technical webinars on variation-aware design. Learn how to use advanced technologies and tools to analyze and understand the effects of variation. We’ll introduce you to the latest Virtuoso techniques, best practices, and methodologies you can use to design and verify your advanced node designs.

In these concise 1-hour sessions, we’ll address hot topics including:

- How variation affects design performance at advanced process nodes

- Advanced technologies and tools to analyze and understand the effects of variation

- Using sensitivity analysis to identify devices and parameters that impact circuit performance

- Using optimization techniques to tune design performance and meet specs across the operating conditions

- Using worst-case corners analysis to narrow the number of corners against which the design needs to be verified

- How to use high-sigma yield analysis to predict up to 6 sigma yield

- Layout-dependent effects (LDE) on circuit functionality at advanced process nodes

- Using LDE analysis technologies to detect and mitigate LDEs early in the design phase and reduce design iterations
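Why “high-sigma” yield needs special techniques: under a Gaussian assumption, the probability that a single instance falls beyond k standard deviations (one-sided) is 0.5·erfc(k/√2), and brute-force Monte Carlo needs on the order of 1/p samples just to observe one failure. This minimal sketch uses only the standard library; the Gaussian assumption and the one-failure rule of thumb are simplifications, and it does not reflect how any particular tool computes high-sigma yield.

```python
import math


def tail_probability(k_sigma: float) -> float:
    """One-sided Gaussian tail: P(X > k*sigma) for a standard normal X."""
    return 0.5 * math.erfc(k_sigma / math.sqrt(2))


for k in (3.0, 4.5, 6.0):
    p = tail_probability(k)
    print(f"{k:>4} sigma: failure prob {p:.2e}, "
          f"~{1 / p:,.0f} Monte Carlo samples per observed failure")
```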

These webinars are methodology- and application-based. Plus, you don’t need to travel: you can view these presentations and demonstrations from the comfort of your home or office!

Nov 7, 9:00am PT Variation-Aware Design: Understanding the “What If” to Avoid the “What Now”

In this webinar, we will define variation, explain how it affects design performance, and show what you can do to analyze, understand, and limit these effects. We’ll demonstrate how to use sensitivity analysis to identify devices and design parameters that impact circuit performance, and how to use sensitivity analysis results to tune and improve design robustness.
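As a toy illustration of what a sensitivity analysis computes: the normalized sensitivity S = (∂f/∂p)(p/f) of a performance metric to each parameter, estimated here by central finite differences. The “circuit” is a hypothetical long-channel square-law drain-current equation with made-up parameter values, standing in for a real simulation; it is not how the Virtuoso tools implement the analysis.

```python
from typing import Callable, Dict


def drain_current(p: Dict[str, float]) -> float:
    """Toy square-law model: Id = 0.5 * k * (W/L) * (Vgs - Vth)^2."""
    return 0.5 * p["k"] * (p["W"] / p["L"]) * (p["Vgs"] - p["Vth"]) ** 2


def normalized_sensitivities(f: Callable[[Dict[str, float]], float],
                             params: Dict[str, float],
                             rel_step: float = 1e-4) -> Dict[str, float]:
    """S_p = (df/dp) * (p / f), estimated with central finite differences."""
    base = f(params)
    out = {}
    for name, value in params.items():
        h = value * rel_step
        up = dict(params, **{name: value + h})
        dn = dict(params, **{name: value - h})
        out[name] = (f(up) - f(dn)) / (2 * h) * value / base
    return out


PARAMS = {"k": 200e-6, "W": 1e-6, "L": 0.1e-6, "Vgs": 0.9, "Vth": 0.4}  # hypothetical
sens = normalized_sensitivities(drain_current, PARAMS)
for name, s in sorted(sens.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>3}: {s:+.2f}")
```

The ranking (here the gate voltage and threshold dominate over W, L and k) is the kind of result used to decide which devices and parameters to tune first.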

Nov 14, 9:00am PT Variation-Aware Design: Efficient Design Verification and Yield Estimation

In this webinar, we’ll show you how to use advanced capabilities, such as worst-case corners and high-sigma yield analyses, to efficiently cover the design space and estimate design yield.
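The corner explosion that worst-case corner analysis aims to tame is easy to count: every combination of process corner, supply and temperature is a separate simulation. This minimal sketch enumerates a hypothetical PVT grid against a toy delay model and picks the single worst corner by brute force; the corner names, ranges and delay formula are illustrative assumptions only.

```python
import itertools

PROCESS = {"ss": 1.15, "tt": 1.00, "ff": 0.88}  # hypothetical relative delay factors
VDD = (0.81, 0.90, 0.99)                         # volts
TEMP_C = (-40, 25, 125)                          # degrees C


def toy_delay_ps(proc: str, vdd: float, temp_c: float) -> float:
    """Illustrative only: delay rises at slow process, low supply and high temperature."""
    return 100.0 * PROCESS[proc] * (0.9 / vdd) * (1 + 0.001 * (temp_c - 25))


corners = list(itertools.product(PROCESS, VDD, TEMP_C))
worst = max(corners, key=lambda c: toy_delay_ps(*c))
print(f"{len(corners)} corners; worst = {worst}, {toy_delay_ps(*worst):.1f} ps")
```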

Dec 5, 9:00am PT Variation-Aware Design: Detecting and Fixing Layout-Dependent Effects using the Virtuoso Platform

In this webinar, you’ll see how advanced Virtuoso technologies can help you detect and fix LDE problems by rapidly producing layout and verification results that feed the industry’s first LDE-aware design flow. Learn how to fine-tune the corrections necessary to make sure LDE problems don’t stop you from getting your design manufactured on time.

Register now: https://www.secure-register.net/cadence/SILR_VAD_4Q12

Silicon-Accurate Mixed-Signal Fractional-N PLL IP Design Paper

Silicon Creations will be presenting a paper with Berkeley Design Automation at the TSMC Open Innovation Platform (OIP) Ecosystem Forum next week, where TSMC’s design ecosystem member companies and customers share real-case solutions for design challenges within TSMC’s design ecosystem:

This presentation will describe the challenges in achieving silicon-accurate design and verification of a fractional-N PLL IP fabricated in the TSMC 28nm HP process. Silicon Creations supplies high-performance semi-custom analog and mixed-signal IP that can be optimized for each individual application. The designs include PLLs, DC-to-DC converters, data converters, high-speed I/O, and SerDes. Silicon Creations uses the Analog FastSPICE™ (AFS) Platform from Berkeley Design Automation for nanometer circuit verification. With the AFS Platform, designers increase their productivity and can perform large analog circuit signoff with nanometer SPICE accuracy. AFS is certified in the TSMC SPICE-Qualification Program, and the AFS device noise sub-flow is validated in the TSMC AMS Reference Flow.

This paper provides details on the verification of programmable delta-sigma fractional-N PLL IP used as a multi-function, general-purpose frequency synthesizer. The post-layout netlist of this circuit includes ~108 K elements with ~18.1 K MOS devices. The IP was fabricated using the TSMC 28nm HP process. AFS was used to characterize the VCO and to verify the closed-loop behavior of the post-layout PLL. For the VCO, the dominant noise source of the PLL, AFS Periodic Noise analysis was used to characterize the circuit over 12 temperature, frequency, and process corners, with excellent silicon correlation. For the post-layout closed-loop PLL verification, AFS demonstrated up to 18X speedup compared with traditional SPICE for locking simulation, and AFS Transient Noise results for PLL phase noise were within 1-2 dB of silicon measurement.
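The “fractional-N” part works by dithering the integer feedback divider so that its average ratio is N + F/2^B, giving f_out ≈ f_ref × (N + F/2^B). The sketch below uses a first-order accumulator (the simplest delta-sigma modulator, often called MASH-1). The post does not say which modulator order this PLL uses, and practical designs usually use higher-order modulators to push quantization noise to higher offsets; the reference frequency, N, F and B are hypothetical.

```python
from typing import List


def first_order_dsm(frac_word: int, bits: int, cycles: int) -> List[int]:
    """Carry-out sequence of a B-bit accumulator; its mean is frac_word / 2**bits."""
    modulus, acc, carries = 1 << bits, 0, []
    for _ in range(cycles):
        acc += frac_word
        carries.append(acc // modulus)  # 1 when the accumulator overflows, else 0
        acc %= modulus
    return carries


F_REF = 25e6            # hypothetical reference frequency (Hz)
N_INT, F, B = 40, 3, 4  # divide by 40 + 3/16 on average
divs = [N_INT + c for c in first_order_dsm(F, B, 16)]
avg_div = sum(divs) / len(divs)
print("divider sequence:", divs)
print(f"average ratio {avg_div:.4f} -> f_out ~ {F_REF * avg_div / 1e6:.4f} MHz")
```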

For more information, see http://www.hwacomms.com/TSMC2012/OIPEcosystemForum/Attendee/agenda.html

Silicon Creations offers high-value integrated circuit design services. As product specifications continually grow more aggressive, trade-offs between performance, power, and area frequently require custom-optimized solutions that are not found with traditional off-the-shelf IP. Silicon Creations supplies high performance custom analog and mixed signal designs that can be optimized for each individual system.

Silicon Creations is focused on providing world-class silicon IP for precision and general-purpose timing (PLLs), chip-to-chip SerDes and high-speed differential IOs. Silicon Creations’ IP is proven from 28nm to 180nm. With a complete commitment to customer success, its IP has an excellent record from first silicon to mass production in customers’ designs. Silicon Creations was founded in 2006, is self-funded, and is growing. The company has development centers in Atlanta, GA and Krakow, Poland and world-wide sales representation. For more information, visit http://www.siliconcr.com/.

Berkeley Design® Automation (BDA®), Inc. is the recognized leader in nanometer circuit verification. Analog, mixed-signal, RF, and custom digital circuitry are the biggest differentiators in nanometer integrated circuits. Implemented in GHz nanometer CMOS, these circuits introduce a new class of verification challenges that traditional transistor-level simulators cannot adequately address. Accurately characterizing these circuits’ performance is essential to our customers’ silicon success.

Berkeley Design Automation delivers the world’s fastest nanometer circuit verification platform, the Analog FastSPICE (AFS) Platform, together with exceptional application expertise to uniquely address our customers’ challenges. More than 100 companies worldwide rely on Berkeley Design Automation to verify their nanometer-scale circuits.

The AFS Platform is a single executable that seamlessly integrates into the industry’s leading design environment to deliver:

- 5x-10x faster nanometer SPICE accurate simulation on a single core

- Certified to 28nm by the world’s leading foundries

- >120 dB transient dynamic range

- 2x-4x more performance via 4-8 core multithreading

- Industry’s most accurate and efficient analog/RF circuit characterization

- Full-spectrum device noise analysis silicon-correlated within 1-2 dB

- Near-linear performance scaling with increasing number of cores

- Fastest full-circuit and post-layout transistor-level verification

- Industry’s fastest near-SPICE-accurate functional verification

- >10M-element capacity with no accuracy degradation

- Mixed-mode co-simulation with leading Verilog simulators

Berkeley Design Automation has received numerous industry awards and is widely recognized for its technology leadership and contributions to the electronics industry. The company is privately held and backed by Woodside Fund, Bessemer Venture Partners, Panasonic Corp., NTT Corp., IT-Farm, and MUFJ Capital.


Dear Meg, HP is Still a Goner

A year ago, Meg Whitman decided it was time to venture back into the business world by grabbing the HP CEO baton from a badly wounded Leo Apotheker. What for? My best guess is to enter the Pantheon of Great Turnaround CEOs of failing companies, best exemplified by Lou Gerstner’s work at IBM in the early 1990s. It comes too late, though, as the $120B company has none of the core legacy that IBM had with mainframes, the kind of legacy that ultimately allows a company to rise from the ashes. HP, along with Dell and the other PC vendors, is locked into a business model whose fate is determined by others. It wasn’t supposed to be this way when the decision was made in the 1990s to cast off Bill and Dave’s legacy for the promise of dominating computing from Big Iron all the way down to PCs. Many people forget that HP once designed and built RISC processors in its own leading-edge fabs. Ironically, surrendering this capability was not supposed to leave HP open to its eventual destruction at the hands of system competitors who would build their own processors (i.e. Apple and Samsung).

Whitman’s warning a week ago that HP would enter the valley of death for at least another year and then rise again in 2014 or 2015 sent nervous investors scampering for the exits. As a result, HP’s stock is now down 50% in the past six months (Dell is also down 50%). The squeeze on PC profits is most painful in the consumer market, where preferences and pocketbooks seek out sleek smartphones and tablets. A retreat to the corporate market is in motion, but it is hardly a rampart against the coming onslaught.

A little over a year ago, I wrote in a blog that the disaster culminating in the dismissal of then-HP CEO Leo Apotheker over his attempt to spin out the PC Group could be traced all the way back to July 6, 1994, when HP signed an agreement with Intel to stop its own RISC development in exchange for a partnership to define the 64-bit Itanium architecture. This set in motion the decisions whereby the highly profitable instrumentation groups (the true legacy of Bill and Dave) were spun off, and in their place a full range of proprietary architectures were acquired (Tandem, DEC VAX and Alpha, etc.). All would be converted to Itanium, and customers would have their one-stop shop fulfilled by HP. Intel would make it all seamless by paying billions to port the software industry over to the new architecture, all the while telling a story that 32-bit x86 processors would fairly soon hit a performance wall. The two were married to the vision of dominance, and off they went.

Software, however, would not be so easily led to the new architecture, and in the late 1990s the never-ending push for computers to be smaller and more mobile extended the life of x86 while Itanium wallowed in overextended developments. WiFi entered the picture in the early 2000s and drove mobile volumes past desktops. AMD saw an opening in the server space and pressed ahead with 64-bit x86 processors, and within a year Intel was forced to concede with its own 64-bit x86 chips. Itanium was de facto dead for all the world to see. Keeping Itanium alive de jure meant that the parking meters were left running, as the Big Iron computers would require constant R&D maintenance $$$ from both Intel and HP. At the end of the day, one has to wonder what the bill would have been had HP stayed with its own RISC development for workstations.

Steve Jobs has been called a Genius Control Freak for good reasons. It must have occurred to him sometime after the PortalPlayer-driven iPod was released that to truly own the marketplace without competitors chomping at his heels, he had to do the opposite of what HP did and get into the processor business. Let’s not gloss over this, because building a mobile processor on a near-leading-edge process has resulted in many $100M+ sinkholes in Silicon Valley (I speak from experience). However, the savings from not paying for Intel’s $200 mobile processors add up quickly. The new A6 processor has been described in glowing terms due to its outstanding performance and low-power capabilities. I think it is something of an overkill for the iPhone 5, but it is most likely targeting a broad set of new devices in the coming year. The greater point of Apple’s effort, and that of Samsung, is that they have proven that going vertical in the computer industry was the only path to success; something HP abandoned in 1994, and which in the end will make Meg Whitman’s turnaround effort an exercise in futility.

FULL DISCLOSURE: I am Long AAPL, INTC, ALTR, QCOM

Altera’s Use of Virtual Platforms

Altera have been making use of Synopsys’ virtual platform technology to accelerate time to volume: software development proceeds in parallel with semiconductor development, so it does not need to wait until hardware is available.

In the past, creating the virtual platform has been comparatively time-consuming, but the entire ecosystem of partners providing models, TLMcentral, DesignWare cores and peripherals has come together so that creating the virtual prototype is no longer hard. Plus, stealing some of the fast iteration approach from agile software development means that people realize that the entire prototype does not need to exist before it can be used. The software and platform can be co-developed incrementally.

The software development environment does not need to be changed at all; the same tools can be used for the virtual prototype as for the actual silicon. Furthermore, whereas actual hardware is really tough to debug once you get down to the signal level, a virtual prototype is simple. There is complete observability, and no need for oscilloscopes and logic state analyzers to pick up the signals of interest, assuming those signals are even accessible.

So what did Altera actually do? They used Synopsys’ Virtual Prototyping Solution to create a virtual target of their new Cyclone® V and Arria® V SoC FPGA devices. The Altera® SoC FPGA Virtual Target is a fast functional simulation of a dual-core ARM® Cortex™-A9 MPCore™ embedded processor development system. This complete prototyping tool, which models a real development board, runs on a PC and enables customers to boot the Linux operating system out of the box. Designed to be binary- and register-compatible with the real hardware that it simulates, the Virtual Target enables the development of device-specific, production software that can run unmodified on real hardware.
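What "register-compatible" buys, and why a virtual prototype gives complete observability, can be shown with a deliberately tiny sketch. Nothing here is Synopsys’ or Altera’s API (commercial virtual prototypes are typically built from SystemC/TLM models); the UART, its register offsets, and the base address are all hypothetical. The driver function only issues 32-bit reads and writes to addresses, exactly as it would on silicon, while the modeled bus records every access for debugging.

```python
from __future__ import annotations


class VirtualUart:
    """Hypothetical memory-mapped UART model (register map is illustrative only)."""

    DATA = 0x00    # write: transmit the low byte
    STATUS = 0x04  # read: bit 0 = transmitter ready (always ready in this model)

    def __init__(self) -> None:
        self.tx_bytes = bytearray()

    def read32(self, offset: int) -> int:
        return 0x1 if offset == self.STATUS else 0x0

    def write32(self, offset: int, value: int) -> None:
        if offset == self.DATA:
            self.tx_bytes.append(value & 0xFF)


class VirtualBus:
    """Routes 32-bit accesses to devices and records every one of them."""

    def __init__(self) -> None:
        self.regions: list[tuple[int, int, VirtualUart]] = []
        self.trace: list[tuple[str, int, int]] = []  # (op, address, value)

    def attach(self, base: int, size: int, device: VirtualUart) -> None:
        self.regions.append((base, size, device))

    def _decode(self, addr: int) -> tuple[VirtualUart, int]:
        for base, size, device in self.regions:
            if base <= addr < base + size:
                return device, addr - base
        raise ValueError(f"bus error: no device at {addr:#010x}")

    def read32(self, addr: int) -> int:
        device, offset = self._decode(addr)
        value = device.read32(offset)
        self.trace.append(("R", addr, value))
        return value

    def write32(self, addr: int, value: int) -> None:
        device, offset = self._decode(addr)
        device.write32(offset, value)
        self.trace.append(("W", addr, value))


def uart_puts(bus: VirtualBus, base: int, text: str) -> None:
    """Driver code: sees only addresses and registers, as it would on silicon."""
    for ch in text.encode("ascii"):
        while not bus.read32(base + VirtualUart.STATUS) & 0x1:
            pass  # poll until the transmitter is ready
        bus.write32(base + VirtualUart.DATA, ch)


if __name__ == "__main__":
    UART_BASE = 0x1000_0000  # hypothetical base address
    bus = VirtualBus()
    uart = VirtualUart()
    bus.attach(UART_BASE, 0x100, uart)
    uart_puts(bus, UART_BASE, "boot\n")
    print(uart.tx_bytes.decode("ascii"), end="")
    for op, addr, value in bus.trace[:4]:
        print(op, hex(addr), hex(value))
```

The design choice that matters is the boundary: as long as the model honors the same addresses and register semantics as the hardware, driver code written against it needs no changes, and the trace list stands in for the logic analyzer.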

The result: the big advantages that have always been there, coupled with lower barriers to use, mean that broad market adoption really (finally!) seems to be starting. For a company like Altera that doesn’t ship its own systems, there are two big advantages, one direct and one indirect.

The indirect advantage is that Altera’s customers and OEMs can accelerate their own software development and thus bring their systems to market sooner and with higher quality. Application software development can’t start until the operating system is at least partially running, which can’t start until there is some substrate (virtual or silicon) on which to run and test it. Virtual prototypes pull in the start, and thus the finish, of software development.

The direct advantage is that by accelerating the software development of their OEMs, they accelerate the time when they can ship in volume, which only happens when the customers’ software development is ready for market. In turn that means that there is less of a delay from, for example, introducing a new product or a new process node, to when Altera starts to get a real return on the investment.

The Altera/Synopsys success story is here. The Altera Q&A is here.