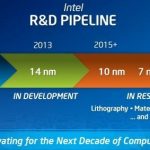

Intel's announcement during its January earnings call that it would hike Capex in 2013 over 2012 left many folks scrambling for the reasons behind it. Here was a company exiting 2012 with 50% utilization of its advanced 22nm process and yet signaling that more building was to come. Furthermore, this increase seems counter to the claim that most of its current 22nm tools can be repositioned to the 14nm Fabs that ramp later this year. It was Paul Otellini who spoke the words of a strategy that likely came from Intel Chairman and Manufacturing Guru Andy Bryant. Could Intel be saying that to ultimately win it has to hit the gas pedal, guessing that only it can get to 14nm and then 10nm with cash still in the bank? Or is there something else at play (e.g. a breakup of x86 from Foundry followed by the signing of multiple large customers)?

If one goes back just 12 months, one hears Paul Otellini articulate very clearly that Intel receives equivalent value from the x86 architecture and from its fab process. With the slowdown in the PC market and the likely strong corporate adoption of iPads, the value of the x86 architecture fades and we are left with Process Technology and Manufacturing as the asset ready for a true breakout. As mentioned in a previous article, Intel could continue to operate as a strong cash-flow-generating company with a Data Center business that doubles over the next five years and a client-side x86 business that holds at 2/3 or better of current revenue and margins. That scenario assumed that Capex was dialed back by nearly 40% and every wafer was utilized. But that scenario is now off the table.

Intel’s continued capacity buildout is of such a magnitude that it will support not only the entire x86 market (assume no AMD or NVDA at the extreme) but also a combination of several smartphone and tablet silicon leaders (e.g. Apple, Qualcomm, Broadcom, and nVidia). With this buildout we should expect wafer pricing for next-generation leading-edge process technology (20nm or below) to come down significantly as Intel rifles in lowball pricing to TSMC and Samsung customers, who in turn ask their suppliers to sharpen their pencils. Even if Intel can’t win TSMC customers, it will reset the margins in the industry. One expects, though, that in the end Intel will sign on customers at favorable 22nm or 14nm pricing and try to make back the profit at 10nm.

Three years ago, I was convinced that Intel had put in place a plan that would allow it to wrap up most of the x86 market by building processors focused on much higher performing graphics while simultaneously forcing down TDP thermals, making it extremely difficult to design a thin notebook that could utilize a standalone graphics controller. Die area shifted from the x86 core to the graphics to close the gap with AMD and nVidia. This business plan, unbeknownst to most, is still the modus operandi, with Haswell being perhaps the pinnacle of the cannibalization strategy. Soon many analysts will declare that Haswell has overshot the performance target at the expense of a larger die size relative to that of Ivy Bridge. Intel will need to go on an immediate die size crash diet in order to meet pricing requirements at good margins that stabilize the declining PC market. The flip side of this is that x86 client capacity will drop as a % of Intel’s total fab footprint, especially at 14nm. Again, what was the $13B in 2013 CapEx targeted at?

During this same period three years ago, I was exchanging emails with a long-time semiconductor analyst on the prospect of Apple moving to Intel as a foundry, given the expectation that Samsung would eventually become a competitor. Judging by its dramatically increased Capex in 2011 and 2012, it appears Intel made a run for Apple’s business. However, the ambitions of its internal mobile group to pursue the smartphone and tablet markets with the slow-off-the-mark Atom processors likely made Apple reluctant to deal. This, it should be noted, was the Paul Otellini strategy that was operating in parallel to the Andy Bryant Fab buildout strategy.

The resignation of Paul Otellini came as a surprise to many but may in hindsight be seen as significant a moment in Intel’s history as that of Andy Grove and Gordon Moore exiting the DRAM market and focusing on x86 during the early days of the PC. I believe what it says is that we have reached the point in time when the Grove-Otellini model of tightly integrated custom product design with manufacturing, which maximized performance and margins in the PC market, is not transferable to the new mobile market. The pace of innovation in the applications processor and the rise of the Baseband-Wireless infrastructure as the most valuable component in mobiles have exposed the design-side weakness of Intel. Andy Bryant knows that Intel’s most valuable asset is its process technology and that the dollars to be gained servicing the mobile silicon suppliers will be many times greater than today’s $35B x86 client business.

Think about it for a moment. The model that has driven Intel since 1968 is soon to be relegated to Server processors and legacy x86 clients as the Foundry takes the company reins. Android and iOS broke the Wintel computing link that required x86.

At what stage is Intel in its ascent to the Foundry model? First, there has to be a true breakdown of the old model. A signpost appeared just last week of what we should expect in the months that lead up to Foundry Independence.

To a loud Bronx cheer from Wall St. analysts, Apple dropped the price of its 13” MacBook Pro notebook from $1699 to $1499. The press attributed it to slowing Apple sales. Not likely, as Apple has resisted MacBook Pro price cuts for the past three years in order to provide an umbrella under which the iPad could flourish.

My investigation suggests that Intel cut the price of its Ivy Bridge i5 processor by roughly $100 to spur notebook demand and close the gap with tablets. Intel has chips to move and must remain competitive on cost even as Haswell rolls in later this summer. More importantly, I see this as the first of many price reduction steps that effectively move the Ivy Bridge i5 into the Celeron space as the i7 moves down to take the i5 space. It represents the collapse of the Intel x86 price model that has been the driver of its business for the past 20 years, and with it the x86 client business diminishes. It is incredible to think that Andy Bryant may, for the sake of saving Intel, have to toss aside a $30B+ business with large profits in order to chase the much larger mobile business.

At some point soon, Andy Bryant will have to consider implementing one or both of the following options. The first is to radically scale back Intel’s mobile group to focus on just the traditional PC and Mac market and thereby signal to Apple, Qualcomm and others that there will be no competition from the Intel product groups. The second is to spin out the whole x86 business (client and Data Center). The value of the pieces would be much greater than the whole, and Intel Foundries could then open itself up to an even broader set of customers (e.g. nVidia, AMD, Broadcom). Competing with and taking customers from Samsung and TSMC is the only business plan that unlocks Intel’s true valuation, and based on Intel’s CapEx plans it seems to be on the horizon. Look for a dramatically new Intel to communicate this plan as soon as its Analyst Meeting in May.

Full Disclosure: I am Long AAPL, INTC, QCOM, ALTR