The Electronic Design Process Symposium (EDPS) is April 18 & 19 in Monterey. The workshop-style Symposium is in its 20th year. The first session is titled “ESL & Platforms”, which immediately follows the opening Keynote address by Ivo Bolsens, CTO of Xilinx.

In his keynote, Ivo will present how integrating an SoC with reprogrammable fabric increases the opportunity for performance, power, and cost tradeoffs among the available HW/SW architectural options, and therefore the opportunity for design technology improvement in ESL. What will Ivo say about OpenCL? I’m not telling you; you will have to come and find that out yourself. Xilinx is a GOLD sponsor of EDPS.

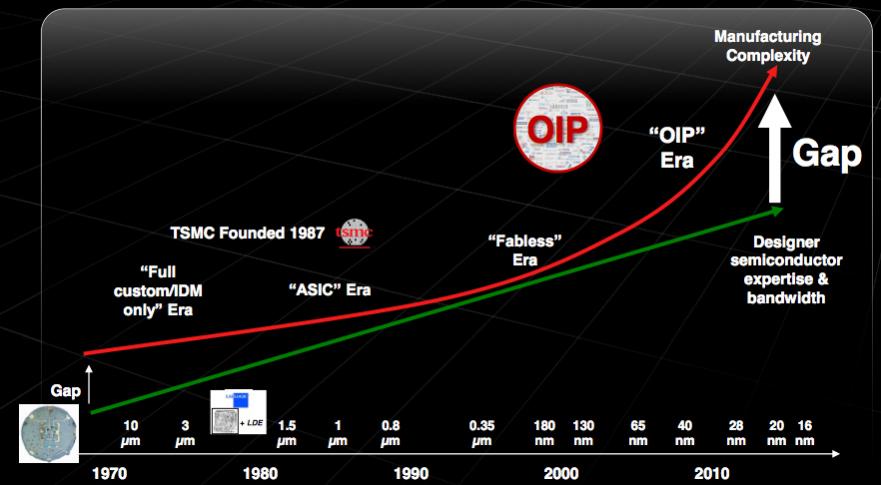

Chart from Ivo’s presentation showing performance opportunity

ESL & Platforms Session

There will be two presentations, then a panel.

Efficient HW/SW Codesign and Cosynthesis for Reconfigurable SoC

This talk will be presented by Guy Bois, CEO of Space Codesign, and will cover the company’s ESL technology, which allows a System Architect to drag and drop a functional block between HW and SW implementations, in either direction. The talk will also cover monitoring support for full profiling during simulation at ESL.

Embedded HW/SW Co-Development – It May be Driven by the Hardware, Stupid!

Presented by Frank Schirrmeister, Senior Director at Cadence. Frank always brings something new to his presentations, and he will not disappoint you here. His talk title is a leading indicator. Frank will discuss the broad needs and opportunities in design methodology from application SW down to the HW. Part of that is how a “DPU” will help (something else to come and hear about).

Panelists:

Gregory Wright, Member of Technical Staff at Alcatel-Lucent. Gregory is a Zynq user and will talk about the practical challenges of SoC design at ESL and, consequently, of digging out of the RTL rut. He has a solid history of design experience from which to present his position.

Gene Matter, Senior Applications Manager at Docea Power. Docea does power and thermal architectural exploration and validation at ESL. Gene also has deep design experience. He spent years doing power exploration at Intel, and now helps other professionals succeed from his current position at an EDA company. Other EDA companies could use people like him.

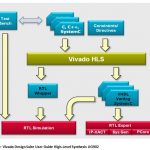

Michael McNamara (Mac), CEO at Adapt-IP. Finally, what I have been waiting for in our profession: ESL-based IP using High Level Synthesis (HLS). Mac is moving in the opposite direction from Gene: from life at EDA companies to an EDA user company. The initial approach is to quickly generate higher-functionality IP with targeted performance and area using HLS. This approach was started in recent years by Cebatech, which is now part of Exar (Cebatech → Altior → Exar).

Guy Bois and Frank Schirrmeister will also be joining the panel, so we will have broad representation with many years of combined experience. Who knows, maybe Ivo Bolsens will get the itch to join the panel, and he is welcome!

Save $50 through Tues. with promo code SemiWiki-EDPS-JFR.
