Promoted by Accellera, SystemC User Groups are active worldwide: NASCUG in North America, ESCUG in Europe and ISCUG in India. While I was shuffling between my day-to-day work and a strategy management course and its exams, I received an invitation from my long-time colleague Mr. Umesh Sisodia, President and CEO of Circuitsutra Technologies, to attend the ISCUG conference on 14th and 15th April. I was delighted to attend: I found the conference quite interesting, it enhanced my knowledge, and I learned about some good work being done in this part of the world. There were also important messages about the SystemC initiative, related technologies, and the progress of the semiconductor and electronics industry in this developing country.

Umesh Sisodia

The first day was filled with several tutorials on SystemC, TLM, HLS, SystemC-based verification, advanced modelling and the like. I could not attend all of them, but I tried to gain as much insight as I could by switching between sessions; I can talk about a few of them some other time, when my schedule allows. An important meeting of the ISCUG steering committee was scheduled in the evening, to which Umesh again invited me; my pleasure! I will talk about the message from that meeting at the end of this article.

Dennis Brophy

Coming to the 2nd day, in the morning we had keynote speeches from industry leaders, which were eye-openers. Right after the introduction and background on ISCUG by Saurabh Tiwari from the Technical Review Committee, Dennis Brophy, Vice Chairman of Accellera Systems Initiative and Director of Strategic Business Development at Mentor, gave a very nice presentation about the various activities Accellera is supporting and promoting: from the formation of SystemC user groups in different parts of the world to various working groups such as the Language WG, Synthesis WG, Verification WG, TLM WG, SystemRDL WG and CCI (Configuration, Control and Inspection) WG, the latest being the SystemC AMS WG and Multi-language WG, along with various subcommittees. There is an open invitation for experts to join these working groups and strengthen the standards around SystemC for greater productivity, performance and interoperability of the systems built with them. It was a very informative speech.

Jaswinder Ahuja

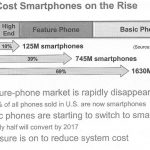

Jaswinder Ahuja, Founder Member of IESA (formerly India Semiconductor Association) and Corporate VP & Managing Director at Cadence, presented a detailed update on the rapid developments in the semiconductor space, and in electronics in general, in India. A key eye-popping message was that the overall electronics market in India will grow to $400B by 2020. That’s amazing news! Another message was the great demographic dividend India has in terms of its young population. Here I am a little disappointed, and I have purposely omitted ‘working’ before ‘population’. Employment has been a major objective since the 2nd five-year plan of India after its independence, and it has been repeated in most of the subsequent plans. Still, major portions of the Indian population do not have meaningful employment. In my opinion, the demographic dividend can be obtained only if the young population is educated and vocationally trained, and more than 90% are employed. Government, business institutions and people need to work together to achieve this.

Sri Chandra

Sri Chandra, Standards Manager at IEEE, provided an update on IEEE’s activities in India to promote technical education among professionals and students. The community is growing, with local chapters in cities like Delhi, Bangalore and Hyderabad. IEEE standards and publications are available in most areas, which greatly helps in growing knowledge and skills.

Mike Meredith

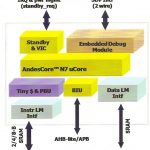

Mike Meredith, Past President of OSCI (Open SystemC Initiative) and now Vice Chairman of the Accellera Synthesis WG and Technical Marketing VP at Forte, talked at length about SystemC: its origin, its various developments, how it helps the SoC design community, and its future outlook. It was interesting to note that SystemC came into existence in 1999-2000 and has been evolving since then into multiple applications, as is evident from the various working groups around it. I intend to talk about it in detail in a separate article.

At the end of the keynote lectures, Umesh Sisodia, Organizing Committee Chairman, talked about the future roadmap of ISCUG. This is where a summary of what had been discussed in the steering committee meeting the previous evening was disclosed. Although the conference is attended by world-class people (130 people attended this conference) and keeps a specialized focus on SystemC, as it should considering SystemC’s versatility as well as exclusiveness, a need was felt to increase participation in order to give the conference wider reach and make it economically viable. More sponsorship is needed, and a non-profit organization needs to be formed to support it. So, how can it be done? More ideas are welcome, but a few of them are: open it up to wider areas, or even associate it with IEEE, the Embedded Systems Conference and so on. Another idea was to start DVCon India, which could include all Accellera standards and not just SystemC. In that case the SystemC conference must be a co-located event alongside DVCon India, so that it retains its exclusivity, as it is attended by involved and dedicated experts who use SystemC.

So what do you think? Considering the growth potential in India as elucidated in Jaswinder’s presentation, shouldn’t the ISCUG conference expand and build its brand?