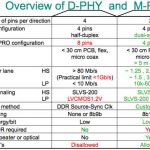

Anyone taking a quick look at the various MIPI interface specifications soon realizes that they will have to look at MIPI more closely, and that it will take longer than expected to really understand them! Let’s start with the PHY. One specification defines the D-PHY, up to 1 Gbps (1.5 Gbps is also defined, but not really used); another defines the M-PHY, to support higher data bandwidth and higher speed. Looks simple? In fact, we have not yet mentioned the various “gears” supported by M-PHY (per lane): Gear 1 is up to 1.25 Gbps, Gear 2 up to 2.5 Gbps, and Gear 3 up to 5 Gbps. There are many more differences between D-PHY and M-PHY; if you take a look at the MIPI Alliance web site, you will find this comprehensive picture:

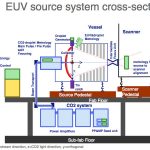

Now that you clearly understand the various MIPI PHYs, you also know that a PHY is nothing without a controller, the digital part of the function in charge of processing the protocol layers, such as the “Link Layer”, the “Transport Layer” and so on. Let’s stay with the M-PHY example. If life were simple, you would attach one MIPI controller to this M-PHY. But if we are (more or less) well-paid engineers, it’s because semiconductor-related life is not simple… Just take a look at the picture below:

To ease SoC integration, M-PHY can support up to six different protocols. This means that a chip maker who decides to integrate several MIPI protocols on the same chip will also instantiate the same PHY IP several times, along with the various controllers attached to it. Not all controllers are created equal: DigRF (interfacing with an RF chip), LLI (interfacing the SoC with a modem chip, so that both share a single DRAM) and SSIC (SuperSpeed USB Inter-Chip, for board-level chip-to-chip connection) can be plugged directly into the M-PHY. But another group of controllers (CSI-3, DSI-2 and UFS) requires an additional piece of IP, UniPro, to be inserted between the M-PHY and, for example, the MIPI CSI-3 (Camera Serial Interface) controller.

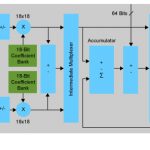
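The layering described above can be written down as a small lookup, which may help when counting how many IP blocks a given protocol mix pulls in. This is only an illustrative sketch in Python: the protocol grouping comes from the paragraph above, while the names `DIRECT_ON_MPHY`, `VIA_UNIPRO` and `ip_stack` are hypothetical and not part of any MIPI or vendor deliverable.

```python
from typing import List

# Grouping taken from the article text; set and function names are illustrative.
DIRECT_ON_MPHY = {"DigRF", "LLI", "SSIC"}   # controller sits directly on the M-PHY
VIA_UNIPRO = {"CSI-3", "DSI-2", "UFS"}      # controller needs UniPro in between


def ip_stack(protocol: str) -> List[str]:
    """Return the IP blocks needed for one protocol, from top to bottom."""
    if protocol in DIRECT_ON_MPHY:
        return [f"{protocol} controller", "M-PHY"]
    if protocol in VIA_UNIPRO:
        return [f"{protocol} controller", "UniPro", "M-PHY"]
    raise ValueError(f"unknown protocol: {protocol}")


if __name__ == "__main__":
    for name in ("LLI", "CSI-3"):
        print(name, "->", " / ".join(ip_stack(name)))
```

For the camera case, the lookup returns three blocks (CSI-3 controller, UniPro, M-PHY), against two for a protocol such as LLI.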

When a chip maker designs an application processor for a smartphone or media tablet, it integrates over 100 IP blocks, from an ARM Cortex-A9 to I2C or SRAM. Such a chip maker will certainly appreciate the fact that Synopsys offers a complete Camera Serial Interface 3 (CSI-3) host solution, including the new DesignWare MIPI CSI-3 Host Controller IP combined with the MIPI UniPro Controller and multi-gear MIPI M-PHY IP. With support for up to four lanes in Gear1 to HS-Gear3 operation, the CSI-3 host solution simplifies the system-on-chip (SoC) interface for a wide range of image sensor applications, giving SoC designers maximum flexibility to increase throughput while reducing pin count requirements and integration risk.
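To get a feel for what "up to four lanes" in Gear 1 to Gear 3 means, here is a rough back-of-the-envelope sketch in Python. It uses only the rounded per-lane ceilings quoted earlier in this article (1.25, 2.5 and 5 Gbps) and simply multiplies by the lane count. The result is a raw line rate, an upper bound that ignores line coding and protocol overhead, so usable image bandwidth will be lower. The names `GEAR_MAX_GBPS` and `raw_rate_gbps` are hypothetical.

```python
# Per-lane ceilings as quoted in this article (rounded figures, in Gbps).
GEAR_MAX_GBPS = {1: 1.25, 2: 2.5, 3: 5.0}
MAX_LANES = 4  # the CSI-3 host solution is described as supporting up to four lanes


def raw_rate_gbps(gear: int, lanes: int) -> float:
    """Upper bound on the aggregate raw line rate; overhead is not modeled."""
    if gear not in GEAR_MAX_GBPS:
        raise ValueError(f"unsupported gear: {gear}")
    if not 1 <= lanes <= MAX_LANES:
        raise ValueError(f"lanes must be between 1 and {MAX_LANES}")
    return GEAR_MAX_GBPS[gear] * lanes


if __name__ == "__main__":
    for gear in sorted(GEAR_MAX_GBPS):
        for lanes in (1, 2, 4):
            rate = raw_rate_gbps(gear, lanes)
            print(f"Gear {gear}, {lanes} lane(s): up to {rate:g} Gbps raw")
```

Under these assumptions, four lanes at Gear 3 top out at 20 Gbps of raw line rate, which is where the "increase throughput while reducing pin count" argument comes from.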

I agree with Joel Huloux, MIPI Alliance Chairman, when he says: “IP supporting the MIPI CSI-3 v1.0 specification, along with a HS-Gear3 M-PHY, gives designers the ability to rapidly build host configurations into their SoCs,” and “Synopsys’ DesignWare MIPI CSI-3 Host Controller promotes the MIPI ecosystem while furthering the realization and reach of the latest MIPI specifications.” Having worked in the IP business for about 10 years, I have realized how important it is for a chip maker who decides to outsource a function that is split into PHY and controller to be able to acquire a complete solution from a single supplier. This guarantees that the function has already been integrated, validated and verified by the vendor before the chip maker integrates it. In the case of this camera solution, we are talking about three different functions: the CSI-3 host controller, the UniPro controller and the M-PHY! Last but not least, this new MIPI CSI-3 Host Controller, which simplifies CSI-3 image sensor interface integration, is a low-power solution.

By Eric Esteve from IPNEST
