Speaking from experience, it is very difficult to get an OEM customer to talk about how they actually use standards and vendor products. A new white paper co-authored by Broadcom lends insight into how a variety of technologies combine in a flow from IP block simulation verification with assertions to complete SoC emulation with assertions.

The basic problem Broadcom faces is the typical one for all SoC vendors: how to efficiently verify IP blocks from a variety of sources when integrated into a complex SoC design. Simulation technology used at the IP block level becomes problematic when interactions and real-world stimuli enter the picture at the system level.

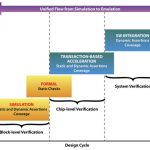

Many vendors use different technologies to verify designs as they progress from low-level IP to system level implementation, but that often spawns inefficiency. Is it possible to use a unified assertion-based flow, whether on a simulator or an emulator, as a way to improve productivity and coverage? That is the question Broadcom has set out to answer, and their experience is valuable in illustrating what works and what still needs improving.

The fundamental approach of using assertions is to pass information, without gory levels of detail, from an IP block up to a subsystem and finally up to the complete system. The white paper cites four sources of assertions Broadcom uses:

1. Inline assertions supplied by designers

2. Assertions stitched in by the verification team using the SystemVerilog bind construct

3. Protocol monitors supplied by EDA vendors for standard bus protocols and memories, developed internally for custom designs, or supplied by third-party IP providers

4. Automated checks added by simulation and emulation compilers
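As a rough illustration of the second source, the sketch below shows how a verification team might attach a checker to a design block with `bind`, without editing the designer's source. The module names `req_ack_checker` and `bus_slave` and all signal names are hypothetical and do not come from the white paper.

```systemverilog
// Hypothetical checker module; every name here is illustrative only.
module req_ack_checker (
  input logic clk,
  input logic rst_n,
  input logic req,
  input logic ack
);
  // An acknowledge must only appear while a request is asserted.
  acknowledge_without_request: assert property (
    @(posedge clk) disable iff (!rst_n) ack |-> req
  ) else $error("ack asserted without req");
endmodule

// Attach the checker to every instance of the (hypothetical) design module
// bus_slave. The port connections are resolved inside the bind target, so
// clk, rst_n, req and ack refer to signals declared in bus_slave.
bind bus_slave req_ack_checker u_req_ack_checker (
  .clk   (clk),
  .rst_n (rst_n),
  .req   (req),
  .ack   (ack)
);
```

Because the checker lives in its own module, the same file can in principle be compiled for both the simulator and the emulator, provided the assertions in it are synthesizable.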

The power of an emulator may be alluring, but the fact is an emulator, such as Mentor Graphics Veloce, is an expensive piece of capital equipment. The interesting point Broadcom makes is to use the emulator only to target coverage points and checks that were not already covered earlier in the flow, chiefly the scenarios that are difficult to cover in simulation. By bringing forward assertions from the lower cost simulation environment, duplication is removed and precious emulator time is focused on what the simulator cannot do, without a lot of rewriting of verification code and within an overall view of coverage.

The rewriting of verification code was addressed by working with Mentor to develop a single assertion format. The coverage is addressed by using the Unified Coverage Database (UCDB) and its UCIF interchange format, so tools can read and stamp the coverage matrix at each stage. Interestingly, Broadcom merged their coverage data and assertions into a single UCDB file.

As we’ve seen in other discussions, assertions are only as good as the way they are written. Broadcom ended up with a set of guidelines for assertion writing that improve the flow from simulation to emulation. They identified five rules that can help verification teams:

1. Label each assertion individually and descriptively – this label can be used to filter expected failures, if you need to, and is significantly clearer; e.g., “acknowledge_without_request: assert …”

2. Associate a message with each assertion along the lines of “assert else $error(…)” ($error is just like $display except that it sets the simulation return code and halts an interactive simulation)

3. Reduce the severity by using $warning or increase the severity by using $fatal

4. Exclude assertions that are informational in nature and occur frequently from emulation runs

5. Avoid open-ended or unbound assertions as these potentially have a very large hardware impact
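The five rules can be sketched in a small checker like the one below. This is a minimal illustration rather than Broadcom's code: the module, the signals, the 16-cycle bound and the `EMULATION` compile-time macro are all assumptions made for the example.

```systemverilog
// Hypothetical checker illustrating the five guidelines.
module handshake_checks (
  input logic clk,
  input logic rst_n,
  input logic req,
  input logic ack,
  input logic fifo_almost_full
);
  // Rules 1 and 2: a descriptive label plus a message on failure.
  acknowledge_without_request: assert property (
    @(posedge clk) disable iff (!rst_n) ack |-> req
  ) else $error("ack seen with no req asserted");

  // Rule 3: lower severity for a condition that is suspicious but legal.
  request_while_fifo_almost_full: assert property (
    @(posedge clk) disable iff (!rst_n) req |-> !fifo_almost_full
  ) else $warning("request issued while FIFO is almost full");

  // Rule 3 (raised severity) and rule 5: a bounded 1-to-16 cycle window
  // instead of an open-ended ##[1:$], which maps to far more hardware.
  request_acknowledged_within_16: assert property (
    @(posedge clk) disable iff (!rst_n) $rose(req) |-> ##[1:16] ack
  ) else $fatal(1, "req not acknowledged within 16 cycles");

`ifndef EMULATION
  // Rule 4: an informational check that fires often is compiled out of
  // emulation builds. EMULATION is a hypothetical macro name.
  back_to_back_request_info: assert property (
    @(posedge clk) disable iff (!rst_n) req |=> !req
  ) else $info("back-to-back requests observed");
`endif
endmodule
```

The labels double as filter keys: a regression script can match on `request_while_fifo_almost_full` to waive a known warning without touching the other checks.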

They also found a couple of difficulties in passing assertions forward. One is that much of the black-box IP out there is not really SystemVerilog compliant and uses reserved keywords that interfere with assertions in emulation. Another is that memory accesses beyond the memory size can crash an emulator, which is handled with automatically inserted assertions. The paper also offers some suggestions on using saturation counters for coverage, rather than just a go/no-go entry in the matrix.
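To make the last two points concrete, here is a hand-written sketch of what an out-of-range memory check and a saturating coverage counter could look like. In the flow the paper describes, the range check is inserted automatically by the tools; the module below, its parameters and its signal names are assumptions for illustration only.

```systemverilog
// Hypothetical example: range check plus a saturating hit counter.
module mem_access_checks #(
  parameter int DEPTH   = 1024,  // assumed memory depth in words
  parameter int ADDR_W  = 16,    // assumed address bus width
  parameter int COUNT_W = 8      // width of the saturating counter
) (
  input logic              clk,
  input logic              rst_n,
  input logic              mem_en,
  input logic [ADDR_W-1:0] addr
);
  // Flag any enabled access beyond the last valid word.
  address_within_memory_depth: assert property (
    @(posedge clk) disable iff (!rst_n) mem_en |-> (addr < DEPTH)
  ) else $error("memory access out of range: addr=%0h depth=%0d", addr, DEPTH);

  // Saturating counter: counts accesses to the last word and sticks at its
  // maximum value instead of wrapping back to zero.
  logic [COUNT_W-1:0] last_word_hits;

  always_ff @(posedge clk or negedge rst_n) begin
    if (!rst_n)
      last_word_hits <= '0;
    else if (mem_en && (addr == DEPTH - 1) && !(&last_word_hits))
      last_word_hits <= last_word_hits + 1'b1;
  end
endmodule
```

A counter like this records how often a coverage point was hit, up to a ceiling, which tells you more than a single hit/no-hit bit while keeping the hardware cost fixed.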

The complete white paper is available from Mentor Graphics:

Localized, System-Level Protocol Checks and Coverage Closure Using Veloce

We don’t often get a look at real-world experience with a variety of tools and standards in play, and although some of the findings are specific to the Mentor Graphics Veloce platform, much of the Broadcom experience applies to the general problem of using assertions in verification of complex designs. Thanks to both Broadcom and Mentor for sharing what they learned.

How do these findings match with your experience in assertion-based verification? Are there other important considerations for developing a unified flow that works beyond simulation? What else should verification teams and vendors consider in streamlining an approach for both simulation and emulation environments?
