We have gotten to the point with automotive safety where we are happy to hear that ONLY 18,000 people died on the nation’s highways in the first six months of 2017. That’s right. That is good news – 100+ people were killed every day so far this year in, by or as a result of cars.

Apple iPhone Super Cycle Update!

In 2014 Apple released the iPhone 6 which included the first SoC built on a TSMC (20nm) process. This phone started what many call a “Super Cycle” of people upgrading. According to Apple, they now have more than 1 billion activated devices so this super cycle could be seriously super, absolutely.


Getting More Productive Coding with SystemVerilog

The choice of HDL is a matter of personal engineering preference, and corporate policy often dictates which language you will use on your next SoC design. In the early days we used our favorite text-based editor like Vi or Emacs; my choice was Vi. The problem with these text-based editors, of course, is that they don’t understand that you’re using an HDL, so they don’t check for the most common syntax requirements, which leads to many iterations of finding and fixing typos and syntax errors. Engineers have more important work to accomplish than iterating on HDL design entry.

In the web world, when coding with CSS, JavaScript and HTML5 we can use an IDE like Dreamweaver from Adobe to color-code the keywords, match braces, and in general speed up the design entry task. Likewise, in the HDL world we have a similar IDE from Sigasi called Sigasi Studio. To get an update I had an email chat with Hendrik Eeckhaut.

Q: What’s happening with designers choosing to code with SystemVerilog these days?

Most SystemVerilog users have a love/hate relationship with SystemVerilog. This hardware description and verification language is really powerful, but also really complex. It is an extremely big language and it does not protect you at all from making mistakes. So SystemVerilog users will benefit even more than their VHDL colleagues from the assistance that Sigasi Studio offers. Sigasi Studio helps you focus on what is really important, the design, instead of losing time making the compiler understand your intentions.

Q: So Sigasi started out with an IDE for VHDL, how did that help with SystemVerilog?

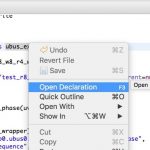

Based on Sigasi’s experience with providing excellent VHDL support, the Sigasi development team knew how to tackle most challenges in providing a good development environment for SystemVerilog: immediate feedback about syntax errors, autocomplete, open declaration, and so on. And because Sigasi Studio understands what SystemVerilog means, you get very accurate feedback.

Q: What helps me code in SystemVerilog faster?

The biggest technical challenge was providing good support for SystemVerilog’s Preprocessor. The preprocessor does a textual transformation of SystemVerilog source files. So it can completely rewrite the code that goes into the actual compiler. This preprocessor is stateful and depends on the compilation order. This makes it difficult to keep track of what exactly is going on. To remedy this, Sigasi Studio 3.5 provides features to easily inspect or preview preprocessed code:

- Source code that is excluded with the Preprocessor is automatically grayed out in the editor.

- The result of macros can be easily previewed in an additional view, or simply by hovering your mouse over the macro.

- Syntax errors are immediately reported.
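To make the "stateful and order-dependent" point concrete, here is a minimal sketch in Python of a toy preprocessor. It is not how Sigasi Studio or any real SystemVerilog compiler works: it handles only `define, `ifdef, `else and `endif without nesting, and the file names and macro name are made up for illustration. What it does show is that the same source file yields different code depending on which file was compiled before it.

```python
def preprocess(files, order):
    """Expand a tiny subset of directives across files in compile order.

    Supports only `define NAME, `ifdef NAME, `else and `endif (no nesting,
    no macro arguments). The set of defined names persists from one file
    to the next, which is what makes the result order-dependent.
    """
    defined = set()      # preprocessor state shared by all files
    kept = {}            # file name -> lines that reach the compiler
    for name in order:
        active = True    # False while inside an excluded branch
        kept[name] = []
        for line in files[name]:
            words = line.split()
            if words and words[0] == "`define":
                if active:
                    defined.add(words[1])
            elif words and words[0] == "`ifdef":
                active = words[1] in defined
            elif words and words[0] == "`else":
                active = not active
            elif words and words[0] == "`endif":
                active = True
            elif active:
                kept[name].append(line)
    return kept


# Hypothetical two-file project: one file sets a macro, the other tests it.
files = {
    "config.sv": ["`define USE_FAST_ADDER"],
    "adder.sv": [
        "`ifdef USE_FAST_ADDER",
        "assign sum = fast_add(a, b);",
        "`else",
        "assign sum = a + b;",
        "`endif",
    ],
}

config_first = preprocess(files, ["config.sv", "adder.sv"])
adder_first = preprocess(files, ["adder.sv", "config.sv"])
print(config_first["adder.sv"])  # the fast_add branch survives
print(adder_first["adder.sv"])   # the plain a + b branch survives
```

The lines dropped in each run correspond to what an editor would gray out, which is why the editor has to know the compilation order before it can gray out the right branch.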

Q: When I code a large design there are plenty of include files, how is that supported in your IDE?

Another example of how Sigasi Studio helps SystemVerilog users is “include files”. A typical pattern in SystemVerilog is to include sources into other source files (with the Preprocessor). In this case the included file can see everything in the scope of the including file. With an ordinary editor, you have to think about all of this yourself. With Sigasi Studio, however, this information is available whenever you require it, for example during autocompletes. And the nice thing is, this does not require any additional setup. Again, this allows you to focus on the real job: getting your design ready, or making sure it is well tested.

Q: If I want to give Sigasi Studio a quick test, what should I do?

If this has triggered you to try Sigasi Studio on your own SystemVerilog designs, you can request a full trial license on our website www.sigasi.com. This enables you to try all of Sigasi Studio’s features on your own projects and feel how Sigasi empowers you. And please tell us all about your experience.


Virtual Prototyping With Connection to Assembly

Virtual prototyping has become popular both as a way to accelerate software development and to establish a contract between system/software development teams and hardware development and verification. System companies with their tight vertical integration lean naturally to executable contracts to streamline communication within their organizations. Similarly, semiconductor groups, which are increasingly integrated into automotive system design and validation (for example), are now being asked to collaborate with Tier 1 and OEM partners in a similar way, not just in a contract for behavior with software but also with sensors and complex RF subsystems (5G in all its various incarnations as well as radar, lidar and other sensing technologies).

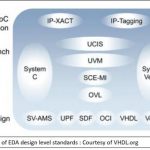

A challenge however can be that communication between the prototype and hardware assembly/verification may be limited thanks to the gap in abstraction between these models. One way to bridge this gap is through co-design and verification. IP-XACT-based modeling, which always aimed to support this sort of end-to-end platform design covering everything from system-level modeling to implementation assembly, is one possible approach.

Some organizations made significant progress in developing these flows. Philips/NXP and ST were a couple of notable examples who were able to start prototyping and architecture development in SystemC (which the standard supported) and carry this all the way through to back-end design, supporting co-design and co-verification all the way through. While original platform-based design efforts were disrupted by market shifts (the iPhone et al), system markets are now more settled and new drivers have become apparent. Now some of these original approaches are re-emerging, sometimes with the same over-arching goal, sometimes to serve more tactical needs.

In the examples I used to open this blog, virtual prototypes can drive top-down executable requirements; another quickly growing application is in bottom-up system demonstrators: here’s how my proposed solution will perform, you can plug into your system to test how it will interoperate in realistic use-cases and we can iterate on that demonstrator to converge on a solution you find to be acceptable, before I start building the real thing.

In both classes of usage, there is obvious value in the hardware development team being able to connect more than through just this prototype to real assembly – the more comprehensive the connection, the more efficient the flow will be through to final assembly and verification and the less chance there will be for mistakes. This isn’t through automated system synthesis (except perhaps in certain sub-functions). The ideal connection should be a common platform to support co-design/verification starting from a virtual prototype with incremental elaboration of system behaviors into implementation choices. And there should be common points of reference between these views, such as memory/register maps, establishing verifiable behaviors at both levels.

Another especially interesting (and related) application of the virtual prototype is to drive verification of the hardware implementation. Thanks to extensions to UVM, there is now a UVM-SystemC interface standard. From what I see at the Accellera website this still seems to be in early stages but I’m sure solution vendors are already helping to bridge gaps. What this enables is a system-based driver for hardware testing: realistic use-case verification based on tests written at the system level by the system experts, connected through familiar UVM-based verification platforms but without needing to reconstruct system-level use-cases in UVM.

Magillem has been working in the platform-based design space around IP-XACT since the Spirit days (the standard was originally called Spirit and is now formalized as IEEE-1685) and is pretty much the de facto tool provider in this area. They have been very active since the beginning in development of the standard and have been updating their support along with that evolution, including support for SystemC views, all within a common platform. Recently they extended their product suite, built around this platform, through the introduction of MVP, the Magillem Virtual Prototype system for the assembly and management of virtual prototypes. MVP does not provide a simulator, though you can manage simulation from within the platform; you can link to your favorite vendor simulator or the freeware OSCI simulator. They are also partnered with Imperas for interoperability in building systems around OVP (open virtual prototype) models.

So you can build and manage virtual prototyping within MVP, but where this becomes especially interesting is in the ability to link between the virtual prototype and increasing elaboration of the design and verification. Magillem provides multiple capabilities; here I’ll touch on a couple of domains very closely related to the bridge between the virtual prototype and assembly – developing (memory-map) register descriptions and developing register sequence macros. Both have become very complex tasks in large systems – importing legacy descriptions, editing to adapt to a new design, statically checking against assembly constraints and exporting to formats supporting driver development, dynamic verification and documentation. Synchronizing these functions between the virtual prototype and the hardware assembly is critical – and is already tied in to the development flow.
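As a rough illustration of what statically checking a register description and keeping two views synchronized can involve, here is a minimal Python sketch. It is not Magillem’s API or the IP-XACT data model; the `Register` type, the function names and the example registers are all hypothetical, and register widths are assumed to be whole bytes.

```python
from typing import NamedTuple


class Register(NamedTuple):
    name: str
    offset: int   # byte offset from the block base address
    width: int    # width in bits (assumed to be a multiple of 8)


def find_overlaps(registers):
    """Return pairs of register names whose byte ranges overlap."""
    overlaps = []
    ordered = sorted(registers, key=lambda r: r.offset)
    for i, first in enumerate(ordered):
        first_end = first.offset + first.width // 8
        for second in ordered[i + 1:]:
            if second.offset >= first_end:
                break  # sorted by offset, so nothing later can overlap
            overlaps.append((first.name, second.name))
    return overlaps


def compare_maps(prototype, hardware):
    """List registers whose offset or width differs between two views."""
    hw = {r.name: r for r in hardware}
    mismatches = []
    for reg in prototype:
        other = hw.get(reg.name)
        if other is None or (other.offset, other.width) != (reg.offset, reg.width):
            mismatches.append(reg.name)
    return mismatches


# Hypothetical register maps: the hardware view has DATA at the wrong offset.
prototype_map = [
    Register("CTRL", 0x00, 32),
    Register("STATUS", 0x04, 32),
    Register("DATA", 0x08, 32),
]
hardware_map = [
    Register("CTRL", 0x00, 32),
    Register("STATUS", 0x04, 32),
    Register("DATA", 0x06, 32),
]

print(find_overlaps(hardware_map))                 # STATUS and DATA collide
print(compare_maps(prototype_map, hardware_map))   # DATA differs between views
```

Both checks run without simulation, which is the appeal of catching this class of mistake at the description level.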

If you’re interested in having this level of linkage between virtual prototyping and hardware assembly, you can learn more about Magillem MVP HERE. I can also recommend a couple of interesting conference papers which get into more depth, one on using virtual prototypes for requirements traceability in embedded systems and another on using a SystemC testbench to drive hardware verification. You will need to request these from Magillem through this link.

12 Myths about Blockchain Technology

Blockchain, the “distributed ledger” technology, has emerged as an object of intense interest in the tech industry and beyond. Blockchain technology offers a way of recording transactions or any digital interaction in a way that is designed to be secure, transparent, highly resistant to outages, auditable, and efficient; as such, it carries the possibility of disrupting industries and enabling new business models.


High Bandwidth Memory ASIC SiPs for Advanced Products!

When someone says, “2.5D packaging” my first thought is TSMC and my second thought is Herb Reiter. Herb has more than 40 years of semiconductor experience and he has been a tireless promoter of 2.5D packaging for many years. Herb writes for and works with industry organizations on 2.5D work groups and events at conferences around the world. I have worked with Herb on various conferences and recommend him professionally at every opportunity.

Next month Herb is moderating a webinar with Open-Silicon on High Bandwidth Memory ASIC SiPs for High Performance Computing and Networking Applications on Tuesday, September 19, 2017 from 8:00 AM – 9:00 AM PDT. I strongly suggest you register today because this one will fill up!

This Open-Silicon webinar, moderated by Herb Reiter of eda 2 asic Consulting, Inc., will provide an overview of HBM2 ASIC SiPs (Systems in Package) for density and bandwidth-hungry systems based on Open-Silicon’s silicon-proven High Bandwidth Memory (HBM2) IP subsystem solution. Attendees will also learn about the system integration aspects of 2.5D HBM ASIC SiP, and performance results of various memory access patterns suiting different applications in High Performance Computing and Networking.

The webinar also summarizes silicon validation results of a 2.5D HBM2 ASIC SiP validation/evaluation platform, which is based on Open-Silicon’s HBM2 IP subsystem in TSMC’s 16nm in combination with TSMC’s CoWoS® 2.5D silicon-interposer technology and HBM2 memory. They will discuss the significance of the results and how they demonstrate functional validation and interoperability between Open-Silicon’s HBM2 IP subsystem and the HBM2 memory die stack. Attendees will learn about HBM2 memory and its advantages, applications and use cases.

The panelists will also discuss the HBM2 IP subsystem roadmap and Open-Silicon’s next generation multi-port AXI (Advanced eXtensible Interface) based HBM2 IP subsystem development targeting 2.4Gbps per-pin data rates, and beyond, in TSMC’s 7nm technology. This webinar is ideal for chip designers and system architects of emerging applications, such as high performance computing, networking, deep learning, neural networks, virtual reality, gaming, cloud computing and data centers…

For those of you who don’t know, TSMC’s CoWoS® (Chip-On-Wafer-On-Substrate) advanced packaging technology integrates logic computing and memory chips in a 3-D way for advanced products. CoWoS targets high-speed applications such as graphics, networking, artificial intelligence, cloud computing, data center, and high-performance computing. CoWoS was first implemented at 28nm in 2012 and continues today at 20nm and 16nm. Next up is 7nm which should be a banner node for CoWoS, absolutely.

About Open-Silicon

Open-Silicon transforms ideas into system-optimized ASIC solutions within the time-to-market parameters desired by customers. The company enhances the value of customers’ products by innovating at every stage of design — architecture, logic, physical, system, software, IP — and then continues to partner to deliver fully tested silicon and platforms. Open-Silicon applies an open business model that enables the company to uniquely choose best-in-industry IP, design methodologies, tools, software, packaging, manufacturing and test capabilities.

The company has partnered with over 150 companies, ranging from large semiconductor and systems manufacturers to high-profile start-ups, and has successfully completed 300+ designs and shipped over 125 million ASICs to date. Privately held, Open-Silicon employs over 250 people in Silicon Valley and around the world. To learn more, visit www.open-silicon.com

Why Open and Supported Interfaces Matter

Back in the early 1980s during the nascent years of electronic design automation (EDA), I worked at Texas Instruments supporting what would become their merchant ASIC business. Back then, life was a bit different. The challenge we faced was to make our ASIC library available on as many EDA flows as we could to give as many users as possible a path to our silicon. CAD flows then tended to be one-vendor flows but even so I was all too keenly aware of the need for open and supported interfaces. What good was an EDA tool flow if my customers had no easy way to hand-off a design to our fab? In those days, we literally hand-crafted each interface. I still remember my first programming assignment at TI was to get a Daisy Logician system to talk to a TI 990 mini-computer through an RS232 serial port. Ouch… My second program was to write the Daisy netlister as there were no standards for such things back then.

Fast forward to the present, and the need for open and supported interfaces has not diminished in the least. We made significant progress in three decades with all kinds of data formats for designs, libraries, constraints, floorplanning, test vectors, packaging etc. You would think by now that interfaces would be a solved problem and not much of a concern any more. Well if you are thinking that way, think again.

As time has moved forward, we have indeed solved many different interface issues, however the size and complexity of our designs have rushed ahead at a pace far exceeding what we imagined when we invented those interfaces and many of them are now showing their age.

The funny thing about standards, whether they be de facto or otherwise, is that by their very nature they imply stability. Yet, stability is problematic in an industry that literally reinvents itself about every 18 to 24 months with the complexity of its designs rising exponentially at every evolutionary step. Take GDSII for example. It has been the staple format for design hand-off to the fab for the last thirty years. Ten or so years ago it was already struggling to keep up and the industry created a new format called OASIS to take its place. Yet here we are a decade later and the very stake in the ground that made GDSII so stable and useful is what has been holding the industry back from widely adopting OASIS.

Over the last three decades we’ve had the COT revolution that delaminated the entire supply chain, an explosion of EDA companies that eventually got acquired and merged into the big three, the fighting over Joe Costello’s proverbial dog food bowl with all-in-one flows and now, another resurgence of smaller EDA companies again providing solutions for the new challenges that come with each new process node. We are in a continual cycle of reinventing the industry and all the while, at each step, designers are scrambling to use the best tools available for the job at hand.

So, what’s the key to survival?

The first is to recognize that one size does not fit all. I’ve proven this repeatedly in my 30+ years in EDA. All I need do is to look in my closet and see three different sizes of clothes that I’ve managed to grow out of and into and realize that design teams and companies do much the same thing. A startup’s needs are vastly different than the needs of an Intel or Qualcomm and we should not assume that the same set of EDA tools and flows will work equally well for both. The acquisition of Tanner by Mentor seems to be a good indication that at least one of the big three has recognized that times are again changing.

The second thing is that no matter what the size of your company or what silicon platform you are using, having a well thought-out and controlled design process that fits your problem is essential. As one of my old bosses at TI used to say, “you can’t improve something unless you can measure it and control it first”. And, don’t assume that your existing tool flow must do everything the way it used to. Or perhaps a better way to think of it is to use the right tool for the right job. A good example of this is the merging of design blocks into a large SoC. Our first inclination is to merge all the blocks into the place and route (P&R) tool, finish up the connections and then stream out the GDSII (or now OASIS). However, this is not necessarily a core strength of P&R. Instead perhaps a better way might be to truly do hierarchical design and use another tool to merge the layouts for the final repair and stream-out process. I’m not advocating one flow over another here, I’m just saying you need to be open to re-engineering your flow every so often just like the fabs re-tool to handle the next process node.

Lastly, since we do re-engineer ourselves every couple of years it’s time as an industry to truly embrace open and supported interfaces as a necessity of life. They are not a luxury, nor are they something that should be taken for granted. At best, life is miserable without them and at worst the lack of good interfaces can literally mean the lack of a design flow needed to get your job done.

The Calibre team at Mentor seem to get this point. Mentor does an exemplary job of maintaining links to the rest of the EDA tool ecosystem. I can tell you this does not come for free. It takes time, vision, foresight and willingness to keep all these interfaces updated and working properly. Mentor should be applauded for understanding the importance of this work as it normally isn’t readily associated with driving product revenue for the company.

Translated, this all boils down to the industry financially valuing good, clean, open and reliable interfaces. After all, financially rewarding vendors who do a good job of creating and supporting open interfaces is one way of protecting the valuable investment you’ve made in their tools.

See also:

Mentor Calibre Interfaces

Analysis and Signoff for Restructuring

For the devices we build today, design and implementation are unavoidably entangled. Design for low-power, test, reuse and optimized layout are no longer possible without taking implementation factors into account in design, and vice-versa. But design teams can’t afford to iterate indefinitely between these phases, so they increasingly adapt design to implementation either by fiat (design components and architecture are constructed to serve a range of implementation needs and implementation must work within those constraints) or through restructuring where design hierarchy is adjusted in a bridging step to best meet the needs of power, test, layout and other factors.

This raises an obvious question in the latter case – “OK, I’m willing to restructure my RTL, but how do I decide what will be a better structure?” This requires analysis and metrics but it’s not easy to standardize automation in this domain. SoC architectures and use-cases vary sufficiently that any canned approach which might work well on one family of SoCs would probably not work well on another. But what is possible is to provide a Tcl-based analytics platform, with some supporting capabilities, on which a design team can quickly build their own custom analytics and metrics.

A side note here to head off possible confusion. Many of you can probably construct ways this analysis could be scripted in synthesis or a layout tool. But if this has to be done in RTL chip assembly with a minimal learning curve, and the restructuring changes have to be reflected back in the assembly RTL for functional verification, and if you’re also going to use a tool to do the restructuring, then why not use the same platform to do the analysis? This is what DeFacto provides in the checking capabilities they include in their STAR platform.

This always centers around some form of structural analysis but generally with more targeted (and more implementation-centric) objectives than in global hookup verification. One objective may be to minimize inter-block wires to limit top-level routing. Or you may want to look at clock trees or reset trees to understand muxing and gating implications versus partitioning. And you want to be certain you know which clock is which by having the analysis do some (simulation-free) logic state probing to see how those signals propagate. This logic-state probing is also useful in looking at how configuration signals affect these trees. Again, you will script your analysis on top of these primitives as part of inferring a partitioning strategy.
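To give a feel for the kind of metric you might script for the inter-block wire objective, here is a minimal sketch in Python. The STAR platform itself is Tcl-based and none of the names below come from it; the netlist, instance names and partition options are hypothetical. The sketch counts how many nets would have to cross a top-level block boundary under a candidate partition.

```python
def count_cut_nets(nets, partition):
    """Count nets whose pins land in more than one top-level block.

    nets: dict mapping net name -> list of instance names on that net
    partition: dict mapping instance name -> block name
    """
    cut = 0
    for instances in nets.values():
        blocks = {partition[inst] for inst in instances}
        if len(blocks) > 1:
            cut += 1
    return cut


# Hypothetical connectivity between five instances.
nets = {
    "n1": ["cpu", "cache"],
    "n2": ["cache", "ddr_ctrl"],
    "n3": ["cpu", "uart"],
    "n4": ["uart", "gpio"],
}

# Two candidate groupings of the same instances into two blocks.
option_a = {"cpu": "blk0", "cache": "blk0",
            "ddr_ctrl": "blk1", "uart": "blk1", "gpio": "blk1"}
option_b = {"cpu": "blk0", "uart": "blk0", "gpio": "blk0",
            "cache": "blk1", "ddr_ctrl": "blk1"}

print(count_cut_nets(nets, option_a))  # nets crossing the boundary in option A
print(count_cut_nets(nets, option_b))  # nets crossing the boundary in option B
```

In this toy case option B needs fewer top-level wires than option A. A real analysis would weigh that against clock, reset, power and test considerations rather than wire count alone.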

Another driver of course is partitioning for low power. I mentioned in my last blog the potential advantages of merging common power domains which are logically separate in the hierarchy. Another interesting consideration is in daisy-chaining power switch enables to mitigate inrush current when these switches turn on. How you should best sequence these will depend in part on floorplan and that depends on partitioning, hence the need to analyze and plan your options. In general, since repartitioning may impact the design UPF, you will want to factor that impact into your partition choices.

Similar concerns apply to test. I talked previously about partitioning to optimize MBIST controller usage. Naturally a similar consideration relates to scan-chain partitioning (collisions between test and power/layout partitioning assumptions don’t help shift-left objectives). There are sometimes considerations around partitioning memories. Could a big memory be split into smaller memories, possibly simplifying the MBIST strategy? This by the way is a great example of why canned solutions are not easy to build; this is a question requiring architecture, applications and design input.

Finally, the tool provides support for an area I think is still at an early stage in adoption but is quite interesting – complexity metrics. This idea started in software, particularly in the McCabe cyclomatic complexity metric, as a way to quantify testability of a piece of software. Similar ideas have been floated from time to time in an attempt to relate RTL complexity to routability (think of a possible next level beyond Rent’s rule). You might also imagine there being possible connections to safety and possibly security. DeFacto have wisely chosen not to dictate a direction here but rather provide extraction of a range of metrics on which you can build your own experiments. One customer they mentioned has been actively using this approach to drive partitioning for synthesis and physical design.
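For readers who have not met the McCabe metric, it is computed from a control-flow graph as M = E - N + 2P, where E is the number of edges, N the number of nodes and P the number of connected components. The Python sketch below applies that formula to a small hand-built graph. It illustrates the software metric only; how DeFacto extracts RTL complexity metrics is not described here, and the example graph is made up.

```python
def cyclomatic_complexity(edges, components=1):
    """McCabe's metric M = E - N + 2P for a control-flow graph.

    edges: list of (source, destination) node-name pairs
    components: number of connected components (1 for a single routine)
    """
    nodes = {n for edge in edges for n in edge}
    return len(edges) - len(nodes) + 2 * components


# Control flow of: if (a) {...} else {...}; while (b) {...}
cfg = [
    ("entry", "if"),
    ("if", "then"),
    ("if", "else"),
    ("then", "while"),
    ("else", "while"),
    ("while", "body"),
    ("body", "while"),
    ("while", "exit"),
]

print(cyclomatic_complexity(cfg))  # 8 edges - 7 nodes + 2 = 3
```

The result matches the usual shortcut of counting decision points and adding one: one `if` plus one `while` gives 3.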

In all these cases, the goal is to be able to iterate quickly through analysis of options and subsequently through restructuring experiments. The STAR platform provides the foundation for you to build your own analytics and metrics highlighting your primary concerns in power, test and layout, to translate those into a new structure and then to validate through those same analytics and metrics that you met your objective. You can learn more about STAR-Checker HERE.

Customizable Analog IP No Longer a Pipe Dream

Configurable analog IP has traditionally been a tough nut to crack. Digital IP, of course, now provides for wide configurability for varying applications. In the same way that analog design has remained less deterministic as compared to digital design, analog IP has also tended to be less flexible. However, the tide may be turning for configurable analog IP. The Brazilian analog IP company Chipus has been working with MunEDA tools to bring this about.

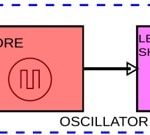

Of course, it all starts with a robust design where forethought has been given to ensuring a wide operating range. For Chipus this is the basis of their CM4018hlf flexible ring oscillator. This circuit is tunable to frequencies between 200kHz and 20MHz – quite a large range. Chipus was founded in 2009 and now offers over 180 IP blocks, many of them silicon proven and in production. They are using MunEDA’s WiCkeD to design across this wide range of frequencies and then offer models for any desired operating point on their web portal. The alternative of manually tuning and then optimizing the design for a wide range of frequencies would be prohibitive.

To help us better understand the behind the scenes work that was done to enable this wide range of configuration, the folks at Chipus put together a demonstration design using a similar set up. I had a chance to look over their write up on this and found it quite interesting.

For their example the ring oscillator running at 170kHz is designed on SilTerra C18G, a 0.18um CMOS process. The circuit consists of a current reference, an oscillating core and a level shifter. There are about 60 transistors in the circuit, including the current reference and trimming. This size design typically takes 2 or 3 days of work to adapt to a given specification. There are a number of knobs in the design that offer the necessary flexibility, but they also offer a potentially complicated design space – especially after variation and environmental factors are accounted for.

Chipus in one of their examples chose 4.5MHz as their target speed. They used WiCkeD’s deterministic optimization capabilities to modify the fval for the design. It took several iterations. Part of the process involved managing the temperature behavior because the bias current affects the operating frequency. Once the frequency was dialed in, they proceeded to optimize current (Itot). The WiCkeD software can optimize to the lowest current value that does not affect the ability to meet the other specifications for the design. The images below show the various steps of each optimization phase.

Where this example moved away from conventional usage of their tool is with the addition of Python scripts to allow recursive iteration that used previous optimization results as the starting point for new versions running at different frequencies. An added feature of comprehensively covering the design space over a range of frequencies is that a Pareto curve can be produced showing possible operating points.
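The two ideas in that paragraph, seeding each optimization with the previous result and then extracting a Pareto curve, can be sketched in a few lines of Python. This is not the Chipus script and does not call WiCkeD: `optimize` is a hypothetical stand-in for whatever runs the real optimizer, the choice of frequency versus current as the two axes is my assumption, and the numbers are invented purely to exercise the code.

```python
def sweep(targets, optimize, initial):
    """Optimize for each target in turn, seeding each run with the previous result."""
    results = []
    start = initial
    for target in targets:
        start = optimize(target, start)   # previous optimum is the next starting point
        results.append((target, start))
    return results


def pareto_front(points):
    """Keep (frequency, current) points not dominated by any other point.

    A point is dominated when another point has frequency at least as high
    and current at least as low, and is strictly better in one of the two.
    """
    front = []
    for f, i in points:
        dominated = any(
            (f2 >= f and i2 <= i) and (f2 > f or i2 < i)
            for f2, i2 in points
        )
        if not dominated:
            front.append((f, i))
    return sorted(front)


# Stand-in optimizer that only shows how results chain from run to run.
chained = sweep([1, 2, 3], lambda target, start: start + target, 0)
print(chained)

# Invented (frequency in MHz, current in uA) pairs, not measured data.
candidates = [(1.0, 5.0), (2.0, 6.0), (2.0, 7.0), (1.5, 8.0), (3.0, 9.0)]
print(pareto_front(candidates))
```

Starting each run from a neighbouring solution is a common way to cut iteration counts when targets change gradually, which fits a sweep across frequencies.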

Chipus ran the algorithm to create a family of 7 oscillators that run from 140kHz to 9MHz. This was accomplished in approximately 2 days using a typical desktop workstation and 4 Spectre licenses. The Python code was not necessarily design specific, so applying this technique to other designs should be straightforward. This shows the power of combining advanced analog optimization tools and scripting to create some very impressive results. It looks like the day of rapid customization of analog IP is closer than it might have at first seemed. For a complete write up on the utilization of WiCkeD, I suggest looking at the MunEDA website.

There is also a tutorial about low power circuit optimization for IoT from the 30th IEEE System-on-Chip Conference 2017 that is close to this topic, featuring Michael Pronath of MunEDA:

Abstract:

Designing circuits for enhanced IoT (Internet of Things) applications is one of the current growth drivers for the electronics industry. Optimizing such circuits for lowest power consumption while maximizing functionality and performance is key for successful implementation of such circuits in IoT systems. IoT devices are diverse in nature but are typically constrained by limited power availability, limited area budget and the need for modularity of design. The burden of an ultra-low-power budget unfortunately doesn’t necessarily mean that other performance requirements are relaxed. The tutorial is therefore geared towards designers of IoT devices including sensors, MEMS, mobile devices, medical sensors, wireless communication devices, near field communication devices and energy harvesting designs. It will focus on how automated circuit sizing and tuning methodologies can be used to enhance existing design expertise to reduce power consumption while trading off against other circuit performances. Additionally it will be shown how features like circuit sensitivity analysis can be used for confirming design hypotheses. Using such a verification and optimization environment can help systematically and fully explore a design’s operating, design and statistical design space.

Bluetooth 5 IP is Ready for SoC Integration

Bluetooth®, WiFi, LTE, and 5G technologies enable wireless connectivity for a range of applications. While each offers unique features and advantages, designers now need to decide which protocol to integrate in a single chip after having tested the market by using wireless off-chip solutions. Bluetooth 5 builds upon the success of Bluetooth-enabled audio, wearable, and other small portable devices. Bluetooth 5 expands into smart home applications, extends beacon capabilities, and opens the door to many other new and feature-rich applications requiring wireless technology. The adoption of Bluetooth by the mobile phone has positioned it as a leading candidate to solve interoperability hurdles and the use of Bluetooth has grown beyond traditional applications and into audio, wearable, and other small portable device and toy applications. The benefits of wireless integration into a single SoC for Internet of Things (IoT) applications in terms of power, performance and cost are becoming evident, especially as designs move to more aggressive process nodes like 55nm and 40nm.

There are many wireless standards, including ZigBee, WirelessHART, Z-Wave, Wi-SUN and more, that have served niche applications such as smart homes, remote controls, building automation, and metering, but the industry is having difficulty achieving interoperability between Internet of Things devices across the fragmented set of standards and options available. In 2016, the Bluetooth SIG addressed the key requirements of simple and secure wireless connectivity by introducing Bluetooth 5, which “quadruples range, doubles speed, increases data broadcasting capacity by 800%” according to the June 2016 Bluetooth SIG press release. The evolution of Bluetooth to Bluetooth 5 continues to build momentum and “will deliver robust, reliable Internet of Things (IoT) connections” that make wearables and now smart homes a reality.

In fact, the increased operating range offered by Bluetooth 5 will enable connections to IoT devices that extend far beyond the walls of a typical home, while the doubled speed supports faster data transfers and software updates for devices. The Bluetooth 5 specification also defines key features like adaptive frequency hopping, to pave the way for operation in the densified wireless installations anticipated with the development of future IoT solutions, including 5G.

Some of the key features of Bluetooth 5 are listed below:

- Data rates from 1Mbps to 2Mbps with more flexible methods to optimize power consumption

- Longer range via larger link budget and supporting up to 20 decibel-milliwatts (dBm) where local law allows

- Higher permission-based advertising transmission to deliver Bluetooth messages to Bluetooth-enabled devices, especially beacons

- Adaptive Frequency Hopping (AFH) based on channel selection algorithm to improve connectivity performance in environments where other wireless technologies are in use

- Limited high-duty-cycle non-connectable advertising, using intervals of less than 100ms for limited periods of time, to improve user experience and battery life through faster reconnection

- Slot availability masks to detect and prevent other wireless band interferences
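Two of the numbers in the list above are easy to put in more familiar terms. The short Python sketch below converts a dBm figure to milliwatts using the standard definition P(mW) = 10^(dBm/10), and estimates an idealized transfer time at the 1Mbps and 2Mbps rates. The 250 kB payload is a hypothetical firmware image size chosen for illustration, and the timing ignores all protocol overhead, so real transfers will take longer.

```python
def dbm_to_milliwatts(dbm):
    """Convert a power level in dBm to milliwatts: P = 10 ** (dBm / 10)."""
    return 10 ** (dbm / 10)


def transfer_seconds(payload_bytes, rate_mbps):
    """Ideal time to move a payload at a raw PHY rate, ignoring protocol overhead."""
    return payload_bytes * 8 / (rate_mbps * 1_000_000)


print(dbm_to_milliwatts(20))          # 20 dBm expressed in mW
print(transfer_seconds(250_000, 1))   # hypothetical 250 kB update at 1Mbps
print(transfer_seconds(250_000, 2))   # same payload at 2Mbps
```

So the 20 dBm ceiling corresponds to 100 mW of transmit power, and doubling the raw data rate halves the ideal transfer time for the same payload.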

In the past, Bluetooth has been implemented in systems via combo chipsets that include WiFi and other wireless technologies. As a result, a vast number of implementations are dual mode (low energy and classic) combo wireless chipsets, but solutions only supporting the Bluetooth low energy specification are rapidly moving to be fully integrated into a single monolithic system, or SoC. This approach brings advantages in power consumption, process node alignment and performance. We can see that MCUs are now adopting Bluetooth low energy IP into their chipsets. There is a clear trend that Bluetooth low energy will continue to penetrate MCU solutions as a de facto standard feature, and process nodes such as ultra-low power 55-nm and 40-nm processes play a critical role in the ability to integrate Bluetooth.

Last but not least, the DesignWare Bluetooth Low Energy IP is qualified by the Bluetooth SIG and has gone through a rigorous validation process from a complete design verification flow to full characterization of power, voltage, temperature (PVT) corners and interoperability with the ecosystem.

You can get more information in this article from Ron Lowman:

https://www.synopsys.com/designware-ip/technical-bulletin/bluetooth5-dwtb-q217.html

Eric Esteve from IPNEST