Over the past several years, the number of devices connected via the Internet of Things (IoT) has grown exponentially, and that number is expected to keep growing. By 2020, 50 billion connected devices are predicted to exist, thanks to the many new smart devices that have become standard tools for people and businesses to manage their daily tasks.

Smart connected devices boost customer engagement, increase visibility, and streamline communications, especially with new human-machine interfaces like the Voice User Interface (VUI). VUI is the favorite interface for new digital assistants like HomePod, Alexa and Google Assistant for a good reason: 80 percent of our daily communication is conducted via speech.

In the future, IoT will continue to advance at an extraordinarily rapid pace, with remarkable growth in many directions. The ultimate goal is to have a smart and completely secure IoT system; however, many obstacles will need to be overcome before that goal can become a reality.

IoT and Blockchain convergence

The current centralized architecture of IoT is one of the main reasons for the vulnerability of IoT networks. With billions of devices connected and more to be added, IoT is a big target for cyber attacks, which makes security extremely important.

Blockchain offers new hope for IoT security for several reasons. First, blockchain is public: everyone participating in the network of nodes can see and approve the blocks and the transactions stored in them, although users can still hold private keys to control their transactions. Second, blockchain is decentralized, so there is no single authority that approves transactions, which eliminates the Single Point of Failure (SPOF) weakness. Third, and most importantly, it is secure: the database can only be extended, and previous records cannot be changed.
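The "extend only, never change" property can be illustrated with a small hash chain. This is a minimal sketch, not a real blockchain: it has no network of nodes, no consensus and no private keys, and the device name and readings are hypothetical. It only shows why altering an earlier record becomes detectable once each block stores the hash of the block before it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, payload):
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "prev_hash": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Extend the chain with a new block; existing blocks are not touched."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    expected_prev = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(block["index"], block["prev_hash"], block["payload"])
        if block["hash"] != recomputed:
            return False
        expected_prev = block["hash"]
    return True


# Hypothetical sensor records, used only for illustration.
ledger = []
append_block(ledger, {"device": "thermostat-1", "reading": 21.5})
append_block(ledger, {"device": "thermostat-1", "reading": 21.7})
```

Calling `is_valid(ledger)` succeeds for the untouched chain. If an earlier reading is edited afterwards, the stored hash no longer matches the recomputed one and the check fails, which is the tamper evidence the paragraph above describes.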

In the coming years, manufacturers will recognize the benefits of having blockchain technology embedded in all devices and compete for labels like “Blockchain Certified.”

IoT investments on the rise

IoT’s indisputable impact has lured, and will continue to lure, more startup venture capitalists towards highly innovative projects in hardware, software and services. Spending on IoT will hit $1.4 trillion by 2021, according to the International Data Corporation (IDC).

IoT is one of the few markets that interests both emerging and traditional venture capitalists. The spread of smart devices, and customers' increasing dependence on them for many daily tasks, will add to the excitement of investing in IoT startups. Customers will be waiting for the next big innovation in IoT, such as smart mirrors that analyze your face and call your doctor if you look sick, smart ATMs that incorporate smart security cameras, smart forks that tell you how and what to eat, and smart beds that turn off the lights when everyone is asleep.

Fog computing & IoT

Fog computing is a technology that distributes the processing load and moves it closer to the edge of the network (the sensors, in the case of IoT). The benefits of fog computing are very attractive to IoT solution providers: it lets users minimize latency, conserve network bandwidth, operate reliably with quick decisions, collect and secure a wide range of data, and move data to the best place for processing, with better analysis of and insight into local data. Microsoft just announced a $5 billion investment in IoT, including fog/edge computing.
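As a rough illustration of moving processing toward the edge, the sketch below reduces a window of raw sensor readings to a small summary on the edge node, so that only the summary (plus any readings that need an immediate decision) would travel upstream. The function name, threshold and readings are assumptions made for this example, not part of any fog computing product or API.

```python
from statistics import mean


def summarize_at_edge(readings, alert_threshold):
    """Reduce a window of raw readings to a compact summary on the edge node."""
    if not readings:
        raise ValueError("readings must contain at least one value")
    # Readings above the threshold are kept so a local decision can be made
    # immediately, without waiting for a round trip to a central server.
    alerts = [r for r in readings if r > alert_threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical temperature window collected by one sensor.
window = [20.0, 22.0, 21.0, 45.0, 21.0]
summary = summarize_at_edge(window, alert_threshold=40.0)
```

Here five raw values shrink to one small record, which is where the bandwidth saving comes from, and the single out-of-range reading is available locally for a quick decision.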

AI & IoT will work closely

AI will help IoT data analysis in the following areas: data preparation, data discovery, visualization of streaming data, time series accuracy of data, predictive and advanced analytics, and real-time geospatial and location (logistical data). Each area is outlined below.

Data Preparation: Defining pools of data and cleaning them, which leads to concepts like dark data and data lakes.

Data Discovery: Finding useful data in defined pools of data.

Visualization of Streaming Data: Handling streaming data on the fly by defining, discovering, and visualizing it in smart ways, so that decisions can be made without delay.

Time Series Accuracy of Data: Keeping confidence in the collected data high by maintaining its accuracy and integrity.

Predictive and Advanced Analytics: Making decisions based on data collected, discovered and analyzed.

Real-Time Geospatial and Location (Logistical Data): Keeping the flow of data smooth and under control.
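The first two areas, data preparation and data discovery, can be sketched in a few lines. This example is only a rule-based stand-in under stated assumptions, not a machine learning model: the function names, the valid range and the tolerance are all hypothetical. It cleans a list of readings and then flags values that deviate sharply from the readings just before them.

```python
def prepare(readings, low, high):
    """Data preparation: drop missing values and values outside a plausible range."""
    return [r for r in readings if r is not None and low <= r <= high]


def discover_outliers(values, window, tolerance):
    """Data discovery: flag values far from the mean of the preceding window."""
    flagged = []
    for i in range(window, len(values)):
        baseline = sum(values[i - window:i]) / window
        if abs(values[i] - baseline) > tolerance:
            flagged.append((i, values[i]))
    return flagged


# Hypothetical raw readings: one missing value and one impossible value.
raw = [21.0, None, 21.5, 999.0, 21.2, 21.4, 30.0, 21.3]
clean = prepare(raw, low=-40.0, high=85.0)
spikes = discover_outliers(clean, window=3, tolerance=5.0)
```

After cleaning, six readings remain, and the jump to 30.0 is the only value flagged as worth a closer look. A production system would replace the fixed tolerance with a learned model, but the two stages stay the same.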

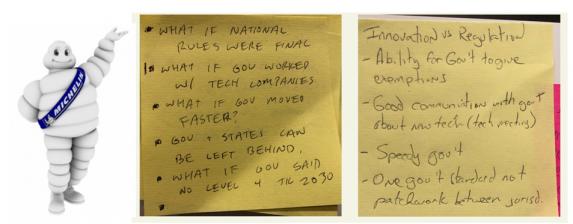

Standardization battle will continue

Standardization is one of the biggest challenges facing the growth of IoT. It is a battle among industry leaders who would like to dominate the market at an early stage. Digital assistant devices, including HomePod, Alexa, and Google Assistant, are the future hubs for the next phase of smart devices, and companies are trying to establish “their hubs” with consumers so that adding more devices is easy and free of frustration.

But what we have now is a case of fragmentation, without a strong push by organizations like IEEE or government regulations to have common standards for IoT devices.

One possible solution is to have a limited number of devices dominating the market, allowing customers to select one and stick to it for any additional connected devices. This is similar to the situation we now have with operating systems such as Windows, Mac and Linux, where there are no cross-platform standards.

To understand the difficulty of standardization, we need to deal with all three categories in the standardization process: platform, connectivity, and applications. Platform deals with UX/UI and analytic tools; connectivity deals with customers' contact points with devices; and applications are the software that controls devices and collects and analyzes data.

All three categories are interrelated and we need them all; missing one will break the model and stall the standardization process.

IoT skills shortage

The need for more IoT-skilled staff is rising, including a growing need for those with AI, big data analytics and blockchain skills.

Universities cannot keep up with the demand, so to deal with this shortage, companies have established internal training programs to build their own teams, upgrading the skills of their existing engineers and training new talent. This trend will continue, representing an opportunity for new engineers and a challenge for companies.

The original article was published in R&D Magazine: https://www.rdmag.com/article/2018/05/looking-ahead-whats-next-iot

Ahmed Banafa was named No. 1 Top Voice To Follow in Tech by LinkedIn in 2016.